“Deferred neural rendering: image synthesis using neural textures” by Thies, Zollhöfer and Niessner

Conference:

Type(s):

Title:

- Deferred neural rendering: image synthesis using neural textures

Session/Category Title:

- Neural Rendering

Presenter(s)/Author(s):

Abstract:

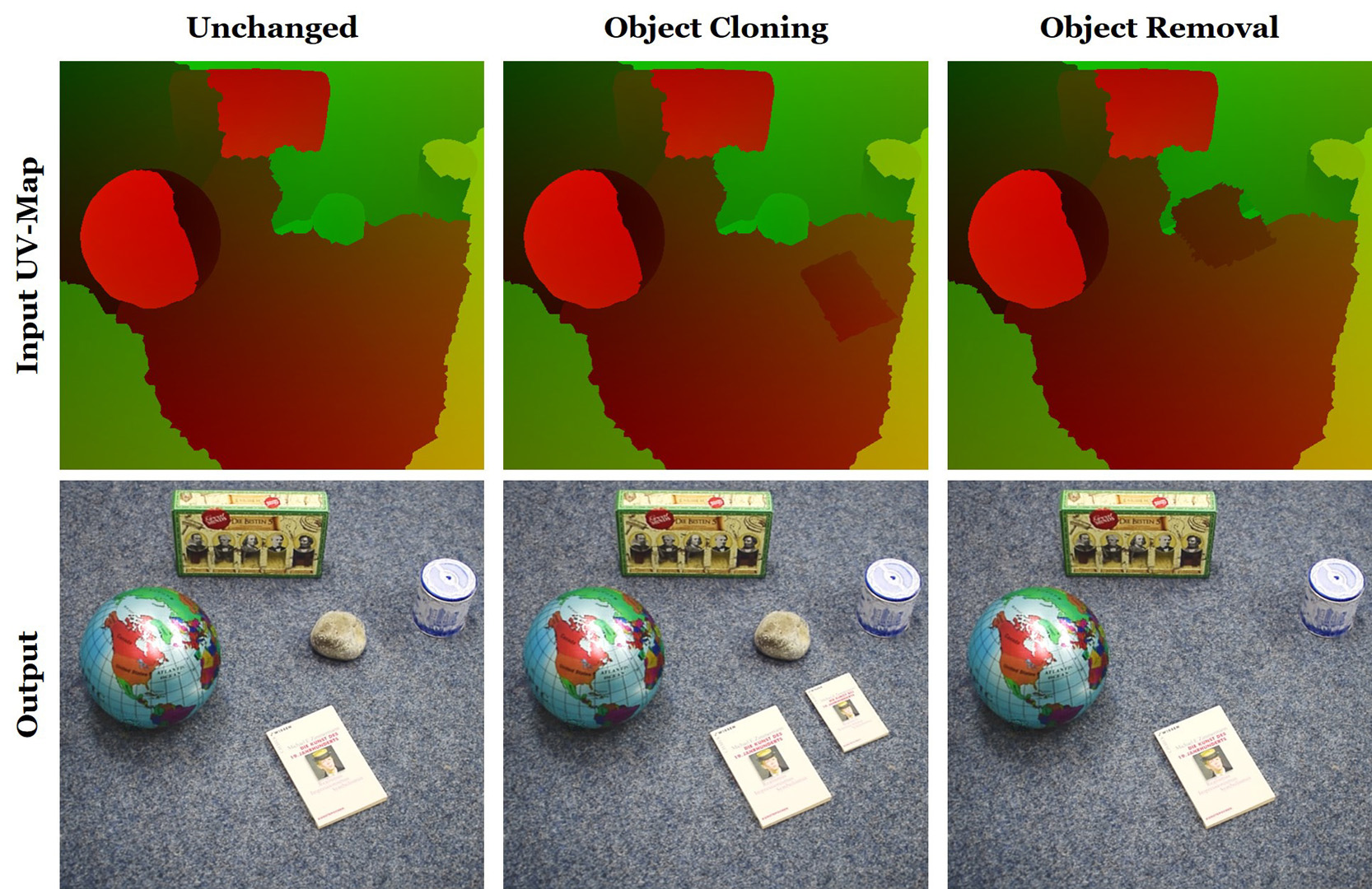

The modern computer graphics pipeline can synthesize images at remarkable visual quality; however, it requires well-defined, high-quality 3D content as input. In this work, we explore the use of imperfect 3D content, for instance, obtained from photo-metric reconstructions with noisy and incomplete surface geometry, while still aiming to produce photo-realistic (re-)renderings. To address this challenging problem, we introduce Deferred Neural Rendering, a new paradigm for image synthesis that combines the traditional graphics pipeline with learnable components. Specifically, we propose Neural Textures, which are learned feature maps that are trained as part of the scene capture process. Similar to traditional textures, neural textures are stored as maps on top of 3D mesh proxies; however, the high-dimensional feature maps contain significantly more information, which can be interpreted by our new deferred neural rendering pipeline. Both neural textures and deferred neural renderer are trained end-to-end, enabling us to synthesize photo-realistic images even when the original 3D content was imperfect. In contrast to traditional, black-box 2D generative neural networks, our 3D representation gives us explicit control over the generated output, and allows for a wide range of application domains. For instance, we can synthesize temporally-consistent video re-renderings of recorded 3D scenes as our representation is inherently embedded in 3D space. This way, neural textures can be utilized to coherently re-render or manipulate existing video content in both static and dynamic environments at real-time rates. We show the effectiveness of our approach in several experiments on novel view synthesis, scene editing, and facial reenactment, and compare to state-of-the-art approaches that leverage the standard graphics pipeline as well as conventional generative neural networks.

References:

1. Chris Buehler, Michael Bosse, Leonard McMillan, Steven Gortler, and Michael Cohen. 2001. Unstructured Lumigraph Rendering. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’01). ACM, New York, NY, USA, 425–432. Google ScholarDigital Library

2. Joel Carranza, Christian Theobalt, Marcus A. Magnor, and Hans-Peter Seidel. 2003. Free-viewpoint Video of Human Actors. ACM Trans. Graph. (Proc. SIGGRAPH) 22, 3 (July 2003), 569–577. Google ScholarDigital Library

3. Dan Casas, Christian Richardt, John P. Collomosse, Christian Theobalt, and Adrian Hilton. 2015. 4D Model Flow: Precomputed Appearance Alignment for Real-time 4D Video Interpolation. Comput. Graph. Forum 34, 7 (2015), 173–182. Google ScholarDigital Library

4. Gaurav Chaurasia, Sylvain Duchene, Olga Sorkine-Hornung, and George Drettakis. 2013. Depth Synthesis and Local Warps for Plausible Image-based Navigation. ACM Trans. Graph. 32, 3, Article 30 (July 2013), 12 pages. Google ScholarDigital Library

5. Anpei Chen, Minye Wu, Yingliang Zhang, Nianyi Li, Jie Lu, Shenghua Gao, and Jingyi Yu. 2018. Deep Surface Light Fields. Proc. ACM Comput. Graph. Interact. Tech. 1, 1, Article 14 (July 2018), 17 pages. Google ScholarDigital Library

6. Jiawen Chen, Dennis Bautembach, and Shahram Izadi. 2013. Scalable real-time volumetric surface reconstruction. ACM Transactions on Graphics (ToG) 32, 4 (2013), 113. Google ScholarDigital Library

7. Wei-Chao Chen, Jean-Yves Bouguet, Michael H. Chu, and Radek Grzeszczuk. 2002. Light Field Mapping: Efficient Representation and Hardware Rendering of Surface Light Fields. In Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’02). ACM, New York, NY, USA, 447–456. Google ScholarDigital Library

8. Sungjoon Choi, Qian-Yi Zhou, and Vladlen Koltun. 2015. Robust reconstruction of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5556–5565.Google Scholar

9. Taco S Cohen and Max Welling. 2014. Transformation properties of learned visual representations. arXiv preprint arXiv:1412.7659 (2014).Google Scholar

10. Davide Cozzolino, Justus Thies, Andreas Rössler, Christian Riess, Matthias Nießner, and Luisa Verdoliva. 2018. ForensicTransfer: Weakly-supervised Domain Adaptation for Forgery Detection. arXiv (2018).Google Scholar

11. Angela Dai, Matthias Nießner, Michael Zollhöfer, Shahram Izadi, and Christian Theobalt. 2017. Bundlefusion: Real-time globally consistent 3d reconstruction using on-the-fly surface reintegration. ACM Transactions on Graphics (TOG) 36, 4 (2017), 76a. Google ScholarDigital Library

12. Paul Debevec, Yizhou Yu, and George Boshokov. 1998. Efficient View-Dependent IBR with Projective Texture-Mapping. EG Rendering Workshop.Google ScholarDigital Library

13. Mingsong Dou, Sameh Khamis, Yury Degtyarev, Philip Davidson, Sean Ryan Fanello, Adarsh Kowdle, Sergio Orts Escolano, Christoph Rhemann, David Kim, Jonathan Taylor, and others. 2016. Fusion4d: Real-time performance capture of challenging scenes. ACM Transactions on Graphics (TOG) 35, 4 (2016), 114. Google ScholarDigital Library

14. Ruofei Du, Ming Chuang, Wayne Chang, Hugues Hoppe, and Amitabh Varshney. 2018. Montage4D: Interactive Seamless Fusion of Multiview Video Textures. In Proceedings of the ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D ’18). ACM, New York, NY, USA, Article 5, 11 pages. Google ScholarDigital Library

15. M. Eisemann, B. De Decker, M. Magnor, P. Bekaert, E. De Aguiar, N. Ahmed, C. Theobalt, and A. Sellent. 2008. Floating Textures. Computer Graphics Forum (Proc. EUROGRAHICS (2008).Google Scholar

16. SM Ali Eslami, Danilo Jimenez Rezende, Frederic Besse, Fabio Viola, Ari S Morcos, Marta Garnelo, Avraham Ruderman, Andrei A Rusu, Ivo Danihelka, Karol Gregor, and others. 2018. Neural scene representation and rendering. Science 360, 6394 (2018), 1204–1210.Google Scholar

17. Luca Falorsi, Pim de Haan, Tim R Davidson, Nicola De Cao, Maurice Weiler, Patrick Forre, and Taco S Cohen. 2018. Explorations in Homeomorphic Variational Auto-Encoding. ICML Workshop on Theoretical Foundations and Applications of Generative Models (2018).Google Scholar

18. John Flynn, Ivan Neulander, James Philbin, and Noah Snavely. 2016. Deepstereo: Learning to predict new views from the world’s imagery. In Proc. CVPR. 5515–5524.Google Scholar

19. Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. In Proc. NIPS. 2672–2680. Google ScholarDigital Library

20. Steven J. Gortler, Radek Grzeszczuk, Richard Szeliski, and Michael F. Cohen. 1996. The Lumigraph. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’96). ACM, New York, NY, USA, 43–54. Google ScholarDigital Library

21. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel Brostow. 2018. Deep blending for free-viewpoint image-based rendering. In SIGGRAPH Asia 2018 Technical Papers. ACM, 257. Google ScholarDigital Library

22. Peter Hedman, Tobias Ritschel, George Drettakis, and Gabriel Brostow. 2016. Scalable inside-out image-based rendering. ACM Transactions on Graphics (TOG) 35, 6 (2016), 231. Google ScholarDigital Library

23. Benno Heigl, Reinhard Koch, Marc Pollefeys, Joachim Denzler, and Luc J. Van Gool. 1999. Plenoptic Modeling and Rendering from Image Sequences Taken by Hand-Held Camera. In Proc. DAGM. 94–101. Google ScholarDigital Library

24. Geoffrey E. Hinton and Ruslan Salakhutdinov. 2006. Reducing the Dimensionality of Data with Neural Networks. Science 313, 5786 (July 2006), 504–507.Google ScholarCross Ref

25. Jingwei Huang, Angela Dai, Leonidas Guibas, and Matthias Nießner. 2017. 3DLite: Towards Commodity 3D Scanning for Content Creation. ACM Transactions on Graphics 2017 (TOG) (2017). Google ScholarDigital Library

26. Matthias Innmann, Michael Zollhöfer, Matthias Nießner, Christian Theobalt, and Marc Stamminger. 2016. VolumeDeform: Real-time volumetric non-rigid reconstruction. In European Conference on Computer Vision. Springer, 362–379.Google ScholarCross Ref

27. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2016. Image-to-Image Translation with Conditional Adversarial Networks. arxiv (2016).Google Scholar

28. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In Proc. CVPR. 5967–5976.Google Scholar

29. Shahram Izadi, David Kim, Otmar Hilliges, David Molyneaux, Richard Newcombe, Pushmeet Kohli, Jamie Shotton, Steve Hodges, Dustin Freeman, Andrew Davison, and others. 2011. KinectFusion:real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th annual ACM symposium on User interface software and technology. ACM, 559–568. Google ScholarDigital Library

30. Nima Khademi Kalantari, Ting-Chun Wang, and Ravi Ramamoorthi. 2016. Learning-Based View Synthesis for Light Field Cameras. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2016) 35, 6 (2016). Google ScholarDigital Library

31. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In Proc. ICLR.Google Scholar

32. H. Kim, P. Garrido, A. Tewari, W. Xu, J. Thies, N. Nießner, P. Pérez, C. Richardt, M. Zollhöfer, and C. Theobalt. 2018. Deep Video Portraits. ACM Transactions on Graphics 2018 (TOG) (2018). Google ScholarDigital Library

33. Diederik P. Kingma and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. CoRR abs/1412.6980 (2014). http://arxiv.org/abs/1412.6980Google Scholar

34. Diederik P. Kingma and Max Welling. 2013. Auto-Encoding Variational Bayes. CoRR abs/1312.6114 (2013).Google Scholar

35. Tejas D Kulkarni, William F. Whitney, Pushmeet Kohli, and Josh Tenenbaum. 2015. Deep Convolutional Inverse Graphics Network. In Proc. NIPS. 2539–2547. Google ScholarDigital Library

36. Marc Levoy and Pat Hanrahan. 1996. Light Field Rendering. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’96). ACM, New York, NY, USA, 31–42. Google ScholarDigital Library

37. Tzu-Mao Li, Michaël Gharbi, Andrew Adams, Frédo Durand, and Jonathan Ragan-Kelley. 2018. Differentiable programming for image processing and deep learning in halide. ACM Transactions on Graphics (TOG) 37, 4 (2018), 139. Google ScholarDigital Library

38. Marcus Magnor and Bernd Girod. 1999. Adaptive Block-based Light Field Coding. In Proc. 3rd International Workshop on Synthetic and Natural Hybrid Coding and Three-Dimensional Imaging IWSNHC3DI’99, Santorini, Greece. 140–143.Google Scholar

39. Ehsan Miandji, Joel Kronander, and Jonas Unger. 2013. Learning Based Compression for Real-time Rendering of Surface Light Fields. In ACM SIGGRAPH 2013 Posters (SIGGRAPH ’13). ACM, New York, NY, USA, Article 44, 1 pages. Google ScholarDigital Library

40. Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. (2014). https://arxiv.org/abs/1411.1784 arXiv:1411.1784.Google Scholar

41. Koki Nagano, Jaewoo Seo, Jun Xing, Lingyu Wei, Zimo Li, Shunsuke Saito, Aviral Agarwal, Jens Fursund, and Hao Li. 2018. paGAN: real-time avatars using dynamic textures. 1–12. Google ScholarDigital Library

42. Richard A Newcombe, Dieter Fox, and Steven M Seitz. 2015. Dynamicfusion: Reconstruction and tracking of non-rigid scenes in real-time. In Proceedings of the IEEE conference on computer vision and pattern recognition. 343–352.Google ScholarCross Ref

43. Richard A Newcombe, Shahram Izadi, Otmar Hilliges, David Molyneaux, David Kim, Andrew J Davison, Pushmeet Kohi, Jamie Shotton, Steve Hodges, and Andrew Fitzgibbon. 2011. KinectFusion: Real-time dense surface mapping and tracking. In Mixed and augmented reality (ISMAR), 2011 10th IEEE international symposium on. IEEE, 127–136. Google ScholarDigital Library

44. Matthias Nießner, Michael Zollhöfer, Shahram Izadi, and Marc Stamminger. 2013. Realtime 3D reconstruction at scale using voxel hashing. ACM Transactions on Graphics (ToG) 32, 6 (2013), 169. Google ScholarDigital Library

45. Aäron van den Oord, Nal Kalchbrenner, Oriol Vinyals, Lasse Espeholt, Alex Graves, and Koray Kavukcuoglu. 2016. Conditional Image Generation with PixelCNN Decoders. In Proc. NIPS. 4797–4805. Google ScholarDigital Library

46. Eunbyung Park, Jimei Yang, Ersin Yumer, Duygu Ceylan, and Alexander C. Berg. 2017. Transformation-Grounded Image Generation Network for Novel 3D View Synthesis. CoRR abs/1703.02921 (2017).Google Scholar

47. Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. 2017. Automatic differentiation in PyTorch. (2017).Google Scholar

48. Eric Penner and Li Zhang. 2017. Soft 3D Reconstruction for View Synthesis. ACM Trans. Graph. 36, 6, Article 235 (Nov. 2017), 11 pages. Google ScholarDigital Library

49. Alec Radford, Luke Metz, and Soumith Chintala. 2016. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In Proc. ICLR.Google Scholar

50. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proc. MICCAI. 234–241.Google ScholarCross Ref

51. Andreas Rössler, Davide Cozzolino, Luisa Verdoliva, Christian Riess, Justus Thies, and Matthias Nießner. 2018. FaceForensics: A Large-scale Video Dataset for Forgery Detection in Human Faces. arXiv (2018).Google Scholar

52. Andreas Rössler, Davide Cozzolino, Luisa Verdoliva, Christian Riess, Justus Thies, and Matthias Nießner. 2019. FaceForensics++: Learning to Detect Manipulated Facial Images. arXiv (2019).Google Scholar

53. Johannes Lutz Schönberger and Jan-Michael Frahm. 2016. Structure-from-Motion Revisited. In Conference on Computer Vision and Pattern Recognition (CVPR).Google Scholar

54. Johannes Lutz Schönberger, Enliang Zheng, Marc Pollefeys, and Jan-Michael Frahm. 2016. Pixelwise View Selection for Unstructured Multi-View Stereo. In European Conference on Computer Vision (ECCV).Google Scholar

55. V. Sitzmann, J. Thies, F. Heide, M. Nießner, G. Wetzstein, and M. Zollhöfer. 2019. DeepVoxels: Learning Persistent 3D Feature Embeddings. In Proc. Computer Vision and Pattern Recognition (CVPR), IEEE.Google Scholar

56. Justus Thies, M. Zollhöfer, M. Stamminger, C. Theobalt, and M. Nießner. 2016. Face2Face: Real-time Face Capture and Reenactment of RGB Videos. In Proc. CVPR.Google Scholar

57. J. Thies, M. Zollhöfer, C. Theobalt, M. Stamminger, and M. Nießner. 2018. IGNOR: Image-guided Neural Object Rendering. arXiv 2018 (2018).Google Scholar

58. Shubham Tulsiani, Richard Tucker, and Noah Snavely. 2018. Layer-structured 3D Scene Inference via View Synthesis. In Proc. ECCV.Google ScholarCross Ref

59. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. Video-to-Video Synthesis. In Proc. NeurIPS. Google ScholarDigital Library

60. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018b. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In Proc. CVPR.Google ScholarCross Ref

61. Thomas Whelan, Renato F Salas-Moreno, Ben Glocker, Andrew J Davison, and Stefan Leutenegger. 2016. ElasticFusion: Real-time dense SLAM and light source estimation. The International Journal of Robotics Research 35, 14 (2016), 1697–1716. Google ScholarDigital Library

62. Daniel N. Wood, Daniel I. Azuma, Ken Aldinger, Brian Curless, Tom Duchamp, David H. Salesin, and Werner Stuetzle. 2000. Surface Light Fields for 3D Photography. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’00). ACM Press/Addison-Wesley Publishing Co., New York, NY, USA, 287–296. Google ScholarDigital Library

63. Daniel E Worrall, Stephan J Garbin, Daniyar Turmukhambetov, and Gabriel J Brostow. 2017. Interpretable transformations with encoder-decoder networks. In Proc. ICCV, Vol. 4.Google ScholarCross Ref

64. Xinchen Yan, Jimei Yang, Ersin Yumer, Yijie Guo, and Honglak Lee. 2016. Perspective transformer nets: Learning single-view 3d object reconstruction without 3d supervision. In Proc. NIPS. 1696–1704. Google ScholarDigital Library

65. Ming Zeng, Fukai Zhao, Jiaxiang Zheng, and Xinguo Liu. 2013. Octree-based fusion for realtime 3D reconstruction. Graphical Models 75, 3 (2013), 126–136. Google ScholarDigital Library

66. Ke Colin Zheng, Alex Colburn, Aseem Agarwala, Maneesh Agrawala, David Salesin, Brian Curless, and Michael F. Cohen. 2009. Parallax photography: creating 3D cinematic effects from stills. In Proc. Graphics Interface. ACM Press, 111–118. Google ScholarDigital Library

67. Qian-Yi Zhou and Vladlen Koltun. 2014. Color map optimization for 3D reconstruction with consumer depth cameras. ACM Transactions on Graphics (TOG) 33, 4 (2014), 155. Google ScholarDigital Library

68. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo Magnification: Learning View Synthesis Using Multiplane Images. ACM Trans. Graph. 37, 4, Article 65 (July 2018), 12 pages. Google ScholarDigital Library

69. Tinghui Zhou, Shubham Tulsiani, Weilun Sun, Jitendra Malik, and Alexei A Efros. 2016. View synthesis by appearance flow. In Proc. ECCV. Springer, 286–301.Google Scholar