“Deep relightable textures: volumetric performance capture with neural rendering” by Meka, Pandey, Häne, Orts-Escolano, Barnum, et al. …

Conference:

Type(s):

Title:

- Deep relightable textures: volumetric performance capture with neural rendering

Session/Category Title:

- Neural Rendering

Presenter(s)/Author(s):

- Abhimitra Meka

- Rohit Pandey

- Christian Häne

- Sergio Orts-Escolano

- Peter C. Barnum

- Daniel Erickson

- Yinda Zhang

- Jonathan Taylor

- Sofien Bouaziz

- Chloe LeGendre

- Wan-Chun Alex Ma

- Ryan S. Overbeck

- Thabo Beeler

- Paul E. Debevec

- Shahram Izadi

- Christian Theobalt

- Christoph Rhemann

- Sean Ryan Fanello

- Philip David-Son

Abstract:

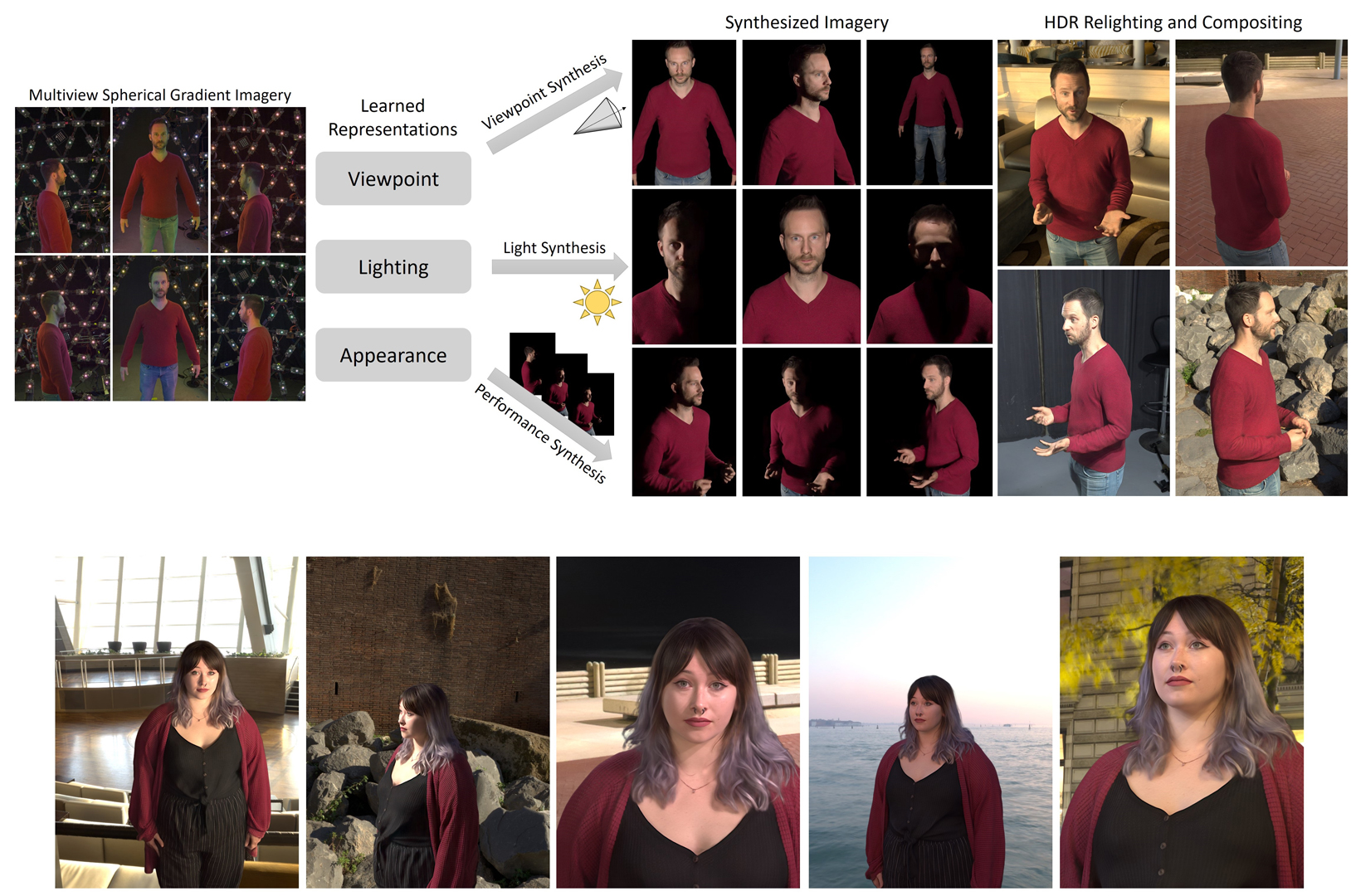

The increasing demand for 3D content in augmented and virtual reality has motivated the development of volumetric performance capture systemsnsuch as the Light Stage. Recent advances are pushing free viewpoint relightable videos of dynamic human performances closer to photorealistic quality. However, despite significant efforts, these sophisticated systems are limited by reconstruction and rendering algorithms which do not fully model complex 3D structures and higher order light transport effects such as global illumination and sub-surface scattering. In this paper, we propose a system that combines traditional geometric pipelines with a neural rendering scheme to generate photorealistic renderings of dynamic performances under desired viewpoint and lighting. Our system leverages deep neural networks that model the classical rendering process to learn implicit features that represent the view-dependent appearance of the subject independent of the geometry layout, allowing for generalization to unseen subject poses and even novel subject identity. Detailed experiments and comparisons demonstrate the efficacy and versatility of our method to generate high-quality results, significantly outperforming the existing state-of-the-art solutions.

References:

1. Robert Anderson, David Gallup, Jonathan T Barron, Janne Kontkanen, Noah Snavely, Carlos Hernández, Sameer Agarwal, and Steven M Seitz. 2016. Jump: virtual reality video. ACM Transactions on Graphics (TOG) 35, 6 (2016), 1–13.Google ScholarDigital Library

2. Guha Balakrishnan, Amy Zhao, Adrian V. Dalca, Frédo Durand, and John V. Guttag. 2018. Synthesizing Images of Humans in Unseen Poses. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE.Google Scholar

3. Jonathan T. Barron and Jitendra Malik. 2015. Shape, Illumination, and Reflectance from Shading. IEEE Trans. Pattern Anal. Mach. Intell. 37, 8 (2015).Google ScholarDigital Library

4. Thabo Beeler, Bernd Bickel, Paul Beardsley, Bob Sumner, and Markus Gross. 2010. High-quality Single-shot Capture of Facial Geometry. In ACM SIGGRAPH 2010.Google Scholar

5. Thabo Beeler, Fabian Hahn, Derek Bradley, Bernd Bickel, Paul Beardsley, Craig Gotsman, Robert W. Sumner, and Markus Gross. 2011. High-quality Passive Facial Performance Capture Using Anchor Frames. In ACM SIGGRAPH 2011.Google Scholar

6. Volker Blanz and Thomas Vetter. 1999. A Morphable Model for the Synthesis of 3D Faces. In Proc. of the Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’99).Google ScholarDigital Library

7. Cedric Cagniart, Edmond Boyer, and Slobodan Ilic. 2010. Probabilistic Deformable Surface Tracking From Multiple Videos. In Proc. ECCV (Lecture Notes in Computer Science), Kostas Daniilidis, Petros Maragos, and Nikos Paragios (Eds.), Vol. 6314. Springer, Heraklion, Greece, 326–339. Google ScholarCross Ref

8. Joel Carranza, Christian Theobalt, Marcus Magnor, and Hans-Peter Seidel. 2003. Free-Viewpoint Video of Human Actors. ACM Trans. Graph. 22 (07 2003), 569–577. Google ScholarDigital Library

9. Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A Efros. 2019. Everybody dance now. In Proceedings of the IEEE International Conference on Computer Vision. 5933–5942.Google ScholarCross Ref

10. Zhang Chen, Anpei Chen, Guli Zhang, Chengyuan Wang, Yu Ji, Kiriakos N. Kutulakos, and Jingyi Yu. 2019. A Neural Rendering Framework for Free-Viewpoint Relighting. CoRR (2019).Google Scholar

11. Alvaro Collet, Ming Chuang, Pat Sweeney, Don Gillett, Dennis Evseev, David Calabrese, Hugues Hoppe, Adam Kirk, and Steve Sullivan. 2015. High-quality Streamable Free-viewpoint Video. ACM TOG (2015).Google Scholar

12. Edilson de Aguiar, Carsten Stoll, Christian Theobalt, Naveed Ahmed, Hans-Peter Seidel, and Sebastian Thrun. 2008. Performance Capture from Sparse Multi-View Video. ACM Trans. Graph. 27, 3 (Aug. 2008), 1–10. Google ScholarDigital Library

13. Paul Debevec. 2012. The Light Stages and Their Applications to Photoreal Digital Actors. In SIGGRAPH Asia. Singapore.Google Scholar

14. Paul Debevec, Tim Hawkins, Chris Tchou, Haarm-Pieter Duiker, Westley Sarokin, and Mark Sagar. 2000. Acquiring the Reflectance Field of a Human Face. In Proceedings of SIGGRAPH 2000 (SIGGRAPH ’00).Google ScholarDigital Library

15. Paul Debevec, Andreas Wenger, Chris Tchou, Andrew Gardner, Jamie Waese, and Tim Hawkins. 2002. A Lighting Reproduction Approach to Live-Action Compositing. In SIGGRAPH.Google Scholar

16. Alexey Dosovitskiy, Jost Tobias Springenberg, and Thomas Brox. 2015. Learning to generate chairs with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 1538–1546.Google ScholarCross Ref

17. Mingsong Dou, Philip Davidson, Sean Ryan Fanello, Sameh Khamis, Adarsh Kowdle, Christoph Rhemann, Vladimir Tankovich, and Shahram Izadi. 2017. Motion2Fusion: Real-time Volumetric Performance Capture. SIGGRAPH Asia (2017).Google Scholar

18. Mingsong Dou, Sameh Khamis, Yury Degtyarev, Philip Davidson, Sean Ryan Fanello, Adarsh Kowdle, Sergio Orts Escolano, Christoph Rhemann, David Kim, Jonathan Taylor, Pushmeet Kohli, Vladimir Tankovich, and Shahram Izadi. 2016. Fusion4D: Real-time Performance Capture of Challenging Scenes. SIGGRAPH (2016).Google Scholar

19. Per Einarsson, Charles-Felix Chabert, Andrew Jones, Wan-Chun Ma, Bruce Lamond, Tim Hawkins, Mark Bolas, Sebastian Sylwan, and Paul Debevec. 2006. Relighting Human Locomotion with Flowed Reflectance Fields. In Proceedings of the 17th Eurographics Conference on Rendering Techniques (EGSR).Google ScholarDigital Library

20. S. M. Ali Eslami, Danilo Jimenez Rezende, Frederic Besse, Fabio Viola, Ari S. Morcos, Marta Garnelo, Avraham Ruderman, Andrei A. Rusu, Ivo Danihelka, Karol Gregor, David P. Reichert, Lars Buesing, Theophane Weber, Oriol Vinyals, Dan Rosenbaum, Neil Rabinowitz, Helen King, Chloe Hillier, Matt Botvinick, Daan Wierstra, Koray Kavukcuoglu, and Demis Hassabis. 2018. Neural scene representation and rendering. Science 360, 6394 (2018).Google Scholar

21. S. R. Fanello, C. Rhemann, V. Tankovich, A. Kowdle, S. Orts Escolano, D. Kim, and S. Izadi. 2016. HyperDepth: Learning Depth from Structured Light Without Matching. In CVPR.Google Scholar

22. Sean Ryan Fanello, Julien Valentin, Adarsh Kowdle, Christoph Rhemann, Vladimir Tankovich, Carlo Ciliberto, Philip Davidson, and Shahram Izadi. 2017. Low Compute and Fully Parallel Computer Vision with HashMatch. In ICCV.Google Scholar

23. Graham Fyffe, Cyrus A. Wilson, and Paul Debevec. 2009. Cosine Lobe Based Relighting from Gradient Illumination Photographs. In SIGGRAPH ’09: Posters (SIGGRAPH ’09).Google ScholarDigital Library

24. Pablo Garrido, Levi Valgaert, Chenglei Wu, and Christian Theobalt. 2013. Reconstructing Detailed Dynamic Face Geometry from Monocular Video. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 32, 6, Article 158 (Nov. 2013), 10 pages.Google Scholar

25. Pablo Garrido, Michael Zollhoefer, Dan Casas, Levi Valgaerts, Kiran Varanasi, Patrick Perez, and Christian Theobalt. 2016. Reconstruction of Personalized 3D Face Rigs from Monocular Video. (2016).Google Scholar

26. Paulo Gotardo, Jérémy Riviere, Derek Bradley, Abhijeet Ghosh, and Thabo Beeler. 2018. Practical Dynamic Facial Appearance Modeling and Acquisition. In SIGGRAPH Asia.Google Scholar

27. Kaiwen Guo, Peter Lincoln, Philip Davidson, Jay Busch, Xueming Yu, Matt Whalen, Geoff Harvey, Sergio Orts-Escolano, Rohit Pandey, Jason Dourgarian, Danhang Tang, Anastasia Tkach, Adarsh Kowdle, Emily Cooper, Mingsong Dou, Sean Fanello, Graham Fyffe, Christoph Rhemann, Jonathan Taylor, Paul Debevec, and Shahram Izadi. 2019. The Relightables: Volumetric Performance Capture of Humans with Realistic Relighting. In ACM TOG.Google ScholarDigital Library

28. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel Brostow. 2018. Deep Blending for Free-Viewpoint Image-Based Rendering. SIGGRAPH Asia (2018).Google Scholar

29. Alexandru Eugen Ichim, Sofien Bouaziz, and Mark Pauly. 2015. Dynamic 3D Avatar Creation from Hand-held Video Input. ACM Trans. Graph. 34, 4, Article 45 (July 2015), 14 pages.Google ScholarDigital Library

30. Matthias Innmann, Michael Zollhöfer, Matthias Nießner, Christian Theobalt, and Marc Stamminger. 2016. VolumeDeform: Real-time Volumetric Non-rigid Reconstruction.Google Scholar

31. Max Jaderberg, Karen Simonyan, Andrew Zisserman, and Koray Kavukcuoglu. 2015. Spatial Transformer Networks. In Advances in Neural Information Processing Systems (NIPS).Google Scholar

32. Michael Kazhdan and Hugues Hoppe. 2013. Screened poisson surface reconstruction. ACM Transactions on Graphics (ToG) 32, 3 (2013), 1–13.Google ScholarDigital Library

33. Sean Kelly, Samantha Cordingley, Patrick Nolan, Christoph Rhemann, Sean Fanello, Danhang Tang, Jude Osborn, Jay Busch, Philip Davidson, Paul Debevec, Peter Denny, Graham Fyffe, Kaiwen Guo, Geoff Harvey, Shahram Izadi, Peter Lincoln, Wan-Chun Alex Ma, Jonathan Taylor, Xueming Yu, Matt Whalen, Jason Dourgarian, Genevieve Blanchett, Narelle French, Kirstin Sillitoe, Tea Uglow, Brenton Spiteri, Emma Pearson, Wade Kernot, and Jonathan Richards. 2019. AR-ia: Volumetric Opera for Mobile Augmented Reality. In SIGGRAPH Asia 2019 XR.Google Scholar

34. Hyeongwoo Kim, Mohamed Elgharib, Hans-Peter Zollöfer, Michael Seidel, Thabo Beeler, Christian Richardt, and Christian Theobalt. 2019. Neural Style-Preserving Visual Dubbing. ACM Transactions on Graphics (TOG) 38, 6 (2019), 178:1–13.Google ScholarDigital Library

35. Hyeongwoo Kim, Pablo Garrido, Ayush Tewari, Weipeng Xu, Justus Thies, Matthias Nießner, Patrick Pérez, Christian Richardt, Michael Zollöfer, and Christian Theobalt. 2018. Deep Video Portraits. ACM Transactions on Graphics (TOG) 37, 4 (2018), 163.Google ScholarDigital Library

36. Diederik P. Kingma and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. CoRR (2014).Google Scholar

37. Adarsh Kowdle, Christoph Rhemann, Sean Fanello, Andrea Tagliasacchi, Jon Taylor, Philip Davidson, Mingsong Dou, Kaiwen Guo, Cem Keskin, Sameh Khamis, David Kim, Danhang Tang, Vladimir Tankovich, Julien Valentin, and Shahram Izadi. 2018. The Need 4 Speed in Real-Time Dense Visual Tracking. SIGGRAPH Asia (2018).Google Scholar

38. Tejas D Kulkarni, William F Whitney, Pushmeet Kohli, and Josh Tenenbaum. 2015. Deep convolutional inverse graphics network. In Advances in neural information processing systems. 2539–2547.Google Scholar

39. Guannan Li, Chenglei Wu, Carsten Stoll, Yebin Liu, Kiran Varanasi, Qionghai Dai, and Christian Theobalt. 2013. Capturing Relightable Human Performances under General Uncontrolled Illumination. Computer Graphics Forum (Proc. EUROGRAPHICS 2013) (2013).Google ScholarCross Ref

40. Lingjie Liu, Weipeng Xu, Michael Zollhoefer, Hyeongwoo Kim, Florian Bernard, Marc Habermann, Wenping Wang, and Christian Theobalt. 2019. Neural Rendering and Reenactment of Human Actor Videos. ACM Transactions on Graphics (2019).Google Scholar

41. Stephen Lombardi, Jason Saragih, Tomas Simon, and Yaser Sheikh. 2018. Deep Appearance Models for Face Rendering. ACM Trans. Graph. 37, 4, Article 68 (July 2018).Google ScholarDigital Library

42. Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. 2019. Neural Volumes: Learning Dynamic Renderable Volumes from Images. SIGGRAPH (2019).Google ScholarDigital Library

43. Liqian Ma, Xu Jia, Qianru Sun, Bernt Schiele, Tinne Tuytelaars, and Luc Van Gool. 2017. Pose guided person image generation. In NIPS.Google Scholar

44. Liqian Ma, Qianru Sun, Stamatios Georgoulis, Luc Van Gool, Bernt Schiele, and Mario Fritz. 2018. Disentangled Person Image Generation. CVPR (2018).Google Scholar

45. Wan-Chun Ma, Tim Hawkins, Pieter Peers, Charles-Felix Chabert, Malte Weiss, and Paul Debevec. 2007. Rapid Acquisition of Specular and Diffuse Normal Maps from Polarized Spherical Gradient Illumination. In Proceedings of the Eurographics Conference on Rendering Techniques (EGSR’07).Google ScholarDigital Library

46. Ricardo Martin-Brualla, Rohit Pandey, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Julien Valentin, Sameh Khamis, Philip Davidson, Anastasia Tkach, Peter Lincoln, Adarsh Kowdle, Christoph Rhemann, Dan B Goldman, Cem Keskin, Steve Seitz, Shahram Izadi, and Sean Fanello. 2018. LookinGood: Enhancing Performance Capture with Real-time NeuralRe-Rendering. In SIGGRAPH Asia.Google Scholar

47. Abhimitra Meka, Gereon Fox, Michael Zollhöfer, Christian Richardt, and Christian Theobalt. 2017. Live User-Guided Intrinsic Video For Static Scene. IEEE Transactions on Visualization and Computer Graphics 23, 11 (2017).Google ScholarDigital Library

48. Abhimitra Meka, Christian Haene, Rohit Pandey, Michael Zollhoefer, Sean Fanello, Graham Fyffe, Adarsh Kowdle, Xueming Yu, Jay Busch, Jason Dourgarian, Peter Denny, Sofien Bouaziz, Peter Lincoln, Matt Whalen, Geoff Harvey, Jonathan Taylor, Shahram Izadi, Andrea Tagliasacchi, Paul Debevec, Christian Theobalt, Julien Valentin, and Christoph Rhemann. 2019. Deep Reflectance Fields – High-Quality Facial Reflectance Field Inference From Color Gradient Illumination. ACM Transactions on Graphics (Proceedings SIGGRAPH).Google Scholar

49. Abhimitra Meka, Maxim Maximov, Michael Zollhoefer, Avishek Chatterjee, Hans-Peter Seidel, Christian Richardt, and Christian Theobalt. 2018. LIME: Live Intrinsic Material Estimation. In Proceedings of Computer Vision and Pattern Recognition (CVPR). 11.Google ScholarCross Ref

50. Microsoft. 2014. UVAtlas – isochart texture atlasing. http://github.com/Microsoft/UVAtlasGoogle Scholar

51. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv:cs.CV/2003.08934Google Scholar

52. Natalia Neverova, Riza Alp Güler, and Iasonas Kokkinos. 2018. Dense Pose Transfer. In European Conference on Computer Vision (ECCV).Google Scholar

53. Sergio Orts-Escolano, Christoph Rhemann, Sean Fanello, Wayne Chang, Adarsh Kowdle, Yury Degtyarev, David Kim, Philip L. Davidson, Sameh Khamis, Mingsong Dou, Vladimir Tankovich, Charles Loop, Qin Cai, Philip A. Chou, Sarah Mennicken, Julien Valentin, Vivek Pradeep, Shenlong Wang, Sing Bing Kang, Pushmeet Kohli, Yuliya Lutchyn, Cem Keskin, and Shahram Izadi. 2016. Holoportation: Virtual 3D Teleportation in Real-time. In UIST.Google Scholar

54. Rohit Pandey, Anastasia Tkach, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Ricardo Martin-Brualla, Andrea Tagliasacchi, George Papandreou, Philip Davidson, Cem Keskin, Shahram Izadi, and Sean Fanello. 2019. Volumetric Capture of Humans with a Single RGBD Camera via Semi-Parametric Learning. In CVPR.Google Scholar

55. Pieter Peers, Tim Hawkins, and Paul E. Debevec. 2006. A Reflective Light Stage. Technical Report.Google Scholar

56. Matt Pharr, Wenzel Jakob, and Greg Humphreys. 2016. Physically Based Rendering: From Theory to Implementation (3rd ed.). Morgan Kaufmann Publishers Inc., San Francisco, CA, USA.Google ScholarDigital Library

57. Julien Philip, Michaël Gharbi, Tinghui Zhou, Alexei Efros, and George Drettakis. 2019. Multi-view Relighting Using a Geometry-Aware Network. SIGGRAPH (2019).Google Scholar

58. Gerard Pons-Moll, Javier Romero, Naureen Mahmood, and Michael J. Black. 2015. Dyna: A Model of Dynamic Human Shape in Motion. ACM Transactions on Graphics, (Proc. SIGGRAPH) 34, 4 (Aug. 2015), 120:1–120:14.Google Scholar

59. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation. MICCAI (2015).Google Scholar

60. Soumyadip Sengupta, Vivek Jayaram, Brian Curless, Steve Seitz, and Ira Kemelmacher-Shlizerman. 2020. Background Matting: The World is Your Green Screen. In Computer Vision and Pattern Recognition (CVPR).Google Scholar

61. Mike Seymour. 2020. Face it Will: Gemini Man. https://www.fxguide.com/fxfeatured/face-it-will-gemini-man/ (2020).Google Scholar

62. Aliaksandra Shysheya, Egor Zakharov, Kara-Ali Aliev, R S Bashirov, Egor Burkov, Karim Iskakov, Aleksei Ivakhnenko, Yury Malkov, I. M. Pasechnik, Dmitry Ulyanov, Alexander Vakhitov, and Victor S. Lempitsky. 2019. Textured Neural Avatars. CVPR (2019).Google Scholar

63. Chenyang Si, Wei Wang, Liang Wang, and Tieniu Tan. 2018. Multistage Adversarial Losses for Pose-Based Human Image Synthesis. In CVPR.Google Scholar

64. Vincent Sitzmann, Julien N.P. Martel, Alexander W. Bergman, David B. Lindell, and Gordon Wetzstein. 2020. Implicit Neural Representations with Periodic Activation Functions. In arXiv.Google Scholar

65. Vincent Sitzmann, Justus Thies, Felix Heide, Matthias Nießner, Gordon Wetzstein, and Michael Zollhöfer. 2018. DeepVoxels: Learning Persistent 3D Feature Embeddings. CoRR abs/1812.01024 (2018).Google Scholar

66. Vincent Sitzmann, Michael Zollhöfer, and Gordon Wetzstein. 2019. Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations. arXiv:cs.CV/1906.01618Google Scholar

67. J. Starck and A. Hilton. 2007. Surface Capture for Performance-Based Animation. IEEE Computer Graphics and Applications (2007).Google Scholar

68. Tiancheng Sun, Jonathan T Barron, Yun-Ta Tsai, Zexiang Xu, Xueming Yu, Graham Fyffe, Christoph Rhemann, Jay Busch, Paul Debevec, and Ravi Ramamoorthi. 2019. Single image portrait relighting. ACM Transactions on Graphics (TOG) 38, 4 (2019), 79.Google ScholarDigital Library

69. Matthew Tancik, Pratul P. Srinivasan, Ben Mildenhall, Sara Fridovich-Keil, Nithin Raghavan, Utkarsh Singhal, Ravi Ramamoorthi, Jonathan T. Barron, and Ren Ng. 2020. Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains. arXiv preprint arXiv:2006.10739 (2020).Google Scholar

70. L. M. Tanco and A. Hilton. 2000. Realistic synthesis of novel human movements from a database of motion capture examples. In Proceedings Workshop on Human Motion.Google ScholarCross Ref

71. Vladimir Tankovich, Michael Schoenberg, Sean Ryan Fanello, Adarsh Kowdle, Christoph Rhemann, Max Dzitsiuk, Mirko Schmidt, Julien Valentin, and Shahram Izadi. 2018. SOS: Stereo Matching in O(1) with Slanted Support Windows. IROS (2018).Google Scholar

72. Ayush Tewari, Ohad Fried, Justus Thies, Vincent Sitzmann, Stephen Lombardi, Kalyan Sunkavalli, Ricardo Martin-Brualla, Tomas Simon, Jason Saragih, Matthias Nießner, Rohit Pandey, Sean Fanello, Gordon Wetzstein, Jun-Yan Zhu, Christian Theobalt, Maneesh Agrawala, Eli Shechtman, Dan B Goldman, and Michael Zollhoefer. 2020. State of the Art on Neural Rendering. In Eurographics.Google Scholar

73. Christian Theobalt, Naveed Ahmed, Hendrik P. A. Lensch, Marcus A. Magnor, and Hans-Peter Seidel. 2007. Seeing People in Different Light-Joint Shape, Motion, and Reflectance Capture. IEEE TVCG 13, 4 (2007), 663–674.Google Scholar

74. Justus Thies, Michael Zollhoefer, Marc Stamminger, Christian Theobalt, and Matthias Niessner. 2016. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Proc. CVPR.Google ScholarDigital Library

75. Justus Thies, Michael Zollhöfer, and Matthias Niessner. 2019. Deferred Neural Rendering: Image Synthesis Using Neural Textures. SIGGRAPH and ACM TOG (2019). Daniel Vlasic, Ilya Baran, Wojciech Matusik, and Jovan Popović. 2008. Articulated Mesh Animation from Multi-View Silhouettes. ACM Trans. Graph. 27, 3 (Aug. 2008), 1–9. Google ScholarDigital Library

76. Shenlong Wang, Sean Ryan Fanello, Christoph Rhemann, Shahram Izadi, and Pushmeet Kohli. 2016. The Global Patch Collider. CVPR (2016).Google Scholar

77. Zhen Wen, Zicheng Liu, and T. S. Huang. 2003. Face relighting with radiance environment maps. In CVPR.Google Scholar

78. Andreas Wenger, Andrew Gardner, Chris Tchou, Jonas Unger, Tim Hawkins, and Paul Debevec. 2005a. Performance Relighting and Reflectance Transformation with Time-Multiplexed Illumination. In SIGGRAPH.Google Scholar

79. Andreas Wenger, Andrew Gardner, Chris Tchou, Jonas Unger, Tim Hawkins, and Paul Debevec. 2005b. Performance Relighting and Reflectance Transformation with Time-multiplexed Illumination. In ACM SIGGRAPH 2005 Papers (SIGGRAPH ’05).Google Scholar

80. Chenglei Wu, Carsten Stoll, Levi Valgaerts, and Christian Theobalt. 2013. On-set Performance Capture of Multiple Actors with a Stereo Camera. ACM Trans. Graph. (Proc. SIGGRAPH Asia) 32, 6, Article 161 (Nov. 2013), 11 pages.Google Scholar

81. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019. Deep View Synthesis from Sparse Photometric Images. SIGGRAPH (2019).Google Scholar

82. Zexiang Xu, Kalyan Sunkavalli, Sunil Hadap, and Ravi Ramamoorthi. 2018. Deep image-based relighting from optimal sparse samples. ACM Trans. on Graphics (2018).Google Scholar

83. A. Zaharescu, E. Boyer, K. Varanasi, and R. Horaud. 2009. Surface feature detection and description with applications to mesh matching. In CVPR.Google Scholar

84. Richard Zhang. 2019. Making Convolutional Networks Shift-Invariant Again. In ICML.Google Scholar

85. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The unreasonable effectiveness of deep features as a perceptual metric. IEEE conference on computer vision and pattern recognition (CVPR) (2018).Google ScholarCross Ref

86. Xiuming Zhang, Sean Fanello, Yun-Ta Tsai, Tiancheng Sun, Tianfan Xue, Rohit Pandey, Sergio Orts-Escolano, Philip Davidson, Christoph Rhemann, Paul Debevec, Jonathan T. Barron, Ravi Ramamoorthi, and William T. Freeman. 2020. Neural Light Transport for Relighting and View Synthesis. CoRR (2020).Google Scholar

87. Bo Zhao, Xiao Wu, Zhi-Qi Cheng, Hao Liu, and Jiashi Feng. 2017. Multi-View Image Generation from a Single-View. CoRR (2017).Google Scholar

88. Hao Zhou, Sunil Hadap, Kalyan Sunkavalli, and David Jacobs. 2019. Deep Single Image Portrait Relighting. In International Conference on Computer Vision (ICCV).Google Scholar

89. Zhenyao Zhu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2014. Multi-view perceptron: a deep model for learning face identity and view representations. In NIPS.Google Scholar