“Affective Music Recommendation System using Input Images” by Sasaki, Hirai, Ohya and Morishima

Entry Number: 15

Abstract:

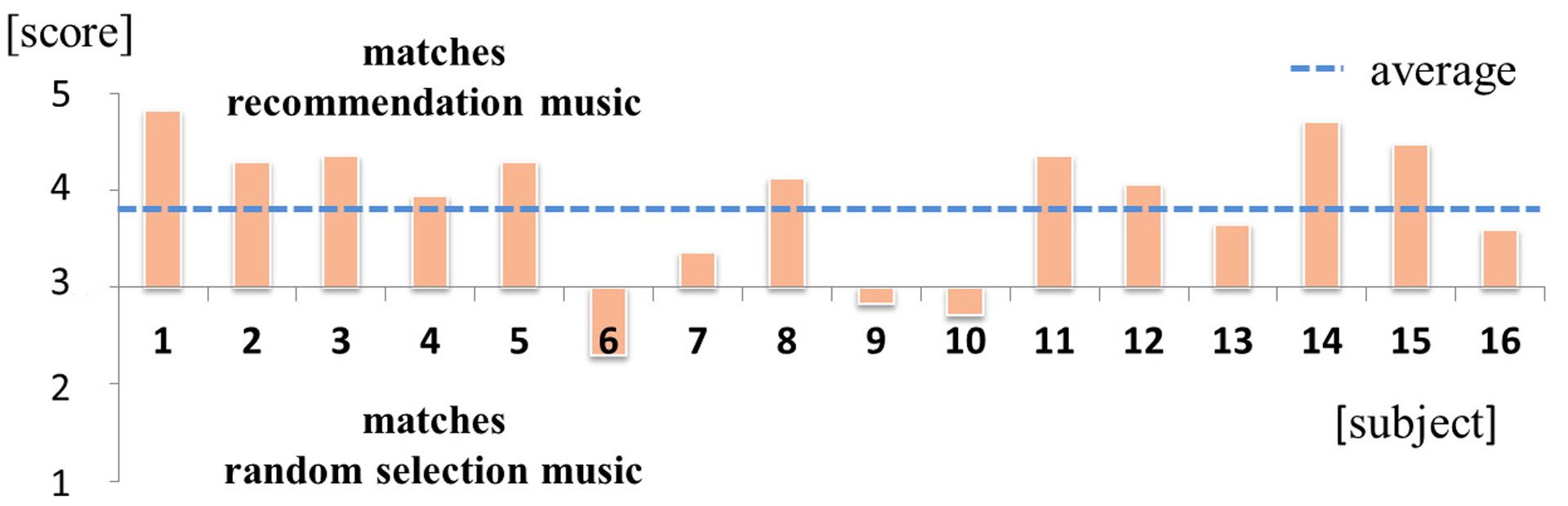

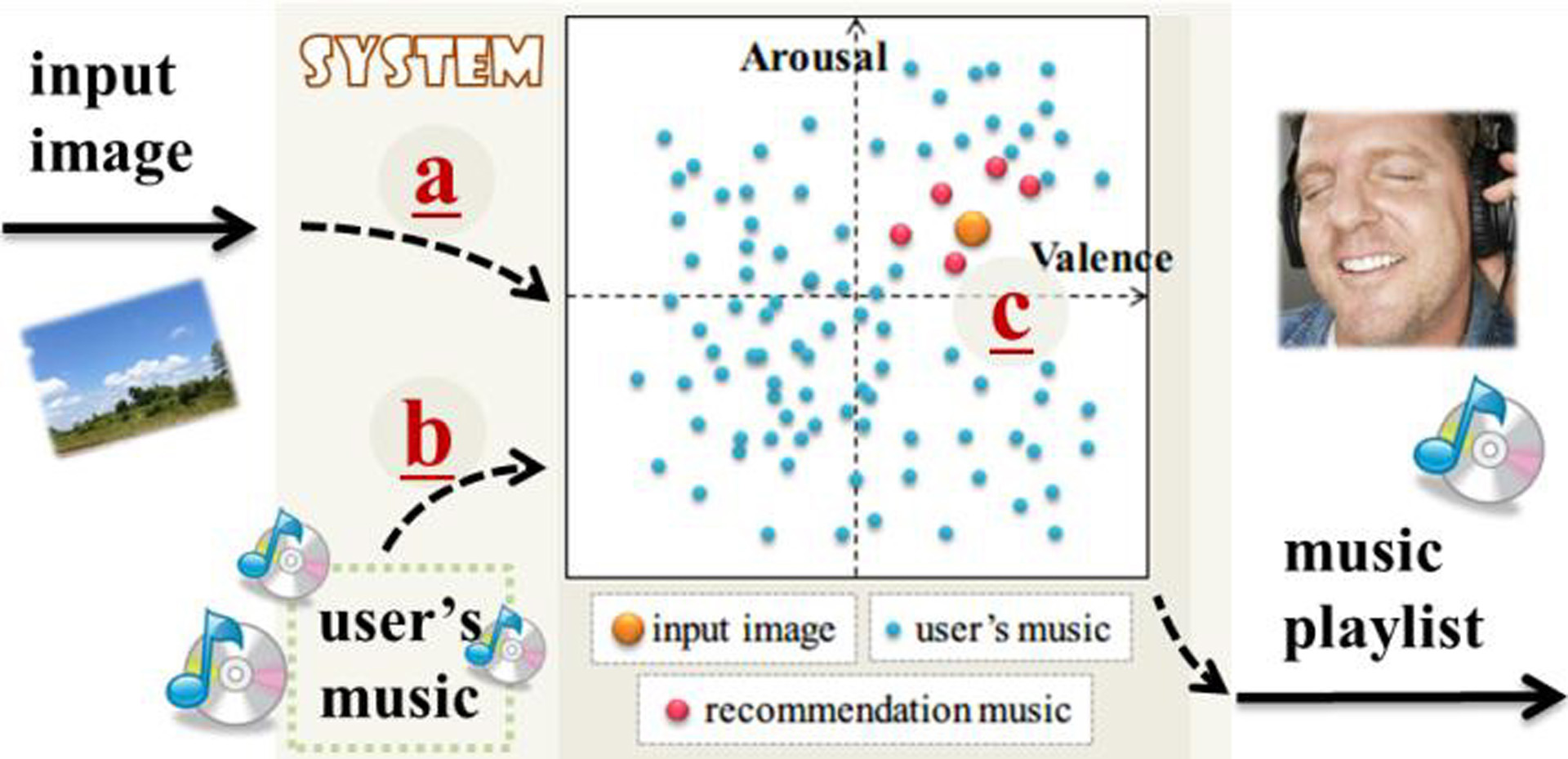

Music that matches our current mood can leave a deep impression, and this is usually what we hope for when we listen to music. However, we often do not know which music best matches our present mood, so we have to listen to song after song searching for one that fits. Because selecting music manually is difficult, we need a recommendation system that takes our mood into account. Most recommendation methods, such as collaborative filtering or content-based similarity, do not target a specific mood. Moreover, there may be no word that exactly describes a given mood, so text-based retrieval is not effective. In this paper, we assume that a relationship exists between our mood and images, because visual information affects our mood when we listen to music. Based on this assumption, we present an affective music recommendation system that takes an image as input and requires no textual information.
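The abstract does not spell out how an input image is turned into a music recommendation. The following is only a minimal sketch under loudly stated assumptions, not the authors' method: mood is represented as a point on a two-dimensional valence-arousal plane (the layout of Russell's circumplex model of affect); an image's mood is estimated by a hypothetical heuristic in which mean brightness drives valence and mean saturation drives arousal; every song in a hypothetical catalog already carries a valence-arousal annotation; and songs are ranked by Euclidean distance to the image's mood. The names `image_mood`, `recommend`, and the catalog entries are illustrative inventions.

```python
import colorsys
import math
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]   # 8-bit (r, g, b) pixel
Mood = Tuple[float, float]   # (valence, arousal), each in [-1, 1]
Song = Tuple[str, Mood]      # (title, precomputed mood annotation)


def image_mood(pixels: Sequence[RGB]) -> Mood:
    """Estimate a (valence, arousal) point from an image's pixels.

    ASSUMPTION (hypothetical heuristic, not the authors' model):
    mean brightness drives valence and mean saturation drives arousal.
    """
    if not pixels:
        raise ValueError("image has no pixels")
    total_s = total_v = 0.0
    for r, g, b in pixels:
        # colorsys expects channels in [0, 1]; returns (hue, saturation, value).
        _, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        total_s += s
        total_v += v
    mean_s = total_s / len(pixels)
    mean_v = total_v / len(pixels)
    # Rescale both means from [0, 1] to [-1, 1].
    return (2 * mean_v - 1, 2 * mean_s - 1)


def recommend(pixels: Sequence[RGB], catalog: List[Song], k: int = 3) -> List[str]:
    """Return the titles of the k songs whose mood is closest to the image's."""
    target = image_mood(pixels)
    # Nearest-neighbour ranking by Euclidean distance on the valence-arousal plane.
    ranked = sorted(catalog, key=lambda song: math.dist(target, song[1]))
    return [title for title, _ in ranked[:k]]


# Hypothetical catalog with hand-assigned (valence, arousal) annotations.
catalog: List[Song] = [
    ("calm_piano", (0.5, -0.6)),
    ("upbeat_pop", (0.8, 0.7)),
    ("dark_ambient", (-0.7, -0.5)),
    ("angry_metal", (-0.6, 0.8)),
]

# A bright, nearly grey image (e.g. a pale sky) maps to high valence, low arousal.
pale_sky = [(220, 225, 235)] * 100
print(recommend(pale_sky, catalog, k=2))  # -> ['calm_piano', 'upbeat_pop']
```

Nearest-neighbour search keeps the sketch short; a practical system would likely need a learned image-to-mood model and per-song mood estimates, for example predicted from audio features, in place of the placeholder heuristic and hand-assigned annotations used here.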
