“Adversarial Monte Carlo denoising with conditioned auxiliary feature modulation” by Xu, Zhang, Wang, Xu, Yang, et al. …

Title:

- Adversarial Monte Carlo denoising with conditioned auxiliary feature modulation

Session/Category Title: Samples & Speckles

Abstract:

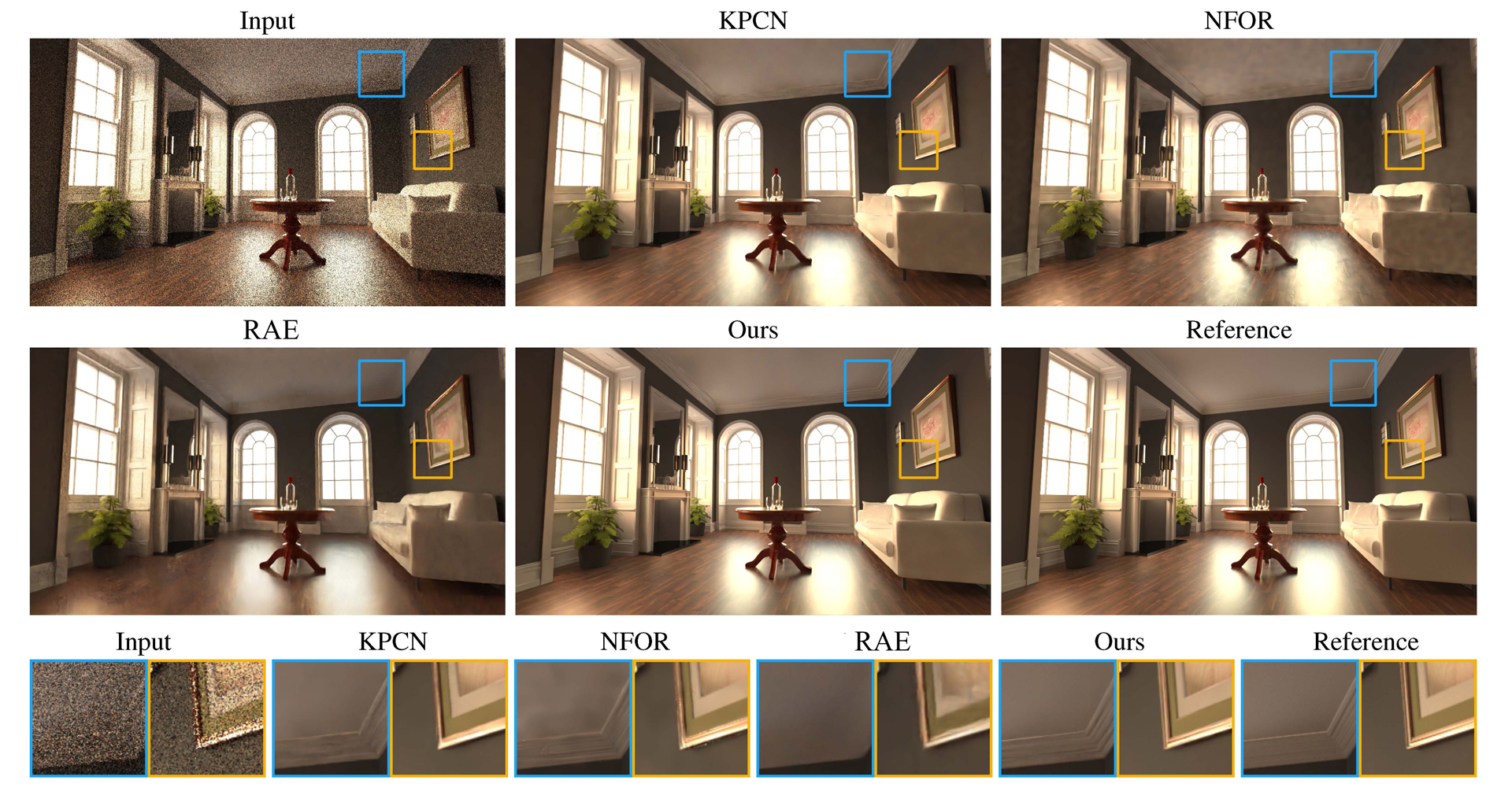

Denoising Monte Carlo renderings produced at very low sample rates remains a major challenge in photo-realistic rendering research. Many previous methods, both regression-based and learning-based, have been explored to achieve better rendering quality at lower computational cost. However, most of these methods rely on handcrafted optimization objectives, which lead to artifacts such as blur and unfaithful details. In this paper, we present an adversarial approach for denoising Monte Carlo renderings. Our key insight is that generative adversarial networks can help denoiser networks produce more realistic high-frequency details and global illumination by learning the distribution from a set of high-quality Monte Carlo path-traced images. We also adapt a novel feature modulation method to make better use of auxiliary features, including normal, albedo, and depth. Compared to previous state-of-the-art methods, our approach produces a better reconstruction of the Monte Carlo integral from a few samples, performs more robustly at different sample rates, and takes only a second for megapixel images.
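The abstract does not spell out how the auxiliary features modulate the denoiser. One common form of feature modulation is a per-channel scale and shift predicted from the conditioning input, and the sketch below illustrates that general idea for a single pixel. It is not the authors' network: the function names, the dense-layer parameterization, and all weight values are assumptions made for the example.

```python
from typing import List

Vector = List[float]


def linear(weights: List[Vector], bias: Vector, x: Vector) -> Vector:
    """Apply a small dense layer: weights @ x + bias."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def modulate(features: Vector, aux: Vector,
             w_scale: List[Vector], b_scale: Vector,
             w_shift: List[Vector], b_shift: Vector) -> Vector:
    """Scale and shift each feature channel using values predicted from aux."""
    scale = linear(w_scale, b_scale, aux)  # one scale per feature channel
    shift = linear(w_shift, b_shift, aux)  # one shift per feature channel
    return [s * f + t for s, f, t in zip(scale, features, shift)]


# Hypothetical per-pixel inputs: 2 feature channels and 3 auxiliary values
# (for example, one component each of normal, albedo, and depth).
features = [1.0, 2.0]
aux = [1.0, 0.0, 2.0]

# Hypothetical weights; in a trained network these would be learned.
w_scale = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
b_scale = [0.0, 0.0]
w_shift = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
b_shift = [0.5, 0.0]

modulated = modulate(features, aux, w_scale, b_scale, w_shift, b_shift)
```

With zero weights, unit scale bias, and zero shift bias, `modulate` returns the features unchanged, so under this formulation the auxiliary input can only alter the features through the learned scale and shift.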
