“Painting With DEGAS (digitally extrapolated graphics via algorithmic strokes)” by Caruso

Entry Number: 13

Abstract:

INTRODUCTION

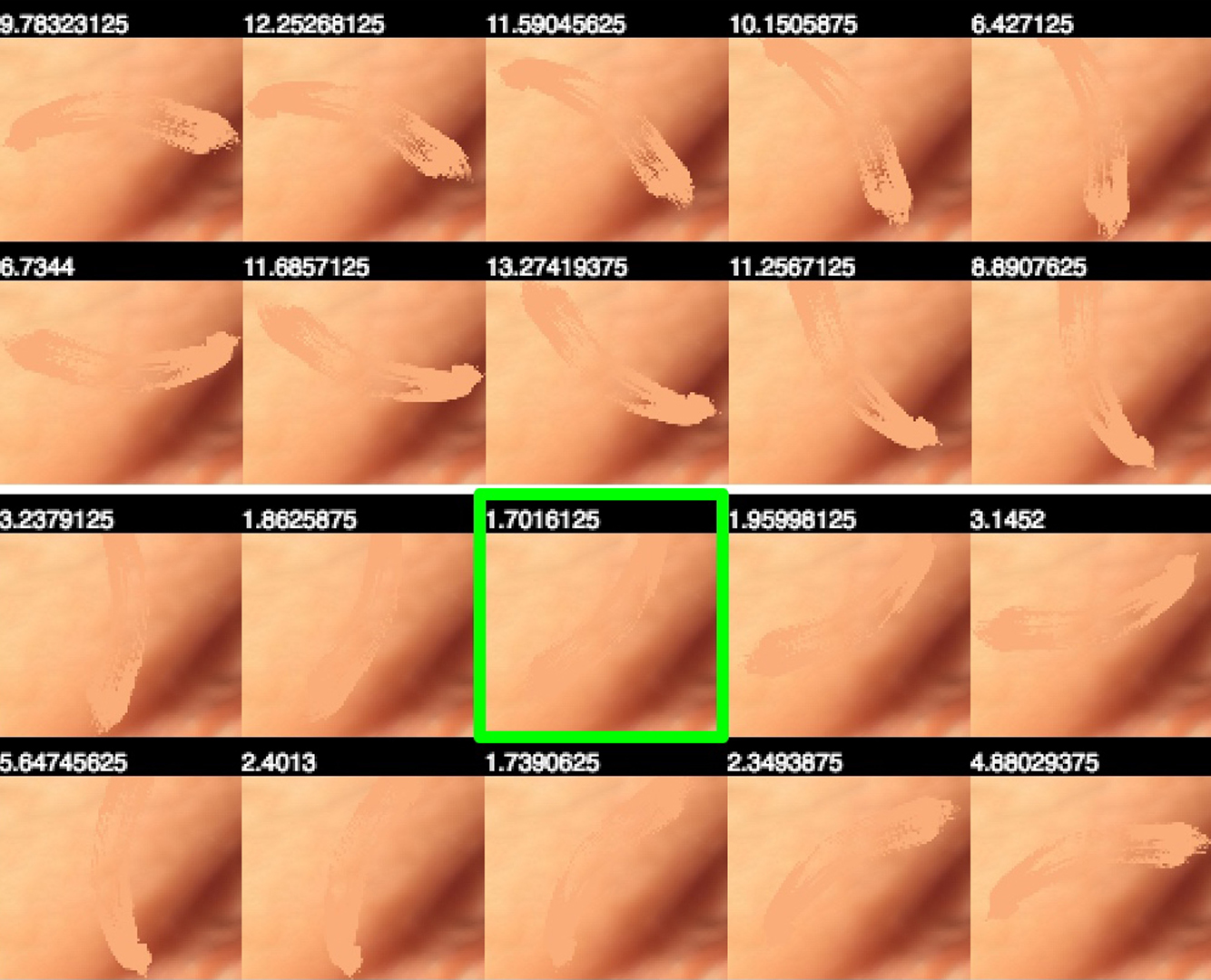

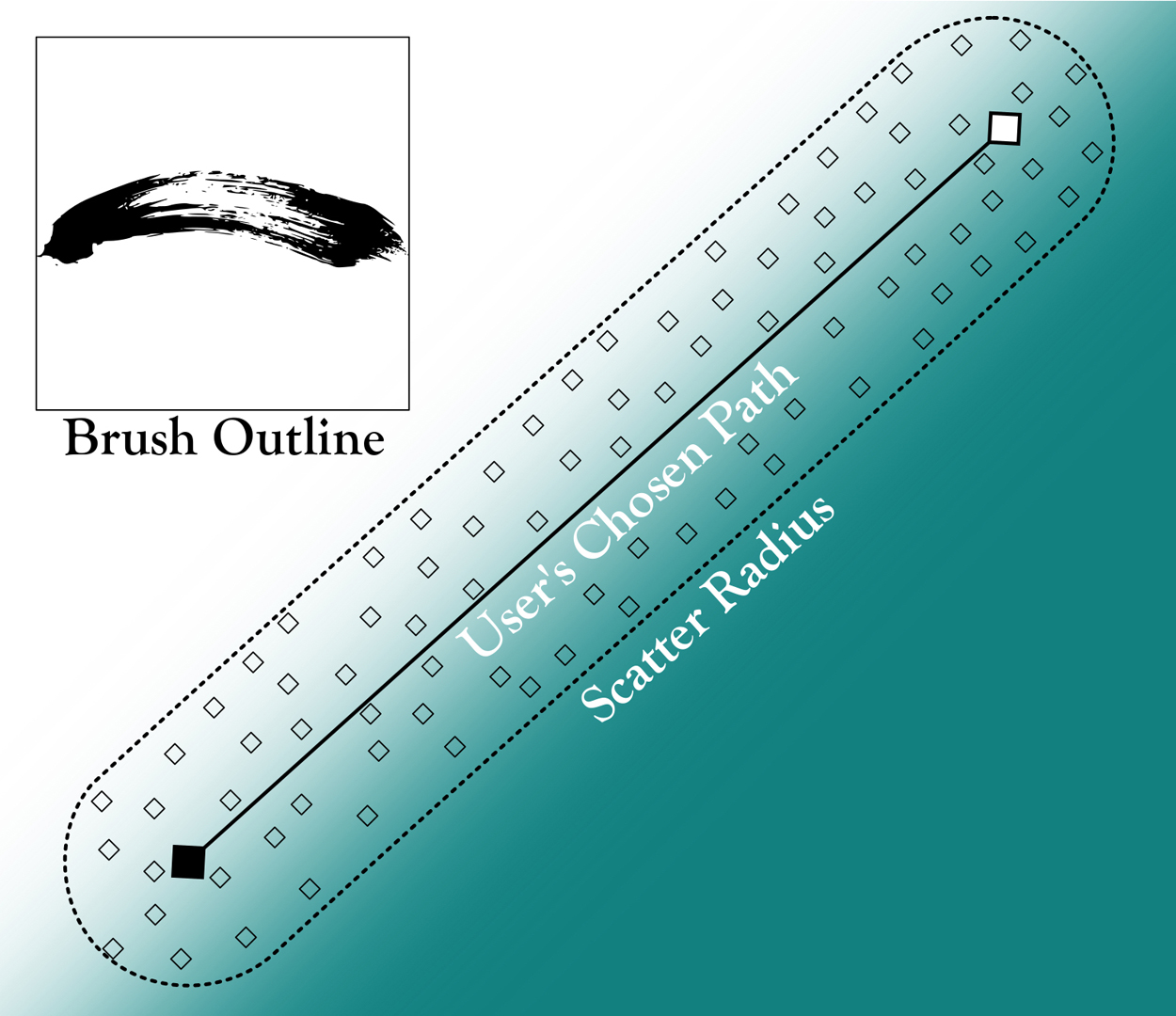

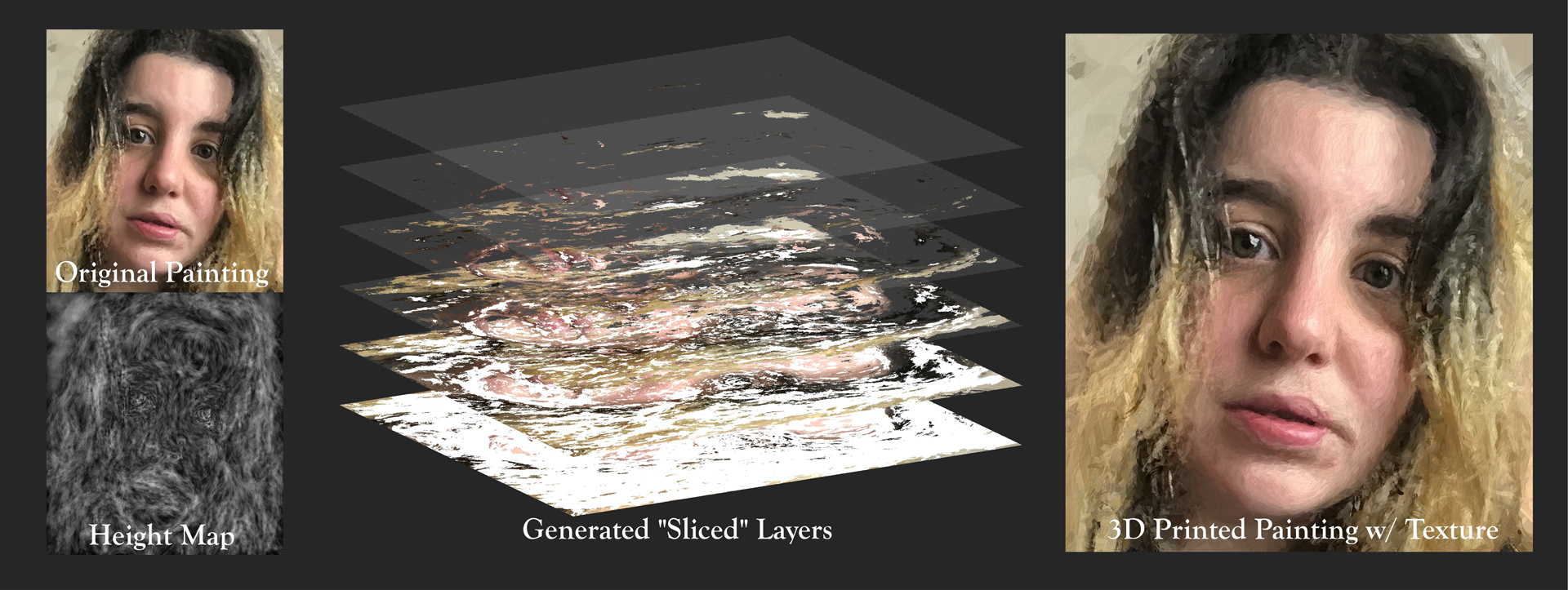

Most photograph-to-oil-painting algorithms are based on the techniques described by [Litwinowicz 1997] and improved upon by [Hertzmann 2001]. They are essentially fully automated processes that place strokes at random, choosing stroke orientations to follow local gradient normals. These strokes are built up over several layers in decreasing order of stroke size: broad strokes are painted first, and details are filled in afterwards. Beyond the initially chosen parameters and some masking considerations, the user has little agency over where the algorithm places strokes, and cannot make direct changes or touch-ups until every stroke has been laid down on the canvas. In effect, the algorithm behaves like an “image filter”, applied the way one would adjust contrast or add texture.
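As a rough illustration of the automated pipeline described above, the following minimal sketch plans strokes layer by layer, largest brush first, at random positions, and orients each stroke perpendicular to the local luminance gradient. It follows only the summary given in this abstract, not the exact procedures of the cited papers. The function names (`luminance`, `strokeAngle`, `planStrokes`), the central-difference gradient, and the RGBA buffer layout (as in a Canvas `ImageData.data` array) are assumptions made for illustration.

```javascript
// Hypothetical helper: luminance at (x, y) of an RGBA buffer laid out like
// ImageData.data, with coordinates clamped to the image borders.
function luminance(data, width, height, x, y) {
  const cx = Math.min(width - 1, Math.max(0, x));
  const cy = Math.min(height - 1, Math.max(0, y));
  const i = 4 * (cy * width + cx);
  return 0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2];
}

// Stroke angle in radians, perpendicular to the local gradient.
// The gradient is estimated with central differences (an assumption).
function strokeAngle(data, width, height, x, y) {
  const gx = luminance(data, width, height, x + 1, y) -
             luminance(data, width, height, x - 1, y);
  const gy = luminance(data, width, height, x, y + 1) -
             luminance(data, width, height, x, y - 1);
  return Math.atan2(gy, gx) + Math.PI / 2;
}

// One layer per brush radius, largest radius first; positions are random.
// `random` can be replaced with a seeded generator for reproducible output.
function planStrokes(data, width, height, radii, strokesPerLayer, random = Math.random) {
  const strokes = [];
  for (const radius of [...radii].sort((a, b) => b - a)) {
    for (let n = 0; n < strokesPerLayer; n++) {
      const x = Math.floor(random() * width);
      const y = Math.floor(random() * height);
      strokes.push({ x, y, radius, angle: strokeAngle(data, width, height, x, y) });
    }
  }
  return strokes;
}
```

Note that the user's only inputs here are the initial parameters (`radii`, `strokesPerLayer`); every placement decision is made by the algorithm, which is the limitation the paragraph above points out.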

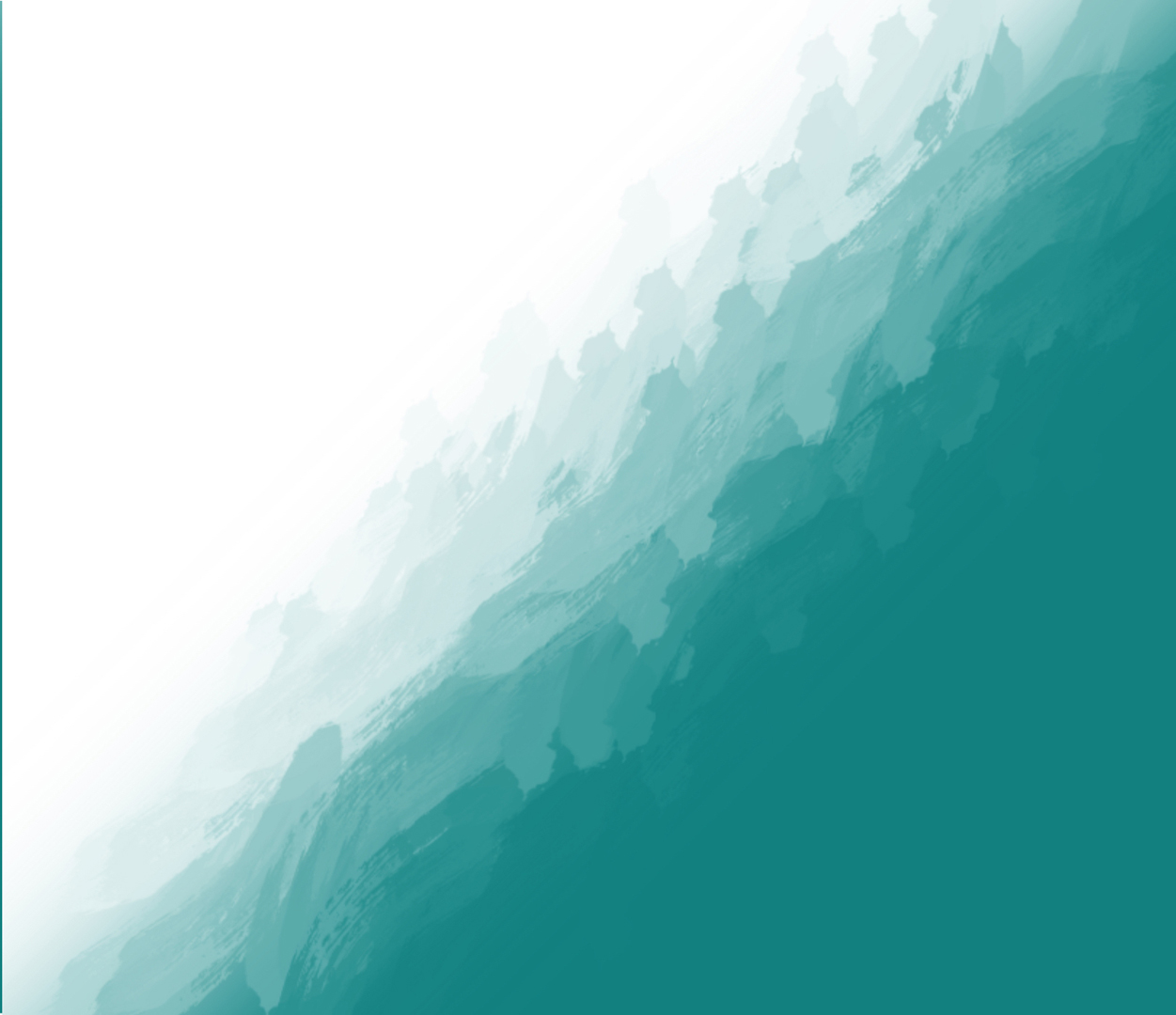

DEGAS, by contrast, is a novel way of transforming a photograph into an oil painting: it treats the transformative algorithm as a tool or “brush” in a conventional drawing program. The process involves repeatedly drawing brushstroke outlines (whose orientations are chosen by a least-color-variance method) over the user’s direct input, while giving the user direct access to the algorithm’s parameters and the ability to alter them in real time. At its heart this is a process-oriented approach, following the general stroke-by-stroke technique used by Impressionist painters, rather than a product-oriented approach like a neural network (such as Ostagram [Morugin 2015]), which reproduces patterns visually but not the choices behind them, or a physical viscosity simulation like Adobe WetBrush [Chen 2016], which simulates the fluidity of paint. Where prior techniques required strong hardware, DEGAS is computationally inexpensive enough to run in a mobile web browser on a modern consumer-grade tablet computer with JavaScript and HTML5 Canvas capabilities.
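The abstract does not spell out how the least-color-variance method works, so the following is only one plausible reading, offered as a sketch: for a point under the user's pointer, try a fixed set of candidate angles, sample the source photograph along a short segment at each angle, and keep the angle whose samples vary least in color. The function names (`colorVarianceAlong`, `leastVarianceAngle`), the number of candidate angles, and the variance measure (sum of per-channel R, G, B variances) are all assumptions, not details taken from DEGAS itself.

```javascript
// Hypothetical measure: sum of per-channel (R, G, B) variances of the pixels
// sampled along a segment of the given length, centred on (x, y).
// `data` is an RGBA buffer laid out like ImageData.data.
function colorVarianceAlong(data, width, height, x, y, angle, length) {
  const sum = [0, 0, 0];
  const sumSq = [0, 0, 0];
  let count = 0;
  for (let t = -length / 2; t <= length / 2; t++) {
    const px = Math.round(x + t * Math.cos(angle));
    const py = Math.round(y + t * Math.sin(angle));
    if (px < 0 || py < 0 || px >= width || py >= height) continue; // skip off-image samples
    const i = 4 * (py * width + px);
    for (let c = 0; c < 3; c++) {
      sum[c] += data[i + c];
      sumSq[c] += data[i + c] * data[i + c];
    }
    count++;
  }
  if (count === 0) return Infinity;
  let variance = 0;
  for (let c = 0; c < 3; c++) {
    const mean = sum[c] / count;
    variance += sumSq[c] / count - mean * mean;
  }
  return variance;
}

// Try `candidates` evenly spaced angles in [0, π) and return the one whose
// sampled colors vary least, together with that variance.
function leastVarianceAngle(data, width, height, x, y, length, candidates = 16) {
  let best = { angle: 0, variance: Infinity };
  for (let k = 0; k < candidates; k++) {
    const angle = (k * Math.PI) / candidates;
    const variance = colorVarianceAlong(data, width, height, x, y, angle, length);
    if (variance < best.variance) best = { angle, variance };
  }
  return best;
}
```

In an interactive setting, a function like `leastVarianceAngle` could be called for each pointer position reported while the user drags the brush, with `length` and `candidates` exposed as adjustable parameters. Each call touches only a handful of pixels, which is consistent with the low computational cost the abstract describes.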

References:

- Zhili Chen. 2016. Adobe WetBrush. https://www.youtube.com/watch?v=k_ndr3qDXKo

- Emmanuel Flores and Bas Korsten. 2016. The Next Rembrandt. http://www.nextrembrandt.com/

- Aaron Hertzmann. 2001. Algorithms for rendering in artistic styles. Ph.D. Dissertation. https://cs.nyu.edu/media/publications/hertzmann_aaron.pdf

- Peter Litwinowicz. 1997. Processing images and video for an impressionist effect. Association for Computing Machinery, 407–414. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.87.6457&rep=rep1&ENGINE=pdf

- Sergey Morugin. 2015. Ostagram. http://www.ostagram.ru/, https://github.com/SergeyMorugin/ostagram