“PiCam: an ultra-thin high performance monolithic camera array” by Venkataraman, Lelescu, Duparré, McMahon, Molina, et al. …

Title:

- PiCam: an ultra-thin high performance monolithic camera array

Session/Category Title:

- Computational Photography

Abstract:

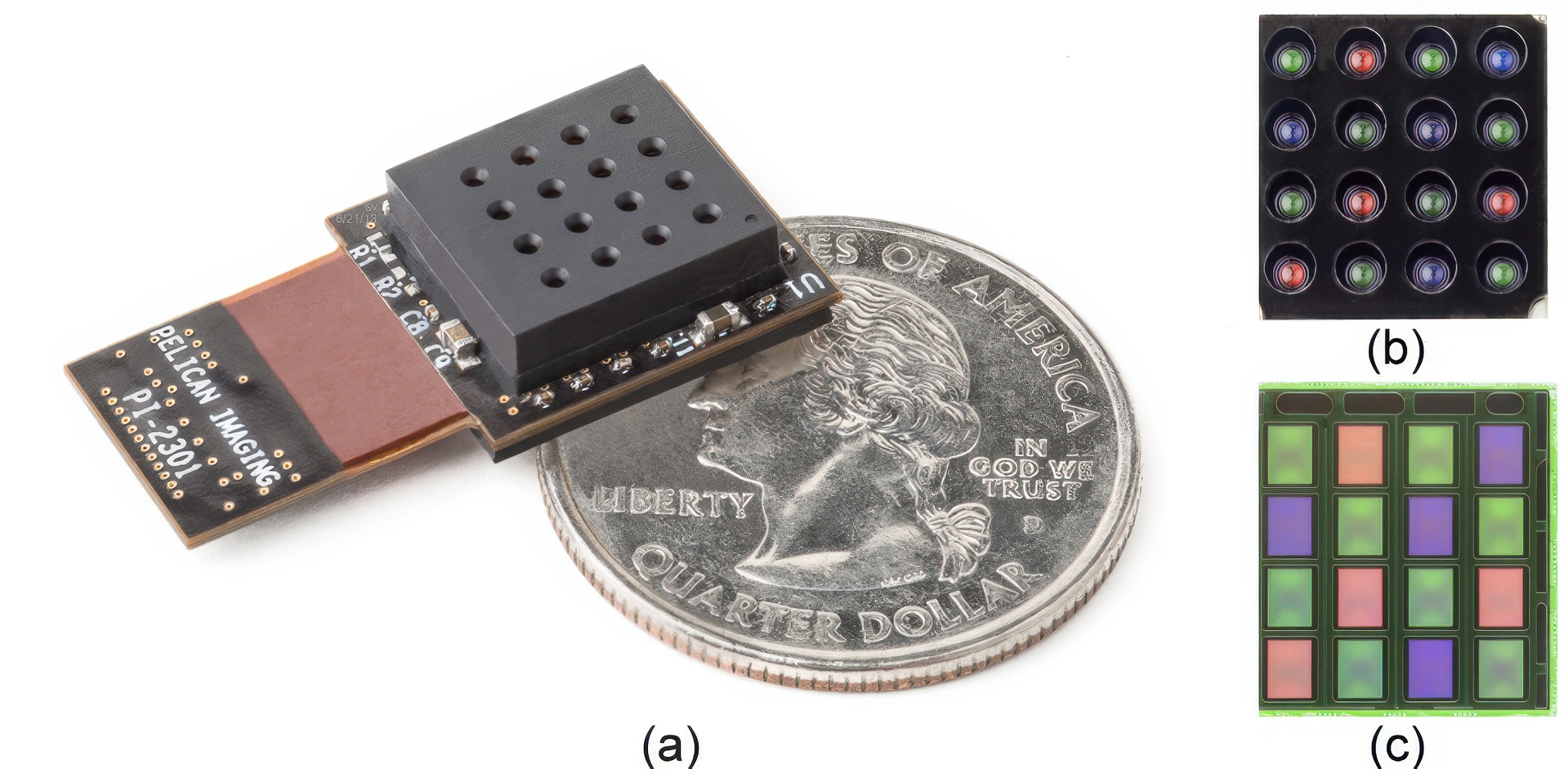

We present PiCam (Pelican Imaging Camera-Array), an ultra-thin, high-performance monolithic camera array that captures light fields and synthesizes high-resolution images along with a range image (scene depth) through integrated parallax detection and superresolution. The camera is passive, supports both stills and video, performs well in low light, and is small enough to be included in the next generation of mobile devices, including smartphones. Prior work on camera arrays [Rander et al. 1997; Yang et al. 2002; Zhang and Chen 2004; Tanida et al. 2001; Tanida et al. 2003; Duparré et al. 2004] has explored multiple facets of light field capture, including viewpoint synthesis, synthetic refocus, range image computation, high-speed video, and micro-optical aspects of system miniaturization. However, none of these works has addressed the modifications needed to achieve the strict form factor and image quality required to make array cameras practical for mobile devices. In our approach, we customize many aspects of the camera array, including lenses, pixels, sensors, and software algorithms, to achieve imaging performance and a form factor comparable to those of existing mobile phone cameras.

Our contributions to the post-processing of images from camera arrays include a cost function for parallax detection that integrates across multiple color channels, and a regularized image restoration (superresolution) process that takes into account all the system degradations and adapts to a range of practical imaging conditions. The registration uncertainty from the parallax detection process is integrated into a Maximum-a-Posteriori (MAP) formulation that synthesizes an estimate of the high-resolution image and scene depth. We conclude with examples of our array's capabilities, such as post-capture (still) refocus, video refocus, view synthesis to demonstrate motion parallax, and 3D range images, and briefly address future work.
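The abstract does not spell out the form of the multi-channel parallax cost. As a rough illustration only, the Python sketch below shows one generic way such a cost can be built: a plane-sweep, multiple-baseline matching cost in the spirit of [Okutomi and Kanade 1993], in which each view is compared only with views of the same color channel and the per-channel costs are summed. It assumes rectified 1-D scanlines, integer baselines and disparities (no subpixel interpolation), and that each camera records a single color channel. The names `parallax_cost`, `estimate_disparity`, and `render`, and the camera tuple layout, are hypothetical; this is not PiCam's actual cost function.

```python
import math
from typing import Dict, Iterable, List, Sequence, Tuple

# Hypothetical camera record: (color channel, integer baseline measured from a
# shared reference position, rectified 1-D scanline of pixel intensities).
Camera = Tuple[str, int, Sequence[float]]


def parallax_cost(cameras: Sequence[Camera], x: int, d: int, window: int = 1) -> float:
    """Photo-consistency cost of integer disparity d at reference pixel x.

    Views are grouped by color channel. Within a channel, every view is sampled
    at x + dx + d * baseline for each tap dx in the window, and the variance of
    those samples is accumulated. Per-channel costs are summed, so views behind
    different color filters are never compared with each other directly.
    """
    by_channel: Dict[str, List[Tuple[int, Sequence[float]]]] = {}
    for channel, baseline, line in cameras:
        by_channel.setdefault(channel, []).append((baseline, line))

    total, terms = 0.0, 0
    for views in by_channel.values():
        if len(views) < 2:
            continue  # a single view carries no parallax information
        for dx in range(-window, window + 1):
            positions = [x + dx + d * b for b, _ in views]
            if not all(0 <= p < len(line) for p, (_, line) in zip(positions, views)):
                continue  # skip taps that fall outside any view
            samples = [line[p] for p, (_, line) in zip(positions, views)]
            mean = sum(samples) / len(samples)
            total += sum((s - mean) ** 2 for s in samples) / len(samples)
            terms += 1
    # Average over valid terms so that disparities pushing samples off the
    # border are not favored merely for contributing fewer terms.
    return total / terms if terms else math.inf


def estimate_disparity(cameras: Sequence[Camera], x: int,
                       candidates: Iterable[int], window: int = 1) -> int:
    """Winner-take-all: return the candidate disparity with the lowest cost."""
    return min(candidates, key=lambda d: parallax_cost(cameras, x, d, window))


def render(scene: Sequence[float], baseline: int, d: int) -> List[float]:
    """Synthetic test view: the scene point at reference position p lands at
    p + d * baseline in this camera; uncovered pixels are filled with 0.0."""
    n = len(scene)
    return [float(scene[i - d * baseline]) if 0 <= i - d * baseline < n else 0.0
            for i in range(n)]


if __name__ == "__main__":
    # Two synthetic color channels with different intensity patterns.
    green = [float((7 * i) % 11) for i in range(20)]
    red = [float((3 * i) % 13) for i in range(20)]
    true_d = 2
    cams = [("G", 0, render(green, 0, true_d)), ("G", 2, render(green, 2, true_d)),
            ("R", 1, render(red, 1, true_d)), ("R", 3, render(red, 3, true_d))]
    print(estimate_disparity(cams, x=8, candidates=range(4)))  # prints 2
```

Measuring the spread within each channel, rather than comparing a red view against a green one, avoids assuming that different color channels record equal intensities for the same scene point. A practical system would also need subpixel disparities, a 2-D search, occlusion handling, and the registration-uncertainty estimate that the abstract says is carried into the MAP superresolution step; none of that is modeled here.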

References:

1. Adelson, E. H., and Wang, J. Y. A. 1992. Single lens stereo with a plenoptic camera. IEEE Transactions on Pattern Analysis and Machine Intelligence 14, 2 (Feb.), 99–106.

2. Baker, S., and Kanade, T. 2002. Limits on super-resolution and how to break them. IEEE Transactions on Pattern Analysis and Machine Intelligence 24, 9 (Sept.), 1167–1183.

3. Bishop, T. E., and Favaro, P. 2012. The light field camera: Extended depth of field, aliasing, and superresolution. IEEE Transactions on Pattern Analysis and Machine Intelligence 34, 5 (May), 972–986.

4. Bishop, T., Zanetti, S., and Favaro, P. 2009. Light field superresolution. In Proceedings of International Conference on Computational Photography (ICCP), IEEE.

5. Bose, N. K., and Ahuja, N. A. 2006. Superresolution and noise filtering using moving least squares. IEEE Transactions on Image Processing 15, 8 (Aug.), 2239–2248.

6. Brückner, A., Duparré, J., Leitel, R., Dannberg, P., Bräuer, A., and Tünnermann, A. 2010. Thin wafer-level camera lenses inspired by insect compound eyes. Optics Express 18, 24 (Nov.), 24379–24394.

7. Cho, K.-B., Tu, N., Brummer, J., Azad, K., Hsu, L., Wang, V., Kim, D., Palle, K., Miao, T.-M., Chen, Y., Hong, C., Bao, T., Sharma, V., Fong, Y., Irkar, K., Hashmi, S., Sukumar, V., Kabir, S., Rosenblum, G., Gao, Y., Ahn, K.-H., Ko, H.-J., Watson, J., and Kenoyer, C. 2013. A 12MP 16-focal plane CMOS image sensor with 1.75 μm pixel: Architecture and implementation. In Proceedings of International Image Sensors Workshop.

8. Duparré, J., Dannberg, P., Schreiber, P., Bräuer, A., and Tünnermann, A. 2004. Artificial apposition compound eye fabricated by micro-optics technology. Applied Optics 43.

9. El-Yamany, N. A., and Papamichalis, P. E. 2008. Robust color image superresolution: An adaptive M-estimation framework. EURASIP Journal on Advances in Signal Processing 2008 (Feb.), Article ID 763254.

10. Farsiu, S., Robinson, D., Elad, M., and Milanfar, P. 2004. Advances and challenges in super-resolution. International Journal of Imaging Systems and Technology 14, 2 (Aug.), 47–57.

11. Farsiu, S., Elad, M., and Milanfar, P. 2006. Multiframe demosaicing and super-resolution of color images. IEEE Transactions on Image Processing 15, 1 (Jan.), 141–159.

12. Fischer, R. E., Tadic-Galeb, B., and Yoder, P. R. 2008. Optical System Design, 2nd ed. McGraw-Hill.

13. Georgiev, T., Chunev, G., and Lumsdaine, A. 2011. Superresolution with the focused plenoptic camera. Computational Imaging IX, 243–254.

14. Hardie, R. C., Barnard, K. J., and Armstrong, E. E. 1997. Joint MAP registration and high-resolution image estimation using a sequence of undersampled images. IEEE Transactions on Image Processing 6, 12 (Dec.), 1621–1633.

15. Holst, G. C. 1998. CCD Arrays, Cameras and Displays, 2nd ed. JCD Publishing and SPIE, Winter Park, Florida, March.

16. Horisaki, R., Nakao, Y., Toyoda, T., Kagawa, K., Masaki, Y., and Tanida, J. 2008. A compound-eye imaging system with irregular lens-array arrangement. Proceedings of the SPIE 7072 (Sept.).

17. Irani, M., and Peleg, S. 1991. Improving resolution by image registration. CVGIP: Graphical Models and Image Processing 53, 3 (Sept.), 231–239.

18. Kingslake, R. 1951. Lens design fundamentals. Academic Press.

19. Levoy, M., and Hanrahan, P. 1996. Light field rendering. In Proceedings of SIGGRAPH 96, Annual Conference Series, 31–42.

20. Lippmann, G. 1908. Épreuves réversibles. Photographies intégrales. Comptes Rendus de l’Académie des Sciences 9, 146 (Mar.), 446–451.

21. Lohmann, A. W. 1989. Scaling laws for lens systems. Applied Optics 28, 23 (Dec.), 4996–4998.

22. Mitra, K., and Veeraraghavan, A. 2012. Light field denoising, light field superresolution and stereo camera based refocusing using a GMM light field patch prior. In Proceedings of Computer Vision and Pattern Recognition Workshops (CVPRW), IEEE, 22–28.

23. Ng, R., Levoy, M., Brédif, M., Duval, G., Horowitz, M., and Hanrahan, P. 2005. Light field photography with a hand-held plenoptic camera. Stanford University Computer Science Tech Report CSTR-2005-02.

24. Nomura, Y., Zhang, L., and Nayar, S. 2007. Scene Collages and Flexible Camera Arrays. In Proceedings of Eurographics Symposium on Rendering.

25. Okutomi, M., and Kanade, T. 1993. A multiple-baseline stereo. IEEE Transactions on Pattern Analysis and Machine Intelligence 15, 4 (Apr.), 353–363.

26. Pickup, L. C., Capel, D. P., Roberts, S. J., and Zisserman, A. 2007. Overcoming registration uncertainty in image super-resolution: Maximize or marginalize? EURASIP Journal on Advances in Signal Processing 2007, 2 (June), Article ID 2356.

27. Protter, M., and Elad, M. 2009. Super resolution with probabilistic motion estimation. IEEE Transactions on Image Processing 18, 8 (Aug.), 1899–1904.

28. Rander, P., Narayanan, P., and Kanade, T. 1997. Virtualized reality: Constructing time-varying virtual worlds from real events. In Proceedings of IEEE Visualization, 277–283.

29. Rudin, L. I., Osher, S., and Fatemi, E. 1992. Nonlinear total variation based noise removal algorithms. Physica D 60 (Nov.), 259–268.

30. Sen, P., and Darabi, S. 2009. Compressive image superresolution. In Proceedings of 43rd Asilomar Conference on Signals, Systems, and Computers, IEEE, 1235–1242.

31. Szeliski, R. 2010. Computer Vision: Algorithms and Applications, 2011 ed. Springer-Verlag London Limited.

32. Tanida, J., Kumagai, T., Yamada, K., Miyatake, S., Ishida, K., Morimoto, T., Kondou, N., Miyazaki, D., and Ichioka, Y. 2001. Thin observation module by bound optics: Concept and experimental verification. Applied Optics 40, 11 (Apr.), 1806–1813.

33. Tanida, J., Shogenji, R., Kitamura, Y., Yamada, K., Miyamoto, M., and Miyatake, S. 2003. Color imaging with an integrated compound imaging system. Optics Express 11, 18 (Sept.), 2109–2117.

34. Taylor, D. 1996. Virtual camera movement: The way of the future? American Cinematographer 77, 9 (Sept.), 93–100.

35. Vandewalle, P., Sbaiz, L., Vandewalle, J., and Vetterli, M. 2007. Super-resolution from unregistered and totally aliased signals using subspace methods. IEEE Transactions on Signal Processing 55, 7 (July), 3687–3703.

36. Wanner, S., and Goldluecke, B. 2012. Spatial and angular variational super-resolution of 4D light fields. In Proceedings of European Conference on Computer Vision, Springer, vol. 7576 of Lecture Notes in Computer Science, 608–621.

37. Wilburn, B., Joshi, N., Vaish, V., Talvala, E.-V., Antunez, E., Barth, A., Adams, A., Horowitz, M., and Levoy, M. 2005. High performance imaging using large camera arrays. ACM Trans. Graph. 24, 3 (July), 765–776.

38. Yang, J. C., Everett, M., Buehler, C., and McMillan, L. 2002. A real time distributed light field camera. Eurographics Workshop on Rendering, 77–86.

39. Yang, J., Wright, J., Ma, Y., and Huang, T. 2008. Image super-resolution as sparse representation of raw image patches. In Proceedings of Computer Vision and Pattern Recognition (CVPR), IEEE, 1–8.

40. Zhang, C., and Chen, T. 2004. A self-reconfigurable camera array. Eurographics Symposium on Rendering, 243–254.

41. Zomet, A., Rav-Acha, A., and Peleg, S. 2001. Robust super-resolution. In Proceedings of Computer Vision and Pattern Recognition (CVPR), vol. 1, IEEE, 645–650.