“Real-time spatial relationship based 3D scene composition of unknown objects” by Seversky and Yin

Conference:

Type(s):

Title:

- Real-time spatial relationship based 3D scene composition of unknown objects

Presenter(s)/Author(s):

Abstract:

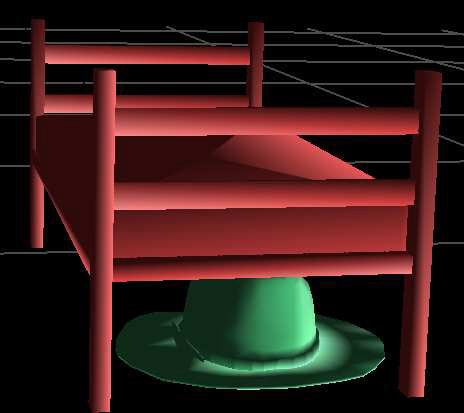

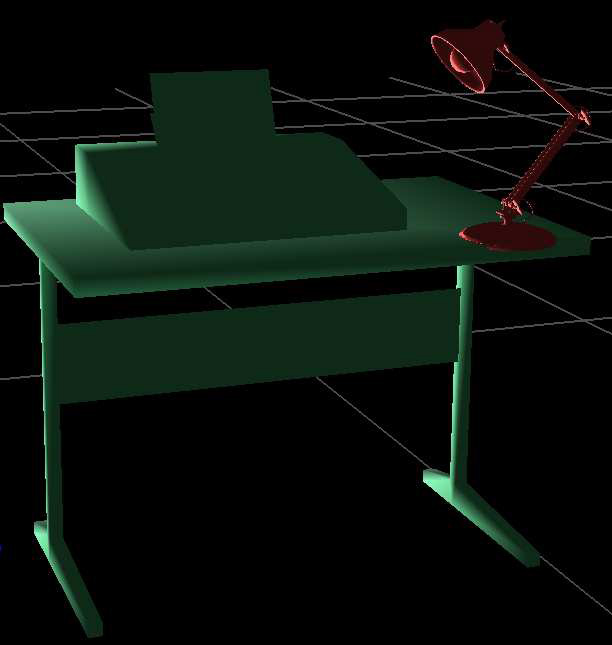

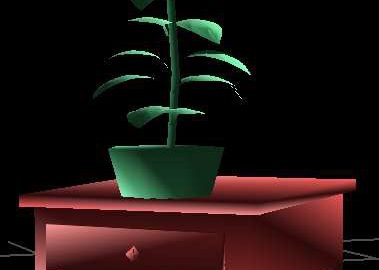

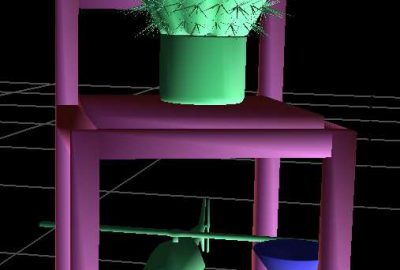

Manual scene composition in 3D is a difficult task, and existing approaches attempt to construct scenes automatically [Coyne2001][Xu2002]. These methods depend heavily on explicit per-object knowledge that is used to determine placement. We present a method for automatically generating 3D scenes composed of unknown objects in real time. Our method does not require any a priori knowledge of the objects, and the objects are therefore considered unknown to our system. All necessary information is computed from each object’s geometric representation, and the computation is designed to support polygon models of varying quality. Spatial relationships and the relative positioning of objects offer a natural and effective way to compose scenes. Our method composes scenes by computing object placements that satisfy a desired spatial relationship such as on, under, next to, above, below, in front of, behind, and to the left or right of. To illustrate our placement algorithm and its suitability for interactive use, we developed a real-time scene composition framework that accepts natural language input as text or voice.
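The abstract does not describe how placements are computed, so the following is only a minimal sketch of the general idea of deriving a placement purely from geometry. It assumes axis-aligned bounding boxes, a y-up coordinate system with x to the right and z toward the viewer, and contact placement for "on" and "under". The names `AABB`, `RELATIONS`, and `placement_offset` are hypothetical and do not come from the poster.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class AABB:
    """Axis-aligned bounding box computed from raw vertex positions."""
    lo: Vec3
    hi: Vec3

    @classmethod
    def from_vertices(cls, vertices: Iterable[Vec3]) -> "AABB":
        xs, ys, zs = zip(*vertices)
        return cls((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))

    def center(self, axis: int) -> float:
        return 0.5 * (self.lo[axis] + self.hi[axis])


# Hypothetical mapping: relation -> (axis, direction, leave a gap?).
# Assumed convention: axis 0 = x (right), 1 = y (up), 2 = z (toward viewer).
RELATIONS = {
    "on": (1, +1, False),
    "under": (1, -1, False),
    "above": (1, +1, True),
    "below": (1, -1, True),
    "right of": (0, +1, True),
    "left of": (0, -1, True),
    "in front of": (2, +1, True),
    "behind": (2, -1, True),
}


def placement_offset(obj: AABB, ref: AABB, relation: str, gap: float = 0.25) -> Vec3:
    """Translation that moves `obj` so it satisfies `relation` with respect to `ref`."""
    axis, direction, spaced = RELATIONS[relation]
    # Start by centering the object on the reference along every axis.
    offset = [ref.center(a) - obj.center(a) for a in range(3)]
    if axis != 1:
        # For horizontal relations, rest both objects on the same base height.
        offset[1] = ref.lo[1] - obj.lo[1]
    clearance = gap if spaced else 0.0
    if direction > 0:
        # Put the object's low face just past the reference's high face.
        offset[axis] = ref.hi[axis] + clearance - obj.lo[axis]
    else:
        # Put the object's high face just before the reference's low face.
        offset[axis] = ref.lo[axis] - clearance - obj.hi[axis]
    return (offset[0], offset[1], offset[2])


# Usage: place a small box ("cup") relative to a larger box ("table").
table = AABB.from_vertices([(0.0, 0.0, 0.0), (2.0, 1.0, 2.0)])
cup = AABB.from_vertices([(0.0, 0.0, 0.0), (0.5, 0.5, 0.5)])
on_table = placement_offset(cup, table, "on")
left_of_table = placement_offset(cup, table, "left of")
```

Bounding boxes are used here only because they can be computed from any polygon model regardless of quality. A real system would likely need a finer analysis of the supporting surface, for example to place an object on a shelf inside a bookcase rather than on top of it.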

References:

1. Coyne, B., and Sproat, R. 2001. WordsEye: An automatic text-to-scene conversion system. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, 487–496.

2. Xu, K., Stewart, J., and Fiume, E. 2002. Constraint-based automatic placement for scene composition. In Graphics Interface, 25–34.

Additional Images:

- 2006 Poster: Seversky_Real-time Spatial Relationship Based 3D Scene Composition of Unknown Objects
