“Learning basketball dribbling skills using trajectory optimization and deep reinforcement learning” by Liu and Hodgins

Title:

- Learning basketball dribbling skills using trajectory optimization and deep reinforcement learning

Session/Category Title: Animation Control

Presenter(s)/Author(s):

- Libin Liu
- Jessica Hodgins

Entry Number: 142

Abstract:

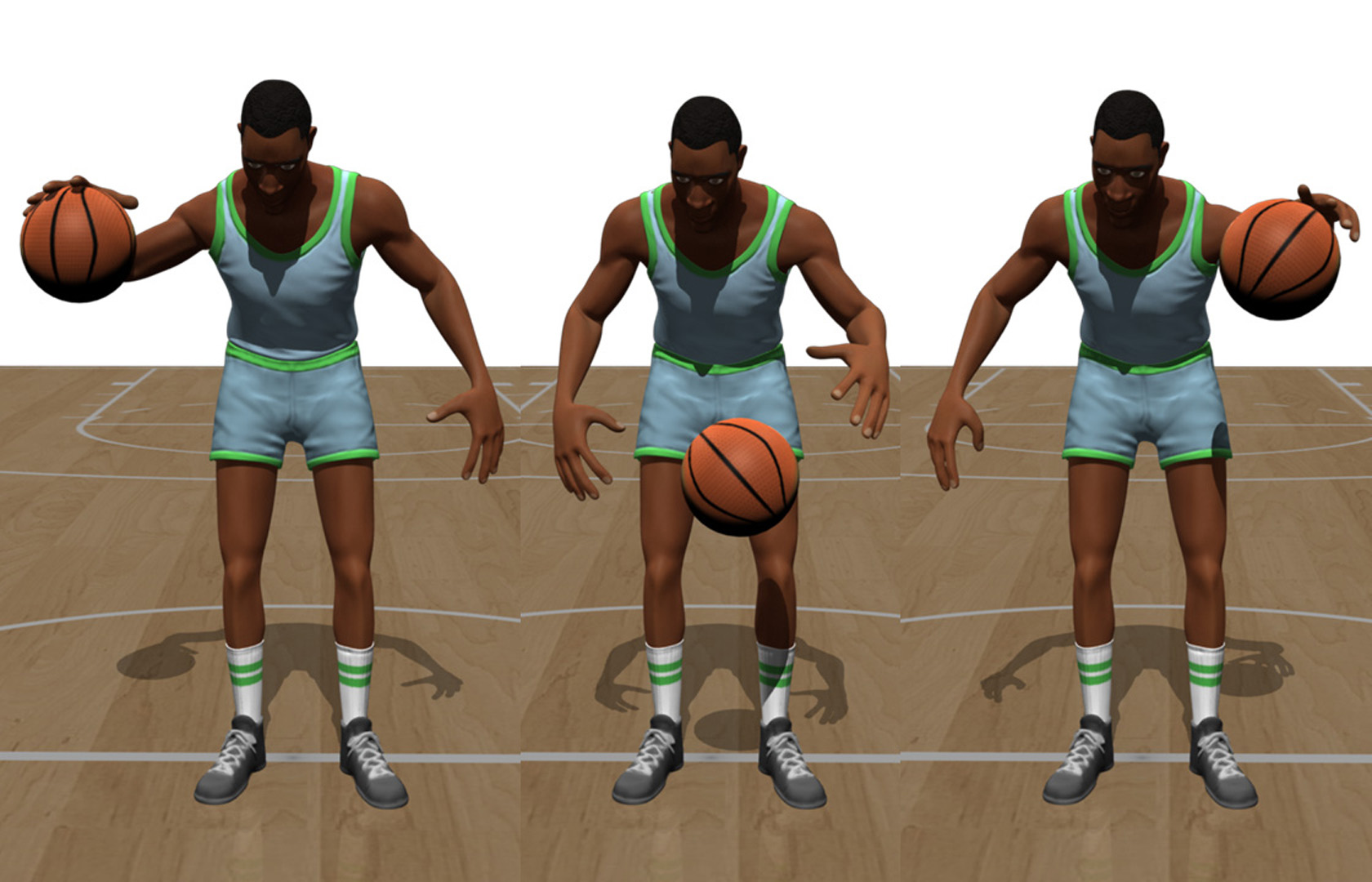

Basketball is one of the world’s most popular sports because of the agility and speed demonstrated by the players. This agility and speed make designing controllers for robust control of basketball skills a challenge for physics-based character animation. The highly dynamic behaviors and precise manipulation of the ball that occur in the game are difficult to reproduce for simulated players. In this paper, we present an approach for learning robust basketball dribbling controllers from motion capture data. Our system decouples a basketball controller into locomotion control and arm control components and learns each component separately. To achieve robust control of the ball, we develop an efficient pipeline based on trajectory optimization and deep reinforcement learning to learn non-linear arm control policies. We also present a technique for learning skills and the transitions between skills simultaneously. Our system is capable of learning robust controllers for various basketball dribbling skills, such as dribbling between the legs and crossover moves. The resulting control graphs enable a simulated player to perform transitions between these skills and respond to user interaction.
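The abstract says the learned controllers are organized into control graphs that let the simulated player move between skills in response to user input. As a rough illustration only, the sketch below assumes such a graph can be modeled as a directed graph whose nodes are dribbling skills and whose edges are available transitions, and uses breadth-first search to choose a chain of transitions toward a requested skill. The skill names, the edges, `CONTROL_GRAPH`, and the `plan_transitions` helper are all hypothetical. This is not the authors' method for scheduling skills, which the abstract does not describe.

```python
from collections import deque

# Hypothetical control graph: each node stands for a learned dribbling skill,
# each directed edge for a learned transition from one skill to another.
# Skill names and edges are illustrative assumptions, not taken from the paper.
CONTROL_GRAPH: dict[str, list[str]] = {
    "dribble_in_place": ["crossover", "between_legs", "dribble_forward"],
    "dribble_forward": ["dribble_in_place"],
    "crossover": ["dribble_in_place", "between_legs"],
    "between_legs": ["dribble_in_place"],
}


def plan_transitions(graph: dict[str, list[str]], current: str, target: str) -> list[str]:
    """Return the shortest skill sequence from current to target using BFS.

    Returns an empty list if target cannot be reached from current.
    """
    if current == target:
        return [current]
    parents: dict[str, str] = {}
    visited = {current}
    queue = deque([current])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt in visited:
                continue
            visited.add(nxt)
            parents[nxt] = node
            if nxt == target:
                # Walk parent links back to the start, then reverse.
                path = [target]
                while path[-1] != current:
                    path.append(parents[path[-1]])
                return path[::-1]
            queue.append(nxt)
    return []


if __name__ == "__main__":
    # A (hypothetical) user requests a between-the-legs move while dribbling forward.
    print(plan_transitions(CONTROL_GRAPH, "dribble_forward", "between_legs"))
```

Breadth-first search is used here only because it returns the fewest transitions in an unweighted graph; a real system would also have to decide when each transition can safely start, for example based on the ball's state.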
