“Video SnapCut: robust video object cutout using localized classifiers” by Bai, Wang, Simons and Sapiro

Conference:

Type(s):

Title:

- Video SnapCut: robust video object cutout using localized classifiers

Presenter(s)/Author(s):

Abstract:

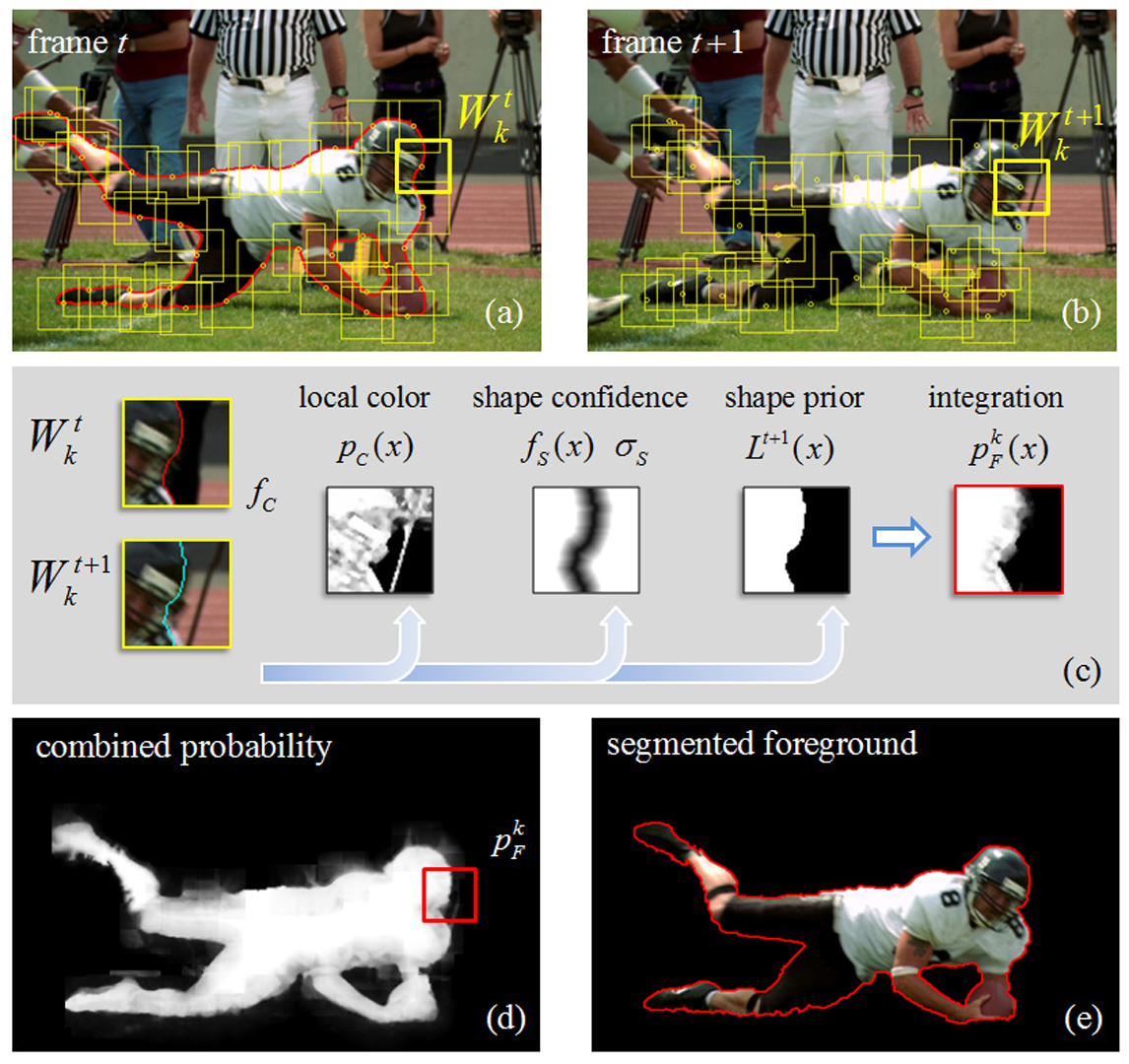

Although tremendous success has been achieved for interactive object cutout in still images, accurately extracting dynamic objects in video remains a very challenging problem. Previous video cutout systems present two major limitations: (1) reliance on global statistics, thus lacking the ability to deal with complex and diverse scenes; and (2) treating segmentation as a global optimization, thus lacking a practical workflow that can guarantee the convergence of the systems to the desired results. We present Video SnapCut, a robust video object cutout system that significantly advances the state-of-the-art. In our system segmentation is achieved by the collaboration of a set of local classifiers, each adaptively integrating multiple local image features. We show how this segmentation paradigm naturally supports local user editing and propagates them across time. The object cutout system is completed with a novel coherent video matting technique. A comprehensive evaluation and comparison is presented, demonstrating the effectiveness of the proposed system at achieving high quality results, as well as the robustness of the system against various types of inputs.
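The idea of segmentation through "the collaboration of a set of local classifiers" can be illustrated with a small sketch. This is not the authors' implementation: the function names (`fit_local_classifier`, `local_probability`, `segment`), the single grayscale intensity feature, the two-means model, and the inverse-squared-distance blending weights are all assumptions chosen to keep the example short. The abstract only states that each local classifier integrates multiple local image features.

```python
# Illustrative sketch only, NOT the method from the paper.
# Assumption: each local window learns a tiny intensity model (foreground and
# background means) from a labeled keyframe; overlapping windows then vote on
# the labels of a new frame.

def fit_local_classifier(frame, mask, cx, cy, half):
    """Fit one window: mean foreground/background intensity around (cx, cy)."""
    fg, bg = [], []
    for y in range(max(0, cy - half), min(len(frame), cy + half + 1)):
        for x in range(max(0, cx - half), min(len(frame[0]), cx + half + 1)):
            (fg if mask[y][x] else bg).append(frame[y][x])
    if not fg or not bg:
        return None  # window does not straddle the object boundary
    return {"cx": cx, "cy": cy, "half": half,
            "fg_mean": sum(fg) / len(fg), "bg_mean": sum(bg) / len(bg)}


def local_probability(clf, value):
    """Foreground probability from the relative distance to the two means."""
    d_fg = abs(value - clf["fg_mean"])
    d_bg = abs(value - clf["bg_mean"])
    if d_fg + d_bg == 0:
        return 0.5
    return d_bg / (d_fg + d_bg)


def segment(frame, classifiers, default_mask):
    """Blend overlapping window votes, weighting each by closeness to its center."""
    out = [row[:] for row in default_mask]  # uncovered pixels keep the old label
    for y in range(len(frame)):
        for x in range(len(frame[0])):
            num = den = 0.0
            for c in classifiers:
                if abs(x - c["cx"]) <= c["half"] and abs(y - c["cy"]) <= c["half"]:
                    weight = 1.0 / (1.0 + (x - c["cx"]) ** 2 + (y - c["cy"]) ** 2)
                    num += weight * local_probability(c, frame[y][x])
                    den += weight
            if den > 0:
                out[y][x] = 1 if num / den >= 0.5 else 0
    return out


# Toy keyframe: bright object (200) on the left, dark background (50) on the right.
key_frame = [[200, 200, 200, 50, 50, 50] for _ in range(4)]
key_mask = [[1, 1, 1, 0, 0, 0] for _ in range(4)]
# In the next frame the object has grown by one column.
next_frame = [[200, 200, 200, 200, 50, 50] for _ in range(4)]

# One window per row, centered on the keyframe boundary (column 3).
classifiers = [c for c in (fit_local_classifier(key_frame, key_mask, 3, cy, 2)
                           for cy in range(4)) if c is not None]
result = segment(next_frame, classifiers, key_mask)
```

In this toy setup the windows only cover pixels near the boundary, so pixels far from it simply keep their keyframe label, which hints at why local models lend themselves to local corrections.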

References:

1. Adobe Systems. 2008. Adobe Photoshop CS4 User Guide.

2. Agarwala, A., Hertzmann, A., Salesin, D. H., and Seitz, S. M. 2004. Keyframe-based tracking for rotoscoping and animation. In Proc. of ACM SIGGRAPH, 584–591.

3. Armstrong, C. J., Price, B. L., and Barrett, W. A. 2007. Interactive segmentation of image volumes with live surface. Computers and Graphics 31, 2, 212–229.

4. Bai, X., and Sapiro, G. 2007. A geodesic framework for fast interactive image and video segmentation and matting. In Proc. of IEEE ICCV.

5. Blake, A., and Isard, M. 1998. Active Contours. Springer-Verlag.

6. Boykov, Y., Veksler, O., and Zabih, R. 2001. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Analysis and Machine Intelligence 23, 11, 1222–1239.

7. Chong, H., Gortler, S. J., and Zickler, T. 2008. A perception-based color space for illumination-invariant image processing. In Proc. of ACM SIGGRAPH.

8. Chuang, Y.-Y., Agarwala, A., Curless, B., Salesin, D., and Szeliski, R. 2002. Video matting. In Proc. of ACM SIGGRAPH, 243–248.

9. Kohli, P., Kumar, M. P., and Torr, P. H. S. 2007. P3 & beyond: solving energies with higher order cliques. In Proc. of IEEE CVPR.

10. Komogortsev, O., and Khan, J. 2004. Predictive perceptual compression for real time video communication. In Proc. of the 12th Annual ACM Int. Conf. on Multimedia, 220–227.

11. Levin, A., Lischinski, D., and Weiss, Y. 2008. A closed-form solution to natural image matting. IEEE Trans. Pattern Analysis and Machine Intelligence 30, 2, 228–242.

12. Li, Y., Sun, J., Tang, C.-K., and Shum, H.-Y. 2004. Lazy snapping. In Proc. of ACM SIGGRAPH, 303–308.

13. Li, Y., Sun, J., and Shum, H. 2005. Video object cut and paste. In Proc. of ACM SIGGRAPH, 595–600.

14. Li, Y., Adelson, E., and Agarwala, A. 2008. Scribbleboost: Adding classification to edge-aware interpolation of local image and video adjustments. In Proc. of EGSR, 1255–1264.

15. Lowe, D. G. 2004. Distinctive image features from scale-invariant keypoints. Int. Journal of Computer Vision 60, 2, 91–110.

16. Mortensen, E., and Barrett, W. 1995. Intelligent scissors for image composition. In Proc. of ACM SIGGRAPH, 191–198.

17. Protiere, A., and Sapiro, G. 2007. Interactive image segmentation via adaptive weighted distances. IEEE Trans. Image Processing 16, 1046–1057.

18. Rother, C., Kolmogorov, V., and Blake, A. 2004. Grabcut – interactive foreground extraction using iterated graph cut. In Proc. of ACM SIGGRAPH, 309–314.

19. Stewart, S. 2003. Confessions of a roto artist: Three rules for better mattes. http://www.pinnaclesys.com/SupportFiles/Rotoscoping.pdf

20. Wandell, B. 1995. Foundations of Vision. Sinauer Associates.

21. Wang, J., and Cohen, M. 2007. Image and video matting: A survey. Foundations and Trends in Computer Graphics and Vision 3, 2, 97–175.

22. Wang, J., and Cohen, M. 2007. Optimized color sampling for robust matting. In Proc. of IEEE CVPR.

23. Wang, J., Xu, Y., Shum, H., and Cohen, M. 2004. Video tooning. In Proc. of ACM SIGGRAPH.

24. Wang, J., Bhat, P., Colburn, A., Agrawala, M., and Cohen, M. 2005. Interactive video cutout. In Proc. of ACM SIGGRAPH.

25. Wang, J., Agrawala, M., and Cohen, M. 2007. Soft scissors: an interactive tool for realtime high quality matting. In Proc. of ACM SIGGRAPH.

26. Waschbüsch, M., Würmlin, S., and Gross, M. 2006. Interactive 3D video editing. The Visual Computer 22, 9–11, 631–641.

27. Yu, T., Zhang, C., Cohen, M., Rui, Y., and Wu, Y. 2007. Monocular video foreground/background segmentation by tracking spatial-color Gaussian mixture models. In Proc. of WMVC.