“Towards Unstructured Unlabeled Optical Mocap: A Video Helps!”

Abstract:

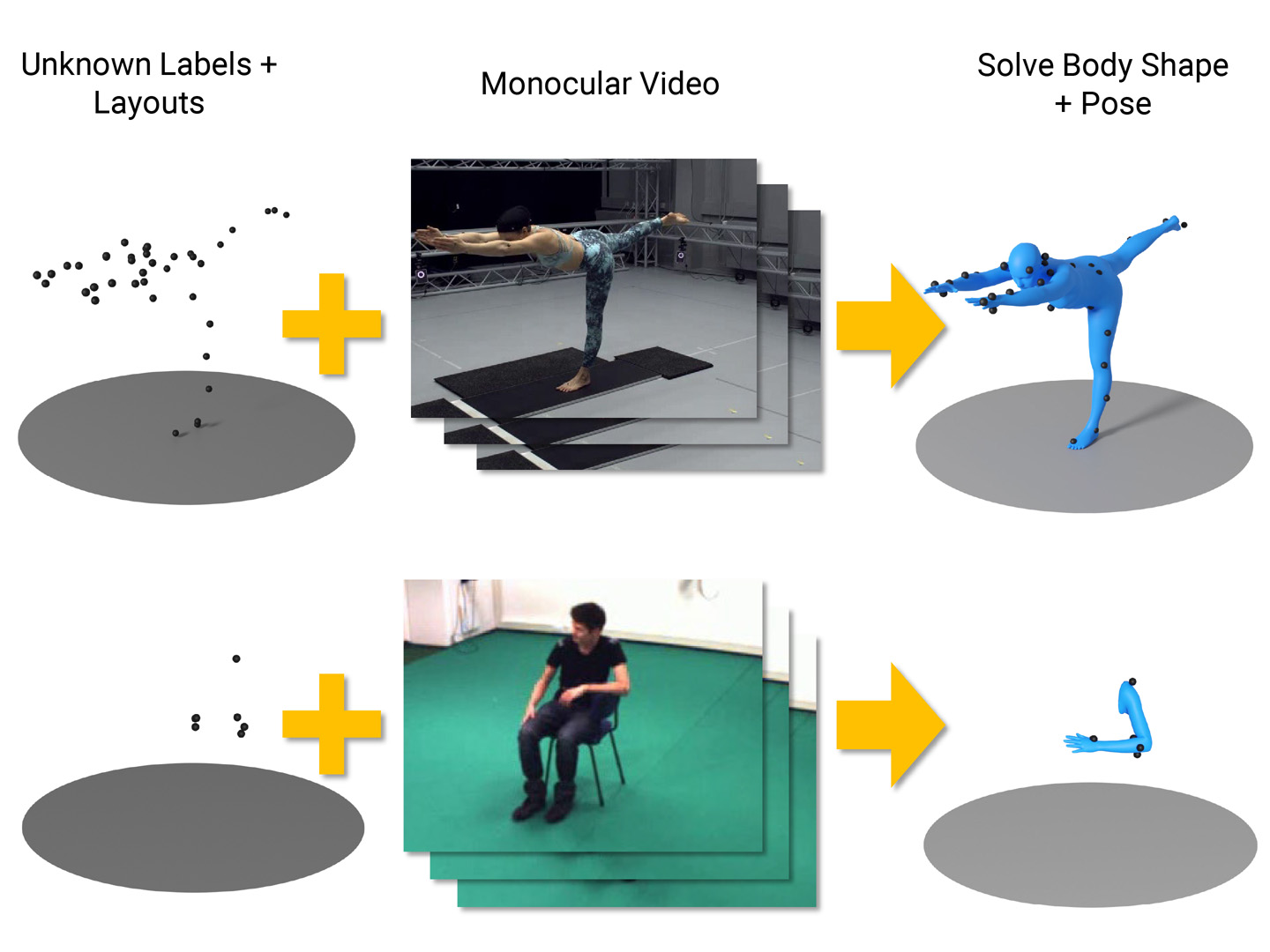

We introduce the problem of Unstructured Unlabeled Optical (UUO) mocap, in which unlabeled optical mocap markers can be placed anywhere on the body. Using monocular video, we present a multi-stage optimization framework that leverages multiple hypothesis testing to automatically solve for human pose and shape in both full-body and partial-body reconstruction.
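The abstract does not define what a single hypothesis is. Purely as an illustration of multiple hypothesis testing in this setting, the Python sketch below makes three assumptions. First, the video has already supplied a set of body-surface points, such as vertices of a body model fitted to the frames. Second, the only unknown is the subject's heading, so each hypothesis is one candidate rotation of the unlabeled marker cloud about the vertical axis. Third, each marker is labeled with its nearest body point. Every hypothesis is scored by its mean marker-to-surface residual, and the lowest score wins. All names (`best_yaw_hypothesis`, `n_hypotheses`, and so on) are hypothetical. This is not the authors' framework, which is multi-stage and also solves for pose and shape.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def centroid(points: Sequence[Point]) -> Point:
    """Mean position of a point set."""
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def rotate_about_y(p: Point, angle: float) -> Point:
    """Rotate a point about the vertical (y) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x + s * z, y, -s * x + c * z)


def nearest_vertex(p: Point, vertices: Sequence[Point]) -> Tuple[int, float]:
    """Index of, and distance to, the body vertex closest to point p."""
    best_i, best_d = -1, math.inf
    for i, v in enumerate(vertices):
        d = math.dist(p, v)
        if d < best_d:
            best_i, best_d = i, d
    return best_i, best_d


def best_yaw_hypothesis(
    markers: Sequence[Point], vertices: Sequence[Point], n_hypotheses: int = 8
) -> Tuple[float, List[int], float]:
    """Hypothetical helper: try evenly spaced yaw angles, keep the best one.

    Each hypothesis centres the unlabeled markers, rotates them about the
    vertical axis, moves them onto the body centroid, labels every marker
    with its nearest body vertex, and is scored by the mean residual.
    Returns (angle, labels, mean_residual) of the lowest-scoring hypothesis.
    """
    mc, vc = centroid(markers), centroid(vertices)
    best: Optional[Tuple[float, List[int], float]] = None
    for k in range(n_hypotheses):
        angle = 2.0 * math.pi * k / n_hypotheses
        labels: List[int] = []
        total = 0.0
        for m in markers:
            r = rotate_about_y((m[0] - mc[0], m[1] - mc[1], m[2] - mc[2]), angle)
            moved = (r[0] + vc[0], r[1] + vc[1], r[2] + vc[2])
            idx, dist = nearest_vertex(moved, vertices)
            labels.append(idx)
            total += dist
        score = total / len(markers)
        if best is None or score < best[2]:
            best = (angle, labels, score)
    assert best is not None  # n_hypotheses must be at least 1
    return best


# Toy example: a 5-point stand-in for a body surface, and a simulated
# "capture" of it that is rotated by -90 degrees about y and shifted.
body = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
        (0.0, 0.0, 2.0), (0.5, 1.5, 0.3)]
bc = centroid(body)
markers = []
for v in body:
    r = rotate_about_y((v[0] - bc[0], v[1] - bc[1], v[2] - bc[2]), -math.pi / 2)
    markers.append((r[0] + 2.0, r[1] + 0.1, r[2] - 1.0))

angle, labels, residual = best_yaw_hypothesis(markers, body)
print(round(math.degrees(angle)), labels)  # 90 [0, 1, 2, 3, 4]
```

Centroid alignment assumes the markers cover the body about as evenly as the reference points do, which will not hold for partial-body marker sets. A fuller sketch might refine the translation per hypothesis and use a one-to-one assignment (for example, the Hungarian algorithm) instead of nearest-neighbor labels.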
