“ThemeStation: Generating Theme-aware 3D Assets From Few Exemplars”

Conference:

Type(s):

Title:

- ThemeStation: Generating Theme-aware 3D Assets From Few Exemplars

Presenter(s)/Author(s):

Abstract:

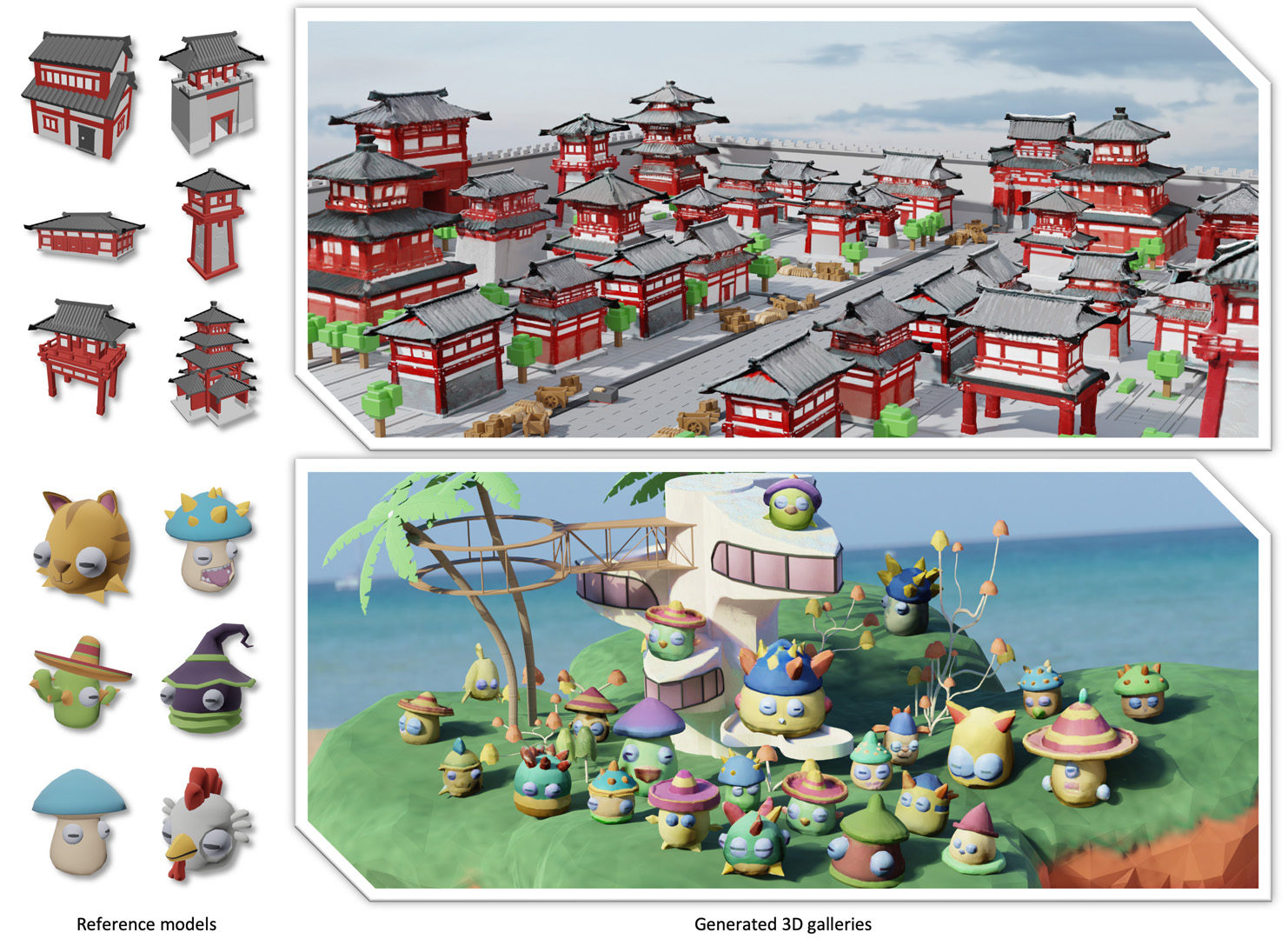

ThemeStation is an advanced tool for crafting theme-consistent 3D models. From a few exemplars to a universe of 3D assets, our two-stage framework and dual distillation process ensure a good blend of unity and diversity. Unleash your creativity with ThemeStation and step into the realm of effortless 3D content generation.

References:

[1]

Omri Avrahami, Kfir Aberman, Ohad Fried, Daniel Cohen-Or, and Dani Lischinski. 2023. Break-A-Scene: Extracting Multiple Concepts from a Single Image. arXiv preprint arXiv:2305.16311 (2023).

[2]

Bob. 2022. 3D Modeling 101: Comprehensive Beginners Guide. Retrieved Jan 03, 2024 from https://wow-how.com/articles/3d-modeling-101-comprehensive-beginners-guide

[3]

Andrew Brock, Jeff Donahue, and Karen Simonyan. 2018. Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096 (2018).

[4]

Tim Brooks, Aleksander Holynski, and Alexei A Efros. 2023. Instructpix2pix: Learning to follow image editing instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 18392?18402.

[5]

CGHero. 2022. The Stages of Creating a 3D Model. Retrieved Jan 02, 2024 from https://cghero.com/articles/stages-of-creating-3d-model

[6]

Eric R Chan, Connor Z Lin, Matthew A Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas J Guibas, Jonathan Tremblay, Sameh Khamis, 2022. Efficient geometry-aware 3D generative adversarial networks. In CVPR.

[7]

Siddhartha Chaudhuri, Evangelos Kalogerakis, Leonidas Guibas, and Vladlen Koltun. 2011. Probabilistic reasoning for assembly-based 3D modeling. In ACM SIGGRAPH 2011 papers. 1?10.

[8]

Hansheng Chen, Jiatao Gu, Anpei Chen, Wei Tian, Zhuowen Tu, Lingjie Liu, and Hao Su. 2023b. Single-Stage Diffusion NeRF: A Unified Approach to 3D Generation and Reconstruction. arXiv preprint arXiv:2304.06714 (2023).

[9]

Rui Chen, Yongwei Chen, Ningxin Jiao, and Kui Jia. 2023a. Fantasia3D: Disentangling geometry and appearance for high-quality text-to-3D content creation. arXiv preprint arXiv:2303.13873 (2023).

[10]

Yongwei Chen, Tengfei Wang, Tong Wu, Xingang Pan, Kui Jia, and Ziwei Liu. 2024. ComboVerse: Compositional 3D Assets Creation Using Spatially-Aware Diffusion Guidance.

[11]

Victor Dibia. 2022. Latent Diffusion Models: Components and Denoising Steps. Retrieved Jan 04, 2024 from https://victordibia.com/blog/stable-diffusion-denoising/

[12]

Ziya Erko?, Fangchang Ma, Qi Shan, Matthias Nie?ner, and Angela Dai. 2023. HyperDiffusion: Generating Implicit Neural Fields with Weight-Space Diffusion. arxiv:2303.17015 [cs.CV]

[13]

Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H. Bermano, Gal Chechik, and Daniel Cohen-Or. 2022a. An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion. https://doi.org/10.48550/ARXIV.2208.01618

[14]

Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H Bermano, Gal Chechik, and Daniel Cohen-Or. 2022b. An image is worth one word: Personalizing text-to-image generation using textual inversion. arXiv preprint arXiv:2208.01618 (2022).

[15]

Leon A Gatys, Alexander S Ecker, and Matthias Bethge. 2016. Image style transfer using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 2414?2423.

[16]

Anchit Gupta, Wenhan Xiong, Yixin Nie, Ian Jones, and Barlas O?uz. 2023. 3DGen: Triplane latent diffusion for textured mesh generation. arXiv preprint arXiv:2303.05371 (2023).

[17]

Zexin He and Tengfei Wang. 2023. OpenLRM: Open-Source Large Reconstruction Models. https://github.com/3DTopia/OpenLRM.

[18]

Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. 2022. Prompt-to-prompt image editing with cross attention control. (2022).

[19]

Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in neural information processing systems 33 (2020), 6840?6851.

[20]

Fangzhou Hong, Jiaxiang Tang, Ziang Cao, Min Shi, Tong Wu, Zhaoxi Chen, Tengfei Wang, Liang Pan, Dahua Lin, and Ziwei Liu. 2024. 3DTopia: Large Text-to-3D Generation Model with Hybrid Diffusion Priors. arXiv preprint arXiv:2403.02234 (2024).

[21]

Yicong Hong, Kai Zhang, Jiuxiang Gu, Sai Bi, Yang Zhou, Difan Liu, Feng Liu, Kalyan Sunkavalli, Trung Bui, and Hao Tan. 2023. Lrm: Large reconstruction model for single image to 3D. arXiv preprint arXiv:2311.04400 (2023).

[22]

Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685 (2021).

[23]

Heewoo Jun and Alex Nichol. 2023. Shap-e: Generating conditional 3D implicit functions. arXiv preprint arXiv:2305.02463 (2023).

[24]

Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 4401?4410.

[25]

Vladimir G Kim, Wilmot Li, Niloy J Mitra, Siddhartha Chaudhuri, Stephen DiVerdi, and Thomas Funkhouser. 2013. Learning part-based templates from large collections of 3D shapes. ACM Transactions on Graphics (TOG) 32, 4 (2013), 1?12.

[26]

Weiyu Li, Xuelin Chen, Jue Wang, and Baoquan Chen. 2023. Patch-based 3D Natural Scene Generation from a Single Example. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 16762?16772.

[27]

Chen-Hsuan Lin, Jun Gao, Luming Tang, Towaki Takikawa, Xiaohui Zeng, Xun Huang, Karsten Kreis, Sanja Fidler, Ming-Yu Liu, and Tsung-Yi Lin. 2023. Magic3D: High-Resolution Text-to-3D Content Creation. In Conference on Computer Vision and Pattern Recognition (CVPR).

[28]

Minghua Liu, Ruoxi Shi, Linghao Chen, Zhuoyang Zhang, Chao Xu, Xinyue Wei, Hansheng Chen, Chong Zeng, Jiayuan Gu, and Hao Su. 2023b. One-2-3-45++: Fast Single Image to 3D Objects with Consistent Multi-View Generation and 3D Diffusion. arXiv preprint arXiv:2311.07885 (2023).

[29]

Ruoshi Liu, Rundi Wu, Basile Van Hoorick, Pavel Tokmakov, Sergey Zakharov, and Carl Vondrick. 2023c. Zero-1-to-3: Zero-shot one image to 3D object. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 9298?9309.

[30]

Yuan Liu, Cheng Lin, Zijiao Zeng, Xiaoxiao Long, Lingjie Liu, Taku Komura, and Wenping Wang. 2023a. SyncDreamer: Generating Multiview-consistent Images from a Single-view Image. arXiv preprint arXiv:2309.03453 (2023).

[31]

Xiaoxiao Long, Yuan-Chen Guo, Cheng Lin, Yuan Liu, Zhiyang Dou, Lingjie Liu, Yuexin Ma, Song-Hai Zhang, Marc Habermann, Christian Theobalt, 2023. Wonder3D: Single image to 3D using cross-domain diffusion. arXiv preprint arXiv:2310.15008 (2023).

[32]

Roey Mechrez, Itamar Talmi, and Lihi Zelnik-Manor. 2018. The contextual loss for image transformation with non-aligned data. In Proceedings of the European conference on computer vision (ECCV). 768?783.

[33]

Luke Melas-Kyriazi, Christian Rupprecht, Iro Laina, and Andrea Vedaldi. 2023. RealFusion: 360 Reconstruction of Any Object from a Single Image. In Conference on Computer Vision and Pattern Recognition (CVPR).

[34]

Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. 2023. Latent-NeRF for shape-guided generation of 3D shapes and textures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12663?12673.

[35]

Charlie Nash, Yaroslav Ganin, SM Ali Eslami, and Peter Battaglia. 2020. Polygen: An autoregressive generative model of 3D meshes. In International conference on machine learning. PMLR, 7220?7229.

[36]

Alex Nichol, Heewoo Jun, Prafulla Dhariwal, Pamela Mishkin, and Mark Chen. 2023. Point-E: A System for Generating 3D Point Clouds from Complex Prompts. https://arxiv.org/abs/2212.08751 (2023).

[37]

Michael Niemeyer and Andreas Geiger. 2021. Giraffe: Representing scenes as compositional generative neural feature fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 11453?11464.

[38]

Dario Pavllo, Jonas Kohler, Thomas Hofmann, and Aurelien Lucchi. 2021. Learning generative models of textured 3D meshes from real-world images. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 13879?13889.

[39]

Ben Poole, Ajay Jain, Jonathan T. Barron, and Ben Mildenhall. 2023. DreamFusion: Text-to-3D using 2D Diffusion. In International Conference on Learning Representations (ICLR).

[40]

Guocheng Qian, Jinjie Mai, Abdullah Hamdi, Jian Ren, Aliaksandr Siarohin, Bing Li, Hsin-Ying Lee, Ivan Skorokhodov, Peter Wonka, Sergey Tulyakov, and Bernard Ghanem. 2023. Magic123: One Image to High-Quality 3D Object Generation Using Both 2D and 3D Diffusion Priors. https://arxiv.org/abs/2306.17843 (2023).

[41]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning. PMLR, 8748?8763.

[42]

Amit Raj, Srinivas Kaza, Ben Poole, Michael Niemeyer, Nataniel Ruiz, Ben Mildenhall, Shiran Zada, Kfir Aberman, Michael Rubinstein, Jonathan Barron, 2023. Dreambooth3D: Subject-driven text-to-3D generation. arXiv preprint arXiv:2303.13508 (2023).

[43]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Bj?rn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 10684?10695.

[44]

Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. Dreambooth: Fine tuning text-to-image diffusion models for subject-driven generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 22500?22510.

[45]

Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, 2022. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems 35 (2022), 36479?36494.

[46]

Nadav Schor, Oren Katzir, Hao Zhang, and Daniel Cohen-Or. 2019. Componet: Learning to generate the unseen by part synthesis and composition. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 8759?8768.

[47]

Tamar Rott Shaham, Tali Dekel, and Tomer Michaeli. 2019. SinGAN: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF international conference on computer vision. 4570?4580.

[48]

Jingxiang Sun, Bo Zhang, Ruizhi Shao, Lizhen Wang, Wen Liu, Zhenda Xie, and Yebin Liu. 2023. DreamCraft3D: Hierarchical 3D Generation with Bootstrapped Diffusion Prior. https://arxiv.org/abs/2310.16818 (2023).

[49]

Jiaxiang Tang, Zhaoxi Chen, Xiaokang Chen, Tengfei Wang, Gang Zeng, and Ziwei Liu. 2024. LGM: Large Multi-View Gaussian Model for High-Resolution 3D Content Creation. arXiv preprint arXiv:2402.05054 (2024).

[50]

Jiaxiang Tang, Jiawei Ren, Hang Zhou, Ziwei Liu, and Gang Zeng. 2023a. DreamGaussian: Generative Gaussian Splatting for Efficient 3D Content Creation. arxiv:2309.16653 [cs.CV]

[51]

Junshu Tang, Tengfei Wang, Bo Zhang, Ting Zhang, Ran Yi, Lizhuang Ma, and Dong Chen. 2023b. Make-It-3D: High-Fidelity 3D Creation from A Single Image with Diffusion Prior. In International Conference on Computer Vision ICCV.

[52]

Tengfei Wang, Bo Zhang, Ting Zhang, Shuyang Gu, Jianmin Bao, Tadas Baltrusaitis, Jingjing Shen, Dong Chen, Fang Wen, Qifeng Chen, and Baining Guo. 2023b. RODIN: A Generative Model for Sculpting 3D Digital Avatars Using Diffusion. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2023).

[53]

Tengfei Wang, Ting Zhang, Bo Zhang, Hao Ouyang, Dong Chen, Qifeng Chen, and Fang Wen. 2022. Pretraining is All You Need for Image-to-Image Translation. In arXiv.

[54]

Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. 2023a. ProlificDreamer: High-Fidelity and Diverse Text-to-3D Generation with Variational Score Distillation. https://arxiv.org/abs/2305.16213 (2023).

[55]

Rundi Wu, Ruoshi Liu, Carl Vondrick, and Changxi Zheng. 2023. Sin3DM: Learning a Diffusion Model from a Single 3D Textured Shape. arXiv preprint arXiv:2305.15399 (2023).

[56]

Rundi Wu and Changxi Zheng. 2022. Learning to generate 3D shapes from a single example. arXiv preprint arXiv:2208.02946 (2022).

[57]

Kai Xu, Hao Zhang, Daniel Cohen-Or, and Baoquan Chen. 2012. Fit and diverse: Set evolution for inspiring 3D shape galleries. ACM Transactions on Graphics (TOG) 31, 4 (2012), 1?10.

[58]

Shi Yichun, Wang Peng, Ye Jianglong, Mai Long, Li Kejie, and Yang Xiao. 2023. MVDream: Multi-view Diffusion for 3D Generation. https://arxiv.org/abs/2308.16512 (2023).

[59]

Youyi Zheng, Daniel Cohen-Or, and Niloy J Mitra. 2013. Smart variations: Functional substructures for part compatibility. In Computer Graphics Forum, Vol. 32. Wiley Online Library, 195?204.

[60]

Linqi Zhou, Yilun Du, and Jiajun Wu. 2021. 3D shape generation and completion through point-voxel diffusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 5826?5835.