“Texture Atlas Compression Based on Repeated Content Removal” by Luo, Jin, Pan, Wu, Kou, et al. …

Title:

- Texture Atlas Compression Based on Repeated Content Removal

Session/Category Title:

- Texture Magic

Abstract:

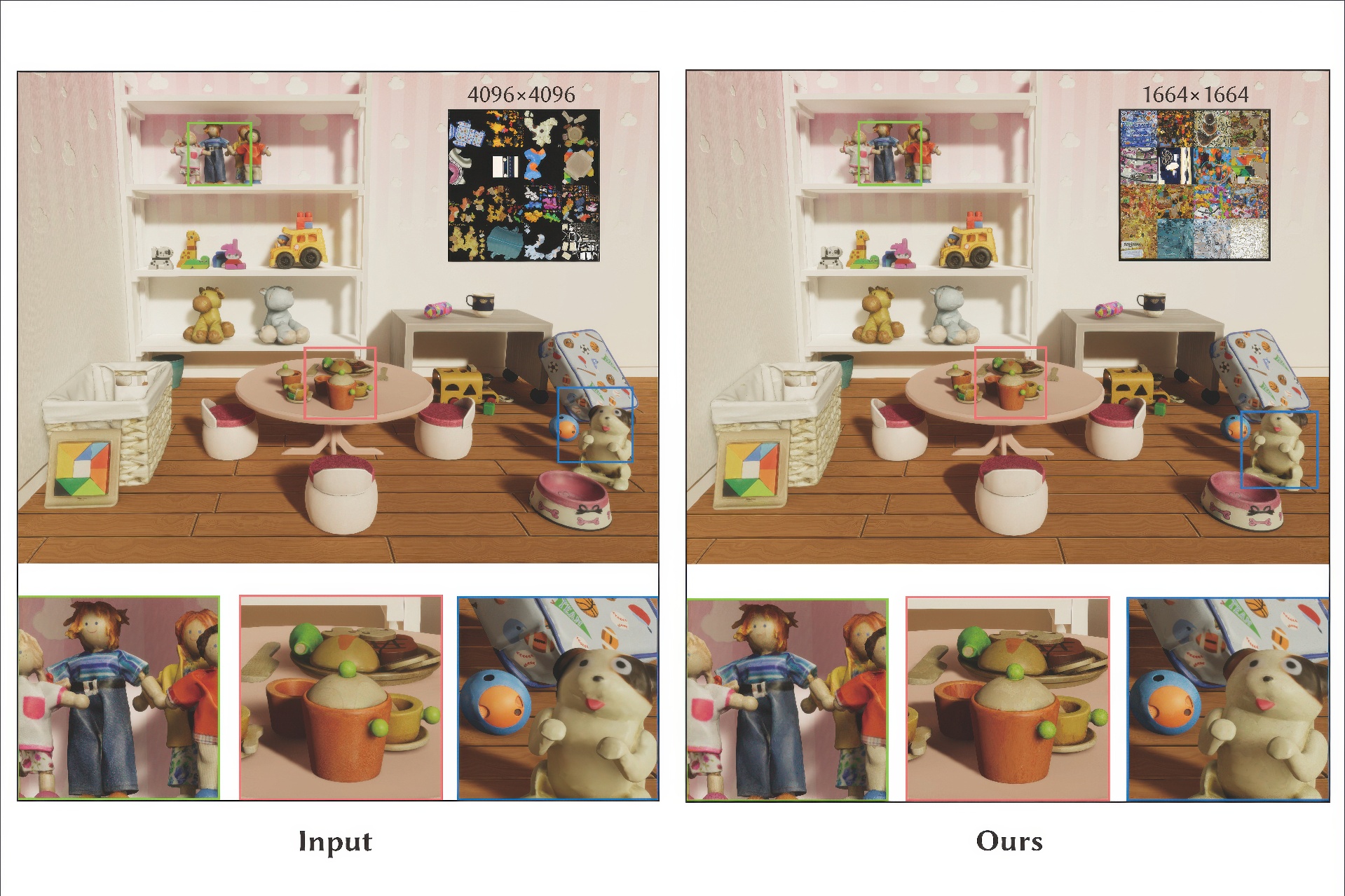

Optimizing the memory footprint of 3D models can have a major impact on the user experience during real-time rendering and streaming visualization, where the major memory overhead lies in the high-resolution texture data. In this work, we propose a robust and automatic pipeline for content-aware, lossy compression of texture atlases. The design of our solution rests on two observations: 1) mapping multiple surface patches to the same texture region is seamlessly compatible with the standard rendering pipeline, requiring no decompression before use; 2) a texture image has background regions and salient structural features, which can be handled separately to achieve a high compression rate. Accordingly, our method contains three phases. We first factor out redundant salient texture content by detecting such regions and mapping their corresponding 3D surface patches to a single UV patch via a UV-preserving re-meshing procedure. We then compress redundant background content by clustering triangles into groups by color. Finally, we create a new UV atlas with all repetitive texture content removed, and bake a new texture via differentiable rendering to remove potential inter-patch artifacts. To evaluate the efficacy of our approach, we batch-processed a dataset of 100 models collected online. On average, our method achieves a texture atlas compression ratio of 81.80% with average PSNR and MS-SSIM scores of 40.68 and 0.97, respectively, indicating only a marginal error in visual appearance.
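The second phase, clustering triangles into groups by color, can be illustrated with a minimal sketch. The abstract does not say which clustering algorithm the authors use, so the code below swaps in a simple greedy threshold clustering purely for illustration. The function name `cluster_triangles_by_color`, the `threshold` parameter, and the assumption that each triangle is summarized by one mean RGB color in [0, 1] are all hypothetical and not taken from the paper.

```python
from math import dist


def cluster_triangles_by_color(tri_colors, threshold=0.1):
    """Greedily group triangles with similar mean colors (illustrative only).

    tri_colors: list of (r, g, b) tuples in [0, 1], one mean color per triangle.
    threshold:  maximum Euclidean RGB distance to a cluster's running mean
                (hypothetical parameter, not from the paper).
    Returns a list of clusters, each a list of triangle indices.
    """
    centers = []  # running mean color of each cluster
    members = []  # triangle indices belonging to each cluster

    for idx, color in enumerate(tri_colors):
        # Look for the nearest cluster whose center is within the threshold.
        best, best_d = None, threshold
        for c, center in enumerate(centers):
            d = dist(color, center)
            if d <= best_d:
                best, best_d = c, d

        if best is None:
            # No cluster is close enough: start a new one.
            centers.append(tuple(color))
            members.append([idx])
        else:
            # Join the nearest cluster and update its running mean color.
            members[best].append(idx)
            n = len(members[best])
            centers[best] = tuple(
                m + (x - m) / n for m, x in zip(centers[best], color)
            )
    return members


# Two reddish and two bluish triangles fall into two groups.
colors = [(0.9, 0.1, 0.1), (0.1, 0.1, 0.9), (0.92, 0.1, 0.1), (0.1, 0.12, 0.88)]
groups = cluster_triangles_by_color(colors)
print(groups)  # [[0, 2], [1, 3]]
```

In a pipeline of this kind, all triangles in one group could then share a single small texture region, which is where the memory saving for background content would come from. A mode-seeking method such as mean shift would avoid the order dependence of this greedy variant.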
