“Synthesis of a video of performers appearing to play user-specified band music” by Yamamoto, Okabe and Onai

Conference:

Type(s):

Title:

- Synthesis of a video of performers appearing to play user-specified band music

Presenter(s)/Author(s):

Abstract:

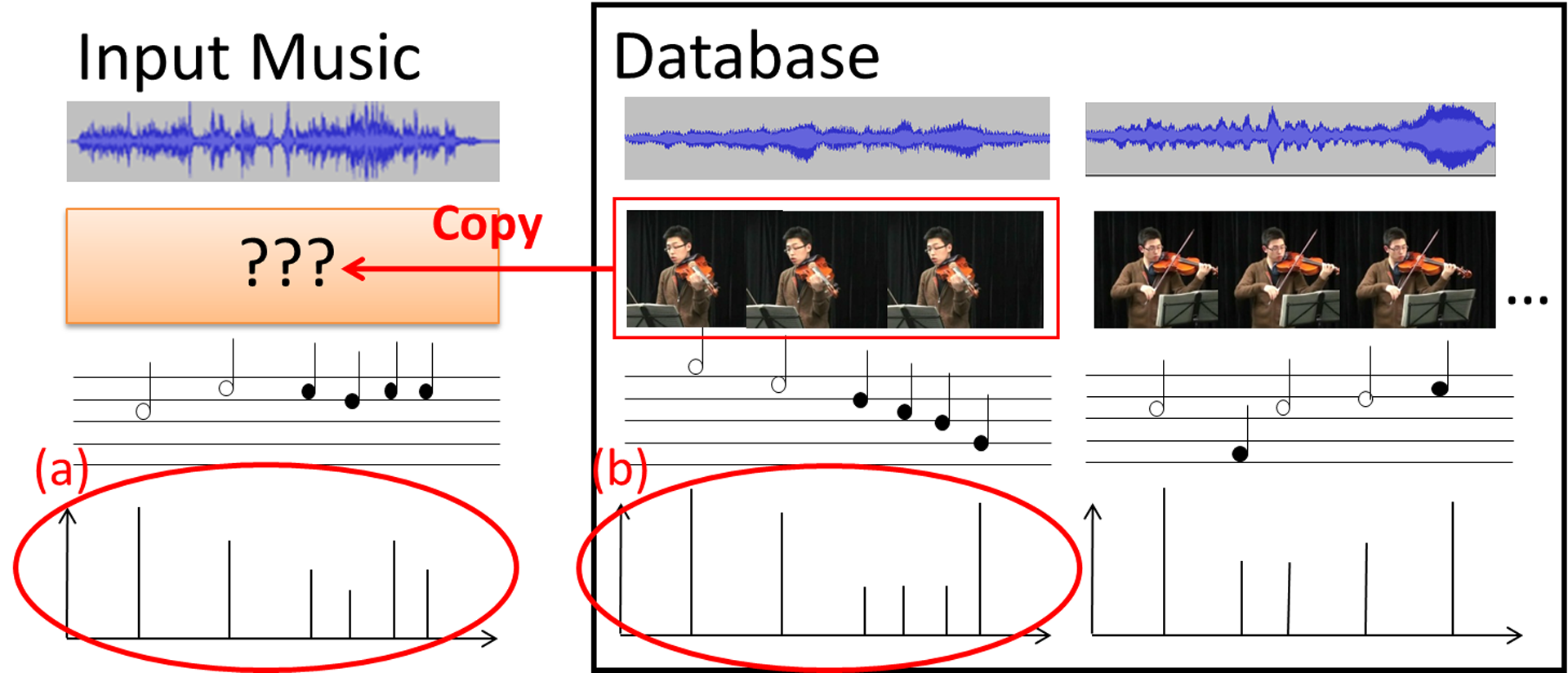

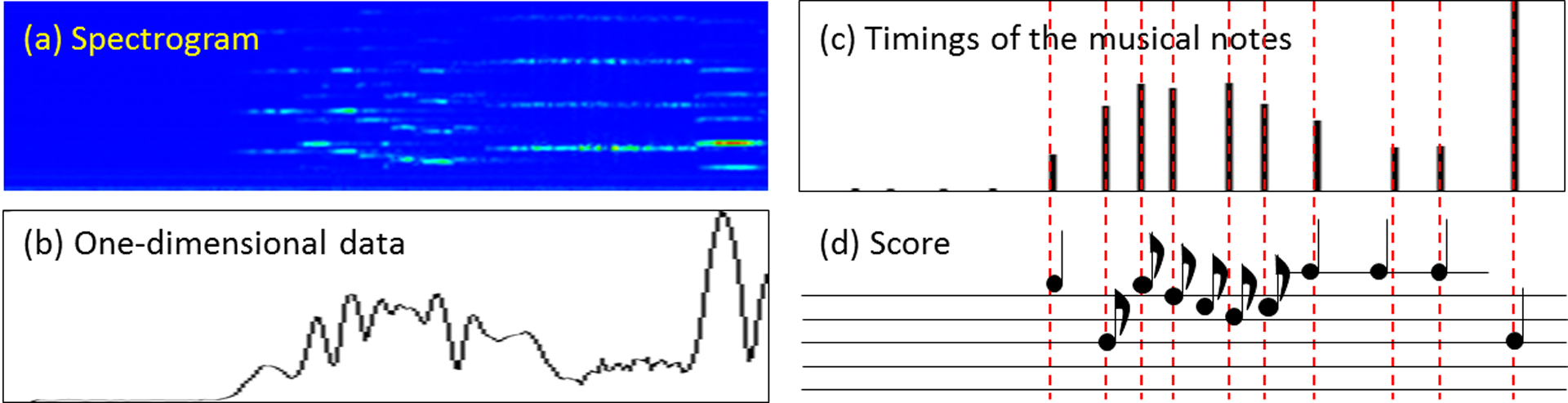

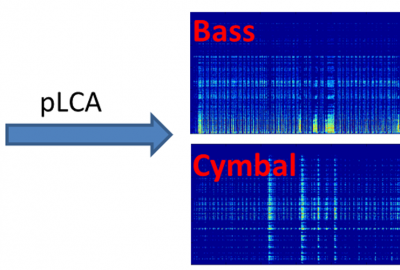

We propose a novel method for synthesizing a video of multiple performers who appear to play user-specified band music. A performance video often helps us enjoy or understand music. For example, watching a bass player perform makes it easier to focus on the bass sound, an experience different from listening to the music through speakers or headphones alone. Our approach is based on a database of performance videos: given the band music, our system chooses appropriate footage from the database, slightly modifies its speed and timing to fit the music, and then concatenates the selected clips into the resulting video. Several existing methods synchronize videos to user-specified music, e.g., to synthesize a dance video [Nakano et al. 2011]. These methods synchronize a video to the music by analyzing the mood of the music based on its tempo or chord changes. In contrast, because we want to synthesize a performance video and therefore require more precise synchronization, we analyze the music by extracting the timings of musical notes from the audio signal (Fig. 1). We perform a best-match search using the timings as a feature vector, and copy the footage that has a similar set of timings (Fig. 1-a and b). We demonstrate that our method makes it possible to create a performance video of band music that is fake but looks interesting.
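The best-match step described above can be illustrated with a small sketch. The abstract does not state which distance measure or data layout is used, so everything here is an assumption for illustration: note timings are represented as sorted lists of onset times in seconds, relative to the start of a segment, and two sets of timings are compared with a symmetric mean nearest-onset distance. The function names `nearest_gap`, `timing_distance`, and `best_match`, and the clip names, are hypothetical.

```python
from bisect import bisect_left


def nearest_gap(t, sorted_times):
    """Distance from time t to the closest entry of a non-empty sorted list."""
    i = bisect_left(sorted_times, t)
    # Only the entries just before and just after the insertion point can be nearest.
    neighbours = sorted_times[max(i - 1, 0):i + 1]
    return min(abs(t - n) for n in neighbours)


def timing_distance(a, b):
    """Symmetric mean nearest-onset distance between two sorted onset lists.

    Assumed measure, not taken from the abstract.
    """
    if not a or not b:
        return float("inf")
    forward = sum(nearest_gap(t, b) for t in a) / len(a)
    backward = sum(nearest_gap(t, a) for t in b) / len(b)
    return forward + backward


def best_match(query_onsets, database):
    """Return the name of the database clip whose onsets best fit the query."""
    return min(database, key=lambda name: timing_distance(query_onsets, database[name]))


# Hypothetical database: clip name -> note onset times (seconds) in that footage.
database = {
    "clip_a": [0.0, 0.5, 1.0, 1.5],
    "clip_b": [0.0, 0.33, 0.66, 1.0],
    "clip_c": [0.0, 1.0],
}

# Onsets extracted from one segment of the user-specified music (made-up values).
query = [0.02, 0.52, 0.98, 1.5]
print(best_match(query, database))
```

In this toy example the query onsets lie within 20 ms of the onsets of `clip_a`, so that clip is selected. A fuller system would also need the onset extraction itself and the slight speed and timing adjustment mentioned in the abstract, which this sketch leaves out.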

References:

1. Nakano, T., Murofushi, S., Goto, M., and Morishima, S. 2011. DanceReProducer: An automatic mashup music video generation system by reusing dance video clips on the web. In Proc. of SMC, 183–189.

2. Smaragdis, P., Raj, B., and Shashanka, M. 2007. Supervised and semi-supervised separation of sounds from single-channel mixtures. In Proc. of ICA, 414–421.