“StyleCariGAN: caricature generation via StyleGAN feature map modulation” by Jang, Ju, Jung, Yang, Tong, et al. …

Conference:

Type(s):

Title:

- StyleCariGAN: caricature generation via StyleGAN feature map modulation

Presenter(s)/Author(s):

Abstract:

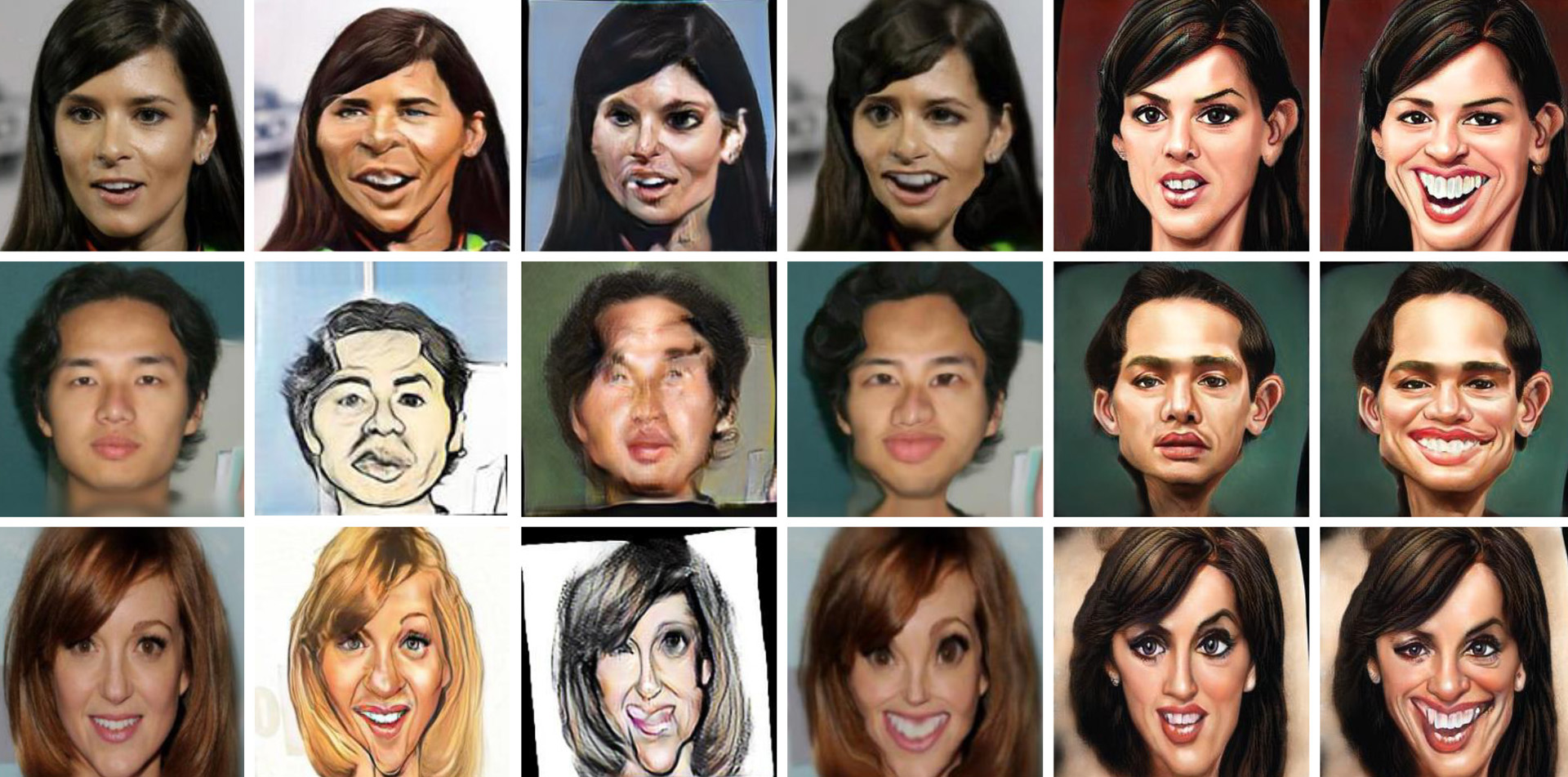

We present a caricature generation framework based on shape and style manipulation using StyleGAN. Our framework, dubbed StyleCariGAN, automatically creates a realistic and detailed caricature from an input photo with optional controls on shape exaggeration degree and color stylization type. The key component of our method is shape exaggeration blocks that are used for modulating coarse layer feature maps of StyleGAN to produce desirable caricature shape exaggerations. We first build a layer-mixed StyleGAN for photo-to-caricature style conversion by swapping fine layers of the StyleGAN for photos to the corresponding layers of the StyleGAN trained to generate caricatures. Given an input photo, the layer-mixed model produces detailed color stylization for a caricature but without shape exaggerations. We then append shape exaggeration blocks to the coarse layers of the layer-mixed model and train the blocks to create shape exaggerations while preserving the characteristic appearances of the input. Experimental results show that our StyleCariGAN generates realistic and detailed caricatures compared to the current state-of-the-art methods. We demonstrate StyleCariGAN also supports other StyleGAN-based image manipulations, such as facial expression control.

References:

1. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2019. Image2stylegan: How to embed images into the stylegan latent space?. In Proc. ICCV.Google ScholarCross Ref

2. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. Image2StyleGAN++: How to edit the embedded images?. In Proc. CVPR.Google ScholarCross Ref

3. Rameen Abdal, Peihao Zhu, Niloy Mitra, and Peter Wonka. 2021. Styleflow: Attribute-conditioned exploration of stylegan-generated images using conditional continuous normalizing flows. arXiv (2021).Google Scholar

4. Ergun Akleman. 1997. Making caricatures with morphing. In Proc. ACM SIGGRAPH.Google ScholarDigital Library

5. Ergun Akleman, James Palmer, and Ryan Logan. 2000. Making extreme caricatures with a new interactive 2D deformation technique with simplicial complexes. In Proc. Visual.Google Scholar

6. David Bau, Hendrik Strobelt, William Peebles, Jonas Wulff, Bolei Zhou, Jun-Yan Zhu, and Antonio Torralba. 2019. Semantic photo manipulation with a generative image prior. ACM Trans. Graph. 38, 4 (2019), 1–11.Google ScholarDigital Library

7. Susan E Brennan. 1985. Caricature generator: The dynamic exaggeration of faces by computer. Leonardo 18, 3 (1985), 170–178.Google ScholarCross Ref

8. Andrew Brock, Theodore Lim, James M Ritchie, and Nick Weston. 2016. Neural photo editing with introspective adversarial networks. arXiv (2016).Google Scholar

9. bryandlee. 2020. FreezeG. https://github.com/bryandlee/FreezeG.Google Scholar

10. Kaidi Cao, Jing Liao, and Lu Yuan. 2018. CariGANs: Unpaired photo-to-caricature translation. ACM Trans. Graph. 37, 6, Article 244 (2018).Google ScholarDigital Library

11. Hong Chen, Nan-Ning Zheng, Lin Liang, Yan Li, Ying-Qing Xu, and Heung-Yeung Shum. 2002. PicToon: a personalized image-based cartoon system. In Proc.MM.Google ScholarDigital Library

12. Yunjey Choi, Youngjung Uh, Jaejun Yoo, and Jung-Woo Ha. 2020. Stargan v2: Diverse image synthesis for multiple domains. In Proc. CVPR.Google ScholarCross Ref

13. Antonia Creswell and Anil Anthony Bharath. 2018. Inverting the generator of a generative adversarial network. IEEE Trans. Neural Networks and Learning Systems 30, 7 (2018), 1967–1974.Google ScholarCross Ref

14. Yu Deng, Jiaolong Yang, Dong Chen, Fang Wen, and Tong Xin. 2020. Disentangled and controllable face image generation via 3D imitative-contrastive learning. In Proc. CVPR.Google ScholarCross Ref

15. Yu Deng, Jiaolong Yang, Sicheng Xu, Dong Chen, Yunde Jia, and Xin Tong. 2019. Accurate 3d face reconstruction with weakly-supervised learning: From single image to image set. In Proc. CVPR Workshops.Google ScholarCross Ref

16. Julia Gong, Yannick Hold-Geoffroy, and Jingwan Lu. 2020. AutoToon: Automatic Geometric Warping for Face Cartoon Generation. In Proc. WACV.Google ScholarCross Ref

17. Bruce Gooch, Erik Reinhard, and Amy Gooch. 2004. Human facial illustrations: Creation and psychophysical evaluation. ACM Trans. Graph. 23, 1 (2004), 27–44.Google ScholarDigital Library

18. Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial networks. In Proc. NeurIPS.Google Scholar

19. Xiaoguang Han, Kangcheng Hou, Dong Du, Yuda Qiu, Shuguang Cui, Kun Zhou, and Yizhou Yu. 2018. Caricatureshop: Personalized and photorealistic caricature sketching. IEEE Trans. Visualization & Computer Graphics 26, 7 (2018), 2349–2361.Google ScholarCross Ref

20. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016a. Deep residual learning for image recognition. In Proc. CVPR.Google ScholarCross Ref

21. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016b. Identity mappings in deep residual networks. In Proc. ECCV.Google ScholarCross Ref

22. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Proc. NeurIPS.Google Scholar

23. Xun Huang, Ming-Yu Liu, Serge Belongie, and Jan Kautz. 2018. Multimodal unsupervised image-to-image translation. In Proc. ECCV.Google ScholarDigital Library

24. Jing Huo, Wenbin Li, Yinghuan Shi, Yang Gao, and Hujun Yin. 2017. Webcaricature: a benchmark for caricature recognition. arXiv (2017).Google Scholar

25. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In Proc. CVPR.Google ScholarCross Ref

26. Wen Ji, Kelei He, Jing Huo, Zheng Gu, and Yang Gao. 2020. Unsupervised domain attention adaptation network for caricature attribute recognition. In Proc. ECCV.Google ScholarDigital Library

27. Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. 2020a. Training generative adversarial networks with limited data. In Proc. NeurIPS.Google Scholar

28. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proc. CVPR.Google ScholarCross Ref

29. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020b. Analyzing and improving the image quality of stylegan. In Proc. ICCV.Google ScholarCross Ref

30. Junho Kim, Minjae Kim, Hyeonwoo Kang, and Kwanghee Lee. 2020. U-gat-it: unsupervised generative attentional networks with adaptive layer-instance normalization for image-to-image translation. In Proc. ICLR.Google Scholar

31. Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv (2014).Google Scholar

32. Nguyen Kim Hai Le, Yong Peng Why, and Golam Ashraf. 2011. Shape stylized face caricatures. In Proc. MMM.Google Scholar

33. Wenbin Li, Wei Xiong, Haofu Liao, Jing Huo, Yang Gao, and Jiebo Luo. 2020. Carigan: Caricature generation through weakly paired adversarial learning. Neural Networks 132 (2020), 66–74.Google ScholarCross Ref

34. Pei-Ying Chiang Wen-Hung Liao and Tsai-Yen Li. 2004. Automatic caricature generation by analyzing facial features. In Proc. ACCV.Google Scholar

35. Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2015. Deep Learning Face Attributes in the Wild. In Proc. ICCV.Google ScholarDigital Library

36. Lars Mescheder, Andreas Geiger, and Sebastian Nowozin. 2018. Which training methods for GANs do actually converge?. In Proc. ICML.Google Scholar

37. Sangwoo Mo, Minsu Cho, and Jinwoo Shin. 2020. Freeze Discriminator: A Simple Baseline for Fine-tuning GANs. arXiv (2020).Google Scholar

38. Zhenyao Mo, John P Lewis, and Ulrich Neumann. 2004. Improved automatic caricature by feature normalization and exaggeration. In Proc. ACM SIGGRAPH.Google ScholarDigital Library

39. Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alch?-Buc, E. Fox, and R. Garnett (Eds.). Curran Associates, Inc., 8024–8035.Google Scholar

40. Justin NM Pinkney and Doron Adler. 2020. Resolution Dependant GAN Interpolation for Controllable Image Synthesis Between Domains. arXiv (2020).Google Scholar

41. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2020. Encoding in style: a stylegan encoder for image-to-image translation. arXiv (2020).Google Scholar

42. rosinality. 2020. stylegan2-pytorch. https://github.com/rosinality/stylegan2-pytorch.Google Scholar

43. Florian Schroff, Dmitry Kalenichenko, and James Philbin. 2015. Facenet: A unified embedding for face recognition and clustering. In Proc. CVPR.Google ScholarCross Ref

44. Yujun Shen, Ceyuan Yang, Xiaoou Tang, and Bolei Zhou. 2020. InterFaceGAN: Interpreting the disentangled face representation learned by GANs. In Proc. PAMI.Google ScholarCross Ref

45. Yujun Shen and Bolei Zhou. 2020. Closed-form factorization of latent semantics in gans. arXiv (2020).Google Scholar

46. Yichun Shi, Debayan Deb, and Anil K Jain. 2019. Warpgan: Automatic caricature generation. In Proc. CVPR.Google ScholarCross Ref

47. Ayush Tewari, Mohamed Elgharib, Florian Bernard, Hans-Peter Seidel, Patrick P?rez, Michael Zollh?fer, and Christian Theobalt. 2020. Pie: Portrait image embedding for semantic control. ACM Trans. Graph. 39, 6 (2020), 1–14.Google ScholarDigital Library

48. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. High-resolution image synthesis and semantic manipulation with conditional gans. In Proc. CVPR.Google ScholarCross Ref

49. Raymond A Yeh, Chen Chen, Teck Yian Lim, Alexander G Schwing, Mark Hasegawa-Johnson, and Minh N Do. 2017. Semantic image inpainting with deep generative models. In Proc. CVPR.Google ScholarCross Ref

50. Jiapeng Zhu, Yujun Shen, Deli Zhao, and Bolei Zhou. 2020. In-domain gan inversion for real image editing. In Proc. ECCV.Google ScholarDigital Library

51. Jun-Yan Zhu, Philipp Kr?henb?hl, Eli Shechtman, and Alexei A Efros. 2016. Generative visual manipulation on the natural image manifold. In Proc. ECCV.Google ScholarCross Ref

52. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proc. ICCV.Google ScholarCross Ref