“SketchHairSalon: deep sketch-based hair image synthesis” by Xiao, Yu, Han, Zheng and Fu

Title:

- SketchHairSalon: deep sketch-based hair image synthesis

Session/Category Title: Synthesizing Human Images

Abstract:

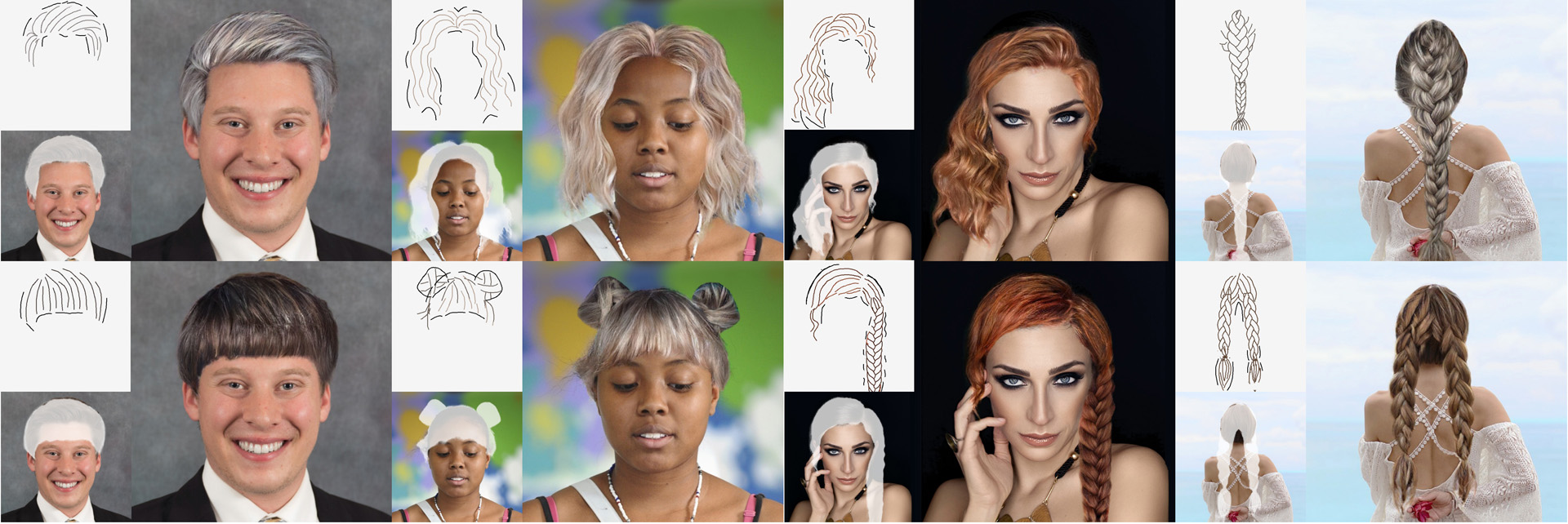

Recent deep generative models allow real-time generation of hair images from sketch inputs. Existing solutions often require a user-provided binary mask to specify the target hair shape, which not only costs users extra labor but also fails to capture complicated hair boundaries. These solutions usually encode hair structures via orientation maps, which are not very effective at encoding complex structures. We observe that colored hair sketches already implicitly define the target hair shape as well as hair appearance, and that they depict hair structures more flexibly than orientation maps do. Based on these observations, we present SketchHairSalon, a two-stage framework for generating realistic hair images directly from freehand sketches depicting the desired hair structure and appearance. In the first stage, we train a network to predict a hair matte from an input hair sketch, with an optional set of non-hair strokes. In the second stage, another network is trained to synthesize the structure and appearance of hair images from the input sketch and the generated matte. To make the networks in both stages aware of long-range dependencies among strokes, we apply self-attention modules to them. To train these networks, we present a new dataset containing thousands of annotated hair sketch-image pairs and corresponding hair mattes. Two efficient sketch completion methods are proposed to automatically complete repetitive braided parts and hair strokes, respectively, thus reducing the user's workload. Based on the trained networks and the two sketch completion strategies, we build an intuitive interface that allows even novice users to design visually pleasing hair images exhibiting various hair structures and appearances via freehand sketches. Qualitative and quantitative evaluations show the advantages of the proposed system over existing and alternative solutions.
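The abstract says only that self-attention modules make both networks aware of long-range dependencies among strokes. As a minimal sketch of what such a module typically computes, the NumPy code below implements a generic position self-attention block, similar in form to the position attention module of Fu et al. (2019) in the reference list: every spatial position of a feature map attends to every other position, so the feature at one stroke can draw on distant strokes. The function name `self_attention`, the projection matrices `w_q`, `w_k`, `w_v` (stand-ins for learned 1x1 convolutions), and the residual weight `gamma` are illustrative assumptions, not SketchHairSalon's actual code or layer configuration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the per-row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(feat, w_q, w_k, w_v, gamma=0.0):
    """Generic position self-attention over a (C, H, W) feature map.

    Hypothetical sketch: w_q and w_k are (C', C) and w_v is (C, C); each
    plays the role of a learned 1x1 convolution. Returns the output map
    (gamma * attended + input) and the (N, N) attention matrix, N = H * W.
    """
    c, h, w = feat.shape
    x = feat.reshape(c, h * w)        # flatten spatial positions: (C, N)
    q = w_q @ x                       # queries: (C', N)
    k = w_k @ x                       # keys:    (C', N)
    v = w_v @ x                       # values:  (C, N)
    attn = softmax(q.T @ k, axis=-1)  # attn[i, j]: weight position i gives position j
    out = v @ attn.T                  # out[:, i] = sum_j attn[i, j] * v[:, j]
    # Residual connection: with gamma = 0 the block is an identity mapping.
    return (gamma * out + x).reshape(c, h, w), attn


# Usage: a random 8-channel, 4x5 feature map with random projections.
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 4, 5))
w_q = rng.standard_normal((2, 8))
w_k = rng.standard_normal((2, 8))
w_v = rng.standard_normal((8, 8))
out, attn = self_attention(feat, w_q, w_k, w_v, gamma=0.5)
print(out.shape, attn.shape)  # (8, 4, 5) (20, 20)
```

In blocks of this kind, `gamma` is usually a learned scalar initialized to zero, so the module starts as an identity mapping and gradually mixes in non-local context. The N x N attention matrix makes memory grow quadratically with the number of spatial positions, which is why such blocks are often placed at low-resolution feature levels.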

References:

1. E. Artin. 1947. Theory of Braids. Annals of Mathematics 48, 1 (1947), 101–126. http://www.jstor.org/stable/1969218

2. John Canny. 1986. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 8, 6 (1986), 679–698.

3. Menglei Chai, Jian Ren, and Sergey Tulyakov. 2020. Neural hair rendering. In Proceedings of the European Conference on Computer Vision (ECCV). Springer, 371–388.

4. Menglei Chai, Lvdi Wang, Yanlin Weng, Xiaogang Jin, and Kun Zhou. 2013. Dynamic hair manipulation in images and videos. ACM Transactions on Graphics (TOG) 32, 4 (2013), 1–8.

5. Hong Chen and Song-Chun Zhu. 2006. A generative sketch model for human hair analysis and synthesis. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 28, 7 (2006), 1025–1040.

6. Shu-Yu Chen, Feng-Lin Liu, Yu-Kun Lai, Paul L. Rosin, Chunpeng Li, Hongbo Fu, and Lin Gao. 2021. DeepFaceEditing: Deep Face Generation and Editing with Disentangled Geometry and Appearance Control. ACM Transactions on Graphics (TOG) 40, 4, Article 90 (July 2021), 15 pages.

7. Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. 2020. DeepFaceDrawing: Deep Generation of Face Images from Sketches. ACM Transactions on Graphics (TOG) 39, 4 (2020), 72:1–72:16.

8. Yunjey Choi, Minje Choi, Munyoung Kim, Jung-Woo Ha, Sunghun Kim, and Jaegul Choo. 2018. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 8789–8797.

9. Alexey Dosovitskiy and Thomas Brox. 2016. Generating images with perceptual similarity metrics based on deep networks. In Advances in Neural Information Processing Systems (NIPS). 658–666.

10. Hongbo Fu, Yichen Wei, Chiew-Lan Tai, and Long Quan. 2007. Sketching hairstyles. In Proceedings of the 4th Eurographics Workshop on Sketch-Based Interfaces and Modeling. 31–36.

11. Jun Fu, Jing Liu, Haijie Tian, Yong Li, Yongjun Bao, Zhiwei Fang, and Hanqing Lu. 2019. Dual attention network for scene segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 3146–3154.

12. Shuyang Gu, Jianmin Bao, Hao Yang, Dong Chen, Fang Wen, and Lu Yuan. 2019. Mask-guided portrait editing with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 3436–3445.

13. Chen-Yuan Hsu, Li-Yi Wei, Lihua You, and Jian Jun Zhang. 2020. Autocomplete Element Fields. In Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI). 1–13.

14. Liwen Hu, Chongyang Ma, Linjie Luo, and Hao Li. 2015. Single-view hair modeling using a hairstyle database. ACM Transactions on Graphics (TOG) 34, 4 (2015), 1–9.

15. Liwen Hu, Chongyang Ma, Linjie Luo, Li-Yi Wei, and Hao Li. 2014. Capturing braided hairstyles. ACM Transactions on Graphics (TOG) 33, 6 (2014), 1–9.

16. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. (2017).

17. Wenzel Jakob, Jonathan T Moon, and Steve Marschner. 2009. Capturing hair assemblies fiber by fiber. ACM Transactions on Graphics (TOG) 28, 5 (2009), 1–9.

18. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 4401–4410.

19. Guillaume Lample, Neil Zeghidour, Nicolas Usunier, Antoine Bordes, Ludovic Denoyer, and Marc’Aurelio Ranzato. 2017. Fader networks: Manipulating images by sliding attributes. In Advances in Neural Information Processing Systems (NIPS). 5967–5976.

20. Cheng-Han Lee, Ziwei Liu, Lingyun Wu, and Ping Luo. 2020. MaskGAN: Towards diverse and interactive facial image manipulation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 5549–5558.

21. Ta-Chih Lee, Rangasami L. Kashyap, and Chong-Nam Chu. 1994. Building Skeleton Models via 3-D Medial Surface/Axis Thinning Algorithms. CVGIP: Graphical Models and Image Processing 56, 6 (Nov. 1994), 462–478.

22. Yaoyi Li and Hongtao Lu. 2020. Natural image matting via guided contextual attention. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Vol. 34. 11450–11457.

23. Xiaoyang Mao, Hiroki Kato, Atsumi Imamiya, and Ken Anjyo. 2004. Sketch interface based expressive hairstyle modelling and rendering. In Proceedings Computer Graphics International. IEEE, 608–611.

24. Umar Riaz Muhammad, Michele Svanera, Riccardo Leonardi, and Sergio Benini. 2018. Hair detection, segmentation, and hairstyle classification in the wild. Image and Vision Computing 71 (2018), 25–37.

25. Kyle Olszewski, Duygu Ceylan, Jun Xing, Jose Echevarria, Zhili Chen, Weikai Chen, and Hao Li. 2020. Intuitive, Interactive Beard and Hair Synthesis with Generative Models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 7446–7456.

26. Rohit Pandey, Sergio Orts Escolano, Chloe Legendre, Christian Haene, Sofien Bouaziz, Christoph Rhemann, Paul Debevec, and Sean Fanello. 2021. Total relighting: learning to relight portraits for background replacement. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–21.

27. Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu. 2019. Semantic Image Synthesis With Spatially-Adaptive Normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

28. Haonan Qiu, Chuan Wang, Hang Zhu, Xiao Zhu, Jinjin Gu, and Xiaoguang Han. 2019. Two-phase Hair Image Synthesis by Self-Enhancing Generative Model. In Computer Graphics Forum (CGF), Vol. 38. Wiley Online Library, 403–412.

29. Patsorn Sangkloy, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. 2017. Scribbler: Controlling deep image synthesis with sketch and color. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 5400–5409.

30. Y. Shen, C. Zhang, H. Fu, K. Zhou, and Y. Zheng. 2020. DeepSketchHair: Deep Sketch-based 3D Hair Modeling. IEEE Transactions on Visualization and Computer Graphics (TVCG) (2020).

31. Zhentao Tan, Menglei Chai, Dongdong Chen, Jing Liao, Qi Chu, Lu Yuan, Sergey Tulyakov, and Nenghai Yu. 2020. MichiGAN: multi-input-conditioned hair image generation for portrait editing. ACM Transactions on Graphics (TOG) 39, 4, Article 95 (2020).

32. Peihan Tu, Li-Yi Wei, Koji Yatani, Takeo Igarashi, and Matthias Zwicker. 2020. Continuous curve textures. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1–16.

33. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 8798–8807.

34. Zhibo Wang, Xin Yu, Ming Lu, Quan Wang, Chen Qian, and Feng Xu. 2020. Single image portrait relighting via explicit multiple reflectance channel modeling. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1–13.

35. Lingyu Wei, Liwen Hu, Vladimir Kim, Ersin Yumer, and Hao Li. 2018. Real-time hair rendering using sequential adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV). 99–116.

36. Y. Wei, E. Ofek, L. Quan, and H.-Y. Shum. 2005. Modeling hair from multiple views. ACM Transactions on Graphics (TOG) 24, 3 (2005), 816–820.

37. Taihong Xiao, Jiapeng Hong, and Jinwen Ma. 2018. ELEGANT: Exchanging latent encodings with GAN for transferring multiple face attributes. In Proceedings of the European Conference on Computer Vision (ECCV). 168–184.

38. Jun Xing, Koki Nagano, Weikai Chen, Haotian Xu, Li-Yi Wei, Yajie Zhao, Jingwan Lu, Byungmoon Kim, and Hao Li. 2019. HairBrush for immersive data-driven hair modeling. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST). 263–279.

39. Lingchen Yang, Zefeng Shi, Youyi Zheng, and Kun Zhou. 2019. Dynamic hair modeling from monocular videos using deep neural networks. ACM Transactions on Graphics (TOG) 38, 6 (2019), 1–12.

40. Meng Zhang, Pan Wu, Hongzhi Wu, Yanlin Weng, Youyi Zheng, and Kun Zhou. 2018. Modeling hair from an RGB-D camera. ACM Transactions on Graphics (TOG) 37, 6 (2018), 1–10.

41. Yi Zhou, Liwen Hu, Jun Xing, Weikai Chen, Han-Wei Kung, Xin Tong, and Hao Li. 2018. HairNet: Single-view hair reconstruction using convolutional neural networks. In Proceedings of the European Conference on Computer Vision (ECCV). 235–251.

42. Peihao Zhu, Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. SEAN: Image Synthesis with Semantic Region-Adaptive Normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 5104–5113.