“Self-Calibrating, Fully Differentiable NLOS Inverse Rendering” by Choi, Kim, Choi, Marco, Gutierrez, et al. …

Title:

- Self-Calibrating, Fully Differentiable NLOS Inverse Rendering

Session/Category Title:

- Computer Vision

Abstract:

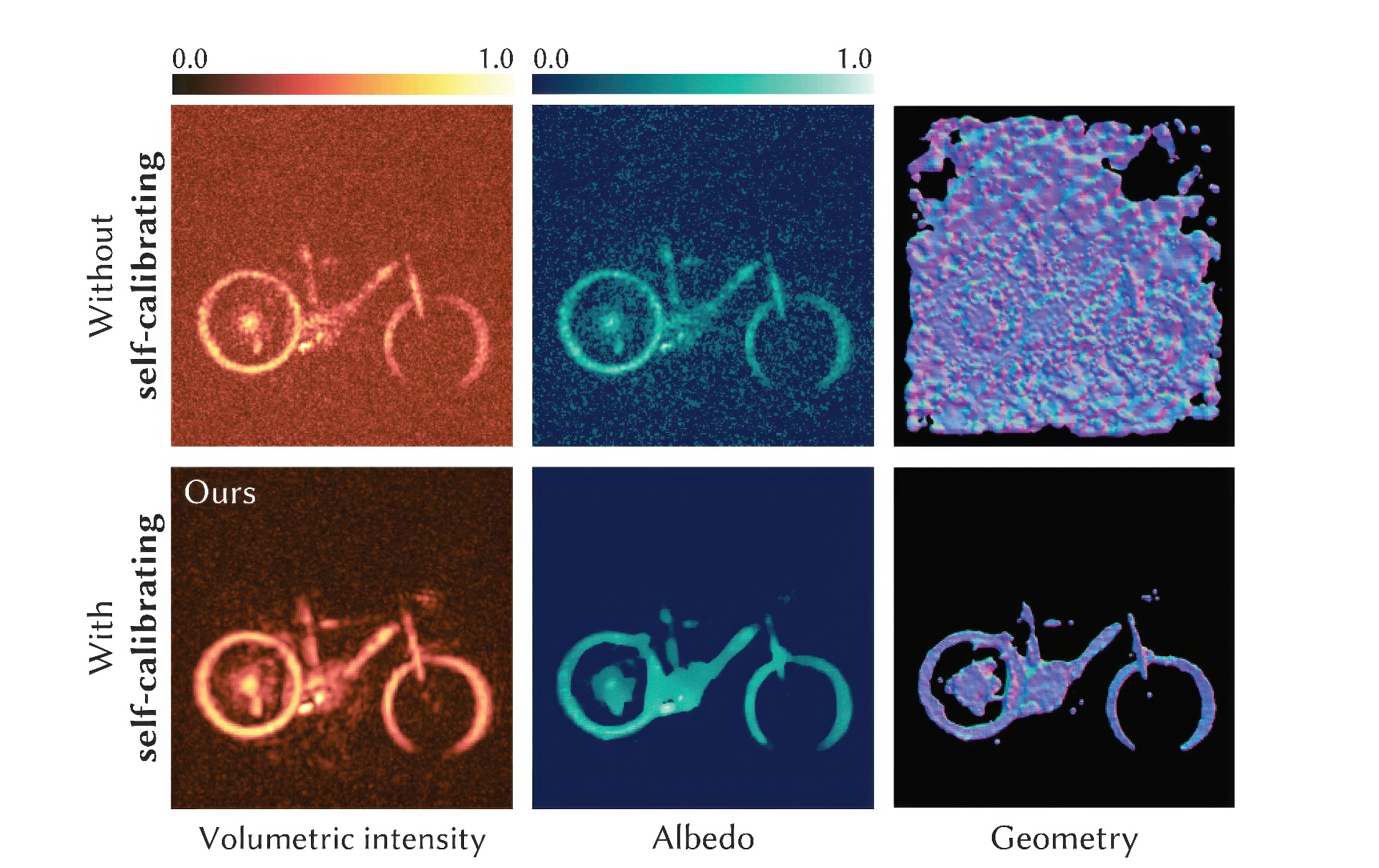

Existing time-resolved non-line-of-sight (NLOS) imaging methods reconstruct hidden scenes by inverting the optical paths of indirect illumination measured at visible relay surfaces. These methods are prone to reconstruction artifacts caused by inversion ambiguities and capture noise, which are typically mitigated by manually selecting filtering functions and parameters. We introduce a fully differentiable, end-to-end NLOS inverse rendering pipeline that self-calibrates the imaging parameters during the reconstruction of hidden scenes, using only the measured illumination as input and working in both the time and frequency domains. Our pipeline extracts a geometric representation of the hidden scene from NLOS volumetric intensities and, using differentiable transient rendering, estimates the time-resolved illumination that this geometry produces at the relay wall. We then use gradient descent to optimize the imaging parameters by minimizing the error between our simulated time-resolved illumination and the measured illumination. To make our pipeline efficient and differentiable, we combine diffraction-based imaging with path-space light transport and a simple ray marching technique for surface extraction. Unlike the majority of previous works, our method extracts detailed, dense sets of surface points and normals of the hidden scene. Our results demonstrate that our method robustly and consistently reconstructs geometry and albedo, even under significant noise.
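The optimization loop the abstract describes (simulate the time-resolved illumination, compare it with the measurement, and update the imaging parameters by gradient descent) can be illustrated with a toy one-dimensional analogue. The sketch below is not the authors' pipeline: the Gaussian forward model, the single parameter `sigma`, and the names `simulate`, `loss_and_grad`, and `calibrate` are all hypothetical stand-ins chosen only to show the idea of calibrating a parameter by differentiating a simulation error.

```python
import math

def simulate(sigma, times, t0=5.0):
    """Toy forward model (assumption): a Gaussian pulse of width sigma centred at t0."""
    return [math.exp(-((t - t0) ** 2) / (2.0 * sigma ** 2)) for t in times]

def loss_and_grad(sigma, times, measured, t0=5.0):
    """Mean squared error between simulation and measurement, and its derivative in sigma."""
    sim = simulate(sigma, times, t0)
    n = len(times)
    loss = sum((s - m) ** 2 for s, m in zip(sim, measured)) / n
    # For the Gaussian above, d(sim)/d(sigma) = sim * (t - t0)^2 / sigma^3.
    grad = sum(
        2.0 * (s - m) * s * (t - t0) ** 2 / sigma ** 3
        for s, m, t in zip(sim, measured, times)
    ) / n
    return loss, grad

def calibrate(measured, times, sigma_init=2.0, lr=1.0, steps=500):
    """Plain gradient descent on the single toy parameter sigma."""
    sigma = sigma_init
    for _ in range(steps):
        _, grad = loss_and_grad(sigma, times, measured)
        sigma -= lr * grad
    return sigma

# Synthetic, noise-free "measurement" generated with a known width of 1.0.
times = [0.1 * i for i in range(101)]
measured = simulate(1.0, times)

sigma_est = calibrate(measured, times)
final_loss, _ = loss_and_grad(sigma_est, times, measured)
print(f"estimated sigma = {sigma_est:.4f}, final loss = {final_loss:.2e}")
```

In this toy setting the parameter starts at a deliberately wrong value and is pulled toward the one that generated the measurement. The pipeline summarized above applies the same principle to imaging parameters, with a differentiable transient renderer in place of the closed-form Gaussian.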
