“Robust solving of optical motion capture data by denoising” by Holden

Title:

- Robust solving of optical motion capture data by denoising

Session/Category Title:

- Bodies in Motion: Human Performance Capture

Entry Number:

- 165

Abstract:

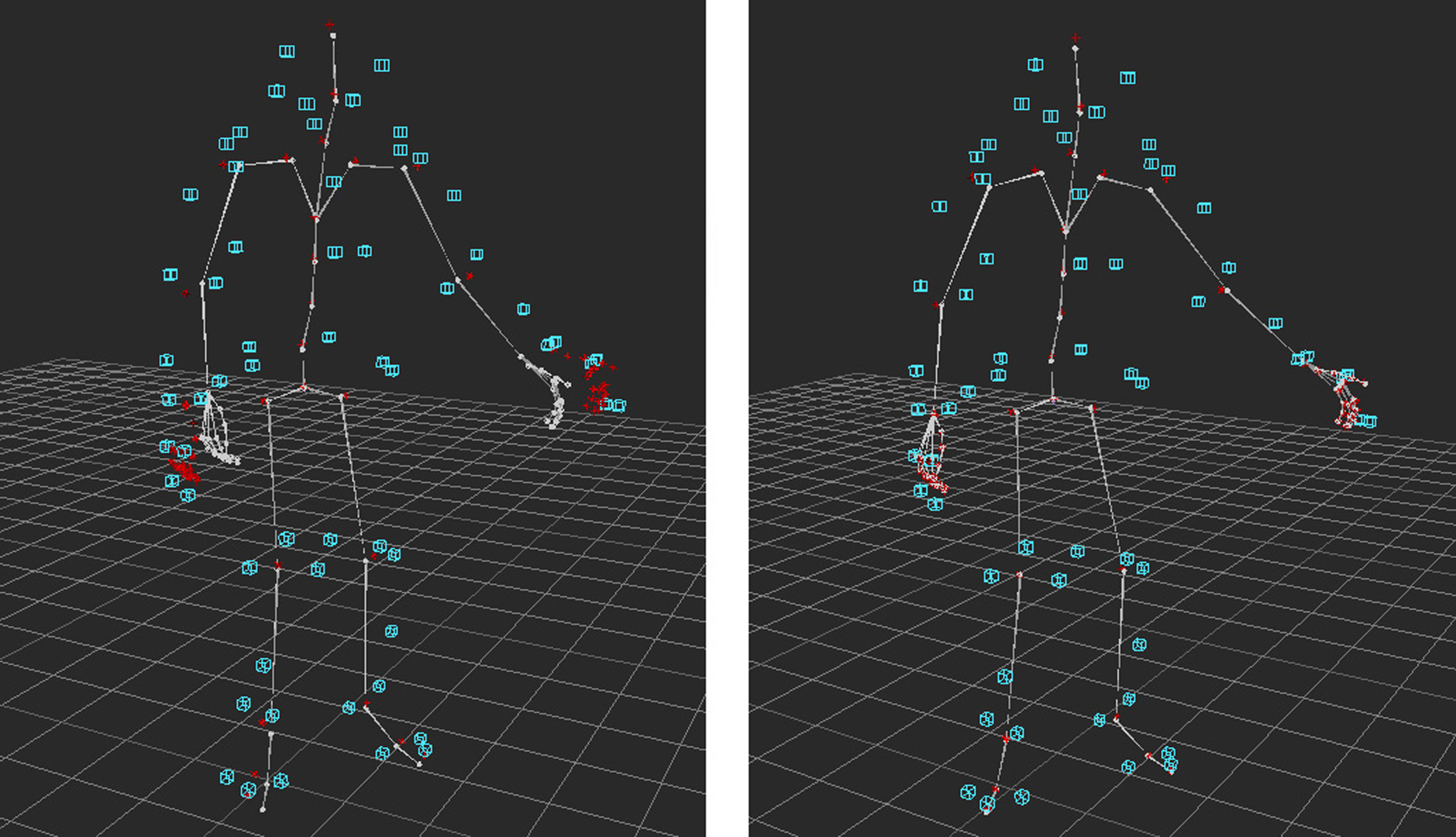

Raw optical motion capture data often includes errors such as occluded markers, mislabeled markers, and high-frequency noise or jitter. Typically these errors must be fixed by hand, which is an extremely time-consuming and tedious task. As a result, there is a large demand for tools or techniques that can alleviate this burden. In this research we present a tool that sidesteps this problem and produces joint transforms directly from raw marker data (a task commonly called “solving”) in a way that is extremely robust to errors in the input data, using the machine learning technique of denoising. Starting with a set of marker configurations and a large database of skeletal motion data such as the CMU motion capture database [CMU 2013b], we synthetically reconstruct marker locations using linear blend skinning and apply a unique noise function to corrupt this marker data, randomly removing and shifting markers to dynamically produce billions of examples of poses with errors similar to those found in real motion capture data. We then train a deep denoising feed-forward neural network to learn a mapping from this corrupted marker data to the corresponding joint transforms. Once trained, our neural network can replace the solving stage of the motion capture pipeline, and because it is very robust to errors, it completely removes the need for any manual clean-up of data. Our system is accurate enough to be used in production, generally achieving precision to within a few millimeters, while also being extremely fast to compute and having low memory requirements.
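The training-data synthesis described in the abstract has two parts: placing markers on a posed skeleton with linear blend skinning, then corrupting them by removing and shifting markers. The sketch below shows one way those two steps could look. It is a minimal illustration under stated assumptions, not the author's implementation. It assumes the following: each marker is attached to a few joints, with a local offset and a skinning weight for each joint; joint transforms are given as a world-space 3×3 rotation plus a translation; and an occluded marker is encoded as the zero vector. The helper names and the parameters `p_occlude`, `p_shift` and `shift_scale` are hypothetical stand-ins for the paper's noise function, whose exact form is not given here.

```python
import random
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def mat_vec(R: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 rotation matrix by a 3-vector."""
    return tuple(sum(R[i][k] * v[k] for k in range(3)) for i in range(3))


def skin_marker(rotations: Sequence[Mat3], translations: Sequence[Vec3],
                offsets: Sequence[Vec3], weights: Sequence[float]) -> Vec3:
    """Linear blend skinning of one marker (hypothetical helper).

    offsets[j] is the marker position in the local frame of joint j and
    weights[j] its skinning weight (assumed to sum to 1). The result is the
    weighted sum of the marker as carried rigidly by each joint.
    """
    x = y = z = 0.0
    for R, t, o, w in zip(rotations, translations, offsets, weights):
        p = mat_vec(R, o)  # local offset rotated into world space
        x += w * (p[0] + t[0])
        y += w * (p[1] + t[1])
        z += w * (p[2] + t[2])
    return (x, y, z)


def corrupt_markers(markers: Sequence[Vec3], p_occlude: float, p_shift: float,
                    shift_scale: float, rng: random.Random) -> List[Vec3]:
    """Hypothetical corruption: independently drop or shift each marker."""
    corrupted: List[Vec3] = []
    for m in markers:
        if rng.random() < p_occlude:
            # Occluded marker, encoded here as the zero vector (an assumption).
            corrupted.append((0.0, 0.0, 0.0))
        elif rng.random() < p_shift:
            # Shifted marker: uniform offset in [-shift_scale, shift_scale] per axis.
            corrupted.append(tuple(c + rng.uniform(-shift_scale, shift_scale) for c in m))
        else:
            corrupted.append(tuple(m))
    return corrupted
```

In a training loop, these helpers would be applied to freshly sampled poses for every batch, so the network rarely sees the same corrupted example twice. That is one plausible reading of the abstract's "dynamically produce billions of examples". The clean joint transforms of the same pose would serve as the regression target for the denoising network, which is not shown here.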

References:

1. Ijaz Akhter, Tomas Simon, Sohaib Khan, Iain Matthews, and Yaser Sheikh. 2012. Bilinear spatiotemporal basis models. ACM Transactions on Graphics 31, 2 (April 2012), 17:1–17:12.

2. Andreas Aristidou, Daniel Cohen-Or, Jessica K. Hodgins, and Ariel Shamir. 2018. Self-similarity Analysis for Motion Capture Cleaning. Comput. Graph. Forum 37, 2 (2018).

3. Andreas Aristidou and Joan Lasenby. 2013. Real-time marker prediction and CoR estimation in optical motion capture. The Visual Computer 29, 1 (01 Jan 2013), 7–26.

4. Autodesk. 2016. MotionBuilder HumanIK. https://knowledge.autodesk.com/support/motionbuilder/learn-explore/caas/CloudHelp/cloudhelp/2017/ENU/MotionBuilder/files/GUID-44608A05-8D2F-4C2F-BDA6-E3945F36C872-htm.html. (2016).

5. Steve Bako, Thijs Vogels, Brian McWilliams, Mark Meyer, Jan Novák, Alex Harvill, Pradeep Sen, Tony DeRose, and Fabrice Rousselle. 2017. Kernel-predicting Convolutional Networks for Denoising Monte Carlo Renderings. ACM Trans. Graph. 36, 4, Article 97 (July 2017), 14 pages.

6. Jan Baumann, Björn Krüger, Arno Zinke, and Andreas Weber. 2011. Data-Driven Completion of Motion Capture Data. In Workshop in Virtual Reality Interactions and Physical Simulation “VRIPHYS” (2011), Jan Bender, Kenny Erleben, and Eric Galin (Eds.). The Eurographics Association.

7. Paul J. Besl and Neil D. McKay. 1992. A Method for Registration of 3-D Shapes. IEEE Trans. Pattern Anal. Mach. Intell. 14, 2 (Feb. 1992), 239–256.

8. M. Burke and J. Lasenby. 2016. Estimating missing marker positions using low dimensional Kalman smoothing. Journal of Biomechanics 49, 9 (2016), 1854–1858.

9. Samuel Buss. 2004. Introduction to inverse kinematics with Jacobian transpose, pseudoinverse and damped least squares methods. 17 (05 2004).

10. Jinxiang Chai and Jessica K. Hodgins. 2005. Performance Animation from Low-dimensional Control Signals. In ACM SIGGRAPH 2005 Papers (SIGGRAPH ’05). ACM, New York, NY, USA, 686–696.

11. Chakravarty R. Alla Chaitanya, Anton S. Kaplanyan, Christoph Schied, Marco Salvi, Aaron Lefohn, Derek Nowrouzezahrai, and Timo Aila. 2017. Interactive Reconstruction of Monte Carlo Image Sequences Using a Recurrent Denoising Autoencoder. ACM Trans. Graph. 36, 4, Article 98 (July 2017), 12 pages.

12. Junyoung Chung, Çağlar Gülçehre, KyungHyun Cho, and Yoshua Bengio. 2014. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. CoRR abs/1412.3555 (2014). arXiv:1412.3555 http://arxiv.org/abs/1412.3555

13. CMU. 2013a. BVH Conversion of Carnegie-Mellon Mocap Database. https://sites.google.com/a/cgspeed.com/cgspeed/motion-capture/cmu-bvh-conversion/. (2013).

14. CMU. 2013b. Carnegie-Mellon Mocap Database. http://mocap.cs.cmu.edu/. (2013).

15. Klaus Dorfmüller-Ulhaas. 2005. Robust Optical User Motion Tracking Using a Kalman Filter.

16. Yinfu Feng, Ming-Ming Ji, Jun Xiao, Xiaosong Yang, Jian J. Zhang, Yueting Zhuang, and Xuelong Li. 2014a. Mining Spatial-Temporal Patterns and Structural Sparsity for Human Motion Data Denoising. 45 (12 2014).

17. Yinfu Feng, Jun Xiao, Yueting Zhuang, Xiaosong Yang, Jian J. Zhang, and Rong Song. 2014b. Exploiting temporal stability and low-rank structure for motion capture data refinement. Information Sciences 277, Supplement C (2014), 777–793.

18. Tamar Flash and Neville Hogan. 1985. The Coordination of Arm Movements: An Experimentally Confirmed Mathematical Model. Journal of Neuroscience 5 (1985), 1688–1703.

19. Katerina Fragkiadaki, Sergey Levine, and Jitendra Malik. 2015. Recurrent Network Models for Kinematic Tracking. CoRR abs/1508.00271 (2015). arXiv:1508.00271 http://arxiv.org/abs/1508.00271

20. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Deep Residual Learning for Image Recognition. CoRR abs/1512.03385 (2015). arXiv:1512.03385 http://arxiv.org/abs/1512.03385

21. Daniel Holden, Jun Saito, and Taku Komura. 2016. A Deep Learning Framework for Character Motion Synthesis and Editing. ACM Trans. Graph. 35, 4, Article 138 (July 2016), 11 pages.

22. Daniel Holden, Jun Saito, Taku Komura, and Thomas Joyce. 2015. Learning Motion Manifolds with Convolutional Autoencoders. In SIGGRAPH Asia 2015 Technical Briefs (SA ’15). ACM, New York, NY, USA, Article 18, 4 pages.

23. Hengyuan Hu, Lisheng Gao, and Quanbin Ma. 2016. Deep Restricted Boltzmann Networks. CoRR abs/1611.07917 (2016). arXiv:1611.07917 http://arxiv.org/abs/1611.07917

24. Alexander G. Ororbia II, C. Lee Giles, and David Reitter. 2015. Online Semi-Supervised Learning with Deep Hybrid Boltzmann Machines and Denoising Autoencoders. CoRR abs/1511.06964 (2015). arXiv:1511.06964 http://arxiv.org/abs/1511.06964

25. Ladislav Kavan, Steven Collins, Jiří Žára, and Carol O’Sullivan. 2008. Geometric Skinning with Approximate Dual Quaternion Blending. ACM Trans. Graph. 27, 4, Article 105 (Nov. 2008), 23 pages.

26. Diederik P. Kingma and Jimmy Ba. 2014. Adam: A Method for Stochastic Optimization. CoRR abs/1412.6980 (2014). arXiv:1412.6980 http://arxiv.org/abs/1412.6980

27. Günter Klambauer, Thomas Unterthiner, Andreas Mayr, and Sepp Hochreiter. 2017. Self-Normalizing Neural Networks. CoRR abs/1706.02515 (2017). arXiv:1706.02515 http://arxiv.org/abs/1706.02515

28. Yann LeCun, Léon Bottou, Genevieve B. Orr, and Klaus-Robert Müller. 1998. Efficient BackProp. In Neural Networks: Tricks of the Trade, This Book is an Outgrowth of a 1996 NIPS Workshop. Springer-Verlag, London, UK, 9–50. http://dl.acm.org/citation.cfm?id=645754.668382

29. Lei Li, James McCann, Nancy Pollard, and Christos Faloutsos. 2010. Bolero: A principled technique for including bone length constraints in motion capture occlusion filling. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation.

30. Guodong Liu and Leonard McMillan. 2006. Estimation of Missing Markers in Human Motion Capture. Vis. Comput. 22, 9 (Sept. 2006), 721–728.

31. Xin Liu, Yiu-ming Cheung, Shu-Juan Peng, Zhen Cui, Bineng Zhong, and Ji-Xiang Du. 2014. Automatic motion capture data denoising via filtered subspace clustering and low rank matrix approximation. 105 (12 2014), 350–362.

32. Utkarsh Mall, G. Roshan Lal, Siddhartha Chaudhuri, and Parag Chaudhuri. 2017. A Deep Recurrent Framework for Cleaning Motion Capture Data. CoRR abs/1712.03380 (2017). arXiv:1712.03380 http://arxiv.org/abs/1712.03380

33. Sang Il Park and Jessica K. Hodgins. 2006. Capturing and Animating Skin Deformation in Human Motion. In ACM SIGGRAPH 2006 Papers (SIGGRAPH ’06). ACM, New York, NY, USA, 881–889.

34. Sashank J. Reddi, Satyen Kale, and Sanjiv Kumar. 2018. On the Convergence of Adam and Beyond. In International Conference on Learning Representations. https://openreview.net/forum?id=ryQu7f-RZ

35. Maurice Ringer and Joan Lasenby. 2002. Multiple Hypothesis Tracking for Automatic Optical Motion Capture. Springer Berlin Heidelberg, Berlin, Heidelberg, 524–536.

36. Ruslan Salakhutdinov and Geoffrey Hinton. 2009. Deep Boltzmann Machines. In Proceedings of the Twelth International Conference on Artificial Intelligence and Statistics (Proceedings of Machine Learning Research), David van Dyk and Max Welling (Eds.), Vol. 5. PMLR, Hilton Clearwater Beach Resort, Clearwater Beach, Florida USA, 448–455. http://proceedings.mlr.press/v5/salakhutdinov09a.html

37. Abraham Savitzky and Marcel J. E. Golay. 1964. Smoothing and Differentiation of Data by Simplified Least Squares Procedures. Analytical Chemistry 36, 8 (1964), 1627–1639. http://dx.doi.org/10.1021/ac60214a047

39. Alexander Sorkine-Hornung, Sandip Sar-Dessai, and Leif Kobbelt. 2005. Self-calibrating optical motion tracking for articulated bodies. IEEE Proceedings. VR 2005. Virtual Reality 2005. (2005), 75–82.

40. Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. 2014. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. Journal of Machine Learning Research 15 (2014), 1929–1958. http://jmlr.org/papers/v15/srivastava14a.html

41. Jochen Tautges, Arno Zinke, Björn Krüger, Jan Baumann, Andreas Weber, Thomas Helten, Meinard Müller, Hans-Peter Seidel, and Bernd Eberhardt. 2011. Motion Reconstruction Using Sparse Accelerometer Data. ACM Trans. Graph. 30, 3, Article 18 (May 2011), 12 pages.

42. Graham W. Taylor, Geoffrey E. Hinton, and Sam T. Roweis. 2007. Modeling Human Motion Using Binary Latent Variables. In Advances in Neural Information Processing Systems 19, B. Schölkopf, J. C. Platt, and T. Hoffman (Eds.). MIT Press, 1345–1352. http://papers.nips.cc/paper/3078-modeling-human-motion-using-binary-latent-variables.pdf

43. Theano Development Team. 2016. Theano: A Python framework for fast computation of mathematical expressions. arXiv e-prints abs/1605.02688 (May 2016). http://arxiv.org/abs/1605.02688

44. Alex Varghese, Kiran Vaidhya, Subramaniam Thirunavukkarasu, Chandrasekharan Kesavdas, and Ganapathy Krishnamurthi. 2016. Semi-supervised Learning using Denoising Autoencoders for Brain Lesion Detection and Segmentation. CoRR abs/1611.08664 (2016). arXiv:1611.08664 http://arxiv.org/abs/1611.08664

45. Vicon. 2018. Vicon Software. https://www.vicon.com/products/software/. (2018).

46. Pascal Vincent, Hugo Larochelle, Isabelle Lajoie, Yoshua Bengio, and Pierre-Antoine Manzagol. 2010. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 11 (Dec. 2010), 3371–3408. http://dl.acm.org/citation.cfm?id=1756006.1953039

47. Jun Xiao, Yinfu Feng, Mingming Ji, Xiaosong Yang, Jian J. Zhang, and Yueting Zhuang. 2015. Sparse Motion Bases Selection for Human Motion Denoising. Signal Process. 110, C (May 2015), 108–122.

48. Zhidong Xiao, Hammadi Nait-Charif, and Jian J. Zhang. 2008. Automatic Estimation of Skeletal Motion from Optical Motion Capture Data. Springer Berlin Heidelberg, Berlin, Heidelberg, 144–153.

49. Junyuan Xie, Linli Xu, and Enhong Chen. 2012. Image Denoising and Inpainting with Deep Neural Networks. In Advances in Neural Information Processing Systems 25, F. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger (Eds.). Curran Associates, Inc., 341–349. http://papers.nips.cc/paper/4686-image-denoising-and-inpainting-with-deep-neural-networks.pdf

50. Raymond A. Yeh, Chen Chen, Teck-Yian Lim, Alexander G. Schwing, Mark Hasegawa-Johnson, and Minh N. Do. 2017. Semantic Image Inpainting with Deep Generative Models. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), 6882–6890.

51. Victor Brian Zordan and Nicholas C. Van Der Horst. 2003. Mapping Optical Motion Capture Data to Skeletal Motion Using a Physical Model. In Proceedings of the 2003 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’03). Eurographics Association, Aire-la-Ville, Switzerland, 245–250. http://dl.acm.org/citation.cfm?id=846276.846311