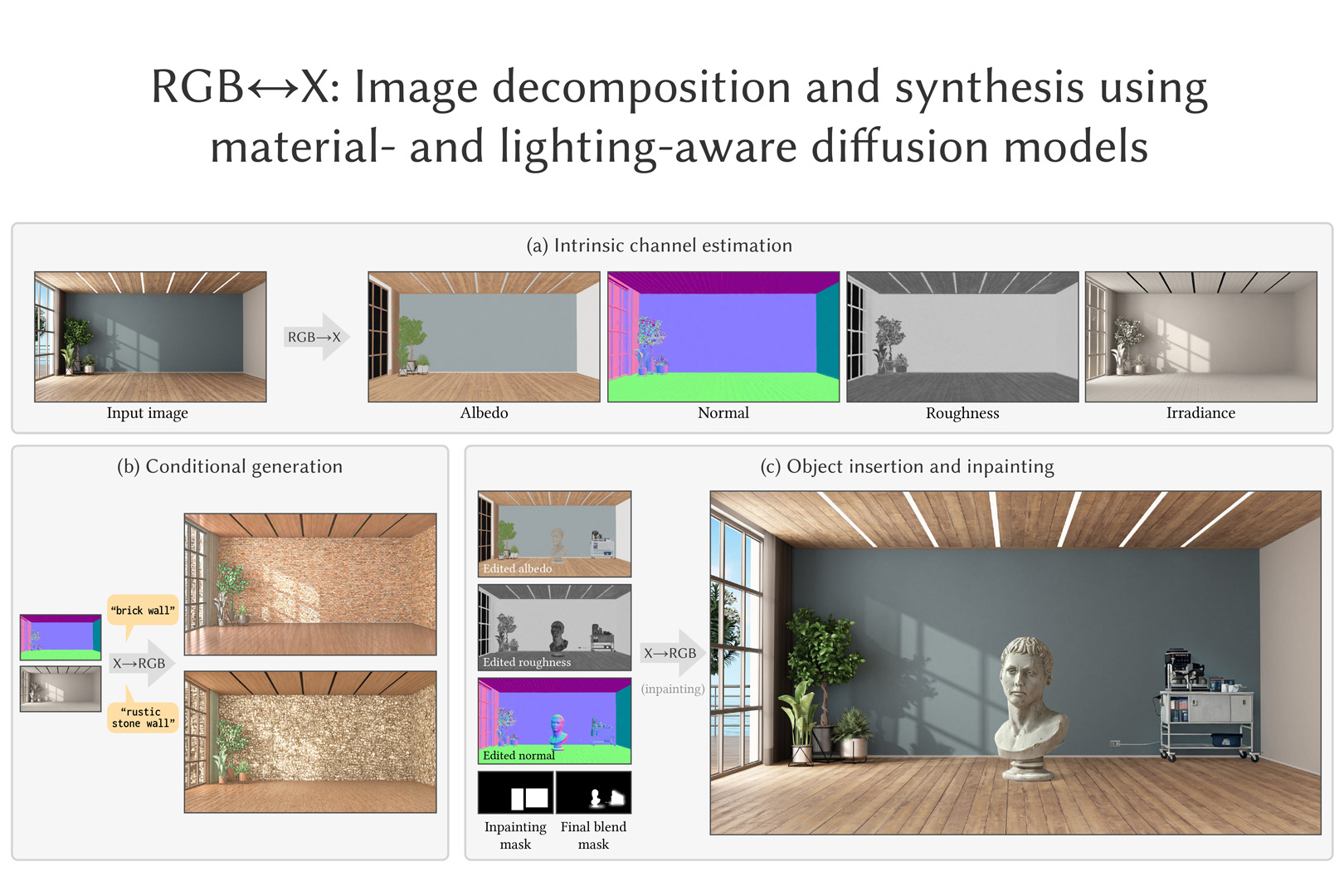

“RGB↔X: Image Decomposition and Synthesis Using Material- and Lighting-aware Diffusion Models”

Conference:

Type(s):

Title:

- RGB↔X: Image Decomposition and Synthesis Using Material- and Lighting-aware Diffusion Models

Presenter(s)/Author(s):

Abstract:

We present models for image decomposition into intrinsic channels (RGB→X) and image synthesis from such channels (X→RGB) in a unified conditional diffusion framework. We believe it can bring benefits to a wide range of downstream editing tasks including material editing, relighting, and realistic rendering from simple/under-specified scene definitions.

References:

[1]

Harry Barrow, J Tenenbaum, A Hanson, and E Riseman. 1978. Recovering intrinsic scene characteristics. Comput. vis. syst 2, 3–26 (1978), 2.

[2]

Sean Bell, Kavita Bala, and Noah Snavely. 2014. Intrinsic images in the wild. ACM Transactions on Graphics (TOG) 33, 4 (2014), 1–12.

[3]

Anand Bhattad, Daniel McKee, Derek Hoiem, and David Forsyth. 2024. Stylegan knows normal, depth, albedo, and more. Advances in Neural Information Processing Systems 36 (2024).

[4]

Reiner Birkl, Diana Wofk, and Matthias Müller. 2023. MiDaS v3.1 – A Model Zoo for Robust Monocular Relative Depth Estimation. arXiv preprint arXiv:2307.14460 (2023).

[5]

Tim Brooks, Aleksander Holynski, and Alexei A. Efros. 2023. InstructPix2Pix: Learning to Follow Image Editing Instructions. In CVPR.

[6]

Chris Careaga and Yağız Aksoy. 2023. Intrinsic Image Decomposition via Ordinal Shading. ACM Trans. Graph. (2023).

[7]

Xiaodan Du, Nicholas Kolkin, Greg Shakhnarovich, and Anand Bhattad. 2023. Generative Models: What do they know? Do they know things? Let's find out! arXiv preprint arXiv:2311.17137 (2023).

[8]

Elena Garces, Carlos Rodriguez-Pardo, Dan Casas, and Jorge Lopez-Moreno. 2022. A Survey on Intrinsic Images: Delving Deep into Lambert and Beyond. International Journal of Computer Vision 130, 3 (2022), 836–868.

[9]

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial nets. Advances in neural information processing systems 27 (2014).

[10]

David Griffiths, Tobias Ritschel, and Julien Philip. 2022. OutCast: Single Image Relighting with Cast Shadows. Computer Graphics Forum 43 (2022).

[11]

Roger Grosse, Micah K. Johnson, Edward H. Adelson, and William T. Freeman. 2009. Ground-truth dataset and baseline evaluations for intrinsic image algorithms. In International Conference on Computer Vision. 2335–2342. https://doi.org/10.1109/ICCV.2009.5459428

[12]

Jie Gui, Zhenan Sun, Yonggang Wen, Dacheng Tao, and Jieping Ye. 2021. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE transactions on knowledge and data engineering 35, 4 (2021), 3313–3332.

[13]

Jonathan Ho and Tim Salimans. 2022. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598 (2022).

[14]

Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685 (2021).

[15]

Lianghua Huang, Di Chen, Yu Liu, Yujun Shen, Deli Zhao, and Jingren Zhou. 2023. Composer: Creative and controllable image synthesis with composable conditions. arXiv preprint arXiv:2302.09778 (2023).

[16]

Jay-Artist. 2012. Country-Kitchen Cycles. https://blendswap.com/blend/5156

[17]

Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 4401–4410.

[18]

Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 8110–8119.

[19]

Peter Kocsis, Vincent Sitzmann, and Matthias Nießner. 2023. Intrinsic Image Diffusion for Single-view Material Estimation. In arxiv.

[20]

Edwin H Land and John J McCann. 1971. Lightness and retinex theory. Josa 61, 1 (1971), 1–11.

[21]

Hsin-Ying Lee, Hung-Yu Tseng, and Ming-Hsuan Yang. 2023. Exploiting Diffusion Prior for Generalizable Pixel-Level Semantic Prediction. arXiv preprint arXiv:2311.18832 (2023).

[22]

Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. 2023. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arxiv:2301.12597 [cs.CV]

[23]

Zhengqin Li, Mohammad Shafiei, Ravi Ramamoorthi, Kalyan Sunkavalli, and Manmohan Chandraker. 2020. Inverse rendering for complex indoor scenes: Shape, spatially-varying lighting and svbrdf from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2475–2484.

[24]

Zhengqin Li, Jia Shi, Sai Bi, Rui Zhu, Kalyan Sunkavalli, Miloš Hašan, Zexiang Xu, Ravi Ramamoorthi, and Manmohan Chandraker. 2022. Physically-Based Editing of Indoor Scene Lighting from a Single Image. In ECCV 2022. 555–572.

[25]

Zhengqin Li, Ting-Wei Yu, Shen Sang, Sarah Wang, Meng Song, Yuhan Liu, Yu-Ying Yeh, Rui Zhu, Nitesh Gundavarapu, Jia Shi, Sai Bi, Hong-Xing Yu, Zexiang Xu, Kalyan Sunkavalli, Miloš Hašan, Ravi Ramamoorthi, and Manmohan Chandraker. 2021. OpenRooms: An Open Framework for Photorealistic Indoor Scene Datasets. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 7186–7195. https://doi.org/10.1109/CVPR46437.2021.00711

[26]

O. Nalbach, E. Arabadzhiyska, D. Mehta, H.-P. Seidel, and T. Ritschel. 2017. Deep Shading: Convolutional Neural Networks for Screen Space Shading. Comput. Graph. Forum 36, 4 (jul 2017), 65–78.

[27]

NVIDIA. 2020. NVIDIA OptiX™ AI-Accelerated Denoiser. https://developer.nvidia.com/optix-denoiser

[28]

Zhaoqing Pan, Weijie Yu, Xiaokai Yi, Asifullah Khan, Feng Yuan, and Yuhui Zheng. 2019. Recent progress on generative adversarial networks (GANs): A survey. IEEE access 7 (2019), 36322–36333.

[29]

Rohit Pandey, Sergio Orts-Escolano, Chloe Legendre, Christian Haene, Sofien Bouaziz, Christoph Rhemann, Paul E Debevec, and Sean Ryan Fanello. 2021. Total relighting: learning to relight portraits for background replacement. ACM Trans. Graph. 40, 4 (2021), 43–1.

[30]

Matt Pharr and Greg Humphreys. 2004. Physically Based Rendering: From Theory to Implementation. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA.

[31]

Ariadna Quattoni and Antonio Torralba. 2009. Recognizing indoor scenes. In 2009 IEEE conference on computer vision and pattern recognition. IEEE, 413–420.

[32]

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning. PMLR, 8748–8763.

[33]

Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical Text-Conditional Image Generation with CLIP Latents. arxiv:2204.06125 [cs.CV]

[34]

René Ranftl, Katrin Lasinger, David Hafner, Konrad Schindler, and Vladlen Koltun. 2022. Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-Shot Cross-Dataset Transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 3 (2022).

[35]

Mike Roberts, Jason Ramapuram, Anurag Ranjan, Atulit Kumar, Miguel Angel Bautista, Nathan Paczan, Russ Webb, and Joshua M. Susskind. 2021. Hypersim: A Photorealistic Synthetic Dataset for Holistic Indoor Scene Understanding. In International Conference on Computer Vision (ICCV) 2021.

[36]

Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 10684–10695.

[37]

Viktor Rudnev, Mohamed Elgharib, William Smith, Lingjie Liu, Vladislav Golyanik, and Christian Theobalt. 2022. Nerf for outdoor scene relighting. In European Conference on Computer Vision. Springer, 615–631.

[38]

Tim Salimans and Jonathan Ho. 2022. Progressive distillation for fast sampling of diffusion models. arXiv preprint arXiv:2202.00512 (2022).

[39]

Prafull Sharma, Varun Jampani, Yuanzhen Li, Xuhui Jia, Dmitry Lagun, Fredo Durand, William T. Freeman, and Mark Matthews. 2023. Alchemist: Parametric Control of Material Properties with Diffusion Models. arxiv:2312.02970 [cs.CV]

[40]

Manu Mathew Thomas and Angus G. Forbes. 2018. Deep Illumination: Approximating Dynamic Global Illumination with Generative Adversarial Network. arxiv:1710.09834 [cs.GR]

[41]

Bruce Walter, Stephen R. Marschner, Hongsong Li, and Kenneth E. Torrance. 2007. Microfacet models for refraction through rough surfaces (EGSR'07). 195–206.

[42]

Wenhai Wang, Enze Xie, Xiang Li, Deng-Ping Fan, Kaitao Song, Ding Liang, Tong Lu, Ping Luo, and Ling Shao. 2022. Pvt v2: Improved baselines with pyramid vision transformer. Computational Visual Media 8, 3 (2022), 415–424.

[43]

Zian Wang, Tianchang Shen, Jun Gao, Shengyu Huang, Jacob Munkberg, Jon Hasselgren, Zan Gojcic, Wenzheng Chen, and Sanja Fidler. 2023. Neural Fields meet Explicit Geometric Representations for Inverse Rendering of Urban Scenes. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[44]

Ye Yu, Abhimitra Meka, Mohamed Elgharib, Hans-Peter Seidel, Christian Theobalt, and William AP Smith. 2020. Self-supervised outdoor scene relighting. In Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXII 16. Springer, 84–101.

[45]

Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. 2023. Adding Conditional Control to Text-to-Image Diffusion Models. arxiv:2302.05543 [cs.CV]

[46]

Jingsen Zhu, Fujun Luan, Yuchi Huo, Zihao Lin, Zhihua Zhong, Dianbing Xi, Rui Wang, Hujun Bao, Jiaxiang Zheng, and Rui Tang. 2022b. Learning-Based Inverse Rendering of Complex Indoor Scenes with Differentiable Monte Carlo Raytracing. In SIGGRAPH Asia 2022 Conference Papers. ACM, Article 6, 8 pages. https://doi.org/10.1145/3550469.3555407

[47]

Rui Zhu, Zhengqin Li, Janarbek Matai, Fatih Porikli, and Manmohan Chandraker. 2022a. IRISformer: Dense Vision Transformers for Single-Image Inverse Rendering in Indoor Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2822–2831.