“Removing image artifacts due to dirty camera lenses and thin occluders”

Conference:

Type(s):

Title:

- Removing image artifacts due to dirty camera lenses and thin occluders

Session/Category Title:

- Imaging enhancement

Presenter(s)/Author(s):

Moderator(s):

Abstract:

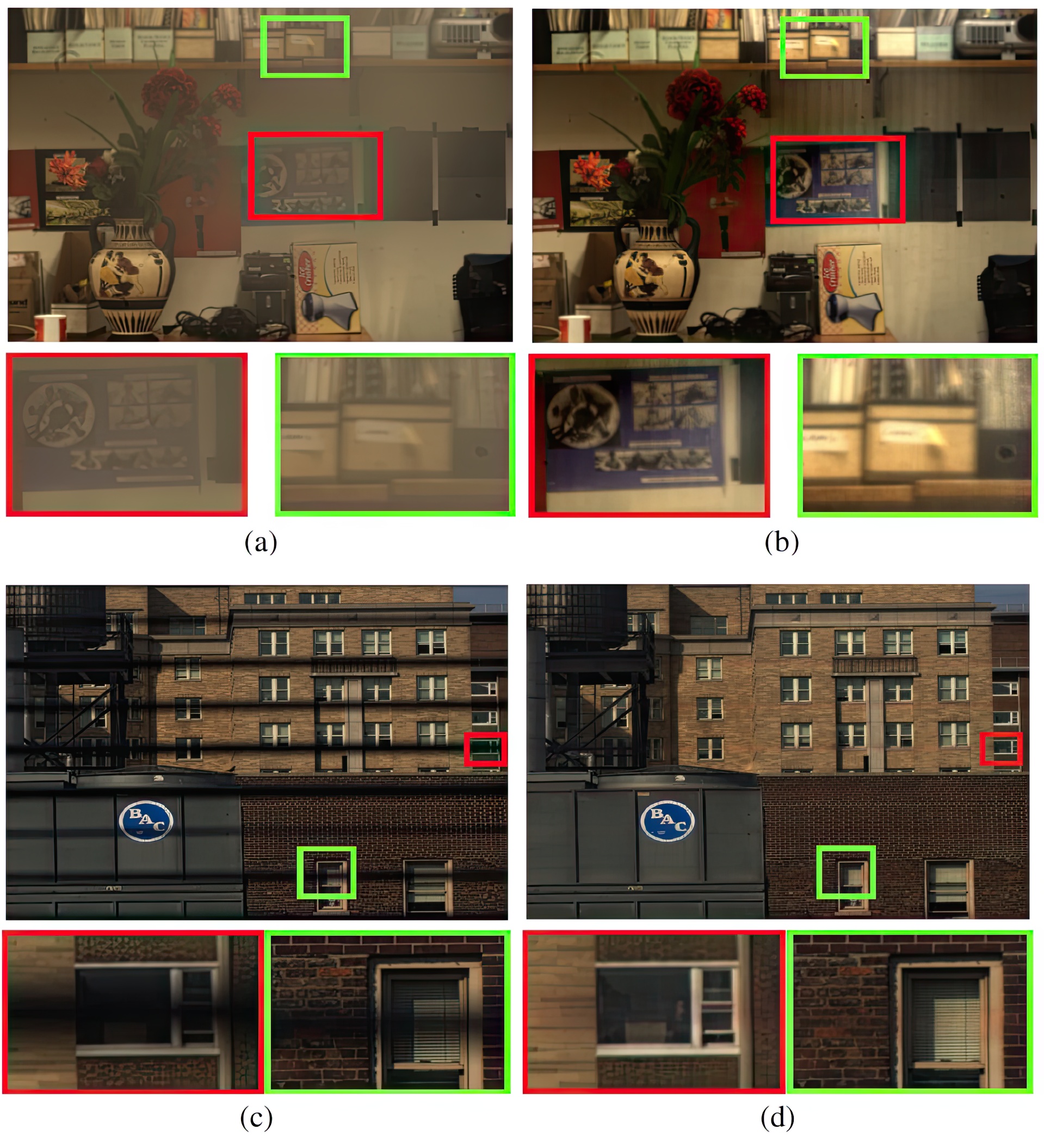

Dirt on camera lenses, and occlusions from thin objects such as fences, are two important types of artifacts in digital imaging systems. These artifacts are not only an annoyance for photographers, but also a hindrance to computer vision and digital forensics. In this paper, we show that both effects can be described by a single image formation model, wherein an intermediate layer (of dust, dirt or thin occluders) both attenuates the incoming light and scatters stray light towards the camera. Because of camera defocus, these artifacts are low-frequency and either additive or multiplicative, which allows us to recover the original scene radiance pointwise. We develop a number of physics-based methods to remove these effects from digital photographs and videos. For dirty camera lenses, we propose two methods to estimate the attenuation and the scattering of the lens dirt and remove the artifacts: either by taking several pictures of a structured calibration pattern beforehand, or by leveraging natural image statistics to post-process existing images. For artifacts from thin occluders, we propose a simple yet effective iterative method that recovers the original scene from multiple apertures. The method requires two images if the depths of the scene and the occluder layer are known, or three images if the depths are unknown. The effectiveness of our proposed methods is demonstrated by both simulated and real experimental results.
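The abstract's key claim is that the dirt or occluder layer acts per pixel: it attenuates scene light (multiplicative) and adds scattered stray light (additive), so once both effects are known the scene radiance can be recovered pointwise. The sketch below illustrates only that inversion step, assuming the simplest per-pixel form I = a · I0 + b, where a (attenuation) and b (scattered light) are already known for every pixel. How the paper actually estimates a and b (a structured calibration pattern or natural image statistics) is not reproduced here, and the function names `apply_layer` and `remove_layer` are hypothetical.

```python
from typing import List, Optional


def apply_layer(radiance: List[float], a: List[float], b: List[float]) -> List[float]:
    """Assumed forward model, per pixel: observed = a * radiance + b."""
    return [ai * r + bi for r, ai, bi in zip(radiance, a, b)]


def remove_layer(observed: List[float], a: List[float], b: List[float],
                 eps: float = 1e-6) -> List[Optional[float]]:
    """Pointwise inverse of the assumed model: radiance = (observed - b) / a.

    Pixels whose attenuation a is at or below eps are treated as fully
    blocked and returned as None, since dividing by ~0 would amplify noise.
    """
    recovered: List[Optional[float]] = []
    for obs, ai, bi in zip(observed, a, b):
        if ai > eps:
            # Subtract the additive stray light, then undo the attenuation.
            recovered.append((obs - bi) / ai)
        else:
            # No scene light reaches this pixel; pointwise recovery is impossible.
            recovered.append(None)
    return recovered


# Usage: three pixels of a single channel.
scene = [0.2, 0.5, 0.8]
a = [1.0, 0.7, 0.5]    # 1.0 = clean lens; smaller values = more attenuation
b = [0.0, 0.1, 0.05]   # hypothetical stray light scattered by the dirt
observed = apply_layer(scene, a, b)
print(remove_layer(observed, a, b))
```

Returning `None` for fully blocked pixels reflects the limit of any pointwise method: where the layer passes no scene light, the radiance must come from elsewhere, for example from additional images taken at other apertures, as the abstract describes for thin occluders.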
