“Realtime 3D eye gaze animation using a single RGB camera”

Title:

- Realtime 3D eye gaze animation using a single RGB camera

Session/Category Title:

- CAPTURING HUMANS

Abstract:

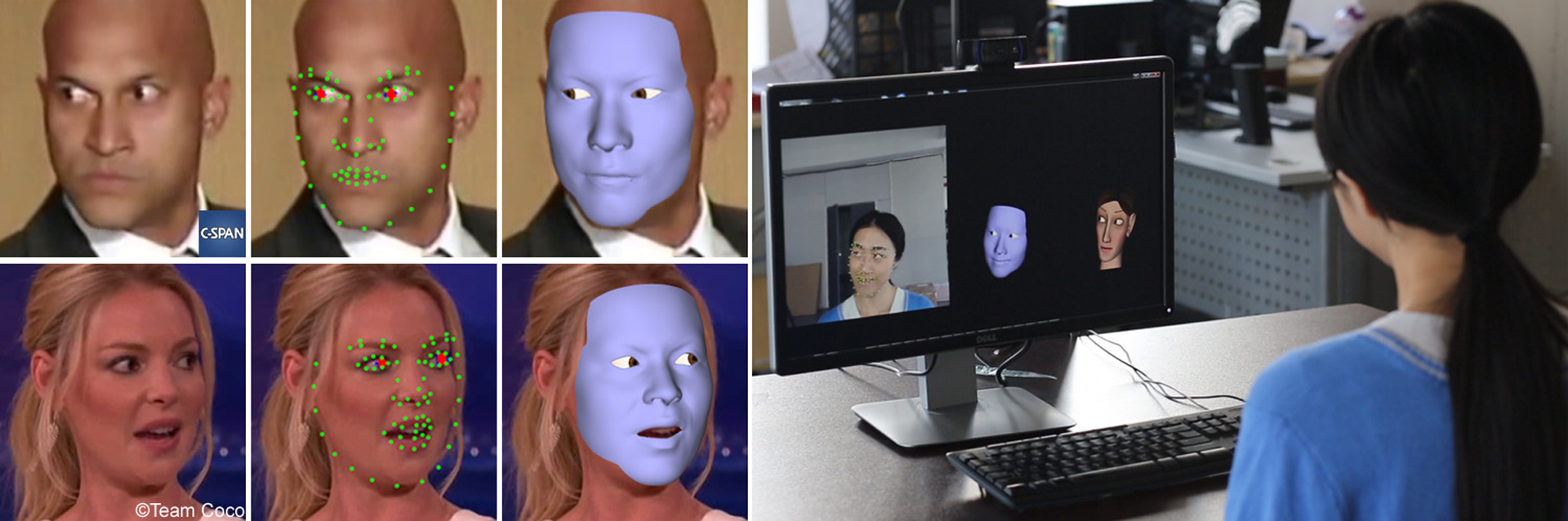

This paper presents the first realtime 3D eye gaze capture method that simultaneously captures the coordinated movement of 3D eye gaze, head pose, and facial expression deformation using a single RGB camera. Our key idea is to complement a realtime 3D facial performance capture system with an efficient 3D eye gaze tracker. We start by automatically detecting important 2D facial features in each frame. The detected features are then used to reconstruct 3D head poses and large-scale facial deformation using multilinear expression deformation models. Next, we introduce a novel user-independent classification method for extracting iris and pupil pixels in each frame. We formulate the 3D eye gaze tracker in a Maximum A Posteriori (MAP) framework, which sequentially infers the most probable 3D eye gaze state at each frame. Because the eye gaze tracker can fail when the eyes blink, we further introduce an efficient eye-close detector to improve its robustness and accuracy. We have tested our system on both live video streams and Internet videos, demonstrating its accuracy and robustness under a variety of uncontrolled lighting conditions and across individuals with significant differences in race, gender, face shape, pose, and expression.
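The abstract says gaze is inferred frame by frame in a MAP framework and that an eye-close detector guards against blinks, but it does not give the model itself. The following is a toy sketch of that general idea under our own assumptions, not the paper's method: gaze is a (yaw, pitch) pair in radians; the likelihood is Gaussian in the distance between an orthographically projected iris center (fixed eyeball center and radius in pixels) and the centroid of the pixels classified as iris/pupil; the prior is Gaussian around the previous frame's estimate; and the posterior is maximized by brute-force grid search. When a per-frame eye-closed flag is set, or no iris pixels are found, the previous state is held. All names (`EyeModel`, `map_gaze`, `track`) and parameter values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in pixels, or (yaw, pitch) in radians


@dataclass
class EyeModel:
    """Hypothetical per-eye geometry in image space (not the paper's model)."""
    center: Point   # projected eyeball center, pixels
    radius: float   # eyeball radius, pixels


def iris_centroid(pixels: Iterable[Point]) -> Optional[Point]:
    """Mean of the pixels labeled iris/pupil, or None if none were found."""
    pts = list(pixels)
    if not pts:
        return None
    n = len(pts)
    return (sum(x for x, _ in pts) / n, sum(y for _, y in pts) / n)


def project_iris(eye: EyeModel, yaw: float, pitch: float) -> Point:
    """Orthographic projection of the iris center for a gaze (yaw, pitch)."""
    dx = math.sin(yaw) * math.cos(pitch)
    dy = math.sin(pitch)
    return (eye.center[0] + eye.radius * dx, eye.center[1] + eye.radius * dy)


def map_gaze(eye: EyeModel, observed: Point, prev: Point,
             sigma_obs: float = 1.5, sigma_prior: float = 0.15,
             steps: int = 61, max_angle: float = 0.6) -> Point:
    """Grid-search argmax of log-likelihood + log-prior over (yaw, pitch)."""
    grid = [-max_angle + 2 * max_angle * k / (steps - 1) for k in range(steps)]
    best, best_score = prev, -math.inf
    for yaw in grid:
        for pitch in grid:
            px, py = project_iris(eye, yaw, pitch)
            # Gaussian likelihood: projected iris center vs. observed centroid.
            log_lik = -((px - observed[0]) ** 2 + (py - observed[1]) ** 2) / (2 * sigma_obs ** 2)
            # Gaussian temporal prior centered on the previous frame's state.
            log_prior = -((yaw - prev[0]) ** 2 + (pitch - prev[1]) ** 2) / (2 * sigma_prior ** 2)
            score = log_lik + log_prior
            if score > best_score:
                best, best_score = (yaw, pitch), score
    return best


def track(eye: EyeModel, frames: Sequence[Sequence[Point]],
          eye_closed: Sequence[bool], start: Point = (0.0, 0.0)) -> List[Point]:
    """Sequential MAP tracking; holds the last state on closed eyes or no iris pixels."""
    state = start
    states = []
    for pixels, closed in zip(frames, eye_closed):
        observed = None if closed else iris_centroid(pixels)
        if observed is not None:
            state = map_gaze(eye, observed, state)
        states.append(state)
    return states
```

Because the prior is centered on the previous estimate, a single frame is pulled toward the old state, and repeated consistent observations converge on the gaze that explains them. The exhaustive grid is used only for readability; a realtime tracker would more plausibly use a local optimizer warm-started from the previous frame.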