“Real-time HDR video reconstruction for multi-sensor systems” by Kronander, Gustavson and Unger

Conference:

Type(s):

Title:

- Real-time HDR video reconstruction for multi-sensor systems

Presenter(s)/Author(s):

- Kronander, Gustavson and Unger

Abstract:

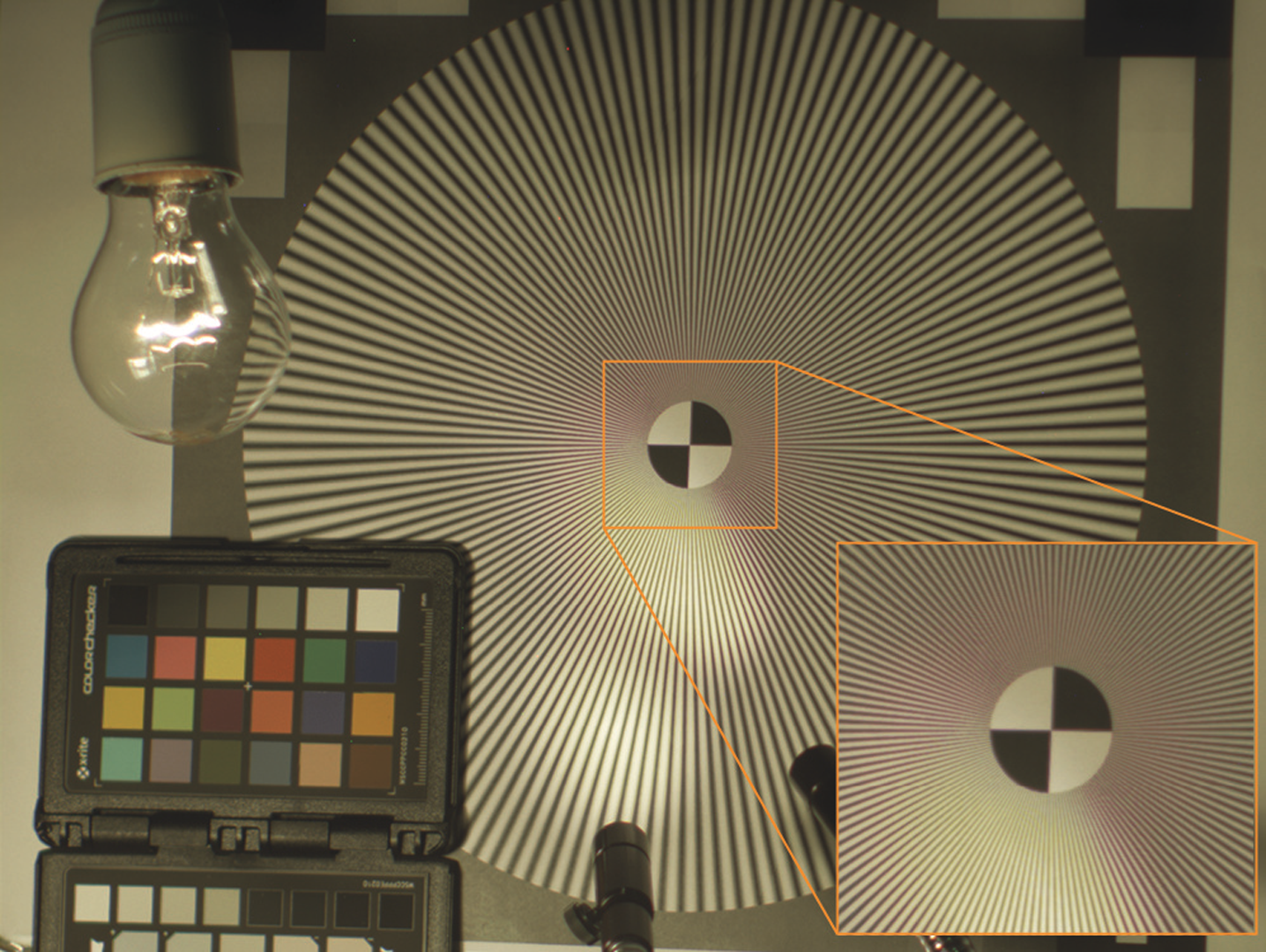

HDR video is an emerging field of technology, with a few camera systems currently in existence [Myszkowski et al. 2008]. Multi-sensor systems [Tocci et al. 2011] have recently proved particularly promising due to their superior robustness against temporal artifacts, correct motion blur, and high light efficiency. Previous HDR reconstruction methods for multi-sensor systems have assumed pixel-perfect alignment of the physical sensors. This is, however, very difficult to achieve in practice. Reflections in the beam splitters may even make it impossible to match the arrangement of the Bayer filters between sensors. We therefore present a novel reconstruction method specifically designed to handle non-negligible misalignments between the sensors. Furthermore, while previous reconstruction techniques have treated HDR assembly, debayering and denoising as separate problems, our method performs HDR assembly, debayering and smoothing of the data (denoising) simultaneously. The method is also general in that it allows reconstruction to an arbitrary output resolution and mapping. The algorithm is implemented in CUDA and achieves video-rate performance on an experimental HDR video platform consisting of four high-quality 2336×1756-pixel CCD sensors imaging the scene through a common optical system. ND filters of different densities are placed in front of the sensors to capture a dynamic range of 24 f-stops.
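To make the idea of joint HDR assembly, debayering and smoothing concrete, the following is a minimal Python sketch and not the authors' CUDA implementation. It assumes that every raw sample from every sensor has already been mapped into the output coordinate frame, that each sample carries a known relative exposure `gain` (for example, the ND filter transmittance), and that a plain Gaussian-weighted average is an acceptable stand-in for whatever local filter the actual method uses. The function name `reconstruct_pixel` and all of its parameters are hypothetical.

```python
import numpy as np

def reconstruct_pixel(xs, ys, values, gains, channels,
                      out_x, out_y, sigma=1.0, sat=0.95):
    """Estimate an RGB radiance triple at (out_x, out_y).

    xs, ys   : sample positions, already mapped to output coordinates
               (assumption: the sensor-to-output mapping is known)
    values   : raw sensor readings normalised to [0, 1]
    gains    : relative exposure of each sample (e.g. ND transmittance)
    channels : Bayer colour index of each sample (0 = R, 1 = G, 2 = B)
    """
    xs, ys, values, gains, channels = map(
        np.asarray, (xs, ys, values, gains, channels))

    # HDR assembly: bring every sample to a common radiance scale.
    radiance = values / gains

    # Smoothing: Gaussian weight by distance to the output location.
    d2 = (xs - out_x) ** 2 + (ys - out_y) ** 2
    weights = np.exp(-0.5 * d2 / sigma ** 2)

    # Saturated samples carry no usable radiance information.
    weights = weights * (values < sat)

    # Debayering: average each colour channel from its own samples only.
    rgb = np.full(3, np.nan)
    for c in range(3):
        w = weights[channels == c]
        if w.sum() > 0:
            rgb[c] = np.sum(w * radiance[channels == c]) / w.sum()
    return rgb
```

Because the samples are treated as scattered points rather than as aligned pixel grids, misaligned sensors and an arbitrary output resolution pose no special difficulty in this sketch: the output location `(out_x, out_y)` can be placed anywhere. A channel with no unsaturated samples nearby is returned as `nan`.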

References:

1. Myszkowski, K., Mantiuk, R., and Krawczyk, G. 2008. High Dynamic Range Video. Morgan & Claypool.

2. Tocci, M. D., Kiser, C., Tocci, N., and Sen, P. 2011. A Versatile HDR Video Production System. ACM Transactions on Graphics (TOG) (Proceedings of SIGGRAPH 2011) 30, 4.