“Rapid Face Asset Acquisition with Recurrent Feature Alignment” by Liu, Cai, Chen, Zhou and Zhao

Conference:

Type(s):

Title:

- Rapid Face Asset Acquisition with Recurrent Feature Alignment

Session/Category Title:

- Faces and Avatars

Presenter(s)/Author(s):

Abstract:

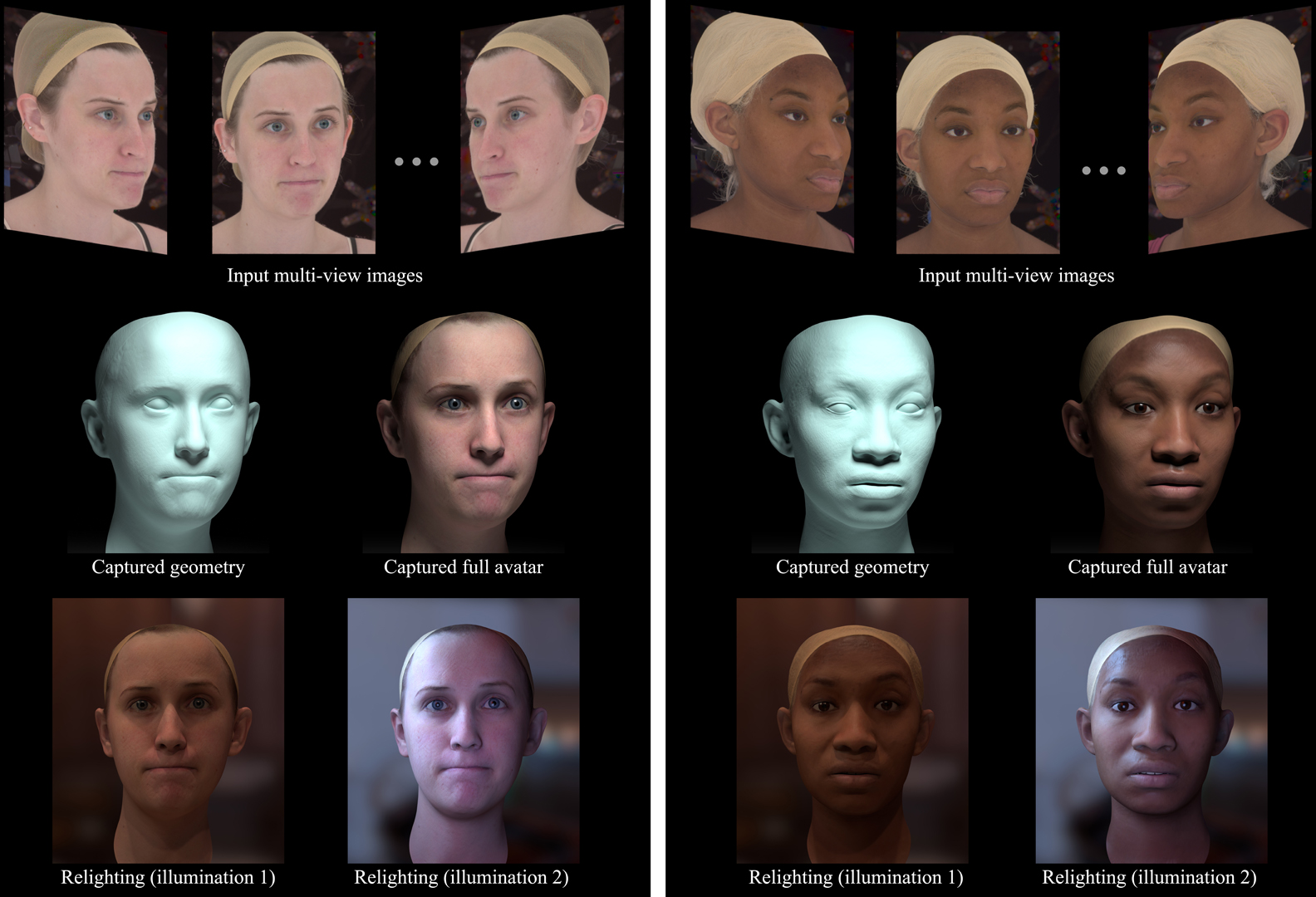

We present Recurrent Feature Alignment (ReFA), an end-to-end neural network for the very rapid creation of production-grade face assets from multi-view images. ReFA is on par with the industrial pipelines in quality for producing accurate, complete, registered, and textured assets directly applicable to physically-based rendering, but produces the asset end-to-end, fully automatically at a significantly faster speed at 4.5 FPS, which is unprecedented among neural-based techniques. Our method represents face geometry as a position map in the UV space. The network first extracts per-pixel features in both the multi-view image space and the UV space. A recurrent module then iteratively optimizes the geometry by projecting the image-space features to the UV space and comparing them with a reference UV-space feature. The optimized geometry then provides pixel-aligned signals for the inference of high-resolution textures. Experiments have validated that ReFA achieves a median error of 0.603mm in geometry reconstruction, is robust to extreme pose and expression, and excels in sparse-view settings. We believe that the progress achieved by our network enables lightweight, fast face assets acquisition that significantly boosts the downstream applications, such as avatar creation and facial performance capture. It will also enable massive database capturing for deep learning purposes.

References:

1. Jens Ackermann and Michael Goesele. 2015. A survey of photometric stereo techniques. Foundations and Trends® in Computer Graphics and Vision 9, 3–4 (2015), 149–254.

2. Brian Amberg, Reinhard Knothe, and Thomas Vetter. 2008. Expression invariant 3D face recognition with a morphable model. In 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition. IEEE, 1–6.

3. Ziqian Bai, Zhaopeng Cui, Jamal Ahmed Rahim, Xiaoming Liu, and Ping Tan. 2020. Deep facial non-rigid multi-view stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5850–5860.

4. Anil Bas and William AP Smith. 2019. What does 2D geometric information really tell us about 3D face shape? International Journal of Computer Vision 127, 10 (2019), 1455–1473.

5. Thabo Beeler, Bernd Bickel, Paul A. Beardsley, Bob Sumner, and Markus H. Gross. 2010. High-quality single-shot capture of facial geometry. In ACM Transactions on Graphics (TOG).

6. Thabo Beeler, Fabian Hahn, Derek Bradley, Bernd Bickel, Paul Beardsley, Craig Gotsman, Robert W Sumner, and Markus Gross. 2011. High-quality passive facial performance capture using anchor frames. In ACM SIGGRAPH 2011 papers. 1–10.

7. Volker Blanz and Thomas Vetter. 1999. A morphable model for the synthesis of 3D faces. In Proceedings of the 26th annual conference on Computer graphics and interactive techniques. 187–194.

8. Volker Blanz and Thomas Vetter. 2003. Face recognition based on fitting a 3d morphable model. IEEE Transactions on pattern analysis and machine intelligence 25, 9 (2003), 1063–1074.

9. Michael Bleyer, Christoph Rhemann, and Carsten Rother. 2011. Patchmatch stereo-stereo matching with slanted support windows.. In Bmvc, Vol. 11. 1–11.

10. Timo Bolkart and Stefanie Wuhrer. 2015. A groupwise multilinear correspondence optimization for 3d faces. In Proceedings of the IEEE international conference on computer vision. 3604–3612.

11. George Borshukov, Dan Piponi, Oystein Larsen, John P Lewis, and Christina Tempelaar-Lietz. 2005. Universal capture-image-based facial animation for” The Matrix Reloaded”. In ACM Siggraph 2005 Courses. 16–es.

12. Jia-Ren Chang and Yong-Sheng Chen. 2018. Pyramid stereo matching network. In Proceedings of the IEEE conference on computer vision and pattern recognition. 5410–5418.

13. Anpei Chen, Zhang Chen, Guli Zhang, Kenny Mitchell, and Jingyi Yu. 2019. Photorealistic facial details synthesis from single image. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 9429–9439.

14. Kyunghyun Cho, Bart van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. 2014. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics.

15. Paul Debevec, Tim Hawkins, Chris Tchou, Haarm-Pieter Duiker, Westley Sarokin, and Mark Sagar. 2000. Acquiring the reflectance field of a human face. In Proceedings of the 27th annual conference on Computer graphics and interactive techniques. 145–156.

16. Paul Ekman and Wallace V. Friesen. 1978. Facial action coding system: a technique for the measurement of facial movement. In Consulting Psychologists Press.

17. Yao Feng, Haiwen Feng, Michael J Black, and Timo Bolkart. 2021. Learning an animatable detailed 3D face model from in-the-wild images. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–13.

18. Yao Feng, Fan Wu, Xiaohu Shao, Yanfeng Wang, and Xi Zhou. 2018. Joint 3d face reconstruction and dense alignment with position map regression network. In Proceedings of the European Conference on Computer Vision (ECCV). 534–551.

19. Graham Fyffe, Koki Nagano, Loc Huynh, Shunsuke Saito, Jay Busch, Andrew Jones, Hao Li, and Paul Debevec. 2017. Multi-View Stereo on Consistent Face Topology. In Computer Graphics Forum, Vol. 36. Wiley Online Library, 295–309.

20. David Gallup, Jan-Michael Frahm, Philippos Mordohai, Qingxiong Yang, and Marc Pollefeys. 2007. Real-time plane-sweeping stereo with multiple sweeping directions. In 2007 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 1–8.

21. Pablo Garrido, Michael Zollhöfer, Dan Casas, Levi Valgaerts, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2016. Reconstruction of personalized 3D face rigs from monocular video. ACM Transactions on Graphics (TOG) 35, 3 (2016), 1–15.

22. Kyle Genova, Forrester Cole, Aaron Maschinot, Aaron Sarna, Daniel Vlasic, and William T Freeman. 2018. Unsupervised training for 3d morphable model regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 8377–8386.

23. Abhijeet Ghosh, Graham Fyffe, Borom Tunwattanapong, Jay Busch, Xueming Yu, and Paul Debevec. 2011a. Multiview face capture using polarized spherical gradient illumination. In Proceedings of the 2011 SIGGRAPH Asia Conference. 1–10.

24. Abhijeet Ghosh, Graham Fyffe, Borom Tunwattanapong, Jay Busch, Xueming Yu, and Paul E. Debevec. 2011b. Multiview face capture using polarized spherical gradient illumination. ACM Transactions on Graphics (TOG) 30 (2011), 129.

25. Paul Graham, Borom Tunwattanapong, Jay Busch, Xueming Yu, Andrew Jones, Paul Debevec, and Abhijeet Ghosh. 2013. Measurement-based synthesis of facial micro-geometry. In Computer Graphics Forum, Vol. 32. Wiley Online Library, 335–344.

26. Xiaodong Gu, Zhiwen Fan, Siyu Zhu, Zuozhuo Dai, Feitong Tan, and Ping Tan. 2020. Cascade cost volume for high-resolution multi-view stereo and stereo matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2495–2504.

27. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

28. Po-Han Huang, Kevin Matzen, Johannes Kopf, Narendra Ahuja, and Jia-Bin Huang. 2018. Deepmvs: Learning multi-view stereopsis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2821–2830.

29. Sunghoon Im, Hae-Gon Jeon, Stephen Lin, and In So Kweon. 2018. DPSNet: End-to-end Deep Plane Sweep Stereo. In International Conference on Learning Representations.

30. Sing Bing Kang, Richard Szeliski, and Jinxiang Chai. 2001. Handling occlusions in dense multi-view stereo. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Vol. 1. IEEE, I-I.

31. Andor Kollar. 2019. Realistic Human Eye. http://kollarandor.com/gallery/3d-human-eye/. Online; Accessed: 2022-3-30.

32. Hamid Laga, Laurent Valentin Jospin, Farid Boussaid, and Mohammed Bennamoun. 2020. A survey on deep learning techniques for stereo-based depth estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence (2020).

33. Alexandros Lattas, Stylianos Moschoglou, Baris Gecer, Stylianos Ploumpis, Vasileios Triantafyllou, Abhijeet Ghosh, and Stefanos Zafeiriou. 2020. AvatarMe: Realistically Renderable 3D Facial Reconstruction” In-the-Wild”. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 760–769.

34. Chloe LeGendre, Kalle Bladin, Bipin Kishore, Xinglei Ren, Xueming Yu, and Paul Debevec. 2018. Efficient multispectral facial capture with monochrome cameras. In Color and Imaging Conference.

35. Martin D Levine and Yingfeng Chris Yu. 2009. State-of-the-art of 3D facial reconstruction methods for face recognition based on a single 2D training image per person. Pattern Recognition Letters 30, 10 (2009), 908–913.

36. Hao Li, Bart Adams, Leonidas J Guibas, and Mark Pauly. 2009. Robust single-view geometry and motion reconstruction. ACM Transactions on Graphics (ToG) 28, 5 (2009), 1–10.

37. Jiaman Li, Zhengfei Kuang, Yajie Zhao, Mingming He, Karl Bladin, and Hao Li. 2020b. Dynamic facial asset and rig generation from a single scan. ACM Trans. Graph. 39, 6 (2020), 215–1.

38. Ruilong Li, Karl Bladin, Yajie Zhao, Chinmay Chinara, Owen Ingraham, Pengda Xiang, Xinglei Ren, Pratusha Prasad, Bipin Kishore, Jun Xing, et al. 2020a. Learning formation of physically-based face attributes. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 3410–3419.

39. Tianye Li, Shichen Liu, Timo Bolkart, Jiayi Liu, Hao Li, and Yajie Zhao. 2021. Topologically Consistent Multi-View Face Inference Using Volumetric Sampling. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 3824–3834.

40. Shichen Liu, Yichao Zhou, and Yajie Zhao. 2021. Vapid: A rapid vanishing point detector via learned optimizers. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 12859–12868.

41. Wan-Chun Ma, Tim Hawkins, Pieter Peers, Charles-Félix Chabert, Malte Weiss, and Paul E. Debevec. 2007. Rapid Acquisition of Specular and Diffuse Normal Maps from Polarized Spherical Gradient Illumination. In Rendering Techniques.

42. Wan-Chun Ma, Andrew Jones, Tim Hawkins, Jen-Yuan Chiang, and Paul Debevec. 2008. A high-resolution geometry capture system for facial performance. In ACM SIGGRAPH 2008 talks. 1–1.

43. Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller. 2022. Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. ACM Trans. Graph. 41, 4, Article 102 (July 2022), 15 pages.

44. Alejandro Newell, Kaiyu Yang, and Jia Deng. 2016. Stacked hourglass networks for human pose estimation. In European conference on computer vision. Springer, 483–499.

45. Elad Richardson, Matan Sela, and Ron Kimmel. 2016. 3D face reconstruction by learning from synthetic data. In 2016 fourth international conference on 3D vision (3DV). IEEE, 460–469.

46. Elad Richardson, Matan Sela, Roy Or-El, and Ron Kimmel. 2017. Learning detailed face reconstruction from a single image. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1259–1268.

47. Soubhik Sanyal, Timo Bolkart, Haiwen Feng, and Michael J Black. 2019. Learning to regress 3D face shape and expression from an image without 3D supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7763–7772.

48. Johannes L Schönberger, Enliang Zheng, Jan-Michael Frahm, and Marc Pollefeys. 2016. Pixelwise view selection for unstructured multi-view stereo. In European Conference on Computer Vision. Springer, 501–518.

49. Olga Sorkine, Daniel Cohen-Or, Yaron Lipman, Marc Alexa, Christian Rössl, and H-P Seidel. 2004. Laplacian surface editing. In Proceedings of the 2004 Eurographics/ACM SIGGRAPH symposium on Geometry processing. 175–184.

50. Christoph Strecha, Rik Fransens, and Luc Van Gool. 2006. Combined depth and outlier estimation in multi-view stereo. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), Vol. 2. IEEE, 2394–2401.

51. Zachary Teed and Jia Deng. 2020. Raft: Recurrent all-pairs field transforms for optical flow. In European conference on computer vision. Springer, 402–419.

52. Ayush Tewari, Michael Zollhöfer, Pablo Garrido, Florian Bernard, Hyeongwoo Kim, Patrick Pérez, and Christian Theobalt. 2018. Self-supervised multi-level face model learning for monocular reconstruction at over 250 hz. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2549–2559.

53. Ayush Tewari, Michael Zollhofer, Hyeongwoo Kim, Pablo Garrido, Florian Bernard, Patrick Perez, and Christian Theobalt. 2017. Mofa: Model-based deep convolutional face autoencoder for unsupervised monocular reconstruction. In Proceedings of the IEEE International Conference on Computer Vision Workshops. 1274–1283.

54. Justus Thies, Michael Zollhofer, Marc Stamminger, Christian Theobalt, and Matthias Nießner. 2016. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE conference on computer vision and pattern recognition. 2387–2395.

55. Triplegangers. 2021. Triplegangers Face Models. https://triplegangers.com/. Online; Accessed: 2021-12-05.

56. Laurens Van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-SNE. Journal of machine learning research 9, 11 (2008).

57. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018a. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE conference on computer vision and pattern recognition. 8798–8807.

58. Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Yu Qiao, and Chen Change Loy. 2018b. ESRGAN: Enhanced super-resolution generative adversarial networks. In The European Conference on Computer Vision Workshops (ECCVW).

59. Shugo Yamaguchi, Shunsuke Saito, Koki Nagano, Yajie Zhao, Weikai Chen, Kyle Olszewski, Shigeo Morishima, and Hao Li. 2018. High-fidelity facial reflectance and geometry inference from an unconstrained image. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–14.

60. Haotian Yang, Hao Zhu, Yanru Wang, Mingkai Huang, Qiu Shen, Ruigang Yang, and Xun Cao. 2020. Facescape: a large-scale high quality 3d face dataset and detailed riggable 3d face prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 601–610.

61. Yao Yao, Zixin Luo, Shiwei Li, Tianwei Shen, Tian Fang, and Long Quan. 2019. Recurrent mvsnet for high-resolution multi-view stereo depth inference. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 5525–5534.

62. Chao Zhang, William AP Smith, Arnaud Dessein, Nick Pears, and Hang Dai. 2016. Functional faces: Groupwise dense correspondence using functional maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5033–5041.