“Perceptual-Based CNN Model for Watercolor Mixing Prediction” by Huang, Chen and Ouhyoung

Conference:

Type(s):

Title:

- Perceptual-Based CNN Model for Watercolor Mixing Prediction

Presenter(s)/Author(s):

Entry Number:

- 14

Abstract:

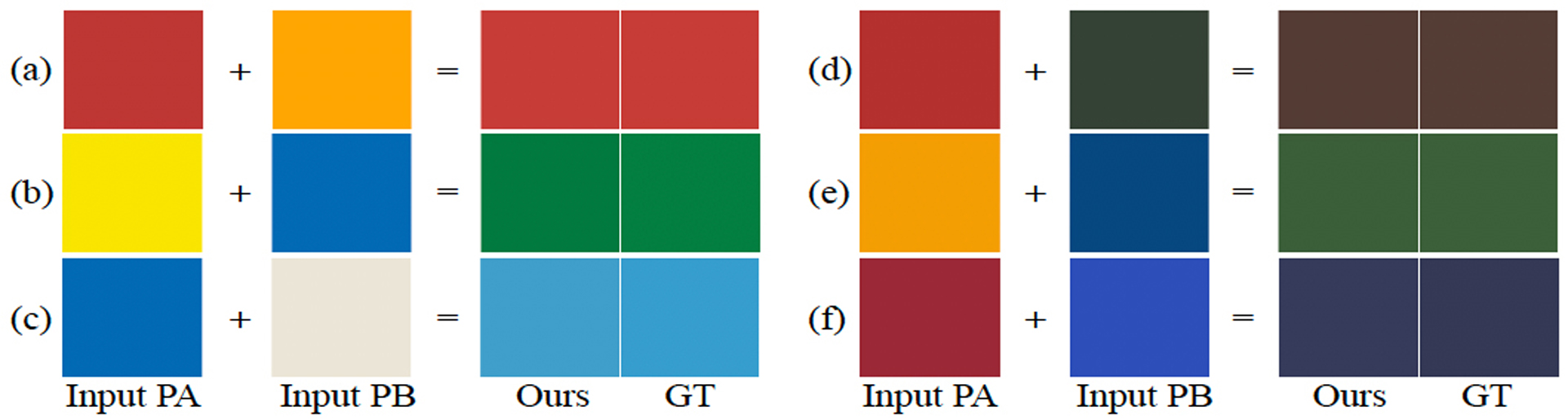

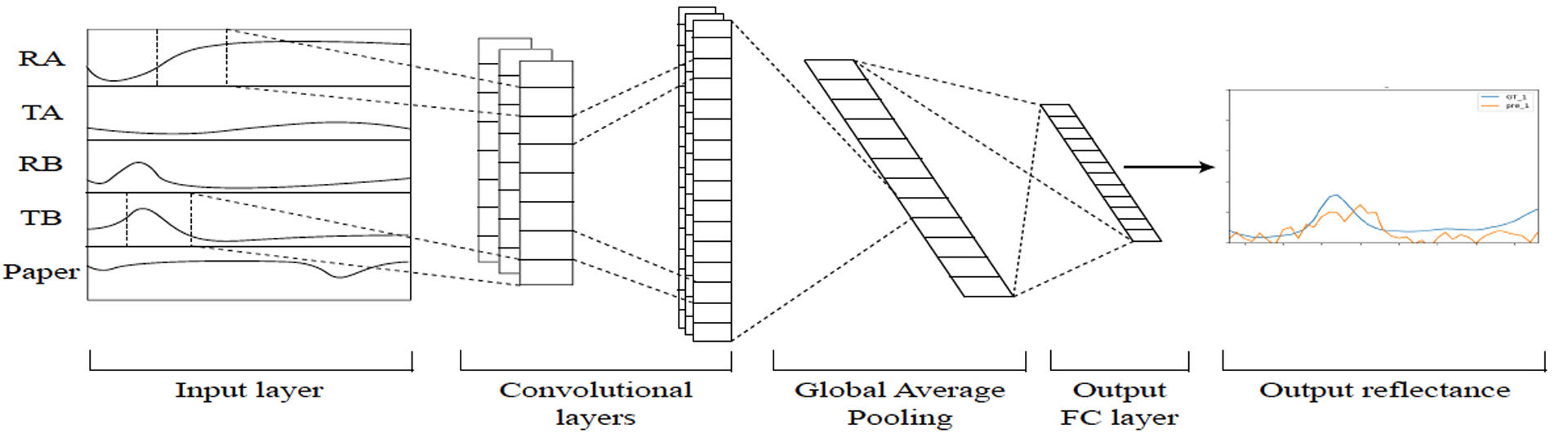

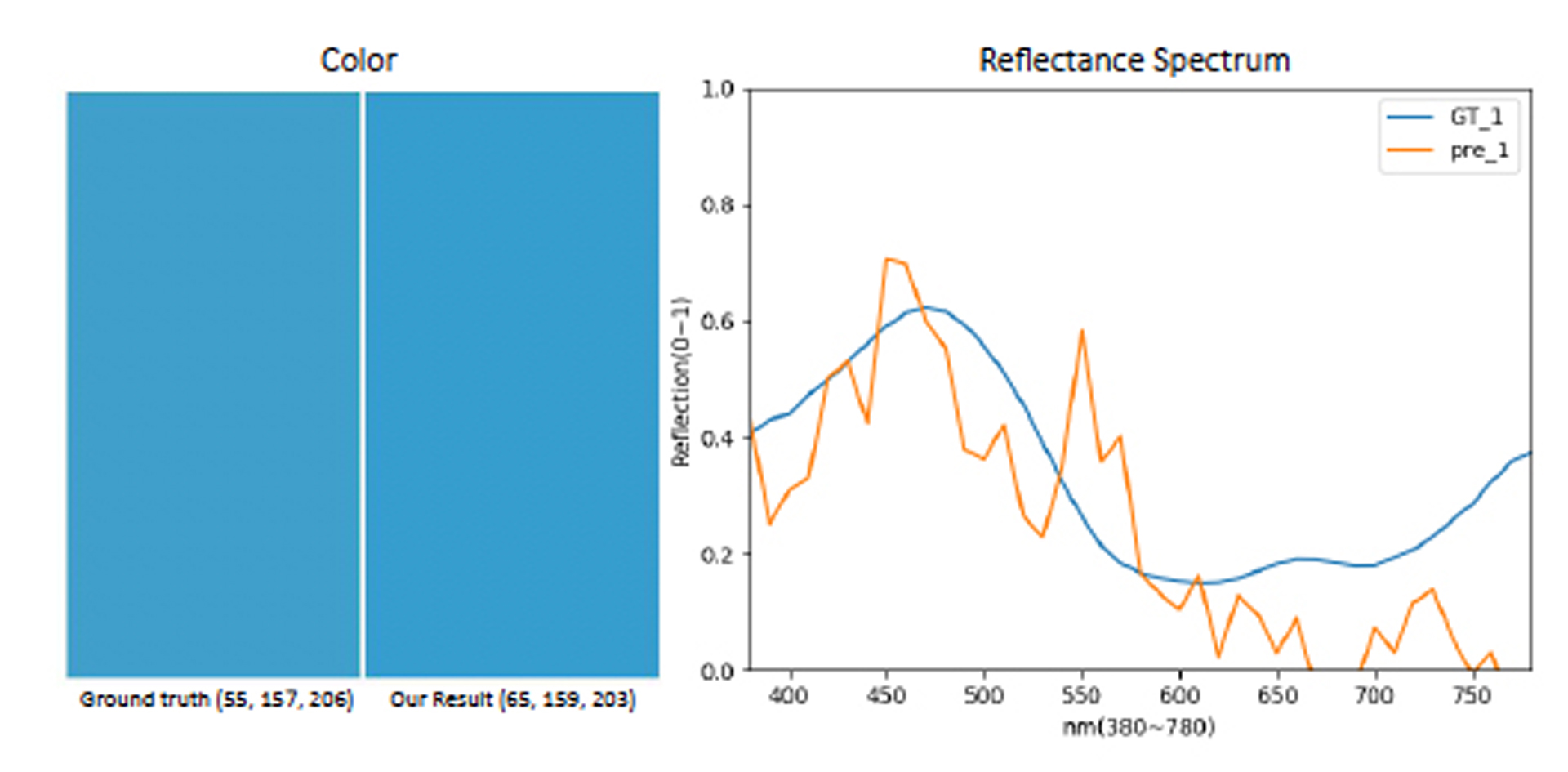

In this poster, we propose a model that predicts the mixture of watercolor pigments using a convolutional neural network (CNN). Using a watercolor dataset, we train the model to minimize a loss function based on sRGB differences. Measured by the color difference ∆ELab, 88.7% of the test-set predictions have ∆ELab < 5, a difference that is not easily detected by the human eye. We also observe an interesting phenomenon: even when the reflectance curve of the predicted color is not as smooth as the ground-truth curve, the resulting RGB color is still close to the ground truth.
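The evaluation metric described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that ∆ELab refers to the CIE76 formula (Euclidean distance in CIELAB), that sRGB values are floats in [0, 1], and that the reference white is D65. The function names `srgb_to_lab`, `delta_e_lab`, and `fraction_below` are hypothetical.

```python
import math

# D65 reference white (an assumption; the poster does not state the illuminant).
WHITE_D65 = (0.95047, 1.0, 1.08883)


def _linearize(c):
    """Undo the sRGB transfer curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """Piecewise cube-root function used in the CIELAB definition."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(rgb):
    """Convert an sRGB triple in [0, 1] to CIELAB (L*, a*, b*)."""
    r, g, b = (_linearize(c) for c in rgb)
    # Linear sRGB to CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE_D65))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e_lab(rgb_a, rgb_b):
    """CIE76 color difference between two sRGB colors (assumed metric)."""
    return math.dist(srgb_to_lab(rgb_a), srgb_to_lab(rgb_b))


def fraction_below(pairs, threshold=5.0):
    """Share of (predicted, ground_truth) sRGB pairs with Delta E below threshold."""
    hits = sum(delta_e_lab(pred, truth) < threshold for pred, truth in pairs)
    return hits / len(pairs)
```

Calling `fraction_below` on a list of (predicted, ground-truth) sRGB pairs from a test set would yield the kind of statistic reported in the abstract, under the assumptions stated above.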

References:

- William Baxter, Jeremy Wendt, and Ming C Lin. 2004. IMPaSTo: a realistic, interactive model for paint. In Proc. of the 3rd international symposium on Non-photorealistic animation and rendering. ACM, 45–148.

- Mei-Yun Chen, Ci-Syuan Yang, and Ming Ouhyoung. 2018. A Smart Palette for Helping Novice Painters to Mix Physical Watercolor Pigments. In Proc. of EuroGraphics 2018, Posters, Eakta Jain and Jiří Kosinka (Eds.). The Eurographics Association, April 2018. https://doi.org/10.2312/egp.20181008

- Per Edström. 2007. Examination of the revised Kubelka-Munk theory: considerations of modeling strategies. JOSA A 24, 2 (2007), 548–556.

- Chet S Haase and Gary W Meyer. 1992. Modeling pigmented materials for realistic image synthesis. ACM Transactions on Graphics (TOG) 11, 4 (1992), 305–335.

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proc. of the IEEE conference on computer vision and pattern recognition. 770–778.

- Paul Kubelka. 1948. New contributions to the optics of intensely light-scattering materials. Part I. JOSA 38, 5 (1948), 448–457.

Keyword(s):

Acknowledgements:

This project was partially supported by MediaTek Inc. under Grant No. MTKC-2018-0167, and by the Ministry of Science and Technology (MOST), Taiwan, under Grant Nos. 106-3114-E-002-012 and 105-2221-E-002-128-MY2.