“Neural style-preserving visual dubbing” by Kim, Elgharib, Zollhöfer, Seidel, Beeler, et al. …

Conference:

Type(s):

Title:

- Neural style-preserving visual dubbing

Session/Category Title:

- Learning from Video

Presenter(s)/Author(s):

- HyeongWoo Kim

- Mohamed Elgharib

- Michael Zollhöfer

- Hans-Peter Seidel

- Thabo Beeler

- Christian Richardt

- Christian Theobalt

Moderator(s):

Abstract:

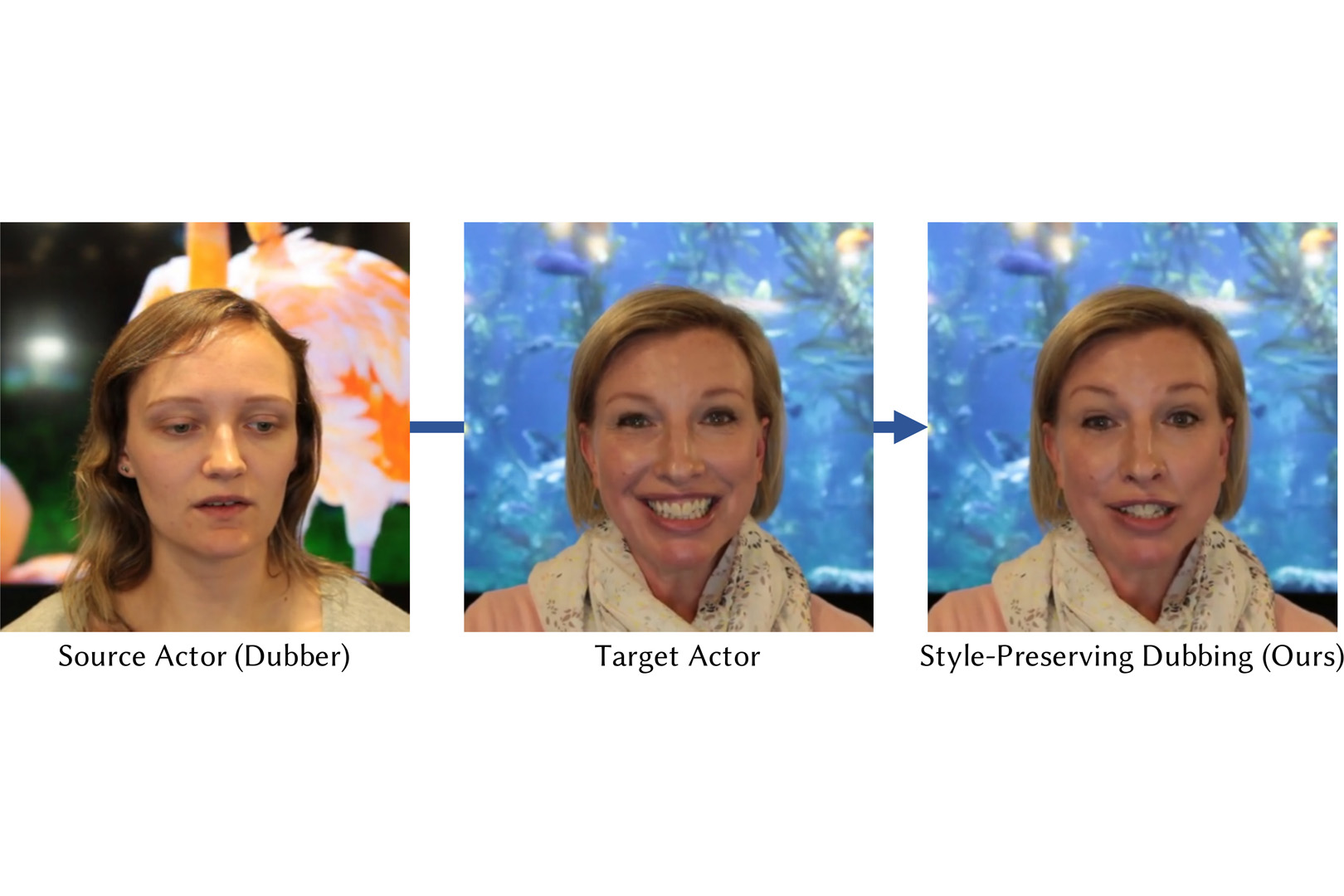

Dubbing is a technique for translating video content from one language to another. However, state-of-the-art visual dubbing techniques directly copy facial expressions from source to target actors without considering identity-specific idiosyncrasies such as a unique type of smile. We present a style-preserving visual dubbing approach from single video inputs, which maintains the signature style of target actors when modifying facial expressions, including mouth motions, to match foreign languages. At the heart of our approach is the concept of motion style, in particular for facial expressions, i.e., the person-specific expression change that is yet another essential factor beyond visual accuracy in face editing applications. Our method is based on a recurrent generative adversarial network that captures the spatiotemporal co-activation of facial expressions, and enables generating and modifying the facial expressions of the target actor while preserving their style. We train our model with unsynchronized source and target videos in an unsupervised manner using cycle-consistency and mouth expression losses, and synthesize photorealistic video frames using a layered neural face renderer. Our approach generates temporally coherent results, and handles dynamic backgrounds. Our results show that our dubbing approach maintains the idiosyncratic style of the target actor better than previous approaches, even for widely differing source and target actors.

References:

1. Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S. Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, Sanjay Ghemawat, Ian Goodfellow, Andrew Harp, Geoffrey Irving, Michael Isard, Yangqing Jia, Rafal Jozefowicz, Lukasz Kaiser, Manjunath Kudlur, Josh Levenberg, Dan Mané, Rajat Monga, Sherry Moore, Derek Murray, Chris Olah, Mike Schuster, Jonathon Shlens, Benoit Steiner, Ilya Sutskever, Kunal Talwar, Paul Tucker, Vincent Vanhoucke, Vijay Vasudevan, Fernanda Viégas, Oriol Vinyals, Pete Warden, Martin Wattenberg, Martin Wicke, Yuan Yu, and Xiaoqiang Zheng. 2015. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. https://www.tensorflow.org/ Software available from tensorflow.org.Google Scholar

2. Oleg Alexander, Mike Rogers, William Lambeth, Jen-Yuan Chiang, Wan-Chun Ma, Chuan-Chang Wang, and Paul Debevec. 2010. The Digital Emily Project: Achieving a Photorealistic Digital Actor. IEEE Computer Graphics and Applications 30, 4 (July/August 2010), 20–31. Google ScholarDigital Library

3. Volker Blanz, Curzio Basso, Tomaso Poggio, and Thomas Vetter. 2003. Reanimating Faces in Images and Video. Computer Graphics Forum 22, 3 (September 2003), 641–650. Google ScholarCross Ref

4. Volker Blanz and Thomas Vetter. 1999. A Morphable Model for the Synthesis of 3D Faces. In SIGGRAPH. 187–194. Google ScholarDigital Library

5. Matthew Brand. 1999. Voice Puppetry. In SIGGRAPH. 21–28. Google ScholarDigital Library

6. Chen Cao, Yanlin Weng, Shun Zhou, Yiying Tong, and Kun Zhou. 2014. FaceWarehouse: A 3D Facial Expression Database for Visual Computing. IEEE Transactions on Visualization and Computer Graphics 20, 3 (March 2014), 413–425. Google ScholarDigital Library

7. Young-Woon Cha, True Price, Zhen Wei, Xinran Lu, Nicholas Rewkowski, Rohan Chabra, Zihe Qin, Hyounghun Kim, Zhaoqi Su, Yebin Liu, Adrian Ilie, Andrei State, Zhenlin Xu, Jan-Michael Frahm, and Henry Fuchs. 2018. Towards Fully Mobile 3D Face, Body, and Environment Capture Using Only Head-worn Cameras. IEEE Transactions on Visualization and Computer Graphics 24, 11 (November 2018), 2993–3004. Google ScholarCross Ref

8. Joon Son Chung, Amir Jamaludin, and Andrew Zisserman. 2017. You said that?. In British Machine Vision Conference (BMVC).Google Scholar

9. Zhigang Deng and Ulrich Neumann. 2006. eFASE: Expressive Facial Animation Synthesis and Editing with Phoneme-isomap Controls. In Symposium on Computer Animation (SCA). 251–260.Google Scholar

10. Pablo Garrido, Levi Valgaerts, Hamid Sarmadi, Ingmar Steiner, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2015. VDub: Modifying Face Video of Actors for Plausible Visual Alignment to a Dubbed Audio Track. Computer Graphics Forum 34, 2 (May 2015), 193–204. Google ScholarDigital Library

11. Pablo Garrido, Michael Zollhöfer, Dan Casas, Levi Valgaerts, Kiran Varanasi, Patrick Pérez, and Christian Theobalt. 2016. Reconstruction of Personalized 3D Face Rigs from Monocular Video. ACM Transactions on Graphics 35, 3 (June 2016), 28:1–15. Google ScholarDigital Library

12. Jiahao Geng, Tianjia Shao, Youyi Zheng, Yanlin Weng, and Kun Zhou. 2018. Warp-guided GANs for Single-photo Facial Animation. ACM Transactions on Graphics 37, 6 (November 2018), 231:1–12. Google ScholarDigital Library

13. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep Residual Learning for Image Recognition. In Conference on Computer Vision and Pattern Recognition (CVPR). 770–778. Google ScholarCross Ref

14. Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long Short-Term Memory. Neural Computation 9, 8 (November 1997), 1735–1780. Google ScholarDigital Library

15. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In Conference on Computer Vision and Pattern Recognition (CVPR). 5967–5976. Google ScholarCross Ref

16. Tero Karras, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen. 2017. Audio-driven Facial Animation by Joint End-to-end Learning of Pose and Emotion. ACM Transactions on Graphics 36, 4 (July 2017), 94:1–12. Google ScholarDigital Library

17. Hyeongwoo Kim, Pablo Garrido, Ayush Tewari, Weipeng Xu, Justus Thies, Matthias Nießner, Patrick Pérez, Christian Richardt, Michael Zollhöfer, and Christian Theobalt. 2018. Deep Video Portraits. ACM Transactions on Graphics 37, 4 (August 2018), 163:1–14. Google ScholarDigital Library

18. Taeksoo Kim, Moonsu Cha, Hyunsoo Kim, Jung Kwon Lee, and Jiwon Kim. 2017. Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. In International Conference on Machine Learning (ICML). https://arxiv.org/abs/1703.05192Google Scholar

19. Diederik P. Kingma and Jimmy Ba. 2015. Adam: A Method for Stochastic Optimization. In International Conference on Learning Representations (ICLR).Google Scholar

20. Sumedha Kshirsagar and Nadia Magnenat-Thalmann. 2003. Visyllable Based Speech Animation. Computer Graphics Forum 22, 3 (September 2003), 631–639. Google ScholarCross Ref

21. Bertrand Le Goff, Thierry Guiard-Marigny, Michael M. Cohen, and Christian Benoît. 1994. Real-time analysis-synthesis and intelligibility of talking faces. In SSW. https://www.isca-speech.org/archive_open/ssw2/ssw2_053.htmlGoogle Scholar

22. Ming-Yu Liu, Thomas Breuel, and Jan Kautz. 2017. Unsupervised Image-to-Image Translation Networks. In Advances in Neural Information Processing Systems (NIPS). https://github.com/mingyuliutw/unitGoogle Scholar

23. Jiyong Ma, Ron Cole, Bryan Pellom, Wayne Ward, and Barbara Wise. 2006. Accurate visible speech synthesis based on concatenating variable length motion capture data. IEEE Transactions on Visualization and Computer Graphics 12, 2 (March 2006), 266–276. Google ScholarDigital Library

24. Luming Ma and Zhigang Deng. 2019. Real-Time Facial Expression Transformation for Monocular RGB Video. Computer Graphics Forum 38, 1 (2019), 470–481. Google ScholarCross Ref

25. Koki Nagano, Jaewoo Seo, Jun Xing, Lingyu Wei, Zimo Li, Shunsuke Saito, Aviral Agarwal, Jens Fursund, and Hao Li. 2018. paGAN: Real-time Avatars Using Dynamic Textures. ACM Transactions on Graphics 37, 6 (November 2018), 258:1–12. Google ScholarDigital Library

26. Elmer Owens and Barbara Blazek. 1985. Visemes observed by hearing-impaired and normal-hearing adult viewers. Journal of Speech, Language, and Hearing Research 28, 3 (September 1985), 381–393. Google ScholarCross Ref

27. Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. 2013. On the difficulty of training Recurrent Neural Networks. In International Conference on Machine Learning (ICML). https://arxiv.org/abs/1211.5063Google Scholar

28. Hai X. Pham, Samuel Cheung, and Vladimir Pavlovic. 2017. Speech-Driven 3D Facial Animation With Implicit Emotional Awareness: A Deep Learning Approach. In CVPR Workshops. Google ScholarCross Ref

29. Frédéric Pighin, Jamie Hecker, Dani Lischinski, Richard Szeliski, and David H. Salesin. 1998. Synthesizing Realistic Facial Expressions from Photographs. In SIGGRAPH. 75–84. Google ScholarDigital Library

30. Jason M. Saragih, Simon Lucey, and Jeffrey F. Cohn. 2011. Deformable Model Fitting by Regularized Landmark Mean-Shift. International Journal of Computer Vision 91, 2 (2011), 200–215. Google ScholarDigital Library

31. Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. 2017. Synthesizing Obama: Learning Lip Sync from Audio. ACM Transactions on Graphics 36, 4 (July 2017), 95:1–13. Google ScholarDigital Library

32. Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, and Iain Matthews. 2017. A Deep Learning Approach for Generalized Speech Animation. ACM Transactions on Graphics 36, 4 (July 2017), 93:1–11. Google ScholarDigital Library

33. Sarah L. Taylor, Moshe Mahler, Barry-John Theobald, and Iain Matthews. 2012. Dynamic Units of Visual Speech. In Symposium on Computer Animation (SCA). 275–284.Google Scholar

34. Justus Thies, Michael Zollhöfer, Marc Stamminger, Christian Theobalt, and Matthias Nießner. 2016. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Conference on Computer Vision and Pattern Recognition (CVPR). 2387–2395. Google ScholarCross Ref

35. Konstantinos Vougioukas, Stavros Petridis, and Maja Pantic. 2018. End-to-End Speech-Driven Facial Animation with Temporal GANs. In British Machine Vision Conference (BMVC).Google Scholar

36. Olivia Wiles, A. Sophia Koepke, and Andrew Zisserman. 2018. X2Face: A network for controlling face generation by using images, audio, and pose codes. In European Conference on Computer Vision (ECCV). Google ScholarCross Ref

37. Zili Yi, Hao Zhang, Ping Tan, and Minglun Gong. 2017. DualGAN: Unsupervised Dual Learning for Image-to-Image Translation. In International Conference on Computer Vision (ICCV). 2868–2876. Google ScholarCross Ref

38. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. 2017. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. In International Conference on Computer Vision (ICCV). 2242–2251. Google ScholarCross Ref