“Near‐Invariant Blur for Depth and 2D Motion Via Time‐Varying Light Field Analysis” by Bando, Holtzman and Raskar

Title:

- Near‐Invariant Blur for Depth and 2D Motion Via Time‐Varying Light Field Analysis

Session/Category Title: Laplacians, Light Field & Layouts

Abstract:

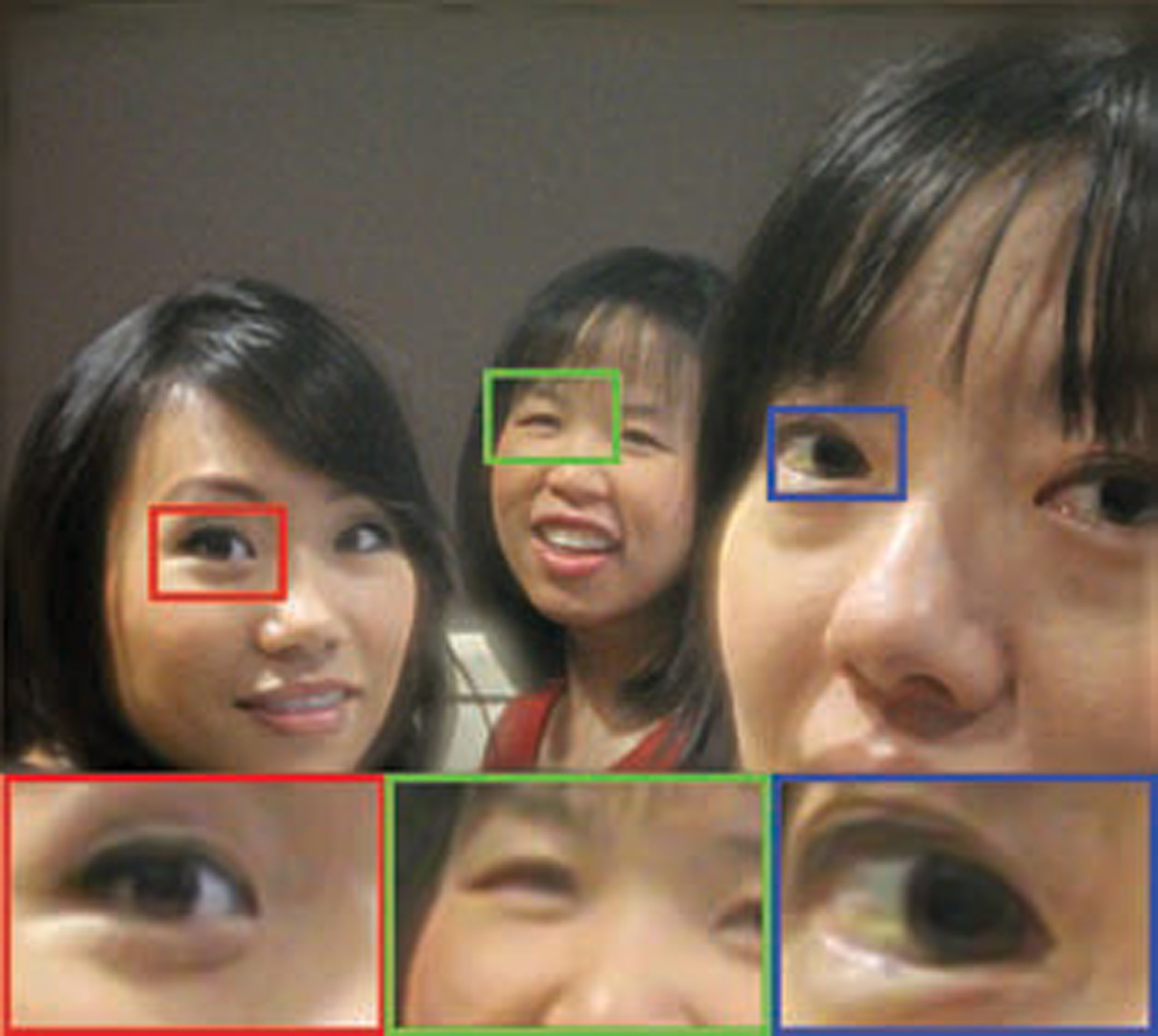

Recently, several camera designs have been proposed for either making defocus blur invariant to scene depth or making motion blur invariant to object motion. The benefit of such invariant capture is that no depth or motion estimation is required to remove the resultant spatially uniform blur. So far, the techniques have been studied separately for defocus and motion blur, and object motion has been assumed 1D (e.g., horizontal). This article explores a more general capture method that makes both defocus blur and motion blur nearly invariant to scene depth and in-plane 2D object motion. We formulate the problem as capturing a time-varying light field through a time-varying light field modulator at the lens aperture, and perform 5D (4D light field + 1D time) analysis of all the existing computational cameras for defocus/motion-only deblurring and their hybrids. This leads to a surprising conclusion that focus sweep, previously known as a depth-invariant capture method that moves the plane of focus through a range of scene depth during exposure, is near-optimal both in terms of depth and 2D motion invariance and in terms of high-frequency preservation for certain combinations of depth and motion ranges. Using our prototype camera, we demonstrate joint defocus and motion deblurring for moving scenes with depth variation.
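The depth-invariance property attributed to focus sweep above can be illustrated with a small numerical toy. This is only a 1D sketch under assumed values, not the 5D analysis or the prototype described in the abstract: the box-shaped PSF model, the blur scale `k`, the sweep range, and the sample depths are all hypothetical, and object motion is not modeled. It compares how much the defocus PSF changes across depths for a fixed focus setting versus a focus setting swept linearly during exposure.

```python
import numpy as np

def box_psf(radius, x):
    """1D box PSF with the given half-width in pixels, normalized to sum to 1."""
    # Clamp tiny radii so an in-focus point still maps to a single pixel.
    psf = (np.abs(x) <= max(radius, 0.5)).astype(float)
    return psf / psf.sum()

def fixed_focus_psf(depth, x, focus=0.0, k=20.0):
    """Defocus PSF when the focus setting stays at `focus` for the whole exposure."""
    return box_psf(k * abs(focus - depth), x)

def focus_sweep_psf(depth, x, lo=-1.0, hi=1.0, steps=201, k=20.0):
    """Time-averaged PSF when focus moves linearly from `lo` to `hi` during exposure."""
    settings = np.linspace(lo, hi, steps)
    return np.mean([box_psf(k * abs(s - depth), x) for s in settings], axis=0)

def max_l1_spread(psfs):
    """Largest pairwise L1 distance between PSFs; 0 means fully depth-invariant."""
    return max(np.abs(a - b).sum() for a in psfs for b in psfs)

x = np.arange(-40, 41)       # pixel coordinates
depths = [-0.5, 0.0, 0.5]    # hypothetical scene depths, in focus-setting units

fixed_spread = max_l1_spread([fixed_focus_psf(d, x) for d in depths])
sweep_spread = max_l1_spread([focus_sweep_psf(d, x) for d in depths])

print(f"fixed focus spread: {fixed_spread:.3f}")
print(f"focus sweep spread: {sweep_spread:.3f}")
```

With these assumed settings, the swept PSFs should differ from one another far less than the fixed-focus PSFs do, which is the sense in which a single deconvolution kernel could serve all depths within the swept range.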

References:

- Agarwala, A., Dontcheva, M., Agrawala, M., Drucker, S., Colburn, A., Curless, B., Salesin, D., and Cohen, M. 2004. Interactive digital photomontage. ACM Trans. Graph. 23, 3, 294–302.

- Agrawal, A. and Raskar, R. 2009. Optimal single image capture for motion deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’09). 2560–2567.

- Agrawal, A. and Xu, Y. 2009. Coded exposure deblurring: Optimized codes for PSF estimation and invertibility. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’09). 2066–2073.

- Agrawal, A., Xu, Y., and Raskar, R. 2009. Invertible motion blur in video. ACM Trans. Graph. 28, 3, 95:1–95:8.

- Baek, J. 2010. Transfer efficiency and depth invariance in computational cameras. In Proceedings of the IEEE International Conference on Computational Photography (ICCP’10). 1–8.

- Bando, Y., Chen, B.-Y., and Nishita, T. 2011. Motion deblurring from a single image using circular sensor motion. Comput. Graph. Forum 30, 7, 1869–1878.

- Ben-Ezra, M. and Nayar, S. K. 2004. Motion-based motion deblurring. IEEE Trans. Pattern Anal. Mach. Intell. 26, 6, 689–698.

- Born, M. and Wolf, E. 1984. Principles of Optics 6th Ed. Pergamon Press.

- Bracewell, R. N. 1965. The Fourier Transform and Its Applications. McGraw-Hill.

- Burt, P. J. and Kolczynski, R. J. 1993. Enhanced image capture through fusion. In Proceedings of the IEEE International Conference on Computer Vision (ICCV’93). 173–182.

- Cho, T. S., Levin, A., Durand, F., and Freeman, W. T. 2010. Motion blur removal with orthogonal parabolic exposures. In Proceedings of the IEEE International Conference on Computational Photography (ICCP’10). 1–8.

- Cossairt, O. and Nayar, S. 2010. Spectral focal sweep: Extended depth of field from chromatic aberrations. In Proceedings of the IEEE International Conference on Computational Photography (ICCP’10). 1–8.

- Cossairt, O., Zhou, C., and Nayar, S. K. 2010. Diffusion coded photography for extended depth of field. ACM Trans. Graph. 29, 4, 31:1–31:10.

- Dabov, K., Foi, A., Katkovnik, V., and Egiazarian, K. 2008. Image restoration by sparse 3D transform-domain collaborative filtering. In Proceedings of the SPIE Conference on Electronic Imaging.

- Ding, Y., McCloskey, S., and Yu, J. 2010. Analysis of motion blur with a flutter shutter camera for non-linear motion. In Proceedings of the European Conference on Computer Vision (ECCV’10). 15–30.

- Dowski, E. R. and Cathey, W. T. 1995. Extended depth of field through wave-front coding. Appl. Optics 34, 11, 1859–1866.

- Hasinoff, S. W., Kutulakos, K. N., Durand, F., and Freeman, W. T. 2009a. Time-constrained photography. In Proceedings of the IEEE International Conference on Computer Vision (ICCV’09). 333–340.

- Hasinoff, S. W., Kutulakos, K. N., Durand, F., and Freeman, W. T. 2009b. Time-constrained photography. Supplementary material. http://people.csail.mit.edu/hasinoff/timecon/.

- Hausler, G. 1972. A method to increase the depth of focus by two step image processing. Optics Comm. 6, 1, 38–42.

- Joshi, N., Kang, S. B., Zitnick, C. L., and Szeliski, R. 2010. Image deblurring using inertial measurement sensors. ACM Trans. Graph. 29, 4, 30:1–30:8.

- Kubota, A. and Aizawa, K. 2005. Reconstructing arbitrarily focused images from two differently focused images using linear filters. IEEE Trans. Image Process. 14, 11, 1848–1859.

- Levin, A. and Durand, F. 2010. Linear view synthesis using a dimensionality gap light field prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’10). 1831–1838.

- Levin, A., Fergus, R., Durand, F., and Freeman, W. T. 2007. Image and depth from a conventional camera with a coded aperture. ACM Trans. Graph. 26, 3, 70:1–70:9.

- Levin, A., Freeman, W. T., and Durand, F. 2008a. Understanding camera trade-offs through a Bayesian analysis of light field projections. In Proceedings of the European Conference on Computer Vision (ECCV’08). 88–101.

- Levin, A., Sand, P., Cho, T. S., Durand, F., and Freeman, W. T. 2008b. Motion-invariant photography. ACM Trans. Graph. 27, 3, 71:1–71:9.

- Levin, A., Hasinoff, S. W., Green, P., Durand, F., and Freeman, W. T. 2009a. 4D frequency analysis of computational cameras for depth of field extension. ACM Trans. Graph. 28, 3, 97:1–97:14.

- Levin, A., Weiss, Y., Durand, F., and Freeman, W. T. 2009b. Understanding and evaluating blind deconvolution algorithms. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’09). 1964–1971.

- Levoy, M. and Hanrahan, P. 1996. Light field rendering. In Proceedings of the Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH’96). 31–42.

- Nagahara, H., Kuthirummal, S., Zhou, C., and Nayar, S. K. 2008. Flexible depth of field photography. In Proceedings of the European Conference on Computer Vision (ECCV’08). 60–73.

- Ng, R. 2005. Fourier slice photography. ACM Trans. Graph. 24, 3, 735–744.

- Raskar, R., Agrawal, A., and Tumblin, J. 2006. Coded exposure photography: Motion deblurring using fluttered shutter. ACM Trans. Graph. 25, 3, 795–804.

- Rav-Acha, A. and Peleg, S. 2005. Two motion-blurred images are better than one. Pattern Recogn. Lett. 26, 3, 311–317.

- Tai, Y.-W., Du, H., Brown, M. S., and Lin, S. 2008. Image/video deblurring using a hybrid camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’08). 1–8.

- Tai, Y.-W., Kong, N., Lin, S., and Shin, S. Y. 2010. Coded exposure imaging for projective motion deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’10). 2408–2415.

- Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., and Tumblin, J. 2007. Dappled photography: Mask enhanced cameras for heterodyned light fields and coded aperture refocusing. ACM Trans. Graph. 26, 3, 69:1–69:12.

- Watson, G. N. 1922. A Treatise on the Theory of Bessel Functions. Cambridge University Press.

- Yuan, L., Sun, J., Quan, L., and Shum, H.-Y. 2007. Image deblurring with blurred/noisy image pairs. ACM Trans. Graph. 26, 3, 1:1–1:10.

- Zhang, L., Deshpande, A., and Chen, X. 2010. Denoising vs. deblurring: HDR imaging techniques using moving cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’10). 522–529.

- Zhou, C. and Nayar, S. 2009. What are good apertures for defocus deblurring? In Proceedings of the IEEE International Conference on Computational Photography (ICCP’09).

- Zhuo, S., Guo, D., and Sim, T. 2010. Robust flash deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’10). 2440–2447.