“MatFusion: A Generative Diffusion Model for SVBRDF Capture” by Sartor and Peers

Session/Category Title:

- Visualizing the Future

Abstract:

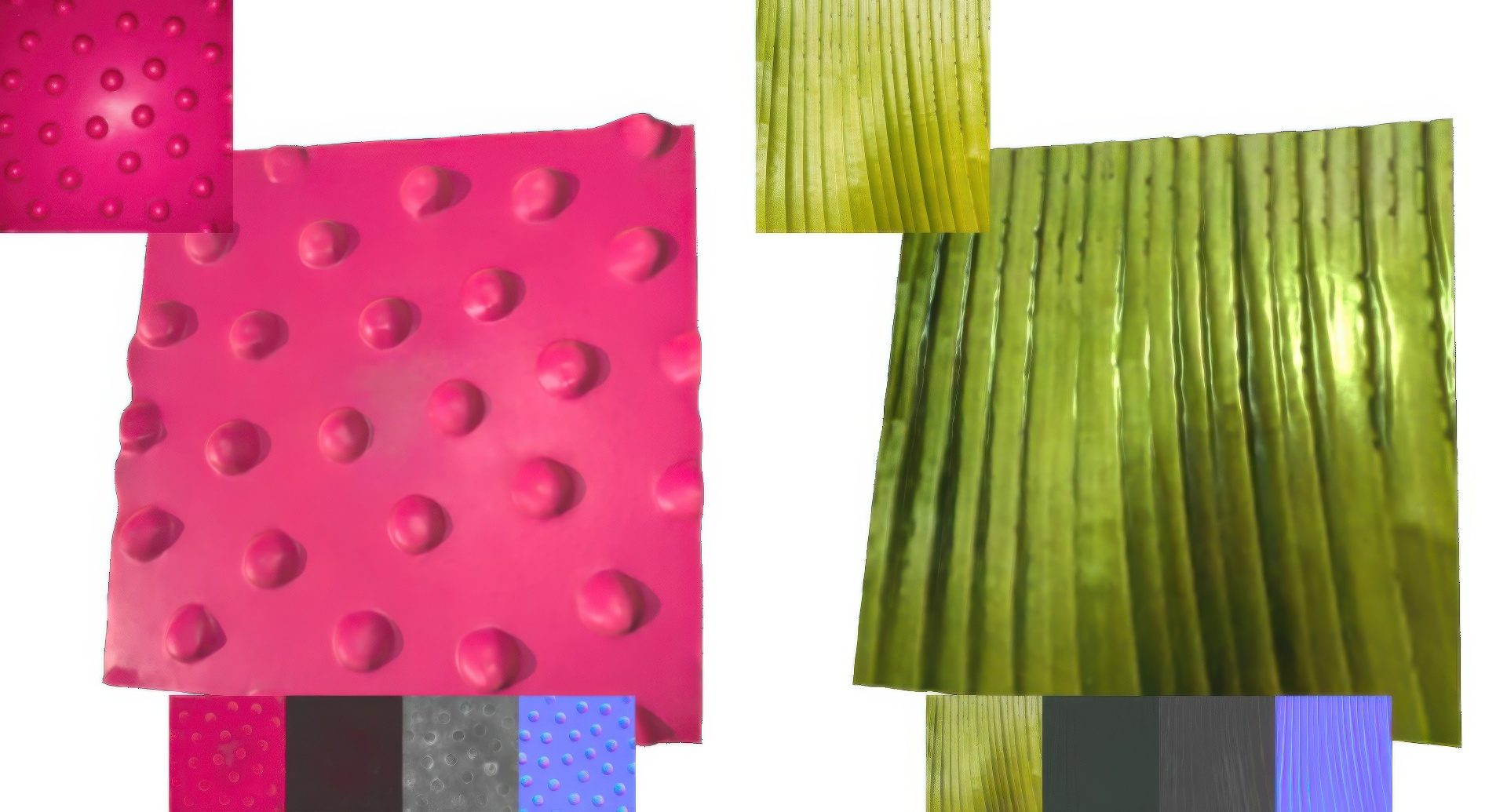

We formulate SVBRDF estimation from photographs as a diffusion task. To model the distribution of spatially varying materials, we first train a novel unconditional SVBRDF diffusion backbone model on a large set of 312,165 synthetic spatially varying material exemplars. This SVBRDF diffusion backbone model, named MatFusion, can then serve as a basis for refining a conditional diffusion model to estimate the material properties from a photograph under controlled or uncontrolled lighting. Our backbone MatFusion model is trained using only a loss on the reflectance properties, and therefore refinement can be paired with more expensive rendering methods without the need to backpropagate through the renderer during training. Because the conditional SVBRDF diffusion models are generative, we can synthesize multiple SVBRDF estimates from the same input photograph, from which the user can select the one that best matches their expectations. We demonstrate the flexibility of our method by refining different SVBRDF diffusion models conditioned on different types of incident lighting, and show that, for a single photograph under colocated flash lighting, our method achieves accuracy equal to or better than that of existing SVBRDF estimation methods.
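To make the phrase "a loss on the reflectance properties" concrete, the following is a minimal NumPy sketch of a denoising-diffusion training loss evaluated directly on a stack of SVBRDF maps, with no rendering step involved. It is an illustration under stated assumptions, not the authors' implementation: the standard noise-prediction objective, the linear noise schedule, the 10-channel map layout, and the names `linear_beta_schedule`, `add_noise`, and `reflectance_loss` are all hypothetical choices made here, and the abstract does not specify any of them.

```python
import numpy as np


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Illustrative linear noise schedule (assumed; not taken from the source)."""
    return np.linspace(beta_start, beta_end, num_steps)


def add_noise(x0, t, alphas_cumprod, rng):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    abar = alphas_cumprod[t]
    x_t = np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * eps
    return x_t, eps


def reflectance_loss(predict_noise, svbrdf, t, alphas_cumprod, rng):
    """MSE between true and predicted noise, computed on the SVBRDF maps only.

    No renderer appears anywhere in this loss, so nothing needs to be
    differentiated through a rendering step.
    """
    x_t, eps = add_noise(svbrdf, t, alphas_cumprod, rng)
    eps_hat = predict_noise(x_t, t)
    return float(np.mean((eps_hat - eps) ** 2))


rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
alphas_cumprod = np.cumprod(1.0 - betas)

# Hypothetical layout: 10 channels (diffuse RGB, specular RGB, roughness,
# normal XYZ) at 64x64 resolution, scaled to [-1, 1]. Random data stands in
# for a real material exemplar.
svbrdf = rng.uniform(-1.0, 1.0, size=(10, 64, 64))
t = 500
abar_t = alphas_cumprod[t]


def zero_denoiser(x_t, step):
    """Placeholder for an untrained network: always predicts zero noise."""
    return np.zeros_like(x_t)


def oracle_denoiser(x_t, step):
    """Cheats by using the clean maps to recover the exact noise."""
    return (x_t - np.sqrt(alphas_cumprod[step]) * svbrdf) / np.sqrt(
        1.0 - alphas_cumprod[step]
    )


loss_zero = reflectance_loss(zero_denoiser, svbrdf, t, alphas_cumprod, rng)
loss_oracle = reflectance_loss(oracle_denoiser, svbrdf, t, alphas_cumprod, rng)
print(f"zero denoiser loss:   {loss_zero:.4f}")
print(f"oracle denoiser loss: {loss_oracle:.2e}")
```

In a real training loop, `predict_noise` would be the diffusion network and `t` would be drawn at random per sample. The two placeholder denoisers only bracket the loss: predicting zero gives roughly the variance of the noise, while the oracle drives the loss to numerical zero.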
