“MagniFinger: Fingertip-Mounted Microscope For Augmenting Human Perception” by Hiyama, Obushi, Wakisaka, Inami and Kasahara

Title:

- MagniFinger: Fingertip-Mounted Microscope For Augmenting Human Perception

Entry Number:

- 02

Abstract:

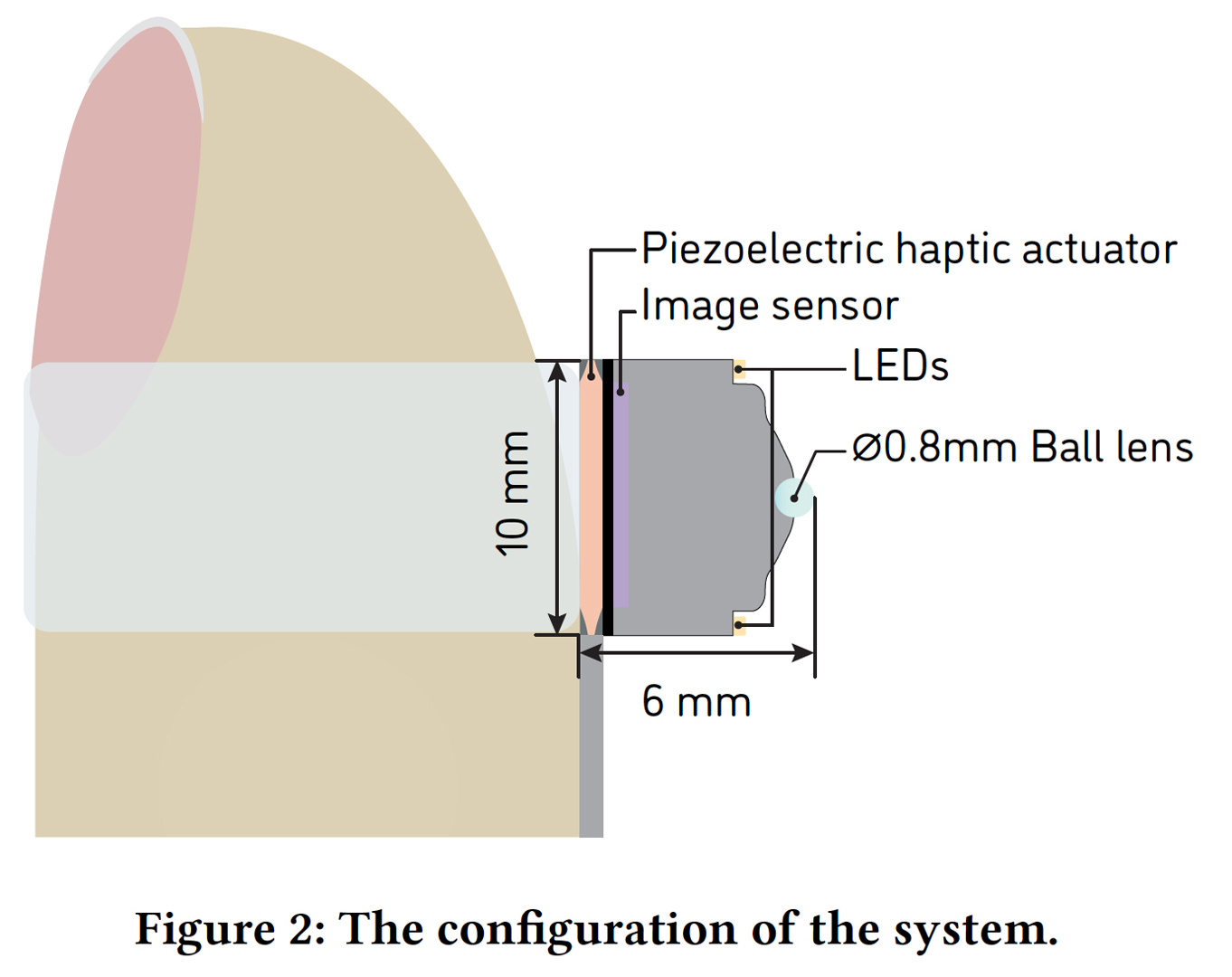

We propose MagniFinger, a fingertip-worn microscopy device that augments the limited abilities of the human visual and tactile sensory systems in micrometer-scale environments. MagniFinger exploits the finger's dexterous motor skills to achieve precise and intuitive control, allowing the user to observe a desired position simply by placing a fingertip on it. To implement the fingertip-sized device and its tactile display, we built a system comprising a ball lens, an image sensor, and a thin piezoelectric actuator. Vibrotactile feedback is generated from the luminance of the magnified image, giving the user the feeling of touching the magnified world.

INTRODUCTION

Microscopes have played a key role in our understanding of nature by extending the limited resolution of human sight. Viewing such instruments as tools for human augmentation, Robert Hooke described them as artificial organs that compensate for the deficiencies of human perception [Hooke et al. 1665]. For a microscope to serve as such an organ, it should ideally follow the observer's own movements, which makes it easier and more intuitive to use; otherwise, the user becomes an operator rather than an observer. Beyond sight, we also perceive the world through our fingers, owing to their high dexterity and tactile sensitivity. However, existing magnifiers do not take advantage of this, as they provide only visual information and omit tactile sensation.

To address this problem, which stems from limitations in both the human tactile sensory system and existing technology, we propose MagniFinger, a fingertip-worn microscopy device that provides the feeling of touching magnified objects. It has a small microscopy camera that attaches to a fingertip and magnifies micrometer-scale objects. Magnified images are captured at the finger pad's point of contact, and users can move the observation point through fine movements of the fingertip. In addition, MagniFinger provides vibrotactile feedback through the finger pad, generated automatically from the captured images. This image-based feedback can also be passive; for example, the user could feel the tiny movements of microbes under the finger pad. Through both active and passive multimodal stimuli in microenvironments, MagniFinger can provide information that cannot be obtained from vision alone.

RELATED WORK

Microscope user interfaces are often designed to reduce the complexity of the procedures a user must follow. For example, electron microscopes (EM) and scanning probe microscopes (SPM) have adopted robotic arms such as the Nanomanipulator [Taylor et al. 1993]. This system achieves single-atom manipulation, which the user can feel through haptic feedback. However, such systems require a relatively large space, and consequently their use is limited to research purposes.

MagniFinger provides an experience in which a fingertip becomes a microscopic probe controlled by the finger's own movements. A previous study [De Nil and Lafaille 2002] reported that a subject's finger movements become more precise when the subject is shown a magnified image of those movements with a high control-display gain. Building on this mechanism, we previously presented another system [Obushi et al. 2019]. However, because that system provided only visual feedback, users could perceive only limited information from the magnified images.
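The control-display gain mentioned above can be made concrete with a little arithmetic. The sketch below assumes that the camera image fills the display width and that image motion is linear in finger motion, so the gain is simply display width divided by field of view. The function names and the numbers (a 1 mm field of view, a 300 mm wide display) are hypothetical and are not specifications of MagniFinger or of the earlier system.

```python
def cd_gain(field_of_view_mm: float, display_width_mm: float) -> float:
    """Control-display gain when a finger-mounted camera's image fills the display.

    Moving the camera by one full field of view shifts the image by one full
    display width, so display motion / finger motion = display_width / field_of_view.
    """
    return display_width_mm / field_of_view_mm


def displayed_shift_mm(finger_shift_mm: float, gain: float) -> float:
    """On-screen displacement produced by a given fingertip displacement."""
    return gain * finger_shift_mm


if __name__ == "__main__":
    # HYPOTHETICAL values for illustration only, not measured device parameters.
    gain = cd_gain(field_of_view_mm=1.0, display_width_mm=300.0)
    shift = displayed_shift_mm(0.010, gain)  # a 10 micrometer fingertip motion
    print(f"gain = {gain:.0f}, on-screen shift = {shift:.1f} mm")
```

Under these assumed numbers, a 10 µm fingertip movement appears as a shift of about 3 mm on screen, which illustrates why fine finger control becomes visible and correctable at high gain.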

SYSTEM OVERVIEW

Figure 2 shows the configuration of the system. To achieve magnification with a fingertip-mounted camera while minimizing its size for a better tactile experience, we designed a compact optical system with a short focal length, based on the principle underlying Leeuwenhoek's single-lens microscope. Because a lens with a short focal length stays in focus only at a short distance, MagniFinger uses a 0.8 mm ball lens, which allows users to keep the fingertip in contact with the target during observation.
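To see why a 0.8 mm ball lens implies near-contact observation, the standard formulas for a ball lens can be evaluated: effective focal length EFL = nD / (4(n - 1)), measured from the lens center, and back focal length BFL = EFL - D/2, measured from the lens surface. The sketch below assumes a refractive index of n = 1.5 for a generic glass, since the text does not state the lens material. It also reports the conventional magnifying power 250 mm / EFL of a simple magnifier; because MagniFinger images onto a sensor, that figure only indicates the lens's power as a loupe, not the on-screen magnification.

```python
def ball_lens_efl(diameter_mm: float, n: float) -> float:
    """Effective focal length of a ball lens, measured from the lens center."""
    return n * diameter_mm / (4.0 * (n - 1.0))


def ball_lens_bfl(diameter_mm: float, n: float) -> float:
    """Back focal length: distance from the lens surface to the focal point."""
    return ball_lens_efl(diameter_mm, n) - diameter_mm / 2.0


def loupe_magnifying_power(efl_mm: float, near_point_mm: float = 250.0) -> float:
    """Conventional magnifying power of a simple magnifier (image at infinity)."""
    return near_point_mm / efl_mm


if __name__ == "__main__":
    D = 0.8  # ball-lens diameter stated in the text (mm)
    n = 1.5  # ASSUMED refractive index of a generic glass; not given in the source
    efl = ball_lens_efl(D, n)
    print(f"EFL = {efl:.3f} mm, BFL = {ball_lens_bfl(D, n):.3f} mm, "
          f"M = {loupe_magnifying_power(efl):.0f}x")
    # prints: EFL = 0.600 mm, BFL = 0.200 mm, M = 417x
```

Under the assumed index, the focal plane lies only about 0.2 mm beyond the lens surface, which is consistent with the target needing to be almost touching the lens during observation.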

The acquired image is processed by a computer to generate vibrotactile feedback on the user's fingertip. As shown in Figure 3, the vibration pattern is generated from the change in luminance of the pixels at the image center. Each time an image is captured, the brightness of the central pixel is compared with that of an earlier image to calculate the amplitude, which is then applied to the piezoelectric haptic actuator.
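The luminance-to-vibration step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes frames arrive as nested lists of 8-bit (R, G, B) tuples, converts pixels to luminance with ITU-R BT.601 weights, averages an optional window around the center, and maps the absolute luminance change relative to the frame captured `lag` frames earlier to an amplitude in [0, 1]. The class name, the `lag`, `gain`, and `half_window` parameters, and the linear mapping are all hypothetical, since the text does not specify how the earlier image is chosen or how the difference is scaled.

```python
from collections import deque


def luminance(rgb):
    """Approximate luma of an (R, G, B) pixel using ITU-R BT.601 weights (an assumption)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def center_luminance(frame, half_window=0):
    """Mean luminance of a (2*half_window+1)^2 patch at the frame center.

    `frame` is a list of rows, each row a list of (R, G, B) tuples in 0..255.
    """
    cy, cx = len(frame) // 2, len(frame[0]) // 2
    patch = [luminance(frame[y][x])
             for y in range(cy - half_window, cy + half_window + 1)
             for x in range(cx - half_window, cx + half_window + 1)]
    return sum(patch) / len(patch)


class LuminanceToVibration:
    """HYPOTHETICAL mapping from center-luminance change to a normalized amplitude."""

    def __init__(self, lag=1, gain=1.0 / 255.0, half_window=0):
        if lag < 1:
            raise ValueError("lag must be at least 1 frame")
        self.history = deque(maxlen=lag)  # center luminance of the last `lag` frames
        self.gain = gain                  # scales a luminance difference to [0, 1]
        self.half_window = half_window

    def update(self, frame):
        """Return the actuator amplitude for a newly captured frame."""
        lum = center_luminance(frame, self.half_window)
        if len(self.history) < self.history.maxlen:
            amplitude = 0.0  # not enough history yet to compare against
        else:
            older = self.history[0]  # luminance from `lag` frames ago
            amplitude = min(1.0, self.gain * abs(lum - older))
        self.history.append(lum)
        return amplitude


if __name__ == "__main__":
    dark = [[(0, 0, 0)] * 3 for _ in range(3)]
    bright = [[(255, 255, 255)] * 3 for _ in range(3)]
    conv = LuminanceToVibration(lag=1)
    for frame in (dark, bright, bright):
        print(round(conv.update(frame), 3))  # prints 0.0, then 1.0, then 0.0
```

In this sketch a sudden dark-to-bright transition at the center yields a full-scale pulse while a static scene yields zero amplitude, so texture is felt only when the finger moves or the object beneath it changes.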

CONCLUSION

We have developed a fingertip-sized microscope that augments the finger's perception by presenting magnified visual feedback and image-based vibrotactile feedback on the finger pad. The compact microscopy camera allows the user to feel and observe a surface at the same time, providing a novel magnification experience.

References:

- Luc F. De Nil and Sophie J. Lafaille. 2002. Jaw and Finger Movement Accuracy under Visual and Nonvisual Feedback Conditions. Perceptual and Motor Skills 95, 3_suppl (2002), 1129–1140. https://doi.org/10.2466/pms.2002.95.3f.1129

- Robert Hooke, John Martyn, James Allestry, and Lessing J Rosenwald Collection (Library of Congress). 1665. Micrographia, or, Some physiological descriptions of minute bodies made by magnifying glasses : with observations and inquiries there upon. Printed by Jo. Martyn and Ja. Allestry, printers to the Royal Society, and are to be sold at their shop at the Bell in S. Paul’s Church-yard, London. https://doi.org/10.5962/bhl.title.904

- Noriyasu Obushi, Sohei Wakisaka, Shunichi Kasahara, Katie Seaborn, Atsushi Hiyama, and Masahiko Inami. 2019. MagniFinger: Fingertip Probe Microscope with Direct Micro Movements. In Proceedings of the 10th Augmented Human International Conference 2019 (AH2019). ACM, New York, NY, USA, Article 32, 7 pages. https://doi.org/10.1145/3311823.3311859

- Russell M. Taylor, Warren Robinett, Vernon L. Chi, Frederick P. Brooks, William V. Wright, R. Stanley Williams, and Erik J. Snyder. 1993. The nanomanipulator. In Proceedings of the 20th annual conference on Computer graphics and interactive techniques – SIGGRAPH ’93. ACM Press, New York, New York, USA, 127–134. https://doi.org/10.1145/166117.166133

Acknowledgements:

This work is supported by JST ERATO Grant Number JPMJER1701, Japan.