“Locally Attentional SDF Diffusion for Controllable 3D Shape Generation” by Zheng, Pan, Wang, Tong, Liu, et al. …

Title:

- Locally Attentional SDF Diffusion for Controllable 3D Shape Generation

Session/Category Title:

- Diffusion For Geometry

Abstract:

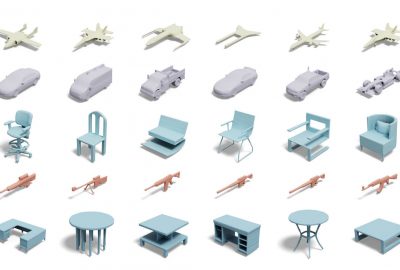

Although the rapid recent evolution of 3D generative neural networks has greatly improved 3D shape generation, it is still inconvenient for ordinary users to create 3D shapes and to control the local geometry of generated shapes. To address these challenges, we propose a diffusion-based 3D generation framework, locally attentional SDF diffusion, that models plausible 3D shapes from 2D sketch image input. Our method is built on a two-stage diffusion model. The first stage, named occupancy-diffusion, generates a low-resolution occupancy field that approximates the shape shell. The second stage, named SDF-diffusion, synthesizes a high-resolution signed distance field within the occupied voxels determined by the first stage, from which fine geometry is extracted. Our model is empowered by a novel view-aware local attention mechanism for image-conditioned shape generation, which uses 2D image patch features to guide 3D voxel feature learning, greatly improving local controllability and model generalizability. Through extensive experiments on sketch-conditioned and category-conditioned 3D shape generation tasks, we demonstrate that our method produces plausible and diverse 3D shapes and offers superior controllability and generalizability over existing work.
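The coarse-to-fine domain of the two stages and the view-aware local attention can be illustrated with a small NumPy sketch. It is not the authors' implementation and omits the diffusion models themselves. It only shows (1) how occupied cells of a low-resolution occupancy grid could define the set of fine voxels in which a second stage operates, and (2) how each voxel could attend only to the image patches near its projection into the sketch view. The orthographic camera given as a rotation matrix, the 16×16 patch grid, the 3×3 patch window, the subdivision factor, the absence of learned query/key/value projections, and all function names (`occupied_fine_voxels`, `project_voxel_centers`, `local_attention`) are hypothetical assumptions for illustration; the abstract does not specify these details.

```python
import numpy as np

# Hypothetical sketch only, not the paper's code. Camera model, grid sizes,
# window size, and function names are illustrative assumptions.


def occupied_fine_voxels(coarse_occ, factor=4):
    """Stage-2 domain: integer coords of fine voxels (resolution R*factor)
    that lie inside occupied cells of a boolean coarse grid of shape (R, R, R)."""
    coarse = np.argwhere(coarse_occ)  # (M, 3) indices of occupied coarse cells
    sub = np.stack(np.meshgrid(*[np.arange(factor)] * 3, indexing="ij"), axis=-1)
    sub = sub.reshape(-1, 3)  # (factor**3, 3) offsets inside one coarse cell
    return (coarse[:, None, :] * factor + sub[None, :, :]).reshape(-1, 3)


def project_voxel_centers(centers, view_rot, patch_grid=16):
    """Map voxel centers in [-1, 1]^3 to (x, y) patch indices of the sketch view.
    Assumes an orthographic camera given by a 3x3 rotation matrix."""
    cam = centers @ view_rot.T  # rotate points into the camera frame
    uv = (cam[:, :2] + 1.0) * 0.5  # image-plane x, y: [-1, 1] -> [0, 1]
    idx = np.floor(uv * patch_grid).astype(int)
    return np.clip(idx, 0, patch_grid - 1)  # (N, 2)


def local_attention(voxel_feats, patch_feats, patch_idx, window=1):
    """Each voxel query attends only to the (2*window+1)**2 patches around
    its projected patch. voxel_feats: (N, C); patch_feats: (G, G, C).
    No learned Q/K/V projections: patch features serve as keys and values."""
    g, _, c = patch_feats.shape
    offsets = np.arange(-window, window + 1)
    dx, dy = np.meshgrid(offsets, offsets, indexing="ij")
    dx, dy = dx.ravel(), dy.ravel()  # (K,) neighborhood offsets
    # Clamp at the image border (border patches may then repeat).
    nx = np.clip(patch_idx[:, 0:1] + dx, 0, g - 1)  # (N, K)
    ny = np.clip(patch_idx[:, 1:2] + dy, 0, g - 1)  # (N, K)
    neigh = patch_feats[nx, ny]  # (N, K, C) keys and values
    scores = np.einsum("nc,nkc->nk", voxel_feats, neigh) / np.sqrt(c)
    scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return np.einsum("nk,nkc->nc", weights, neigh)  # (N, C)


# Toy run with random features standing in for learned ones.
rng = np.random.default_rng(0)
R, FACTOR, C, G = 8, 4, 32, 16
coarse_occ = np.zeros((R, R, R), dtype=bool)
coarse_occ[3:5, 3:5, 3:5] = True  # stand-in for a stage-1 occupancy result
fine = occupied_fine_voxels(coarse_occ, FACTOR)
centers = (fine + 0.5) / (R * FACTOR) * 2.0 - 1.0  # fine voxel centers in [-1, 1]^3
idx = project_voxel_centers(centers, np.eye(3), G)
out = local_attention(rng.normal(size=(len(fine), C)), rng.normal(size=(G, G, C)), idx)
print(fine.shape, out.shape)  # (512, 3) (512, 32)
```

In this sketch, restricting each voxel to a small window of patches, rather than letting it attend to every patch of the image, is what makes the attention local: a stroke in one region of the sketch can only influence voxels that project near it. Border patches are handled by clamping indices, which is one simple choice among several.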

Additional Images:

- 2023 Technical Papers: Zheng_Locally Attentional SDF Diffusion for Controllable 3D Shape Generation