“Lip-Sync ML: Machine Learning-based Framework to Generate Lip-sync Animations in FINAL FANTASY VII REBIRTH” by Nakada, Gil, Iwasawa and Hara

Title:

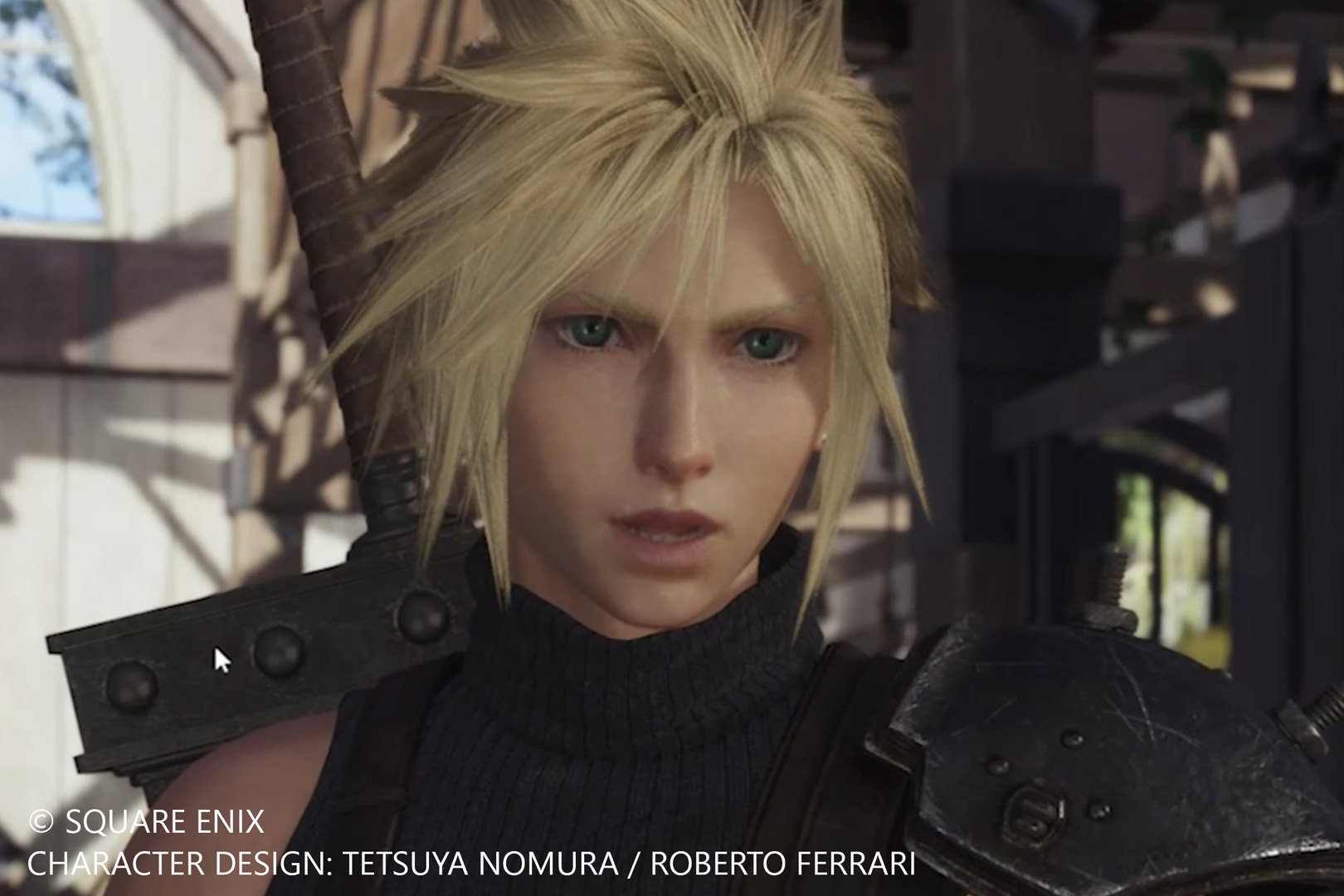

- Lip-Sync ML: Machine Learning-based Framework to Generate Lip-sync Animations in FINAL FANTASY VII REBIRTH

Session/Category Title:

- Lips Don't Lie

Abstract:

We present a machine learning-based framework, Lip-Sync ML, that generates lip-sync animations from audio input. For “Final Fantasy VII Rebirth”, we used audio clips and animations from our previous game title as training data and, with Lip-Sync ML, created high-quality lip-sync animations efficiently.
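The abstract does not say how audio clips and animations are paired for training. As an illustration only, the following sketch shows one common way to build frame-aligned (audio, animation) training pairs. It assumes mono audio at a fixed sample rate, animation sampled at a fixed frame rate as an (n_frames, n_controls) array of rig values, and an arbitrary window length; the function name `make_training_pairs` and the `window_sec` parameter are hypothetical and are not taken from Lip-Sync ML.

```python
import numpy as np


def make_training_pairs(audio, sample_rate, anim, fps, window_sec=0.5):
    """Pair each animation frame with a fixed-length audio window centred on it.

    Hypothetical helper, not the Lip-Sync ML implementation.
    audio: 1-D float array of mono samples.
    anim:  (n_frames, n_controls) array of per-frame rig/control values.
    Returns (windows, targets) with windows of shape (n_frames, 2 * half).
    """
    half = int(round(window_sec * sample_rate / 2))
    win_len = 2 * half
    # Zero-pad both ends so windows near the clip boundaries keep full length.
    padded = np.pad(audio, (half, half))
    windows = []
    for i in range(anim.shape[0]):
        # Audio sample index that lines up with animation frame i.
        centre = int(round(i * sample_rate / fps))
        # Original sample c sits at padded index c + half, so the window
        # centred on it spans padded[c : c + 2 * half].
        seg = padded[centre:centre + win_len]
        if seg.shape[0] < win_len:
            # Animation may run slightly past the audio; pad the tail.
            seg = np.pad(seg, (0, win_len - seg.shape[0]))
        windows.append(seg)
    return np.stack(windows), anim


# Usage: 1 second of (dummy) 48 kHz audio and 30 frames of 4 control values.
audio = np.arange(48000, dtype=np.float32)
anim = np.zeros((30, 4), dtype=np.float32)
X, Y = make_training_pairs(audio, 48000, anim, fps=30)
```

Centring the window on each frame lets a model see a little audio before and after the frame being animated, which matters because mouth shapes often anticipate upcoming sounds; the window length here is an assumption to tune, not a value from the paper.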
