“Learning symmetric and low-energy locomotion” by Yu, Turk and Liu

Title:

- Learning symmetric and low-energy locomotion

Session/Category Title:

- Animation Control

Entry Number:

- 144

Abstract:

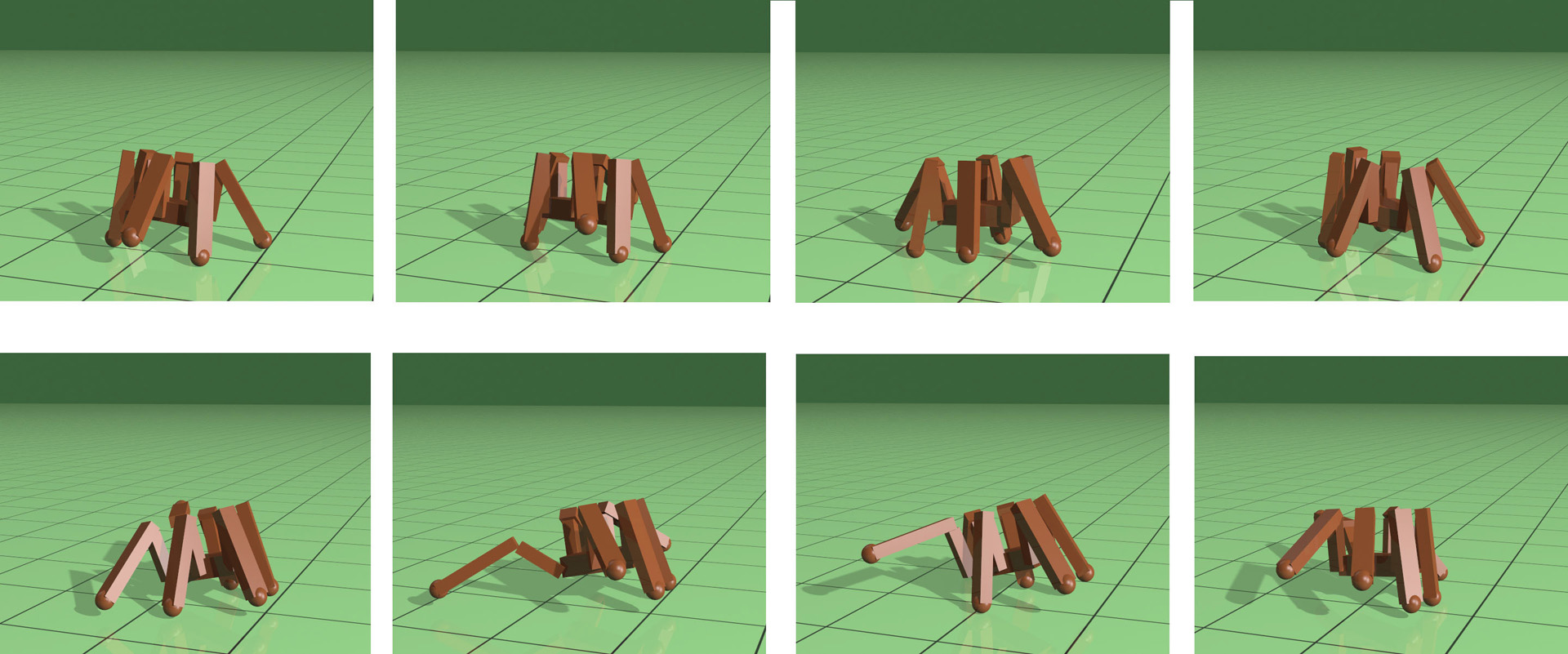

Learning locomotion skills is a challenging problem. To generate realistic and smooth locomotion, existing methods use motion capture, finite state machines or morphology-specific knowledge to guide the motion generation algorithms. Deep reinforcement learning (DRL) is a promising approach for the automatic creation of locomotion control. Indeed, a standard benchmark for DRL is to automatically create a running controller for a biped character from a simple reward function [Duan et al. 2016]. Although several different DRL algorithms can successfully create a running controller, the resulting motions usually look nothing like a real runner. This paper takes a minimalist learning approach to the locomotion problem, without the use of motion examples, finite state machines, or morphology-specific knowledge. We introduce two modifications to the DRL approach that, when used together, produce locomotion behaviors that are symmetric, low-energy, and much closer to those of a real person. First, we introduce a new term to the loss function (not the reward function) that encourages symmetric actions. Second, we introduce a new curriculum learning method that provides modulated physical assistance to help the character with left/right balance and forward movement. The algorithm automatically computes appropriate assistance to the character and gradually relaxes this assistance, so that eventually the character learns to move entirely without help. Because our method does not make use of motion capture data, it can be applied to a variety of character morphologies. We demonstrate locomotion controllers for the lower half of a biped, a full humanoid, a quadruped, and a hexapod. Our results show that learned policies are able to produce symmetric, low-energy gaits. In addition, speed-appropriate gait patterns emerge without any guidance from motion examples or contact planning.
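The abstract does not spell out the form of the symmetry term, so the following is only a minimal sketch of one way a loss term that encourages symmetric actions could look. It assumes a toy state `[left, right, lateral]` and a toy action `[left_torque, right_torque]`; the mirror maps `mirror_state` and `mirror_action`, the weight argument, and the two example policies are all hypothetical names introduced for illustration. The idea sketched here is to penalize the difference between the action taken in a state and the mirrored action taken in the mirrored state, and to add that penalty to the policy loss rather than to the reward.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Policy = Callable[[Sequence[float]], Vector]


def mirror_state(state: Sequence[float]) -> Vector:
    """Hypothetical mirror map: swap left/right entries, negate the lateral one."""
    left, right, lateral = state
    return [right, left, -lateral]


def mirror_action(action: Sequence[float]) -> Vector:
    """Hypothetical mirror map: swap left and right torques."""
    left_torque, right_torque = action
    return [right_torque, left_torque]


def symmetry_loss(policy: Policy, states: Sequence[Sequence[float]]) -> float:
    """Mean squared difference between policy(s) and the mirrored action
    taken in the mirrored state. Zero for a perfectly symmetric policy."""
    total = 0.0
    for s in states:
        action = policy(s)
        mirrored = mirror_action(policy(mirror_state(s)))
        total += sum((a - m) ** 2 for a, m in zip(action, mirrored))
    return total / len(states)


def total_loss(policy_loss: float, sym_loss: float, weight: float) -> float:
    """Assumed combination: the symmetry term is added to the policy loss,
    not to the reward. `weight` is a hypothetical tuning parameter."""
    return policy_loss + weight * sym_loss


def symmetric_policy(state: Sequence[float]) -> Vector:
    """Toy policy that treats left and right identically under mirroring."""
    left, right, lateral = state
    return [left + lateral, right - lateral]


def asymmetric_policy(state: Sequence[float]) -> Vector:
    """Toy policy that favors the left side."""
    left, right, _ = state
    return [2.0 * left, right]


states = [[1.0, 2.0, 0.5], [0.0, 1.0, -0.25]]
sym = symmetry_loss(symmetric_policy, states)
asym = symmetry_loss(asymmetric_policy, states)
```

In this toy setup the symmetric policy incurs no penalty while the left-favoring policy does, so minimizing `total_loss` pushes a learner toward symmetric actions without changing the reward signal.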

References:

1. Mazen Al Borno, Martin De Lasa, and Aaron Hertzmann. 2013. Trajectory optimization for full-body movements with complex contacts. IEEE transactions on visualization and computer graphics 19, 8 (2013), 1405–1414.

2. Yoshua Bengio, Jérome Louradour, Ronan Collobert, and Jason Weston. 2009. Curriculum learning. In Proceedings of the 26th annual international conference on machine learning. ACM, 41–48.

3. Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. 2016. OpenAI Gym. CoRR abs/1606.01540 (2016). arXiv:1606.01540 http://arxiv.org/abs/1606.01540

4. Stelian Coros, Philippe Beaudoin, and Michiel van de Panne. 2010. Generalized Biped Walking Control. In ACM SIGGRAPH 2010 Papers (SIGGRAPH ’10). ACM, New York, NY, USA, Article 130, 9 pages.

5. Stelian Coros, Andrej Karpathy, Ben Jones, Lionel Reveret, and Michiel van de Panne. 2011. Locomotion Skills for Simulated Quadrupeds. ACM Transactions on Graphics 30, 4 (2011), Article TBD.

6. Marco da Silva, Yeuhi Abe, and Jovan Popović. 2008. Interactive Simulation of Stylized Human Locomotion. ACM Trans. Graph. 27, 3, Article 82 (Aug. 2008), 10 pages.

7. Martin de Lasa, Igor Mordatch, and Aaron Hertzmann. 2010. Feature-based Locomotion Controllers. ACM Trans. Graph. 29, 4, Article 131 (July 2010), 10 pages.

8. Prafulla Dhariwal, Christopher Hesse, Matthias Plappert, Alec Radford, John Schulman, Szymon Sidor, and Yuhuai Wu. 2017. OpenAI Baselines, https://github.com/openai/baselines. (2017).

9. Yan Duan, Xi Chen, Rein Houthooft, John Schulman, and Pieter Abbeel. 2016. Benchmarking deep reinforcement learning for continuous control. In International Conference on Machine Learning. 1329–1338.

10. Martin L Felis and Katja Mombaur. 2016. Synthesis of full-body 3-D human gait using optimal control methods. In Robotics and Automation (ICRA), 2016 IEEE International Conference on. IEEE, 1560–1566.

11. Carlos Florensa, David Held, Markus Wulfmeier, and Pieter Abbeel. 2017. Reverse curriculum generation for reinforcement learning. arXiv preprint arXiv:1707.05300 (2017).

12. T. Geijtenbeek and N. Pronost. 2012. Interactive Character Animation Using Simulated Physics: A State-of-the-Art Review. Comput. Graph. Forum 31, 8 (Dec. 2012), 2492–2515.

13. Thomas Geijtenbeek, Michiel van de Panne, and A. Frank van der Stappen. 2013. Flexible Muscle-based Locomotion for Bipedal Creatures. ACM Trans. Graph. 32, 6, Article 206 (Nov. 2013), 11 pages.

14. Alex Graves, Marc G Bellemare, Jacob Menick, Remi Munos, and Koray Kavukcuoglu. 2017. Automated Curriculum Learning for Neural Networks. arXiv preprint arXiv:1704.03003 (2017).

15. Sehoon Ha. 2016. PyDart2. (2016). https://github.com/sehoonha/pydart2

16. Sehoon Ha and C Karen Liu. 2014. Iterative training of dynamic skills inspired by human coaching techniques. ACM Transactions on Graphics 34, 1 (2014).

17. Nikolaus Hansen and Andreas Ostermeier. 1996. Adapting arbitrary normal mutation distributions in evolution strategies: The covariance matrix adaptation. In Evolutionary Computation, 1996., Proceedings of IEEE International Conference on. IEEE, 312–317.

18. Nicolas Heess, Srinivasan Sriram, Jay Lemmon, Josh Merel, Greg Wayne, Yuval Tassa, Tom Erez, Ziyu Wang, Ali Eslami, Martin Riedmiller, et al. 2017. Emergence of locomotion behaviours in rich environments. arXiv preprint arXiv:1707.02286 (2017).

19. David Held, Xinyang Geng, Carlos Florensa, and Pieter Abbeel. 2017. Automatic Goal Generation for Reinforcement Learning Agents. arXiv preprint arXiv:1705.06366 (2017).

20. Walter Herzog, Benno M Nigg, Lynda J Read, and Ewa Olsson. 1989. Asymmetries in ground reaction force patterns in normal human gait. Med Sci Sports Exerc 21, 1 (1989), 110–114.

21. Jessica K. Hodgins, Wayne L. Wooten, David C. Brogan, and James F. O’Brien. 1995. Animating Human Athletics. In Proceedings of the 22nd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’95). ACM, New York, NY, USA, 71–78.

22. Sumit Jain, Yuting Ye, and C. Karen Liu. 2009. Optimization-Based Interactive Motion Synthesis. ACM Transaction on Graphics 28, 1 (2009), 1–10.

23. Andrej Karpathy and Michiel Van De Panne. 2012. Curriculum learning for motor skills. In Canadian Conference on Artificial Intelligence. Springer, 325–330.

24. Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. 2015. Deep learning. Nature 521, 7553 (2015), 436–444.

25. Yoonsang Lee, Sungeun Kim, and Jehee Lee. 2010. Data-driven biped control. ACM Transactions on Graphics (TOG) 29, 4 (2010), 129.

26. Yoonsang Lee, Moon Seok Park, Taesoo Kwon, and Jehee Lee. 2014. Locomotion Control for Many-muscle Humanoids. ACM Trans. Graph. 33, 6, Article 218 (Nov. 2014), 11 pages.

27. Sergey Levine and Vladlen Koltun. 2014. Learning Complex Neural Network Policies with Trajectory Optimization. In ICML ’14: Proceedings of the 31st International Conference on Machine Learning.

28. Timothy P Lillicrap, Jonathan J Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. 2015. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971 (2015).

29. C. Karen Liu, Aaron Hertzmann, and Zoran Popović. 2005. Learning Physics-Based Motion Style with Nonlinear Inverse Optimization. ACM Transactions on Graphics 24, 3 (July 2005), 1071–1081.

30. C. Karen Liu and Sumit Jain. 2012. A Short Tutorial on Multibody Dynamics. Tech. Rep. GIT-GVU-15-01-1, Georgia Institute of Technology, School of Interactive Computing (2012). http://dartsim.github.io/

31. Libin Liu and Jessica Hodgins. 2017. Learning to schedule control fragments for physics-based characters using deep q-learning. ACM Transactions on Graphics (TOG) 36, 3 (2017), 29.

32. Libin Liu, Michiel Van De Panne, and Kangkang Yin. 2016. Guided Learning of Control Graphs for Physics-Based Characters. ACM Trans. Graph. 35, 3, Article 29 (May 2016), 14 pages.

33. Tambet Matiisen, Avital Oliver, Taco Cohen, and John Schulman. 2017. Teacher-Student Curriculum Learning. arXiv preprint arXiv:1707.00183 (2017).

34. Volodymyr Mnih, Adria Puigdomenech Badia, Mehdi Mirza, Alex Graves, Timothy Lillicrap, Tim Harley, David Silver, and Koray Kavukcuoglu. 2016. Asynchronous methods for deep reinforcement learning. In International Conference on Machine Learning. 1928–1937.

35. Igor Mordatch, Kendall Lowrey, Galen Andrew, Zoran Popovic, and Emanuel V Todorov. 2015. Interactive control of diverse complex characters with neural networks. In Advances in Neural Information Processing Systems. 3132–3140.

36. Igor Mordatch, Emanuel Todorov, and Zoran Popović. 2012. Discovery of Complex Behaviors Through Contact-invariant Optimization. ACM Trans. Graph. 31, 4, Article 43 (July 2012), 8 pages.

37. Igor Mordatch, Jack M. Wang, Emanuel Todorov, and Vladlen Koltun. 2013. Animating Human Lower Limbs Using Contact-invariant Optimization. ACM Trans. Graph. 32, 6, Article 203 (Nov. 2013), 8 pages.

38. Uldarico Muico, Yongjoon Lee, Jovan Popović, and Zoran Popović. 2009. Contact-aware Nonlinear Control of Dynamic Characters. ACM Trans. Graph. 28, 3, Article 81 (July 2009), 9 pages.

39. Sanmit Narvekar, Jivko Sinapov, Matteo Leonetti, and Peter Stone. 2016. Source task creation for curriculum learning. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems. International Foundation for Autonomous Agents and Multiagent Systems, 566–574.

40. B Nigg, R Robinson, and W Herzog. 1987. Use of Force Platform Variables to Quantify the Effects of Chiropractic Manipulation on Gait Symmetry. Journal of manipulative and physiological therapeutics 10, 4 (1987).

41. OpenAI. 2017. openai/roboschool. (2017). https://github.com/openai/roboschool

42. Kara K Patterson, Iwona Parafianowicz, Cynthia J Danells, Valerie Closson, Mary C Verrier, W Richard Staines, Sandra E Black, and William E McIlroy. 2008. Gait asymmetry in community-ambulating stroke survivors. Archives of physical medicine and rehabilitation 89, 2 (2008), 304–310.

43. Xue Bin Peng, Glen Berseth, and Michiel van de Panne. 2015. Dynamic Terrain Traversal Skills Using Reinforcement Learning. ACM Trans. Graph. 34, 4, Article 80 (July 2015), 11 pages.

44. Xue Bin Peng, Glen Berseth, and Michiel van de Panne. 2016. Terrain-adaptive Locomotion Skills Using Deep Reinforcement Learning. ACM Trans. Graph. 35, 4, Article 81 (July 2016), 12 pages.

45. Xue Bin Peng, Glen Berseth, Kangkang Yin, and Michiel Van De Panne. 2017. DeepLoco: Dynamic Locomotion Skills Using Hierarchical Deep Reinforcement Learning. ACM Trans. Graph. 36, 4, Article 41 (July 2017), 13 pages.

46. Anastasia Pentina, Viktoriia Sharmanska, and Christoph H Lampert. 2015. Curriculum learning of multiple tasks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5492–5500.

47. Lerrel Pinto and Abhinav Gupta. 2016. Supersizing self-supervision: Learning to grasp from 50k tries and 700 robot hours. In Robotics and Automation (ICRA), 2016 IEEE International Conference on. IEEE, 3406–3413.

48. John Schulman, Sergey Levine, Pieter Abbeel, Michael Jordan, and Philipp Moritz. 2015a. Trust region policy optimization. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15). 1889–1897.

49. John Schulman, Philipp Moritz, Sergey Levine, Michael Jordan, and Pieter Abbeel. 2015b. High-dimensional continuous control using generalized advantage estimation. arXiv preprint arXiv:1506.02438 (2015).

50. John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. 2017. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 (2017).

51. Kwang Won Sok, Manmyung Kim, and Jehee Lee. 2007. Simulating Biped Behaviors from Human Motion Data. In ACM SIGGRAPH 2007 Papers (SIGGRAPH ’07). ACM, New York, NY, USA, Article 107.

52. Richard S Sutton, David A McAllester, Satinder P Singh, and Yishay Mansour. 2000. Policy gradient methods for reinforcement learning with function approximation. In Advances in neural information processing systems. 1057–1063.

53. Jie Tan, Yuting Gu, C. Karen Liu, and Greg Turk. 2014. Learning Bicycle Stunts. ACM Trans. Graph. 33, 4, Article 50 (July 2014), 12 pages.

54. Jie Tan, Karen Liu, and Greg Turk. 2011. Stable proportional-derivative controllers. IEEE Computer Graphics and Applications 31, 4 (2011), 34–44.

55. Michiel Van de Panne and Alexis Lamouret. 1995. Guided optimization for balanced locomotion. In Computer animation and simulation, Vol. 95. Springer, 165–177.

56. Kevin Wampler and Zoran Popović. 2009. Optimal Gait and Form for Animal Locomotion. In ACM SIGGRAPH 2009 Papers (SIGGRAPH ’09). ACM, New York, NY, USA, Article 60, 8 pages.

57. Kevin Wampler, Zoran Popović, and Jovan Popović. 2014. Generalizing Locomotion Style to New Animals with Inverse Optimal Regression. ACM Trans. Graph. 33, 4, Article 49 (July 2014), 11 pages.

58. Jack M. Wang, David J. Fleet, and Aaron Hertzmann. 2009. Optimizing Walking Controllers. ACM Trans. Graph. 28, 5, Article 168 (Dec. 2009), 8 pages.

59. Jack M. Wang, Samuel R. Hamner, Scott L. Delp, and Vladlen Koltun. 2012. Optimizing Locomotion Controllers Using Biologically-based Actuators and Objectives. ACM Trans. Graph. 31, 4, Article 25 (July 2012), 11 pages.

60. Jungdam Won, Jongho Park, Kwanyu Kim, and Jehee Lee. 2017. How to train your dragon: example-guided control of flapping flight. ACM Transactions on Graphics (TOG) 36, 6 (2017), 198.

61. Jia-chi Wu and Zoran Popović. 2010. Terrain-adaptive bipedal locomotion control. ACM Transactions on Graphics (TOG) 29, 4 (2010), 72.

62. Yuting Ye and C. Karen Liu. 2010. Optimal Feedback Control for Character Animation Using an Abstract Model. ACM Trans. Graph. 29, 4, Article 74 (July 2010), 9 pages.

63. KangKang Yin, Stelian Coros, Philippe Beaudoin, and Michiel van de Panne. 2008. Continuation methods for adapting simulated skills. In ACM Transactions on Graphics (TOG), Vol. 27. ACM, 81.

64. KangKang Yin, Kevin Loken, and Michiel van de Panne. 2007. SIMBICON: Simple Biped Locomotion Control. ACM Trans. Graph. 26, 3, Article 105 (July 2007).