“Interactive Editing of Monocular Depth” by Dukor, Miangoleh, Krishna Reddy, Mai and Aksoy

Title:

- Interactive Editing of Monocular Depth

Presenter(s)/Author(s):

- Dukor, Miangoleh, Krishna Reddy, Mai and Aksoy

Entry Number: 52

Abstract:

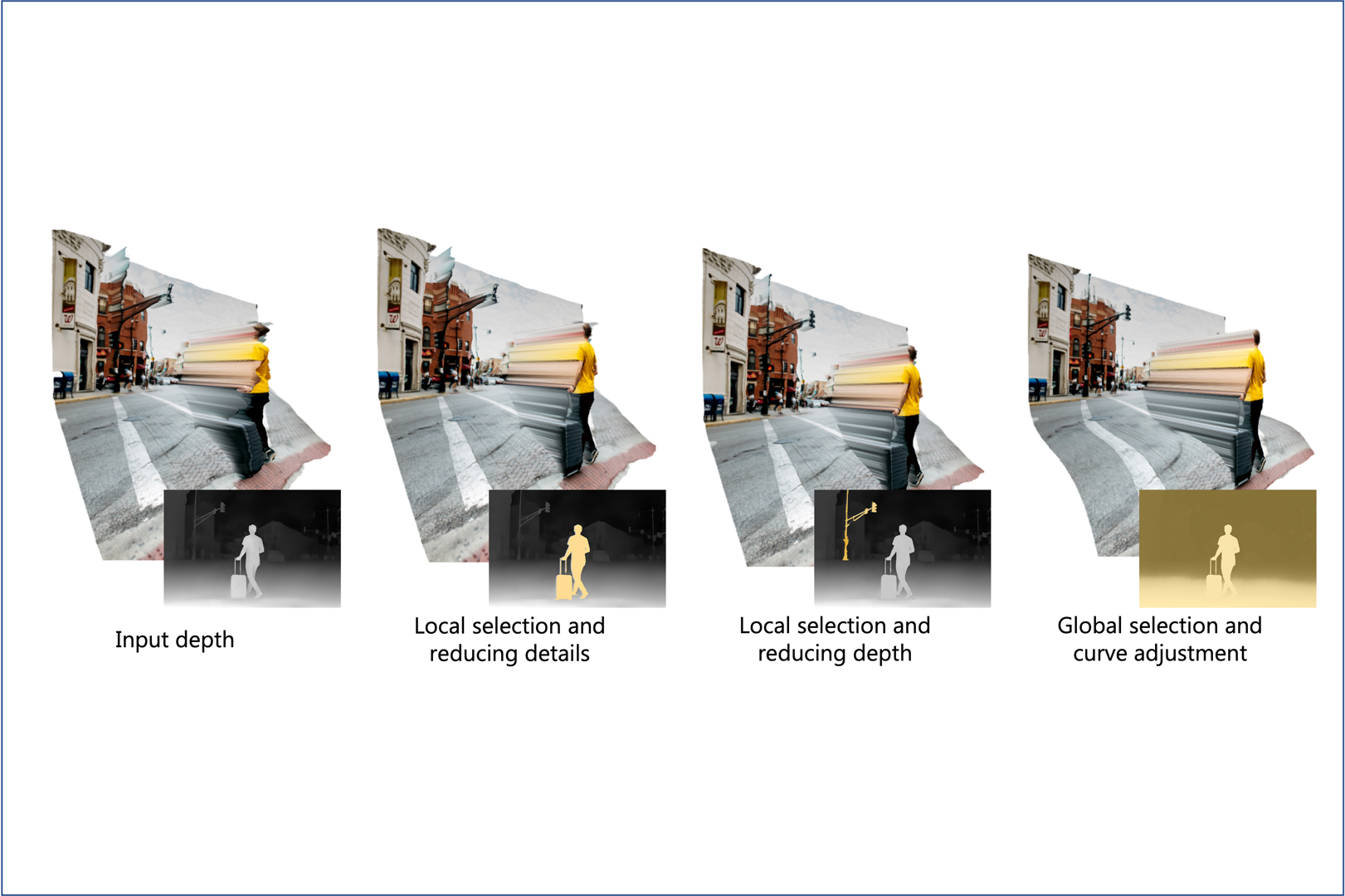

Recent advances in computer vision have made 3D structure-aware editing of still photographs a reality. Such computational photography applications use a depth map that is automatically generated by monocular depth estimation methods to represent the scene structure. In this work, we present a lightweight, web-based interactive depth editing and visualization tool that adapts conventional low-level image editing operations for geometric manipulation to enable artistic control in the 3D photography workflow. Our tool provides real-time feedback on the geometry through a 3D scene visualization to make the depth map editing process more intuitive for artists. Our web-based tool is open-source and platform-independent to support wider adoption of 3D photography techniques in everyday digital photography.
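The abstract does not say how the edits or the 3D preview are implemented. As a minimal sketch, assuming a depth map stored as a row-major grid of positive depth values (larger means farther) and an ideal pinhole camera with a hypothetical focal length, the Python below shows two pieces: a brush-style local edit, as one example of adapting a conventional image-editing operation to geometry, and unprojection of the edited map to 3D points, the kind of geometry a 3D preview could render. The names `depth_brush` and `unproject` are illustrative only, not the tool's API. Many monocular estimators output relative inverse depth, which would need converting to depth before unprojecting.

```python
import math
from typing import List, Tuple

# Assumption: depth[y][x] holds a positive depth value; larger = farther.
DepthMap = List[List[float]]
Point3 = Tuple[float, float, float]


def depth_brush(depth: DepthMap, cx: float, cy: float,
                radius: float, strength: float) -> DepthMap:
    """Hypothetical brush: shift depth around (cx, cy) with a smooth falloff.

    A negative strength pulls the region toward the camera, a positive one
    pushes it away. Returns a new map; the input is left unchanged.
    """
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(h):
        for x in range(w):
            d = math.hypot(x - cx, y - cy)
            if d < radius:
                # Cosine falloff: weight 1 at the brush center, 0 at its edge.
                weight = 0.5 * (1.0 + math.cos(math.pi * d / radius))
                # Clamp so depth never becomes negative.
                out[y][x] = max(0.0, out[y][x] + strength * weight)
    return out


def unproject(depth: DepthMap, focal: float) -> List[Point3]:
    """Back-project every pixel to a 3D point with an assumed pinhole camera.

    The principal point is taken as the image center; focal is in pixels.
    """
    h, w = len(depth), len(depth[0])
    px, py = (w - 1) / 2.0, (h - 1) / 2.0
    points: List[Point3] = []
    for y in range(h):
        for x in range(w):
            z = depth[y][x]
            points.append(((x - px) * z / focal, (y - py) * z / focal, z))
    return points


if __name__ == "__main__":
    flat = [[2.0] * 5 for _ in range(5)]
    edited = depth_brush(flat, cx=2, cy=2, radius=2.0, strength=-0.5)
    print(edited[2][2])                 # center pulled forward to 1.5
    print(unproject(edited, 4.0)[12])   # (0.0, 0.0, 1.5)
```

The smooth falloff is a deliberate choice in this sketch: a hard-edged brush would leave a step in the depth map, which would tend to show up as stretched or torn geometry in a mesh-based 3D preview.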

References:

S. Mahdi H. Miangoleh, Sebastian Dille, Long Mai, Sylvain Paris, and Yağız Aksoy. 2021. Boosting Monocular Depth Estimation Models to High-Resolution via Content-Adaptive Multi-Resolution Merging. In IEEE Conf. Comput. Vis. Pattern Recog.

Simon Niklaus, Long Mai, Jimei Yang, and Feng Liu. 2019. 3D Ken Burns effect from a single image. ACM Trans. Graph. (2019).

René Ranftl, Alexey Bochkovskiy, and Vladlen Koltun. 2021. Vision transformers for dense prediction. In Int. Conf. Comput. Vis.

Meng-Li Shih, Shih-Yang Su, Johannes Kopf, and Jia-Bin Huang. 2020. 3D photography using context-aware layered depth inpainting. In IEEE Conf. Comput. Vis. Pattern Recog.

Neal Wadhwa, Rahul Garg, David E Jacobs, Bryan E Feldman, Nori Kanazawa, Robert Carroll, Yair Movshovitz-Attias, Jonathan T Barron, Yael Pritch, and Marc Levoy. 2018. Synthetic depth-of-field with a single-camera mobile phone. ACM Trans. Graph. (2018).