“Interactive character animation by learning multi-objective control”

Title:

- Interactive character animation by learning multi-objective control

Session/Category Title:

- Character animation

Abstract:

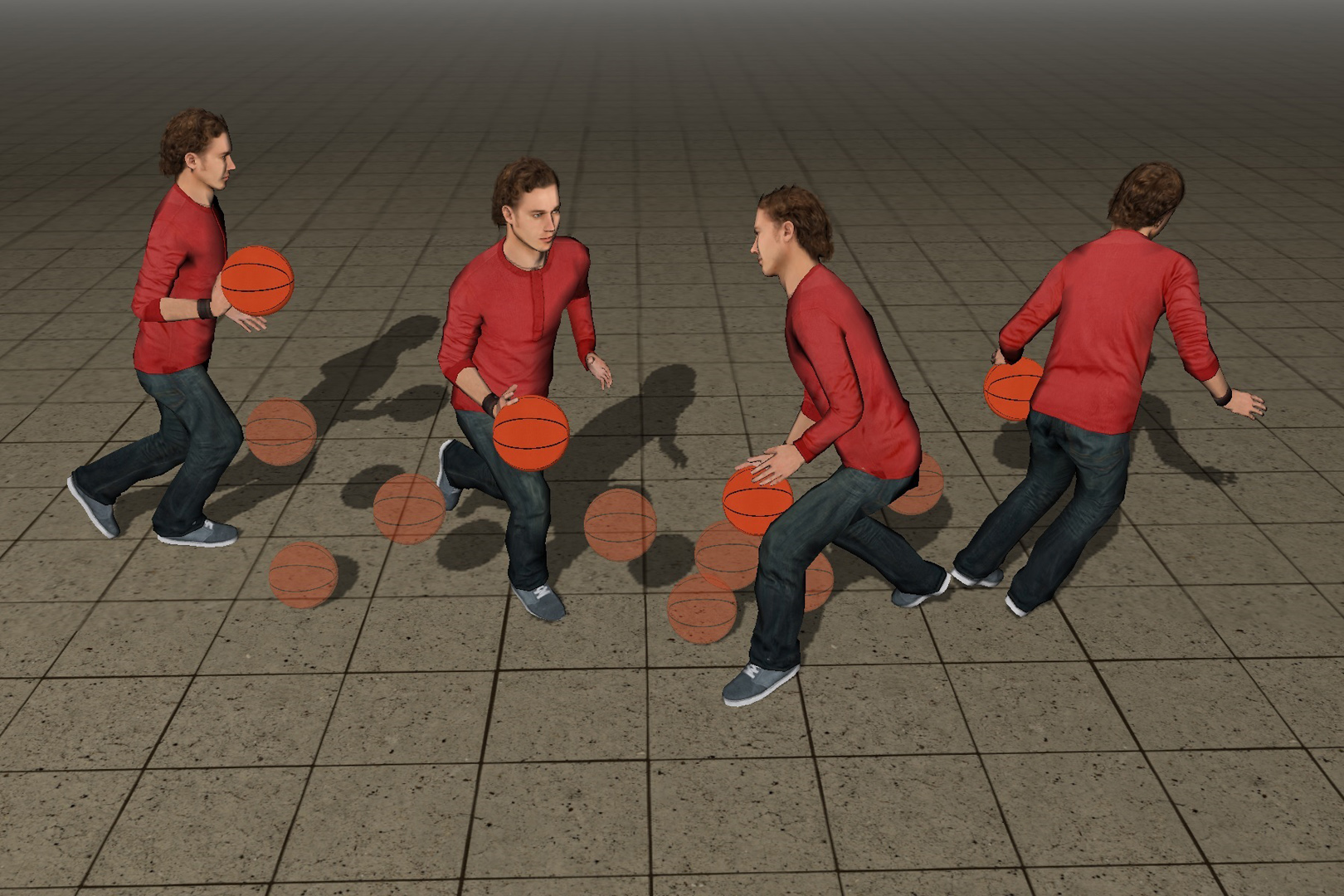

We present an approach that learns to act from raw motion data for interactive character animation. Our motion generator takes a continuous stream of control inputs and generates the character’s motion online. The key insight is to model the rich connections between a multitude of control objectives and a large repertoire of actions. The model is built on a recurrent neural network that is conditioned to handle the spatiotemporal constraints and structural variability of human motion. We also present a new data augmentation method that allows the model to be learned from only a small to moderate amount of training data. The learning process is fully automatic when learning the motion of a single character, and requires minimal user intervention when dealing with props and interactions between multiple characters.
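The online generation loop described in the abstract can be illustrated with a minimal sketch. It is not the authors' model: it assumes a single tanh recurrent layer with random, untrained weights, and the class name `OnlineMotionGenerator`, the function `generate`, and all dimensions are hypothetical. It shows only the data flow, in which each incoming control vector is combined with the previous pose and the recurrent state to produce the next pose.

```python
import math
import random


class OnlineMotionGenerator:
    """Toy recurrent generator that emits one pose per control input.

    Hypothetical sketch: a single tanh recurrent layer with random,
    untrained weights. Names and sizes are assumptions for illustration.
    """

    def __init__(self, control_dim, pose_dim, hidden_dim, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            # Small random weights stand in for learned parameters.
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                    for _ in range(rows)]

        self.w_in = matrix(hidden_dim, control_dim + pose_dim)
        self.w_rec = matrix(hidden_dim, hidden_dim)
        self.w_out = matrix(pose_dim, hidden_dim)
        self.hidden = [0.0] * hidden_dim  # recurrent state carried across steps
        self.pose = [0.0] * pose_dim      # previous output, fed back as input

    @staticmethod
    def _matvec(m, v):
        return [sum(w * x for w, x in zip(row, v)) for row in m]

    def step(self, control):
        """Consume one control vector and return the next pose."""
        x = list(control) + self.pose  # condition on control and previous pose
        a = self._matvec(self.w_in, x)
        b = self._matvec(self.w_rec, self.hidden)
        self.hidden = [math.tanh(p + q) for p, q in zip(a, b)]
        self.pose = self._matvec(self.w_out, self.hidden)
        return self.pose


def generate(generator, control_stream):
    """Yield poses one at a time as control inputs arrive."""
    for control in control_stream:
        yield generator.step(control)


gen = OnlineMotionGenerator(control_dim=2, pose_dim=3, hidden_dim=8)
controls = [[1.0, 0.0], [1.0, 0.5], [0.0, 1.0]]  # made-up control stream
poses = list(generate(gen, controls))
```

Because `generate` is a Python generator, each pose is produced as soon as its control input is read, which mirrors the online setting. A trained model would replace the random weights with learned ones.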
