“High-quality passive facial performance capture using anchor frames” by Beeler, Hahn, Bradley, Bickel, Beardsley, et al. …

Conference:

Type(s):

Title:

- High-quality passive facial performance capture using anchor frames

Presenter(s)/Author(s):

Abstract:

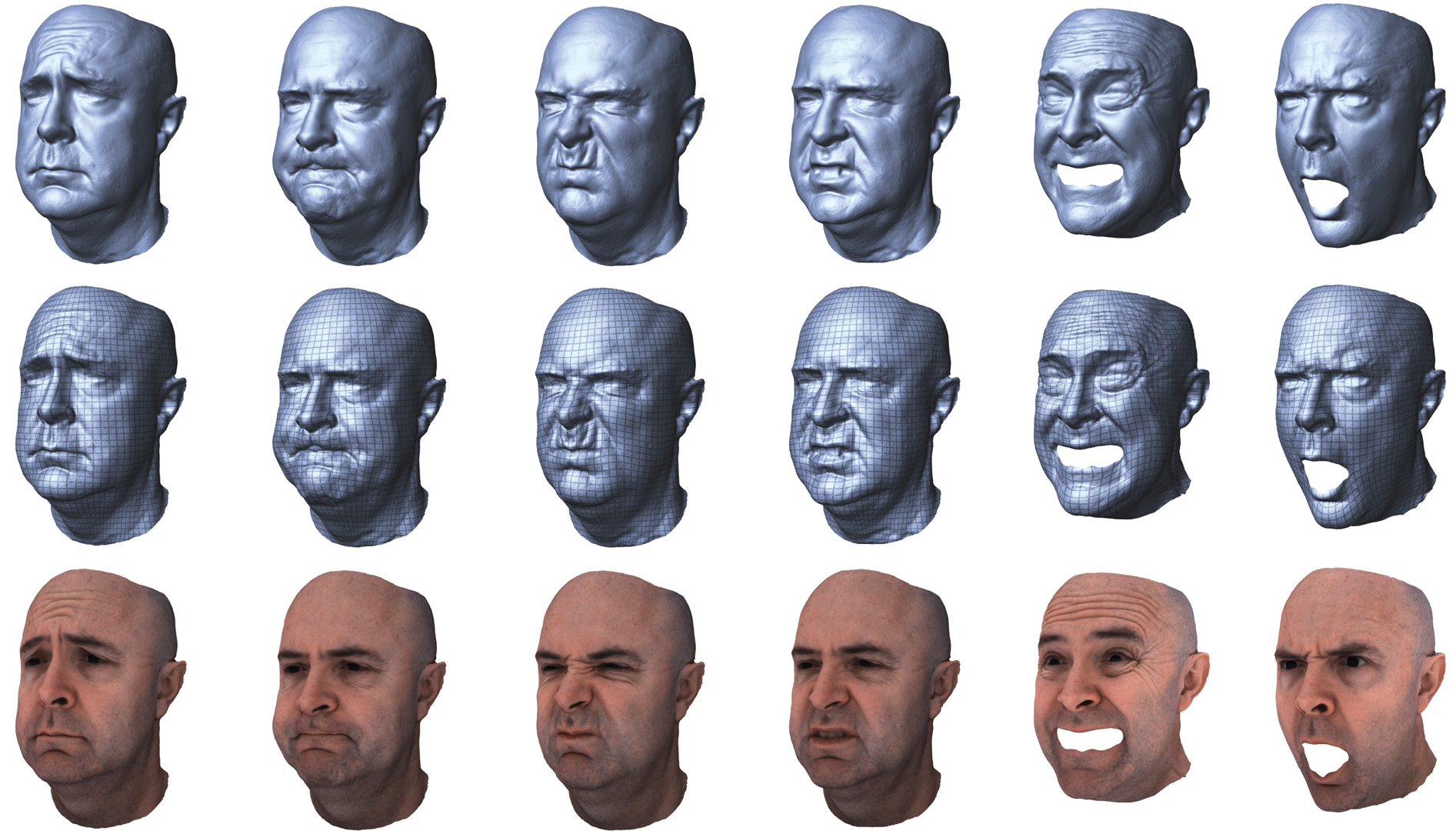

We present a new technique for passive and markerless facial performance capture based on anchor frames. Our method starts with high resolution per-frame geometry acquisition using state-of-the-art stereo reconstruction, and proceeds to establish a single triangle mesh that is propagated through the entire performance. Leveraging the fact that facial performances often contain repetitive subsequences, we identify anchor frames as those which contain similar facial expressions to a manually chosen reference expression. Anchor frames are automatically computed over one or even multiple performances. We introduce a robust image-space tracking method that computes pixel matches directly from the reference frame to all anchor frames, and thereby to the remaining frames in the sequence via sequential matching. This allows us to propagate one reconstructed frame to an entire sequence in parallel, in contrast to previous sequential methods. Our anchored reconstruction approach also limits tracker drift and robustly handles occlusions and motion blur. The parallel tracking and mesh propagation offer low computation times. Our technique will even automatically match anchor frames across different sequences captured on different occasions, propagating a single mesh to all performances.

References:

1. Alexander, O., Rogers, M., Lambeth, W., Chiang, M., and Debevec, P. 2009. The digital Emily project: photoreal facial modeling and animation. In ACM SIGGRAPH Courses, 1–15. Google Scholar

2. Anuar, N., and Guskov, I. 2004. Extracting animated meshes with adaptive motion estimation. In Proc. Vision, Modeling, and Visualization, 63–71.Google Scholar

3. Beeler, T., Bickel, B., Sumner, R., Beardsley, P., and Gross, M. 2010. High-quality single-shot capture of facial geometry. ACM Trans. Graphics (Proc. SIGGRAPH), 40. Google Scholar

4. Bickel, B., Botsch, M., Angst, R., Matusik, W., Otaduy, M., Pfister, H., and Gross, M. 2007. Multi-scale capture of facial geometry and motion. ACM Trans. Graphics (Proc. SIGGRAPH), 33. Google Scholar

5. Blanz, V., Basso, C., Vetter, T., and Poggio, T. 2003. Reanimating faces in images and video. Computer Graphics Forum (Proc. Eurographics) 22, 3, 641–650.Google ScholarCross Ref

6. Bradley, D., Popa, T., Sheffer, A., Heidrich, W., and Boubekeur, T. 2008. Markerless garment capture. ACM Trans. Graphics (Proc. SIGGRAPH), 99. Google Scholar

7. Bradley, D., Heidrich, W., Popa, T., and Sheffer, A. 2010. High resolution passive facial performance capture. ACM Trans. Graphics (Proc. SIGGRAPH), 41. Google Scholar

8. DeCarlo, D., and Metaxas, D. 1996. The integration of optical flow and deformable models with applications to human face shape and motion estimation. In Proc. CVPR, 231–238. Google ScholarDigital Library

9. Ekman, P., and Friesen, W. 1978. The facial action coding system: A technique for the measurement of facial movement. In Consulting Psychologists.Google Scholar

10. Essa, I., Basu, S., Darrell, T., and Pentland, A. 1996. Modeling, tracking and interactive animation of faces and heads using input from video. In Proc. Computer Animation, 68. Google ScholarDigital Library

11. Furukawa, Y., and Ponce, J. 2009. Dense 3D motion capture for human faces. In Proc. CVPR, 1674–1681.Google Scholar

12. Fyffe, G., Hawkins, T., Watts, C., Ma, W.-C., and Debevec, P. 2011. Comprehensive facial performance capture. Comp. Graphics Forum (Proc. Eurographics) 30, 2, 425–434.Google ScholarCross Ref

13. Guenter, B., Grimm, C., Wood, D., Malvar, H., and Pighin, F. 1998. Making faces. In Comp. Graphics, 55–66. Google Scholar

14. Hernández, C., and Vogiatzis, G. 2010. Self-calibrating a real-time monocular 3D facial capture system. In Proceedings International Symposium on 3D Data Processing, Visualization and Transmission (3DPVT).Google Scholar

15. Kraevoy, V., and Sheffer, A. 2004. Cross-parameterization and compatible remeshing of 3D models. ACM Trans. Graph. 23, 861–869. Google ScholarDigital Library

16. Li, H., Roivainen, P., and Forcheimer, R. 1993. 3-D motion estimation in model-based facial image coding. IEEE Trans. Pattern Anal. Mach. Intell. 15, 6, 545–555. Google ScholarDigital Library

17. Lin, I.-C., and Ouhyoung, M. 2005. Mirror mocap: Automatic and efficient capture of dense 3D facial motion parameters from video. The Visual Computer 21, 6, 355–372.Google ScholarCross Ref

18. Lowe, D. G. 2004. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vision 60, 2, 91–110. Google ScholarDigital Library

19. Ma, W.-C., Hawkins, T., Peers, P., Chabert, C.-F., Weiss, M., and Debevec, P. 2007. Rapid acquisition of specular and diffuse normal maps from polarized spherical gradient illumination. In Eurographics Symposium on Rendering, 183–194. Google Scholar

20. Ma, W.-C., Jones, A., Chiang, J.-Y., Hawkins, T., Frederiksen, S., Peers, P., Vukovic, M., Ouhyoung, M., and Debevec, P. 2008. Facial performance synthesis using deformation-driven polynomial displacement maps. ACM Trans. Graphics (Proc. SIGGRAPH Asia) 27, 5, 121. Google ScholarDigital Library

21. Pighin, F. H., Szeliski, R., and Salesin, D. 1999. Resynthesizing facial animation through 3D model-based tracking. In Proc. ICCV, 143–150.Google Scholar

22. Popa, T., South-Dickinson, I., Bradley, D., Sheffer, A., and Heidrich, W. 2010. Globally consistent space-time reconstruction. Comp. Graphics Forum (Proc. SGP), 1633–1642.Google Scholar

23. Rav-Acha, A., Kohli, P., Rother, C., and Fitzgibbon, A. 2008. Unwrap mosaics: A new representation for video editing. ACM Trans. Graphics (Proc. SIGGRAPH), 17. Google Scholar

24. Sharf, A., Alcantara, D. A., Lewiner, T., Greif, C., Sheffer, A., Amenta, N., and Cohen-Or, D. 2008. Space-time surface reconstruction using incompressible flow. ACM Trans. Graphics 27, 110. Google ScholarDigital Library

25. Sumner, R. W., and Popović, J. 2004. Deformation transfer for triangle meshes. ACM Trans. Graphics 23, 399–405. Google ScholarDigital Library

26. Wand, M., Adams, B., Ovsjanikov, M., Berner, A., Bokeloh, M., Jenke, P., Guibas, L., Seidel, H.-P., and Schilling, A. 2009. Efficient reconstruction of nonrigid shape and motion from real-time 3D scanner data. ACM Trans. Graph. 28, 2, 1–15. Google ScholarDigital Library

27. Wang, Y., Huang, X., Lee, C.-S., Zhang, S., Li, Z., Samaras, D., Metaxas, D., Elgammal, A., and Huang, P. 2004. High resolution acquisition, learning and transfer of dynamic 3-D facial expressions. Comp. Graphics Forum 23, 3, 677–686.Google ScholarCross Ref

28. Williams, L. 1990. Performance-driven facial animation. In Computer Graphics (Proc. SIGGRAPH), vol. 24, 235–242. Google ScholarCross Ref

29. Wilson, C. A., Ghosh, A., Peers, P., Chiang, J.-Y., Busch, J., and Debevec, P. 2010. Temporal upsampling of performance geometry using photometric alignment. ACM Trans. Graphics 29, 2. Google ScholarDigital Library

30. Winkler, T., Hormann, K., and Gotsman, C. 2008. Mesh massage. The Visual Computer 24, 775–785. Google ScholarDigital Library

31. Zhang, L., Snavely, N., Curless, B., and Seitz, S. M. 2004. Spacetime faces: High resolution capture for modeling and animation. ACM Trans. Graphics 23, 3, 548–558. Google ScholarDigital Library