“Hand Pose Estimation with MEMS-Ultrasonic Sensors” by Zhang, Lin, Lin and Rusinkiewicz

Title:

- Hand Pose Estimation with MEMS-Ultrasonic Sensors

Session/Category Title:

- Multidisciplinary Fusion

Abstract:

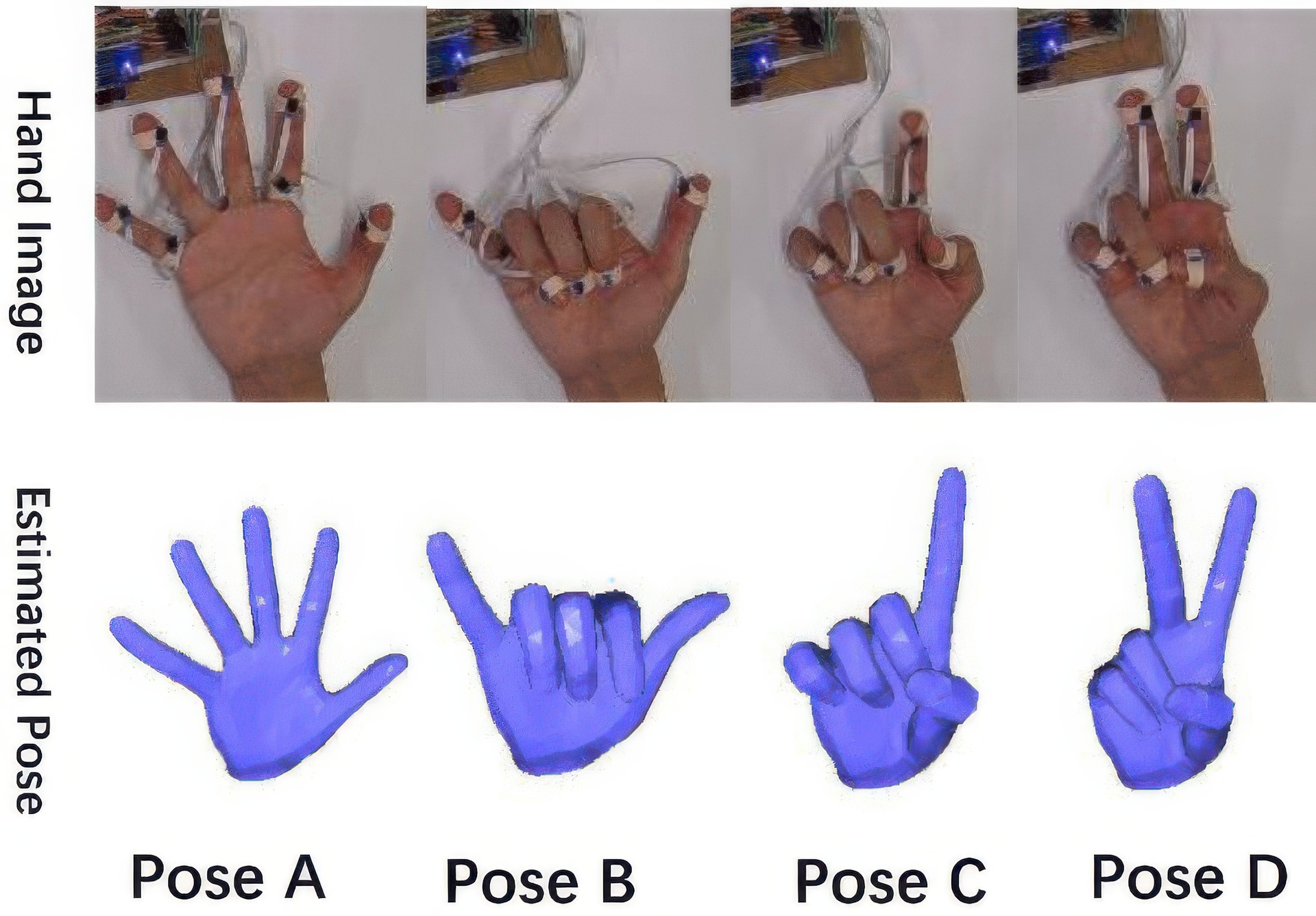

Hand tracking is an important aspect of human-computer interaction and has a wide range of applications in extended reality devices. However, current hand motion capture methods suffer from various limitations. For instance, vision-based hand pose estimation is susceptible to self-occlusion and changes in lighting conditions, while IMU-based tracking gloves exhibit significant drift and are vulnerable to external magnetic field interference. To address these issues, we propose a novel, low-cost hand-tracking glove that uses several MEMS-ultrasonic sensors attached to the fingers to measure the distance matrix among the sensors. A lightweight deep network then reconstructs the hand pose from this distance matrix. Our experimental results demonstrate that the approach is accurate, size-agnostic, and robust to external interference. We also present the design rationale behind the sensor selection, sensor configuration, circuit diagram, and model architecture.
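The abstract says only that the glove measures a distance matrix among the sensors; it does not describe how each distance is obtained. As a minimal sketch, assuming each pair is measured by one-way ultrasonic time-of-flight (one sensor transmits, another receives) and that the speed of sound is a fixed 343 m/s (dry air at roughly 20 °C), the Python below converts raw flight times into a symmetric distance matrix and flattens its unique entries into a vector that a pose-regression network could consume. The function names, the constant `SPEED_OF_SOUND_M_PER_S`, and the choice to average the two directions of each pair are hypothetical illustrations, not the authors' pipeline.

```python
"""Sketch: sensor-to-sensor distance matrix from ultrasonic time-of-flight.

Hypothetical assumptions (not taken from the paper):
  * each pair is measured one-way (sensor i transmits, sensor j receives),
  * the speed of sound is a constant 343 m/s,
  * the readings for i->j and j->i are averaged into one symmetric distance.
"""
from typing import List, Optional

SPEED_OF_SOUND_M_PER_S = 343.0  # hypothetical constant; real value varies with temperature

Matrix = List[List[float]]


def distance_matrix_from_tof(tof_s: List[List[Optional[float]]],
                             c: float = SPEED_OF_SOUND_M_PER_S) -> Matrix:
    """Convert one-way flight times (seconds) into distances (metres).

    tof_s[i][j] is the flight time from transmitter i to receiver j, or None
    if that direction was not measured. Diagonal entries are ignored and the
    self-distance is set to 0.
    """
    n = len(tof_s)
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Collect whichever directions of the pair were actually measured.
            readings = [t for t in (tof_s[i][j], tof_s[j][i]) if t is not None]
            if not readings:
                raise ValueError(f"no measurement for sensor pair ({i}, {j})")
            # One-way flight, so distance = speed * time (no halving as in echo ranging).
            d = c * sum(readings) / len(readings)
            dist[i][j] = dist[j][i] = d
    return dist


def upper_triangle(dist: Matrix) -> List[float]:
    """Flatten the strict upper triangle: the n*(n-1)/2 unique pairwise distances."""
    n = len(dist)
    return [dist[i][j] for i in range(n) for j in range(i + 1, n)]


if __name__ == "__main__":
    # Three hypothetical sensors; None marks the unused diagonal.
    tof = [
        [None, 1.0e-4, 2.0e-4],
        [1.2e-4, None, 1.4e-4],
        [2.0e-4, 1.4e-4, None],
    ]
    features = upper_triangle(distance_matrix_from_tof(tof))
    print(features)  # roughly 3.77 cm, 6.86 cm and 4.80 cm, in metres
```

Keeping only the strict upper triangle drops the redundant lower half and the zero diagonal, leaving n(n-1)/2 values; a glove with, say, 8 sensors would yield 28 inputs. Pairwise distances are also unchanged when the whole hand is translated or rotated, which is one plausible reason such a matrix is a convenient network input; that observation is ours, not a claim from the abstract.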
