“Gaussian material synthesis” by Zsolnai-Fehér, Wonka and Wimmer

Title:

- Gaussian material synthesis

Session/Category Title:

- Layers, Glints and Surface Microstructure

Entry Number:

- 76

Abstract:

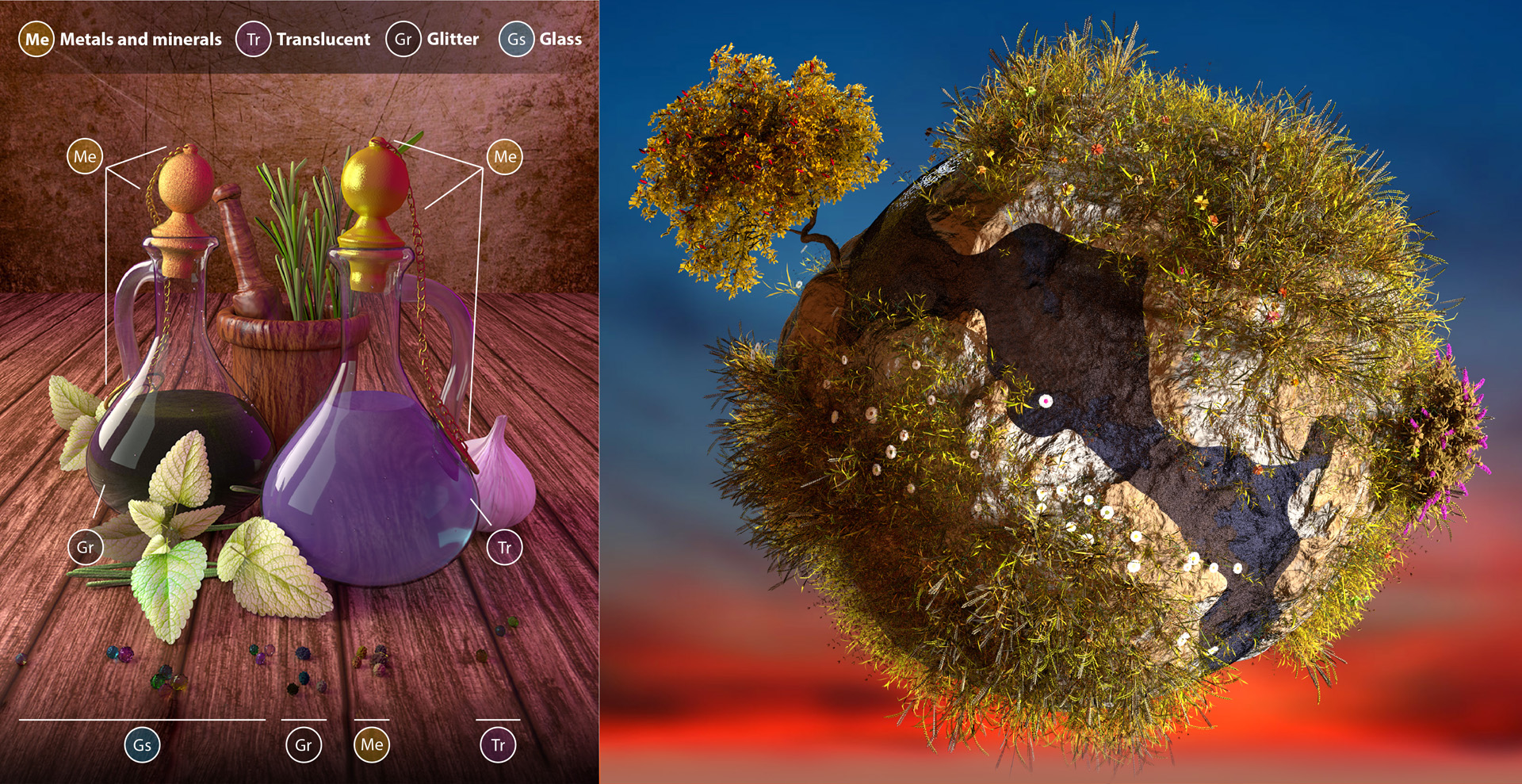

We present a learning-based system for rapid mass-scale material synthesis that is useful for novice and expert users alike. The user preferences are learned via Gaussian Process Regression and can be easily sampled for new recommendations. Typically, each recommendation takes 40-60 seconds to render with global illumination, which makes this process impracticable for real-world workflows. Our neural network eliminates this bottleneck by providing high-quality image predictions in real time, after which it is possible to pick the desired materials from a gallery and assign them to a scene in an intuitive manner. Workflow timings against Disney’s “principled” shader reveal that our system scales well with the number of sought materials, thus empowering even novice users to generate hundreds of high-quality material models without any expertise in material modeling. Similarly, expert users experience a significant decrease in the total modeling time when populating a scene with materials. Furthermore, our proposed solution also offers controllable recommendations and a novel latent space variant generation step to enable the real-time fine-tuning of materials without requiring any domain expertise.
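As a rough illustration of the preference-learning step, the sketch below fits a Gaussian Process Regression model to a handful of user-scored parameter vectors and ranks candidate materials by predicted score. It is a minimal sketch under stated assumptions, not the authors' implementation: the squared-exponential kernel, the `length_scale` and `noise` values, the two-dimensional parameter vectors, and the function names `rbf_kernel`, `gpr_predict`, and `recommend` are all hypothetical choices made for this example.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.5):
    """Squared-exponential covariance between the rows of a and the rows of b."""
    sq_dist = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clamp tiny negative values caused by floating-point round-off.
    return np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gpr_predict(x_train, y_train, x_query, length_scale=0.5, noise=1e-6):
    """Posterior mean of a zero-mean GP at x_query, given scored samples."""
    k_tt = rbf_kernel(x_train, x_train, length_scale)
    k_tt += noise * np.eye(len(x_train))  # jitter for numerical stability
    k_qt = rbf_kernel(x_query, x_train, length_scale)
    # Solve (K + noise * I) alpha = y through a Cholesky factorization.
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    return k_qt @ alpha


def recommend(x_train, y_train, candidates, count=3):
    """Return the `count` candidates with the highest predicted score."""
    scores = gpr_predict(x_train, y_train, candidates)
    best = np.argsort(scores)[::-1][:count]
    return candidates[best], scores[best]


# Hypothetical data: four material parameter vectors with user scores.
x_train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y_train = np.array([1.0, 5.0, 2.0, 9.0])

# Rank a regular grid of candidate parameter vectors by predicted preference.
grid = np.linspace(0.0, 1.0, 5)
candidates = np.array([[u, v] for u in grid for v in grid])
top_params, top_scores = recommend(x_train, y_train, candidates, count=3)
```

In a workflow like the one the abstract describes, the top-ranked parameter vectors would then be passed to the image-predicting network for a preview; that stage is outside the scope of this sketch.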
