“Flycon: real-time environment-independent multi-view human pose estimation with aerial vehicles”

Session/Category Title: Aerial propagation

Abstract:

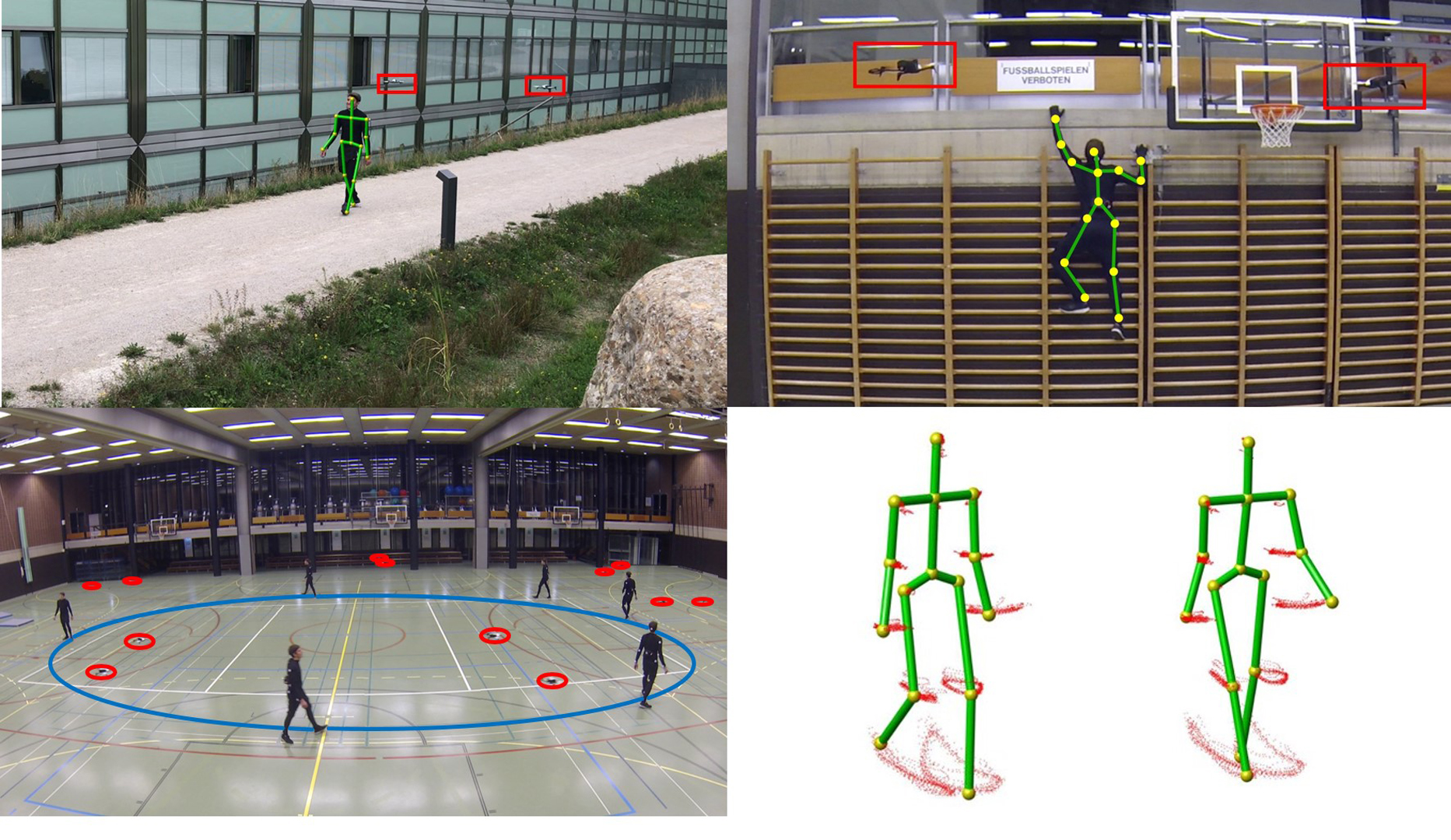

We propose a real-time method for the infrastructure-free estimation of articulated human motion. The approach leverages a swarm of camera-equipped flying robots and jointly optimizes the state of the swarm and the skeletal state, which includes the 3D joint positions and a set of bones. Our method makes it possible to track the motion of human subjects, for example an athlete, over long time horizons and long distances, in challenging settings and at large scale, where approaches relying on fixed infrastructure are not applicable. The proposed algorithm uses active infrared markers, runs in real time, and accurately estimates robot and human pose parameters online, without the need for accurately calibrated or stationarily mounted cameras. Our method (i) estimates a global coordinate frame for the swarm of micro aerial vehicles (MAVs), (ii) jointly optimizes the human pose and the relative camera positions, and (iii) estimates the lengths of the human bones. The entire swarm is then controlled via a model predictive controller that maximizes the visibility of the subject from multiple viewpoints, even under fast motion such as jumping or jogging. We demonstrate our method in a number of difficult scenarios, including the capture of long locomotion sequences at the scale of a triplex gym, in non-planar terrain, during climbing, and outdoors.
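The abstract does not spell out its estimators, so the following is not the Flycon algorithm. It is a minimal sketch of two geometric building blocks behind multi-view marker tracking and bone-length estimation, under simplifying assumptions: the camera centres and the viewing-ray directions toward an infrared marker are taken as already known (Flycon instead optimizes the relative camera positions jointly with the pose), and each bone is assumed to have a constant length, so its least-squares estimate from noisy per-frame joint positions is simply the mean joint-to-joint distance. The function names `triangulate_midpoint` and `estimate_bone_length` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Hypothetical helper: two-view marker triangulation (assumes known cameras).

    Returns the midpoint of the shortest segment between the rays
    c1 + s*d1 and c2 + t*d2, found by setting the gradient of
    |(c1 + s*d1) - (c2 + t*d2)|^2 with respect to s and t to zero.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; the marker is not observable")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = _add(c1, _scale(d1, s))  # closest point on ray 1
    p2 = _add(c2, _scale(d2, t))  # closest point on ray 2
    return _scale(_add(p1, p2), 0.5)


def estimate_bone_length(parent: Sequence[Vec3], child: Sequence[Vec3]) -> float:
    """Hypothetical helper: constant bone length from noisy joint tracks.

    argmin_L sum_k (||parent_k - child_k|| - L)^2 is the mean distance.
    """
    if len(parent) != len(child) or not parent:
        raise ValueError("need equally long, non-empty joint tracks")
    dists = [math.dist(p, q) for p, q in zip(parent, child)]
    return sum(dists) / len(dists)
```

For example, two cameras at `(0, 0, 0)` and `(4, 0, 0)` whose rays point toward a marker at `(1, 2, 3)` recover that point, and for skew rays the helper returns the point halfway between them. In a full system such closed-form estimates would at most serve as an initialization; the joint optimization described above refines poses, camera positions, and bone lengths together.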
