“Eye-catching crowds: saliency based selective variation” by McDonnell, Larkin, Hernández, Rudomin and O’Sullivan

Title:

- Eye-catching crowds: saliency based selective variation

Abstract:

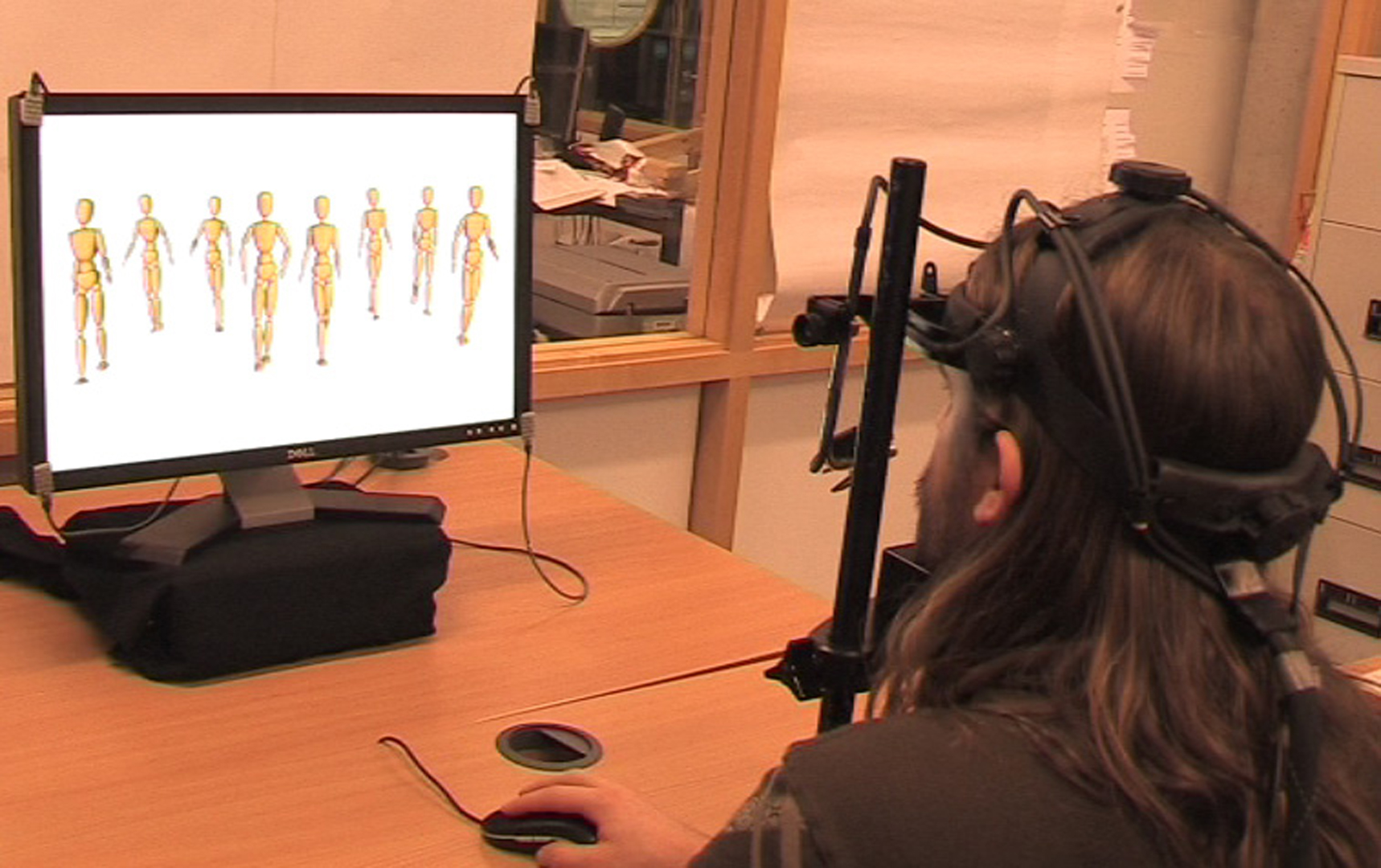

Populated virtual environments need to be simulated with as much variety as possible. By identifying the most salient parts of the scene and characters, available resources can be concentrated where they are needed most. In this paper, we investigate which body parts of virtual characters are most looked at in scenes containing duplicate characters or clones. Using an eye-tracking device, we recorded fixations on body parts while participants were asked to indicate whether clones were present or not. We found that the head and upper torso attract the majority of first fixations in a scene and are attended to most. This is true regardless of the orientation, presence or absence of motion, sex, age, size, and clothing style of the character. We developed a selective variation method to exploit this knowledge and perceptually validated our method. We found that selective colour variation is as effective at generating the illusion of variety as full colour variation. We then evaluated the effectiveness of four variation methods that varied only salient parts of the characters. We found that head accessories, top texture and face texture variation are all equally effective at creating variety, whereas facial geometry alterations are less so. Performance implications and guidelines are presented.
