“Expressive facial subspace construction from key face selection” by Takamizawa, Suzuki, Kubo, Maejima and Morishima

Title:

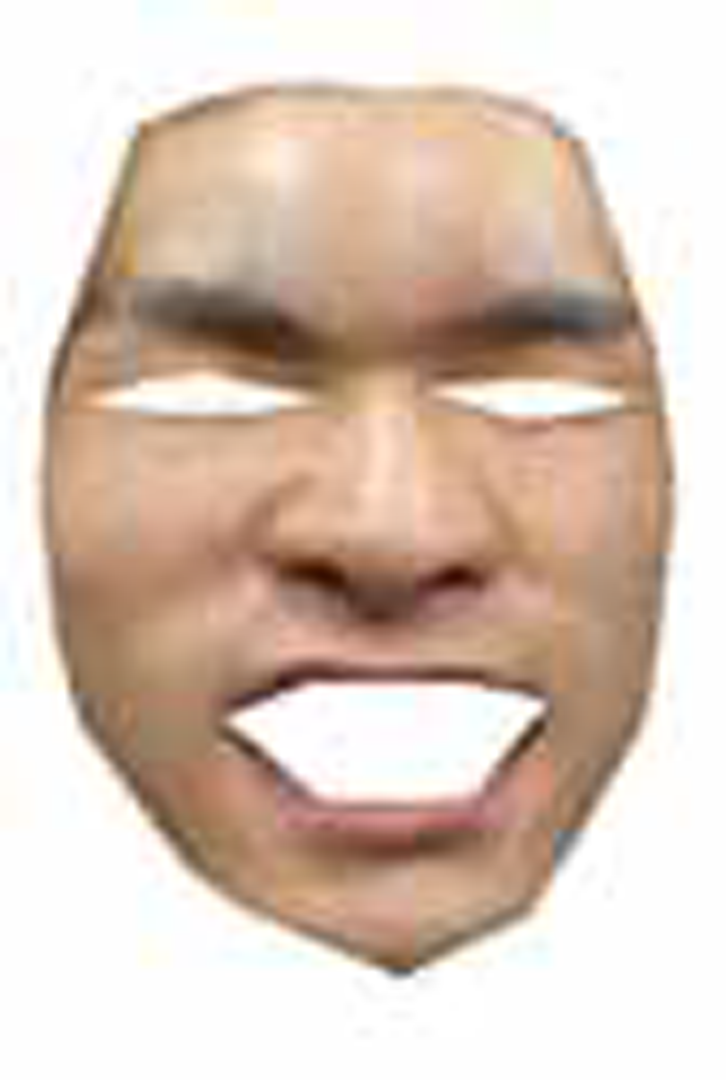

- Expressive facial subspace construction from key face selection

Presenter(s)/Author(s):

Abstract:

MoCap-based facial expression synthesis techniques have been applied to give CG characters expressive and accurate facial expressions [Deng et al. 2006; Lau et al. 2007]. The representational capability of these techniques depends on the variety of the captured facial expressions, yet it is difficult to predict before capture which expressions will be needed to synthesize an expressive face. A large amount of MoCap data is therefore required to construct a subspace using dimensionality reduction techniques; expressions can then be synthesized as linear combinations of the basis vectors of that subspace. However, capturing that much facial MoCap data just to obtain expressive results is laborious.
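As a rough illustration of the subspace idea described in the abstract, the sketch below builds a low-dimensional basis from captured frames and synthesizes a face as the mean plus a linear combination of basis vectors. It is a minimal sketch under stated assumptions, not the authors' method: the abstract does not name the compression technique, so PCA (computed here by power iteration with deflation) is assumed, and every function name and the toy 4-dimensional "marker" data are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def mean_vector(frames):
    n = len(frames)
    return [sum(f[j] for f in frames) / n for j in range(len(frames[0]))]

def covariance(centered):
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / n for j in range(d)]
            for i in range(d)]

def top_eigenvectors(cov, k, iters=500):
    """Power iteration with deflation (assumed stand-in for PCA).
    Assumes the data has at least k directions of nonzero variance."""
    d = len(cov)
    basis = []
    for idx in range(k):
        v = [1.0 + 0.1 * (i + idx) for i in range(d)]  # deterministic start
        for _ in range(iters):
            w = [dot(row, v) for row in cov]
            for b in basis:  # remove components along vectors already found
                p = dot(w, b)
                w = [wi - p * bi for wi, bi in zip(w, b)]
            norm = math.sqrt(dot(w, w))
            if norm < 1e-12:
                break
            v = [wi / norm for wi in w]
        basis.append(v)
    return basis

def build_subspace(frames, k):
    """Return the mean face and k orthonormal basis vectors."""
    mean = mean_vector(frames)
    centered = [[x - m for x, m in zip(f, mean)] for f in frames]
    return mean, top_eigenvectors(covariance(centered), k)

def project(face, mean, basis):
    """Coefficients of a face in the subspace."""
    diff = [x - m for x, m in zip(face, mean)]
    return [dot(diff, b) for b in basis]

def synthesize(coeffs, mean, basis):
    """Mean face plus a linear combination of the basis vectors."""
    out = list(mean)
    for c, b in zip(coeffs, basis):
        out = [o + c * bi for o, bi in zip(out, b)]
    return out

def make_demo_frames():
    """Toy data (hypothetical): frames vary along only two directions."""
    base = [1.0, 2.0, 3.0, 4.0]
    d1 = [1.0, 0.0, 1.0, 0.0]
    d2 = [0.0, 1.0, 0.0, -1.0]
    weights = [(2, 0), (-2, 0), (0, 1), (0, -1), (1, 0.5), (-1, -0.5)]
    return [[b + a * x + c * y for b, x, y in zip(base, d1, d2)]
            for a, c in weights]

if __name__ == "__main__":
    mean, basis = build_subspace(make_demo_frames(), k=2)
    target = [1.7, 1.7, 3.7, 4.3]  # an expression not among the captured frames
    coeffs = project(target, mean, basis)
    print(coeffs, synthesize(coeffs, mean, basis))
```

Because the toy frames vary along only two directions, two basis vectors reproduce any expression in that span. Real capture data would need more basis vectors, and the result would only be as expressive as the frames used to build the subspace, which is the dependency the abstract points out.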

References:

1. Deng, Z. Chiang, PY. Neumann, U. et al. 2006. Animating Blendshape Faces by Cross-Mapping Motion Capture Data. In Proceedings of ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, 43–48.

2. Lau, M. Chai, J. Xu, YQ. et al. 2007. Face Poser: Interactive Modeling of 3D Facial Expressions Using Model Priors. In Proceedings of the 2007 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, 161–170.