“Editable free-viewpoint video using a layered neural representation” by Zhang, Liu, Ye, Zhao, Zhang, et al. …

Conference:

Type(s):

Title:

- Editable free-viewpoint video using a layered neural representation

Presenter(s)/Author(s):

Abstract:

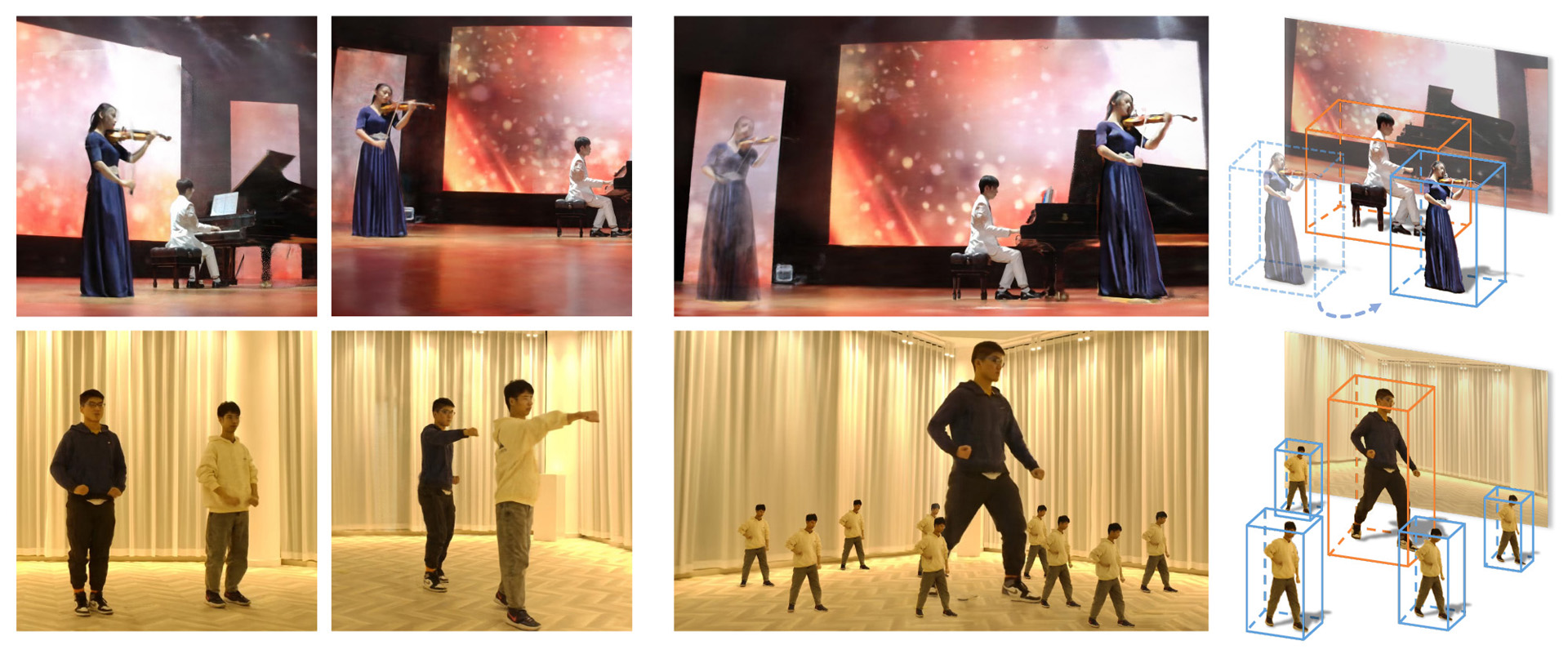

Generating free-viewpoint videos is critical for immersive VR/AR experience, but recent neural advances still lack the editing ability to manipulate the visual perception for large dynamic scenes. To fill this gap, in this paper, we propose the first approach for editable free-viewpoint video generation for large-scale view-dependent dynamic scenes using only 16 cameras. The core of our approach is a new layered neural representation, where each dynamic entity, including the environment itself, is formulated into a spatio-temporal coherent neural layered radiance representation called ST-NeRF. Such a layered representation supports manipulations of the dynamic scene while still supporting a wide free viewing experience. In our ST-NeRF, we represent the dynamic entity/layer as a continuous function, which achieves the disentanglement of location, deformation as well as the appearance of the dynamic entity in a continuous and self-supervised manner. We propose a scene parsing 4D label map tracking to disentangle the spatial information explicitly and a continuous deform module to disentangle the temporal motion implicitly. An object-aware volume rendering scheme is further introduced for the re-assembling of all the neural layers. We adopt a novel layered loss and motion-aware ray sampling strategy to enable efficient training for a large dynamic scene with multiple performers, Our framework further enables a variety of editing functions, i.e., manipulating the scale and location, duplicating or retiming individual neural layers to create numerous visual effects while preserving high realism. Extensive experiments demonstrate the effectiveness of our approach to achieve high-quality, photo-realistic, and editable free-viewpoint video generation for dynamic scenes.

References:

1. Kfir Aberman, Mingyi Shi, Jing Liao, Dani Lischinski, Baoquan Chen, and Daniel Cohen-Or. 2018. Deep Video-Based Performance Cloning. arXiv:1808.06847 [cs.CV]Google Scholar

2. Naveed Ahmed, Christian Theobalt, Petar Dobrev, Hans-Peter Seidel, and Sebastian Thrun. 2008. Robust fusion of dynamic shape and normal capture for high-quality reconstruction of time-varying geometry.. In CVPR. IEEE Computer Society. http://dblp.uni-trier.de/db/conf/cvpr/cvpr2008.html#AhmedTDST08Google ScholarCross Ref

3. Kara-Ali Aliev, Artem Sevastopolsky, Maria Kolos, Dmitry Ulyanov, and Victor Lempitsky. 2020. Neural Point-Based Graphics. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 696–712.Google ScholarDigital Library

4. Aayush Bansal, Minh Vo, Yaser Sheikh, Deva Ramanan, and Srinivasa Narasimhan. 2020. 4D Visualization of Dynamic Events From Unconstrained Multi-View Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE.Google ScholarCross Ref

5. Mojtaba Bemana, Karol Myszkowski, Hans-Peter Seidel, and Tobias Ritschel. 2020. XFields: implicit neural view-, light-and time-image interpolation. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1–15.Google ScholarDigital Library

6. Michael Broxton, John Flynn, Ryan Overbeck, Daniel Erickson, Peter Hedman, Matthew Duvall, Jason Dourgarian, Jay Busch, Matt Whalen, and Paul Debevec. 2020. Immersive light field video with a layered mesh representation. ACM Transactions on Graphics (TOG) 39, 4 (2020), 86–1.Google ScholarDigital Library

7. Chris Buehler, Michael Bosse, Leonard McMillan, Steven Gortler, and Michael Cohen. 2001. Unstructured Lumigraph Rendering. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’01). Association for Computing Machinery, New York, NY, USA, 425–432. Google ScholarDigital Library

8. Joel Carranza, Christian Theobalt, Marcus A Magnor, and Hans-Peter Seidel. 2003. Free-viewpoint video of human actors. ACM transactions on graphics (TOG) 22, 3 (2003), 569–577.Google Scholar

9. Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei Efros. 2019. Everybody Dance Now. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 5932–5941. Google ScholarCross Ref

10. Gaurav Chaurasia, Sylvain Duchene, Olga Sorkine-Hornung, and George Drettakis. 2013. Depth synthesis and local warps for plausible image-based navigation. ACM Transactions on Graphics (TOG) 32, 3 (2013), 1–12.Google ScholarDigital Library

11. Shenchang Eric Chen and Lance Williams. 1993. View Interpolation for Image Synthesis. In Proceedings of the 20th Annual Conference on Computer Graphics and Interactive Techniques (Anaheim, CA) (SIGGRAPH ’93). Association for Computing Machinery, New York, NY, USA, 279–288. Google ScholarDigital Library

12. Inchang Choi, Orazio Gallo, Alejandro Troccoli, Min H. Kim, and Jan Kautz. 2019. Extreme View Synthesis. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 7780–7789. Google ScholarCross Ref

13. Alvaro Collet, Ming Chuang, Pat Sweeney, Don Gillett, Dennis Evseev, David Calabrese, Hugues Hoppe, Adam Kirk, and Steve Sullivan. 2015. High-quality streamable free-viewpoint video. ACM Transactions on Graphics (TOG) 34, 4 (2015), 69.Google ScholarDigital Library

14. Abe Davis and Maneesh Agrawala. 2018. Visual Rhythm and Beat. ACM Trans. Graph. 37, 4, Article 122 (July 2018), 11 pages. Google ScholarDigital Library

15. Abe Davis, Marc Levoy, and Fredo Durand. 2012. Unstructured Light Fields. Comput. Graph. Forum 31, 2pt1 (May 2012), 305–314. Google ScholarDigital Library

16. Paul E. Debevec, Camillo J. Taylor, and Jitendra Malik. 1996. Modeling and Rendering Architecture from Photographs: A Hybrid Geometry- and Image-Based Approach. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’96). Association for Computing Machinery, New York, NY, USA, 11–20. Google ScholarDigital Library

17. Mingsong Dou, Philip Davidson, Sean Ryan Fanello, Sameh Khamis, Adarsh Kowdle, Christoph Rhemann, Vladimir Tankovich, and Shahram Izadi. 2017. Motion2Fusion: Real-time Volumetric Performance Capture. ACM Trans. Graph. 36, 6, Article 246 (Nov. 2017), 16 pages.Google ScholarDigital Library

18. Mingsong Dou, Sameh Khamis, Yury Degtyarev, Philip Davidson, Sean Ryan Fanello, Adarsh Kowdle, Sergio Orts Escolano, Christoph Rhemann, David Kim, Jonathan Taylor, Pushmeet Kohli, Vladimir Tankovich, and Shahram Izadi. 2016. Fusion4D: Real-Time Performance Capture of Challenging Scenes. ACM Trans. Graph. 35, 4, Article 114 (July 2016), 13 pages. Google ScholarDigital Library

19. John Flynn, Michael Broxton, Paul Debevec, Matthew DuVall, Graham Fyffe, Ryan Overbeck, Noah Snavely, and Richard Tucker. 2019. DeepView: View Synthesis With Learned Gradient Descent. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2362–2371. Google ScholarCross Ref

20. John Flynn, Ivan Neulander, James Philbin, and Noah Snavely. 2016. Deep Stereo: Learning to Predict New Views from the World’s Imagery. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 5515–5524. Google ScholarCross Ref

21. Oran Gafni, Lior Wolf, and Yaniv Taigman. 2019. Vid2Game: Controllable Characters Extracted from Real-World Videos. arXiv:1904.08379 [cs.LG]Google Scholar

22. Dan B. Goldman, Chris Gonterman, Brian Curless, David Salesin, and Steven M. Seitz. 2008. Video Object Annotation, Navigation, and Composition. In Proceedings of the 21st Annual ACM Symposium on User Interface Software and Technology (Monterey, CA, USA) (UIST ’08). Association for Computing Machinery, New York, NY, USA, 3–12. Google ScholarDigital Library

23. Steven J. Gortler, Radek Grzeszczuk, Richard Szeliski, and Michael F. Cohen. 1996. The Lumigraph. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’96). Association for Computing Machinery, New York, NY, USA, 43–54. Google ScholarDigital Library

24. Yannan He, Anqi Pang, Xin Chen, Han Liang, Minye Wu, Yuexin Ma, and Lan Xu. 2021. ChallenCap: Monocular 3D Capture of Challenging Human Performances using Multi-Modal References. arXiv preprint arXiv:2103.06747 (2021).Google Scholar

25. Peter Hedman, Suhib Alsisan, Richard Szeliski, and Johannes Kopf. 2017. Casual 3D photography. ACM Transactions on Graphics (TOG) 36, 6 (2017), 1–15.Google ScholarDigital Library

26. Peter Hedman, Julien Philip, True Price, Jan-Michael Frahm, George Drettakis, and Gabriel Brostow. 2018. Deep Blending for Free-Viewpoint Image-Based Rendering. ACM Trans. Graph. 37, 6, Article 257 (Dec. 2018), 15 pages. Google ScholarDigital Library

27. Peter Hedman, Tobias Ritschel, George Drettakis, and Gabriel Brostow. 2016. Scalable inside-out image-based rendering. ACM Transactions on Graphics (TOG) 35, 6 (2016), 1–11.Google ScholarDigital Library

28. Shi Jin, Ruiynag Liu, Yu Ji, Jinwei Ye, and Jingyi Yu. 2018. Learning to Dodge A Bullet: Concyclic View Morphing via Deep Learning. In Computer Vision – ECCV 2018, Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss (Eds.). Springer International Publishing, Cham, 230–246.Google ScholarCross Ref

29. Hanbyul Joo, Tomas Simon, and Yaser Sheikh. 2018. Total Capture: A 3D Deformation Model for Tracking Faces, Hands, and Bodies. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8320–8329. Google ScholarCross Ref

30. Hansung Kim, Jean-Yves Guillemaut, Takeshi Takai, Muhammad Sarim, and Adrian Hilton. 2012. Outdoor Dynamic 3-D Scene Reconstruction. IEEE Transactions on Circuits and Systems for Video Technology 22, 11 (2012), 1611–1622. Google ScholarDigital Library

31. Suryansh Kumar, Yuchao Dai, and Hongdong Li. 2021. Superpixel Soup: Monocular Dense 3D Reconstruction of a Complex Dynamic Scene. IEEE Transactions on Pattern Analysis and Machine Intelligence 43, 5 (2021), 1705–1717. Google ScholarCross Ref

32. Kiriakos N Kutulakos and Steven M Seitz. 2000. A theory of shape by space carving. International journal of computer vision 38, 3 (2000), 199–218.Google Scholar

33. Youngjoong Kwon, Stefano Petrangeli, Dahun Kim, Haoliang Wang, Eunbyung Park, Viswanathan Swaminathan, and Henry Fuchs. 2020. Rotationally-Temporally Consistent Novel View Synthesis of Human Performance Video. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 387–402.Google ScholarDigital Library

34. Marc Levoy and Pat Hanrahan. 1996. Light Field Rendering. In Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’96). Association for Computing Machinery, New York, NY, USA, 31–42. Google ScholarDigital Library

35. Zhengqi Li, Tali Dekel, Forrester Cole, Richard Tucker, Noah Snavely, Ce Liu, and William T. Freeman. 2019. Learning the Depths of Moving People by Watching Frozen People. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 4516–4525. Google ScholarCross Ref

36. Zhengqi Li, Simon Niklaus, Noah Snavely, and Oliver Wang. 2020. Neural Scene Flow Fields for Space-Time View Synthesis of Dynamic Scenes. arXiv preprint arXiv:2011.13084 (2020).Google Scholar

37. Christian Lipski, Christian Linz, Kai Berger, Anita Sellent, and Marcus Magnor. 2010. Virtual video camera: Image-based viewpoint navigation through space and time. In Computer Graphics Forum, Vol. 29. Wiley Online Library, 2555–2568.Google Scholar

38. Lingjie Liu, Weipeng Xu, Michael Zollhoefer, Hyeongwoo Kim, Florian Bernard, Marc Habermann, Wenping Wang, and Christian Theobalt. 2019. Neural rendering and reenactment of human actor videos. ACM Transactions on Graphics (TOG) 38, 5 (2019), 1–14.Google ScholarDigital Library

39. Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. 2019. Neural Volumes: Learning Dynamic Renderable Volumes from Images. ACM Trans. Graph. 38, 4, Article 65 (July 2019), 14 pages. Google ScholarDigital Library

40. Matthew Loper, Naureen Mahmood, Javier Romero, Gerard Pons-Moll, and Michael J Black. 2015. SMPL: A skinned multi-person linear model. ACM transactions on graphics (TOG) 34, 6 (2015), 1–16.Google ScholarDigital Library

41. Erika Lu, Forrester Cole, Tali Dekel, Weidi Xie, Andrew Zisserman, David Salesin, William T. Freeman, and Michael Rubinstein. 2020. Layered Neural Rendering for Retiming People in Video. arXiv:2009.07833 [cs.CV]Google Scholar

42. Keyang Luo, Tao Guan, Lili Ju, Haipeng Huang, and Yawei Luo. 2019. P-MVSNet: Learning Patch-Wise Matching Confidence Aggregation for Multi-View Stereo. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 10451–10460. Google ScholarCross Ref

43. Xuan Luo, Jia-Bin Huang, Richard Szeliski, Kevin Matzen, and Johannes Kopf. 2020. Consistent video depth estimation. ACM Transactions on Graphics (TOG) 39, 4 (2020), 71–1.Google ScholarDigital Library

44. Zhaoyang Lv, Kihwan Kim, Alejandro Troccoli, Deqing Sun, James M. Rehg, and Jan Kautz. 2018. Learning Rigidity in Dynamic Scenes with a Moving Camera for 3D Motion Field Estimation. In Computer Vision – ECCV 2018, Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss (Eds.). Springer International Publishing, Cham, 484–501.Google ScholarCross Ref

45. Wojciech Matusik, Chris Buehler, Ramesh Raskar, Steven J. Gortler, and Leonard McMillan. 2000. Image-Based Visual Hulls. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’00). ACM Press/Addison-Wesley Publishing Co., USA, 369–374. Google ScholarDigital Library

46. Moustafa Meshry, Dan B. Goldman, Sameh Khamis, Hugues Hoppe, Rohit Pandey, Noah Snavely, and Ricardo Martin-Brualla. 2019a. Neural Rerendering in the Wild. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 6871–6880. Google ScholarCross Ref

47. Moustafa Meshry, Dan B Goldman, Sameh Khamis, Hugues Hoppe, Rohit Pandey, Noah Snavely, and Ricardo Martin-Brualla. 2019b. Neural rerendering in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6878–6887.Google ScholarCross Ref

48. Ben Mildenhall, Pratul P Srinivasan, Rodrigo Ortiz-Cayon, Nima Khademi Kalantari, Ravi Ramamoorthi, Ren Ng, and Abhishek Kar. 2019. Local light field fusion: Practical view synthesis with prescriptive sampling guidelines. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–14.Google ScholarDigital Library

49. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020a. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 405–421.Google ScholarDigital Library

50. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2020b. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 405–421.Google ScholarDigital Library

51. Armin Mustafa, Hansung Kim, Jean-Yves Guillemaut, and Adrian Hilton. 2016. Temporally Coherent 4D Reconstruction of Complex Dynamic Scenes. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 4660–4669. Google ScholarCross Ref

52. Richard A. Newcombe, Dieter Fox, and Steven M. Seitz. 2015. DynamicFusion: Reconstruction and tracking of non-rigid scenes in real-time. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 343–352. Google ScholarCross Ref

53. Richard A. Newcombe, Shahram Izadi, Otmar Hilliges, David Molyneaux, David Kim, Andrew J. Davison, Pushmeet Kohi, Jamie Shotton, Steve Hodges, and Andrew Fitzgibbon. 2011. KinectFusion: Real-time dense surface mapping and tracking. In 2011 10th IEEE International Symposium on Mixed and Augmented Reality. 127–136. Google ScholarDigital Library

54. Julian Ost, Fahim Mannan, Nils Thuerey, Julian Knodt, and Felix Heide. 2020. Neural Scene Graphs for Dynamic Scenes.Google Scholar

55. Keunhong Park, Utkarsh Sinha, Jonathan T Barron, Sofien Bouaziz, Dan B Goldman, Steven M Seitz, and Ricardo-Martin Brualla. 2020. Deformable Neural Radiance Fields. arXiv preprint arXiv:2011.12948 (2020).Google Scholar

56. Eric Penner and Li Zhang. 2017. Soft 3D reconstruction for view synthesis. ACM Transactions on Graphics (TOG) 36, 6 (2017), 1–11.Google ScholarDigital Library

57. Albert Pumarola, Enric Corona, Gerard Pons-Moll, and Francesc Moreno-Noguer. 2020. D-NeRF: Neural Radiance Fields for Dynamic Scenes. arXiv preprint arXiv:2011.13961 (2020).Google Scholar

58. René Ranftl, Vibhav Vineet, Qifeng Chen, and Vladlen Koltun. 2016. Dense Monocular Depth Estimation in Complex Dynamic Scenes. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 4058–4066. Google ScholarCross Ref

59. Daniel Rebain, Wei Jiang, Soroosh Yazdani, Ke Li, Kwang Moo Yi, and Andrea Tagliasacchi. 2020. DeRF: Decomposed Radiance Fields. arXiv preprint arXiv:2011.12490 (2020).Google Scholar

60. Chris Russell, Rui Yu, and Lourdes Agapito. 2014. Video Pop-up: Monocular 3D Reconstruction of Dynamic Scenes. In Computer Vision – ECCV 2014, David Fleet, Tomas Pajdla, Bernt Schiele, and Tinne Tuytelaars (Eds.). Springer International Publishing, Cham, 583–598.Google Scholar

61. Jae Shin Yoon, Kihwan Kim, Orazio Gallo, Hyun Soo Park, and Jan Kautz. 2020. Novel View Synthesis of Dynamic Scenes With Globally Coherent Depths From a Monocular Camera. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 5335–5344. Google ScholarCross Ref

62. Vincent Sitzmann, Justus Thies, Felix Heide, Matthias Niessner, Gordon Wetzstein, and Michael Zollhofer. 2019a. DeepVoxels: Learning Persistent 3D Feature Embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).Google ScholarCross Ref

63. Vincent Sitzmann, Michael Zollhoefer, and Gordon Wetzstein. 2019b. Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations. In Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E. Fox, and R. Garnett (Eds.), Vol. 32. Cur-ran Associates, Inc., 1121–1132. https://proceedings.neurips.cc/paper/2019/file/b5dc4e5d9b495d0196f61d45b26ef33e-Paper.pdfGoogle Scholar

64. Noah Snavely, Steven M. Seitz, and Richard Szeliski. 2006. Photo Tourism: Exploring Photo Collections in 3D. ACM Trans. Graph. 25, 3 (July 2006), 835–846. Google ScholarDigital Library

65. Pratul P. Srinivasan, Richard Tucker, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng, and Noah Snavely. 2019. Pushing the Boundaries of View Extrapolation With Multiplane Images. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 175–184. Google ScholarCross Ref

66. Zhuo Su, Lan Xu, Zerong Zheng, Tao Yu, Yebin Liu, and Lu Fang. 2020. RobustFusion: Human Volumetric Capture with Data-Driven Visual Cues Using a RGBD Camera. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 246–264.Google ScholarDigital Library

67. Xin Suo, Yuheng Jiang, Pei Lin, Yingliang Zhang, Kaiwen Guo, Minye Wu, and Lan Xu. 2021. NeuralHumanFVV: Real-Time Neural Volumetric Human Performance Rendering using RGB Cameras. arXiv preprint arXiv:2103.07700 (2021).Google Scholar

68. Aparna Taneja, Luca Ballan, and Marc Pollefeys. 2011. Modeling Dynamic Scenes Recorded with Freely Moving Cameras. In Computer Vision – ACCV 2010, Ron Kimmel, Reinhard Klette, and Akihiro Sugimoto (Eds.). Springer Berlin Heidelberg, Berlin, Heidelberg, 613–626.Google Scholar

69. A. Tewari, O. Fried, J. Thies, V. Sitzmann, S. Lombardi, K. Sunkavalli, R. Martin-Brualla, T. Simon, J. Saragih, M. Nießner, R. Pandey, S. Fanello, G. Wetzstein, J.-Y. Zhu, C. Theobalt, M. Agrawala, E. Shechtman, D. B Goldman, and M. Zollhöfer. 2020. State of the Art on Neural Rendering. Computer Graphics Forum 39, 2 (2020), 701–727. arXiv:https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.14022 Google ScholarCross Ref

70. Justus Thies, Michael Zollhöfer, and Matthias Nießner. 2019. Deferred neural rendering: Image synthesis using neural textures. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–12.Google ScholarDigital Library

71. Justus Thies, Michael Zollhöfer, Christian Theobalt, Marc Stamminger, and Matthias Nießner. 2018. Ignor: Image-guided neural object rendering. arXiv preprint arXiv:1811.10720 (2018).Google Scholar

72. Edgar Tretschk, Ayush Tewari, Vladislav Golyanik, Michael Zollhöfer, Christoph Lassner, and Christian Theobalt. 2020. Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synthesis of a Deforming Scene from Monocular Video. arXiv preprint arXiv:2012.12247 (2020).Google Scholar

73. Geert Verhoeven. 2011. Taking computer vision aloft-archaeological three-dimensional reconstructions from aerial photographs with photoscan. Archaeological prospection 18, 1 (2011), 67–73.Google Scholar

74. Daniel Vlasic, Pieter Peers, Ilya Baran, Paul Debevec, Jovan Popović, Szymon Rusinkiewicz, and Wojciech Matusik. 2009. Dynamic Shape Capture Using Multi-View Photometric Stereo. In ACM SIGGRAPH Asia 2009 Papers (Yokohama, Japan) (SIGGRAPH Asia ’09). Association for Computing Machinery, New York, NY, USA, Article 174, 11 pages. Google ScholarDigital Library

75. Qiang Wang, Li Zhang, Luca Bertinetto, Weiming Hu, and Philip H.S. Torr. 2019. Fast Online Object Tracking and Segmentation: A Unifying Approach. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 1328–1338. Google ScholarCross Ref

76. Minye Wu, Haibin Ling, Ning Bi, Shenghua Gao, Qiang Hu, Hao Sheng, and Jingyi Yu. 2020a. Visual Tracking With Multiview Trajectory Prediction. IEEE Transactions on Image Processing 29 (2020), 8355–8367.Google ScholarCross Ref

77. Minye Wu, Yuehao Wang, Qiang Hu, and Jingyi Yu. 2020b. Multi-View Neural Human Rendering. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 1679–1688. Google ScholarCross Ref

78. Wenqi Xian, Jia-Bin Huang, Johannes Kopf, and Changil Kim. 2020. Space-time Neural Irradiance Fields for Free-Viewpoint Video. arXiv preprint arXiv:2011.12950 (2020).Google Scholar

79. Lan Xu, Wei Cheng, Kaiwen Guo, Lei Han, Yebin Liu, and Lu Fang. 2021. FlyFusion: Realtime Dynamic Scene Reconstruction Using a Flying Depth Camera. IEEE Transactions on Visualization and Computer Graphics 27, 1 (2021), 68–82. Google ScholarDigital Library

80. Lan Xu, Yebin Liu, Wei Cheng, Kaiwen Guo, Guyue Zhou, Qionghai Dai, and Lu Fang. 2018. FlyCap: Markerless Motion Capture Using Multiple Autonomous Flying Cameras. IEEE Transactions on Visualization and Computer Graphics 24, 8 (Aug 2018), 2284–2297. Google ScholarCross Ref

81. Lan Xu, Zhuo Su, Lei Han, Tao Yu, Yebin Liu, and Lu Fang. 2020. UnstructuredFusion: Realtime 4D Geometry and Texture Reconstruction Using Commercial RGBD Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 42, 10 (Oct. 2020), 2508–2522. Google ScholarDigital Library

82. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019a. Deep view synthesis from sparse photometric images. ACM Transactions on Graphics (TOG) 38, 4 (2019), 1–13.Google ScholarDigital Library

83. Zexiang Xu, Sai Bi, Kalyan Sunkavalli, Sunil Hadap, Hao Su, and Ravi Ramamoorthi. 2019b. Deep View Synthesis from Sparse Photometric Images. ACM Trans. Graph. 38, 4, Article 76 (July 2019), 13 pages. Google ScholarDigital Library

84. Longwen Zhang, Qixuan Zhang, Minye Wu, Jingyi Yu, and Lan Xu. 2021. Neural Video Portrait Relighting in Real-time via Consistency Modeling. arXiv preprint arXiv:2104.00484 (2021).Google Scholar

85. Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 586–595. Google ScholarCross Ref

86. Tinghui Zhou, Richard Tucker, John Flynn, Graham Fyffe, and Noah Snavely. 2018. Stereo Magnification: Learning View Synthesis Using Multiplane Images. ACM Trans. Graph. 37, 4, Article 65 (July 2018), 12 pages. Google ScholarDigital Library

87. C Lawrence Zitnick, Sing Bing Kang, Matthew Uyttendaele, Simon Winder, and Richard Szeliski. 2004. High-quality video view interpolation using a layered representation. ACM transactions on graphics (TOG) 23, 3 (2004), 600–608.Google Scholar