“Designing an encoder for StyleGAN image manipulation” by Tov, Alaluf, Nitzan, Patashnik and Cohen-Or

Title:

- Designing an encoder for StyleGAN image manipulation

Session/Category Title:

- Summary and Q&A: Image Editing with GANs 2

Abstract:

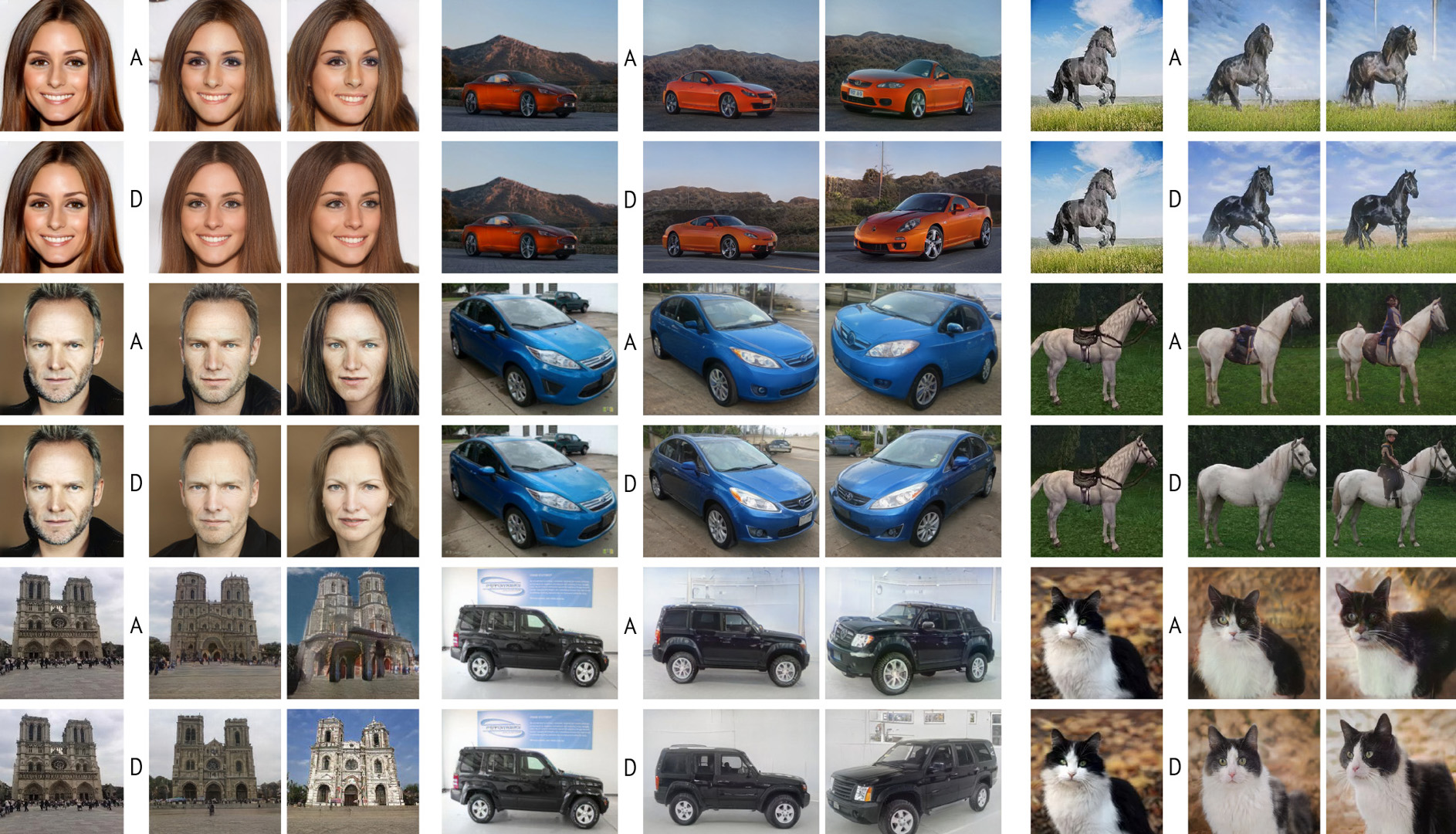

Recently, there has been a surge of diverse methods for performing image editing by employing pre-trained unconditional generators. Applying these methods on real images, however, remains a challenge, as it necessarily requires the inversion of the images into their latent space. To successfully invert a real image, one needs to find a latent code that reconstructs the input image accurately, and more importantly, allows for its meaningful manipulation. In this paper, we carefully study the latent space of StyleGAN, the state-of-the-art unconditional generator. We identify and analyze the existence of a distortion-editability tradeoff and a distortion-perception tradeoff within the StyleGAN latent space. We then suggest two principles for designing encoders in a manner that allows one to control the proximity of the inversions to regions that StyleGAN was originally trained on. We present an encoder based on our two principles that is specifically designed for facilitating editing on real images by balancing these tradeoffs. By evaluating its performance qualitatively and quantitatively on numerous challenging domains, including cars and horses, we show that our inversion method, followed by common editing techniques, achieves superior real-image editing quality, with only a small reconstruction accuracy drop.
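The notion of inversion described in the abstract can be illustrated with a toy sketch. This is not the encoder proposed in the paper, which trains a network to predict the latent code. It is an assumption-laden illustration of the simpler optimization view: given an image `x`, search for a latent code `w` whose generated image is close to `x`, then edit by moving `w` along a latent direction. The linear "generator" `A`, the helper names `generate` and `invert`, and the editing `direction` are all hypothetical stand-ins chosen so the example runs without a trained StyleGAN.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained generator: a fixed linear map
# from a 4-dimensional latent code to a 16-"pixel" image. A real StyleGAN
# generator is a deep nonlinear network; this is for illustration only.
A = rng.normal(size=(16, 4))


def generate(w):
    """Map a latent code to an image (toy linear generator)."""
    return A @ w


def invert(x, steps=2000):
    """Find a latent code by gradient descent on ||generate(w) - x||^2."""
    # Step size chosen from the spectral norm of A so the iteration is stable.
    lr = 1.0 / (2.0 * np.linalg.norm(A, 2) ** 2)
    w = np.zeros(A.shape[1])
    for _ in range(steps):
        residual = generate(w) - x
        w -= lr * 2.0 * (A.T @ residual)  # gradient of the squared error
    return w


# An image that lies exactly in the generator's range.
w_true = rng.normal(size=4)
x = generate(w_true)

# Inversion: recover a latent code and measure the distortion
# (mean squared reconstruction error).
w_hat = invert(x)
distortion = float(np.mean((generate(w_hat) - x) ** 2))

# Editing: move the recovered code along a (hypothetical) latent direction
# and regenerate.
direction = np.array([1.0, 0.0, 0.0, 0.0])
x_edit = generate(w_hat + 0.5 * direction)

print(f"distortion: {distortion:.2e}")
```

In this toy setting the image is exactly reproducible, so distortion goes to zero and no tradeoff appears. With a real generator and real photographs, the tradeoffs the abstract refers to arise because the codes that reconstruct an image most accurately may lie far from the regions the generator was trained on.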

References:

1. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2019. Image2stylegan: How to embed images into the stylegan latent space?. In Proceedings of the IEEE international conference on computer vision. 4432–4441.

2. Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020a. Image2StyleGAN++: How to Edit the Embedded Images?. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8296–8305.

3. Rameen Abdal, Peihao Zhu, Niloy Mitra, and Peter Wonka. 2020b. StyleFlow: Attribute-conditioned Exploration of StyleGAN-Generated Images using Conditional Continuous Normalizing Flows. arXiv:2008.02401 [cs.CV]

4. Yuval Alaluf, Or Patashnik, and Daniel Cohen-Or. 2021. Only a Matter of Style: Age Transformation Using a Style-Based Regression Model. arXiv:2102.02754 [cs.CV]

5. Baylies. 2019. stylegan-encoder. https://github.com/pbaylies/stylegan-encoder. Accessed: April 2020.

6. Yochai Blau and Tomer Michaeli. 2018. The perception-distortion tradeoff. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 6228–6237.

7. Xinlei Chen, Haoqi Fan, Ross Girshick, and Kaiming He. 2020. Improved Baselines with Momentum Contrastive Learning. arXiv:2003.04297 [cs.CV]

8. Edo Collins, Raja Bala, Bob Price, and Sabine Susstrunk. 2020. Editing in Style: Uncovering the Local Semantics of GANs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5771–5780.

9. Antonia Creswell and Anil Anthony Bharath. 2018. Inverting the generator of a generative adversarial network. IEEE transactions on neural networks and learning systems 30, 7 (2018), 1967–1974.

10. Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou. 2019. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4690–4699.

11. Emily Denton, Ben Hutchinson, Margaret Mitchell, and Timnit Gebru. 2019. Detecting bias with generative counterfactual face attribute augmentation. arXiv preprint arXiv:1906.06439 (2019).

12. Rinon Gal, Dana Cohen, Amit Bermano, and Daniel Cohen-Or. 2021. SWAGAN: A Style-based Wavelet-driven Generative Model. arXiv:2102.06108 [cs.CV]

13. Lore Goetschalckx, Alex Andonian, Aude Oliva, and Phillip Isola. 2019. GANalyze: Toward Visual Definitions of Cognitive Image Properties. arXiv:1906.10112 [cs.CV]

14. Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems – Volume 2 (Montreal, Canada) (NIPS’14). MIT Press, Cambridge, MA, USA, 2672–2680.

15. Shanyan Guan, Ying Tai, Bingbing Ni, Feida Zhu, Feiyue Huang, and Xiaokang Yang. 2020. Collaborative Learning for Faster StyleGAN Embedding. arXiv preprint arXiv:2007.01758 (2020).

16. Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. GANSpace: Discovering Interpretable GAN Controls. arXiv preprint arXiv:2004.02546 (2020).

17. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Deep Residual Learning for Image Recognition. arXiv:1512.03385 [cs.CV]

18. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2018. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. arXiv:1706.08500 [cs.LG]

19. Ali Jahanian, Lucy Chai, and Phillip Isola. 2019. On the “steerability” of generative adversarial networks. arXiv preprint arXiv:1907.07171 (2019).

20. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2017. Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196 (2017).

21. Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. 2020a. Training Generative Adversarial Networks with Limited Data. In Proc. NeurIPS.

22. Tero Karras, Samuli Laine, and Timo Aila. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 4401–4410.

23. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020b. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8110–8119.

24. Jonathan Krause, Michael Stark, Jia Deng, and Li Fei-Fei. 2013. 3D Object Representations for Fine-Grained Categorization. In 4th International IEEE Workshop on 3D Representation and Recognition (3dRR-13). Sydney, Australia.

25. Gihyun Kwon and Jong Chul Ye. 2021. Diagonal Attention and Style-based GAN for Content-Style Disentanglement in Image Generation and Translation. arXiv:2103.16146 [cs.CV]

26. Zachary C Lipton and Subarna Tripathi. 2017. Precise recovery of latent vectors from generative adversarial networks. arXiv preprint arXiv:1702.04782 (2017).

27. Yunfan Liu, Qi Li, Zhenan Sun, and Tieniu Tan. 2020. Style Intervention: How to Achieve Spatial Disentanglement with Style-based Generators? arXiv:2011.09699 [cs.CV]

28. Sachit Menon, Alexandru Damian, Shijia Hu, Nikhil Ravi, and Cynthia Rudin. 2020. PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2437–2445.

29. Lars Mescheder, Andreas Geiger, and Sebastian Nowozin. 2018. Which training methods for GANs do actually converge? arXiv preprint arXiv:1801.04406 (2018).

30. Yotam Nitzan, A. Bermano, Yangyan Li, and D. Cohen-Or. 2020. Face identity disentanglement via latent space mapping. ACM Transactions on Graphics (TOG) 39 (2020), 1–14.

31. Guim Perarnau, Joost Van De Weijer, Bogdan Raducanu, and Jose M Álvarez. 2016. Invertible conditional gans for image editing. arXiv preprint arXiv:1611.06355 (2016).

32. Stanislav Pidhorskyi, Donald Adjeroh, and Gianfranco Doretto. 2020. Adversarial Latent Autoencoders. arXiv:2004.04467 [cs.LG]

33. Antoine Plumerault, Hervé Le Borgne, and Céline Hudelot. 2020. Controlling generative models with continuous factors of variations. arXiv preprint arXiv:2001.10238 (2020).

34. Julien Rabin, Gabriel Peyré, Julie Delon, and Marc Bernot. 2012. Wasserstein Barycenter and Its Application to Texture Mixing. In Scale Space and Variational Methods in Computer Vision, Alfred M. Bruckstein, Bart M. ter Haar Romeny, Alexander M. Bronstein, and Michael M. Bronstein (Eds.). Springer Berlin Heidelberg, Berlin, Heidelberg, 435–446.

35. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2020. Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation. arXiv preprint arXiv:2008.00951 (2020).

36. Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, and Xi Chen. 2016. Improved techniques for training gans. arXiv preprint arXiv:1606.03498 (2016).

37. Omry Sendik, Dani Lischinski, and Daniel Cohen-Or. 2020. Unsupervised K-modal Styled Content Generation. ACM Transactions on Graphics (TOG) (2020).

38. Kim Seonghyeon. 2019. stylegan2-pytorch. https://github.com/rosinality/stylegan2-pytorch.

39. Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the latent space of gans for semantic face editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9243–9252.

40. Yujun Shen and Bolei Zhou. 2020. Closed-Form Factorization of Latent Semantics in GANs. arXiv preprint arXiv:2007.06600 (2020).

41. Nurit Spingarn-Eliezer, Ron Banner, and Tomer Michaeli. 2020. GAN Steerability without optimization. arXiv preprint arXiv:2012.05328 (2020).

42. Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020a. StyleRig: Rigging StyleGAN for 3D Control over Portrait Images. arXiv preprint arXiv:2004.00121 (2020).

43. Ayush Tewari, Mohamed Elgharib, Mallikarjun B R., Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020b. PIE: Portrait Image Embedding for Semantic Control. arXiv:2009.09485 [cs.CV]

44. Andrey Voynov and Artem Babenko. 2020. Unsupervised Discovery of Interpretable Directions in the GAN Latent Space. arXiv preprint arXiv:2002.03754 (2020).

45. Binxu Wang and Carlos R Ponce. 2021. A Geometric Analysis of Deep Generative Image Models and Its Applications. In International Conference on Learning Representations. https://openreview.net/forum?id=GH7QRzUDdXG

46. Zongze Wu, Dani Lischinski, and Eli Shechtman. 2020. StyleSpace Analysis: Disentangled Controls for StyleGAN Image Generation. arXiv:2011.12799 [cs.CV]

47. Jonas Wulff and Antonio Torralba. 2020. Improving Inversion and Generation Diversity in StyleGAN using a Gaussianized Latent Space. arXiv:2009.06529 [cs.CV]

48. Weihao Xia, Yulun Zhang, Yujiu Yang, Jing-Hao Xue, Bolei Zhou, and Ming-Hsuan Yang. 2021. GAN Inversion: A Survey. arXiv:2101.05278 [cs.CV]

49. Fisher Yu, Ari Seff, Yinda Zhang, Shuran Song, Thomas Funkhouser, and Jianxiong Xiao. 2016. LSUN: Construction of a Large-scale Image Dataset using Deep Learning with Humans in the Loop. arXiv:1506.03365 [cs.CV]

50. Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. arXiv:1801.03924 [cs.CV]

51. Jiapeng Zhu, Yujun Shen, Deli Zhao, and Bolei Zhou. 2020b. In-domain gan inversion for real image editing. arXiv preprint arXiv:2004.00049 (2020).

52. Jun-Yan Zhu, Philipp Krähenbühl, Eli Shechtman, and Alexei A Efros. 2016. Generative visual manipulation on the natural image manifold. In European conference on computer vision. Springer, 597–613.

53. Peihao Zhu, Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020a. Improved StyleGAN Embedding: Where are the Good Latents? arXiv:2012.09036 [cs.CV]