“DeepFaceEditing: deep face generation and editing with disentangled geometry and appearance control” by Chen, Liu, Lai, Rosin, Li, et al. …

Conference:

Type(s):

Title:

- DeepFaceEditing: deep face generation and editing with disentangled geometry and appearance control

Presenter(s)/Author(s):

Abstract:

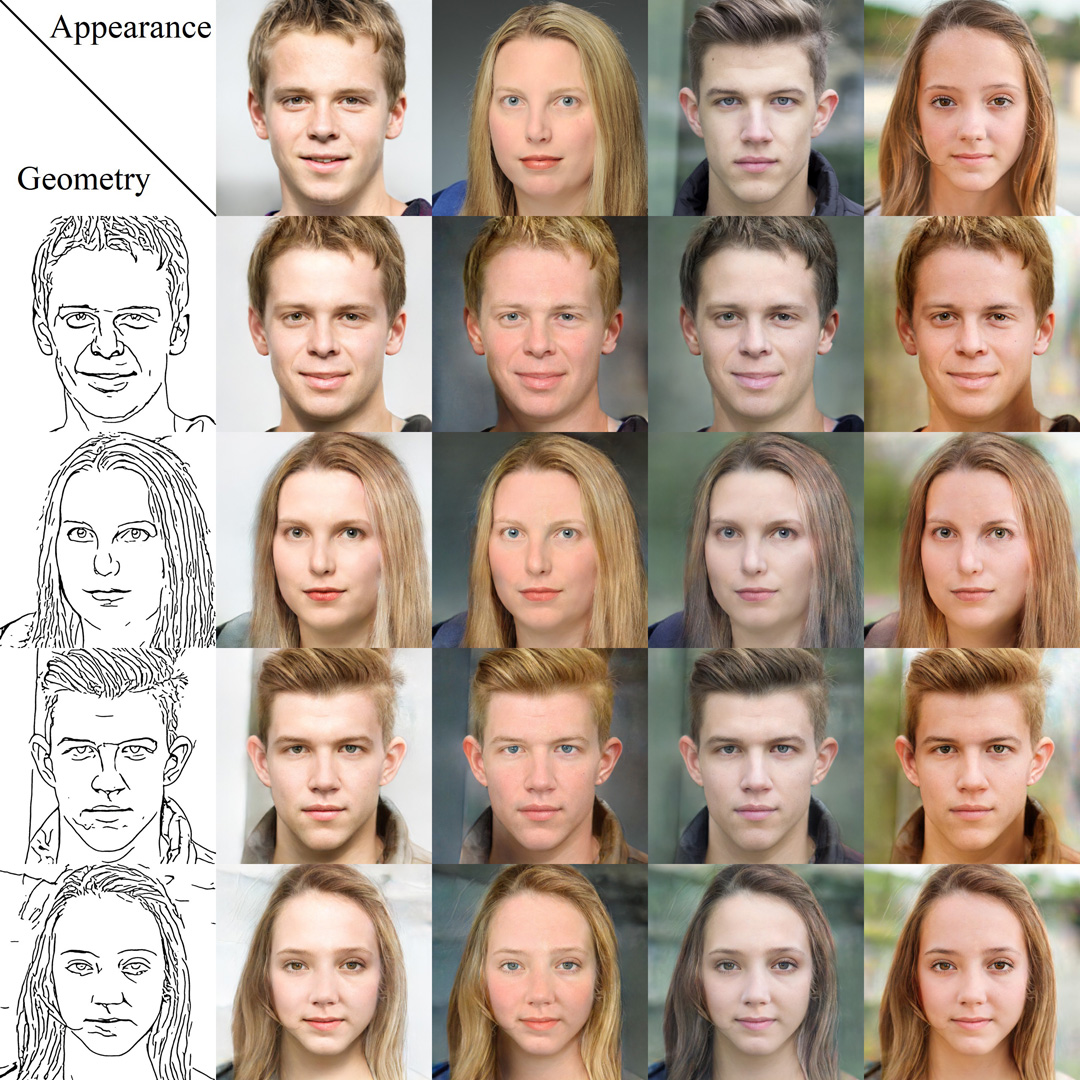

Recent facial image synthesis methods have been mainly based on conditional generative models. Sketch-based conditions can effectively describe the geometry of faces, including the contours of facial components, hair structures, as well as salient edges (e.g., wrinkles) on face surfaces but lack effective control of appearance, which is influenced by color, material, lighting condition, etc. To have more control of generated results, one possible approach is to apply existing disentangling works to disentangle face images into geometry and appearance representations. However, existing disentangling methods are not optimized for human face editing, and cannot achieve fine control of facial details such as wrinkles. To address this issue, we propose DeepFaceEditing, a structured disentanglement framework specifically designed for face images to support face generation and editing with disentangled control of geometry and appearance. We adopt a local-to-global approach to incorporate the face domain knowledge: local component images are decomposed into geometry and appearance representations, which are fused consistently using a global fusion module to improve generation quality. We exploit sketches to assist in extracting a better geometry representation, which also supports intuitive geometry editing via sketching. The resulting method can either extract the geometry and appearance representations from face images, or directly extract the geometry representation from face sketches. Such representations allow users to easily edit and synthesize face images, with decoupled control of their geometry and appearance. Both qualitative and quantitative evaluations show the superior detail and appearance control abilities of our method compared to state-of-the-art methods.

References:

1. Rameen Abdal, Peihao Zhu, Niloy Mitra, and Peter Wonka. 2020. StyleFlow: Attribute-conditioned Exploration of StyleGAN-Generated Images using Conditional Continuous Normalizing Flows. arXiv preprint (2020). arXiv:2008.02401Google Scholar

2. Andrew Brock, Theodore Lim, James M. Ritchie, and Nick Weston. 2016. Neural Photo Editing with Introspective Adversarial Networks. In ICIR.Google Scholar

3. John Canny. 1986. A computational approach to edge detection. PAMI (1986), 679–698.Google Scholar

4. Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. 2020. DeepFace-Drawing: Deep Generation of Face Images from Sketches. ACM Trans. Graph. 39, 4, 72:1–72:16.Google ScholarDigital Library

5. Tao Chen, Ming-Ming Cheng, Ping Tan, Ariel Shamir, and Shi-Min Hu. 2009. Sketch2Photo: Internet Image Montage. ACM Trans. Graph. 28, 5 (2009), 1–10.Google ScholarDigital Library

6. Wengling Chen and James Hays. 2018. SketchyGAN: Towards Diverse and Realistic Sketch to Image Synthesis. In CVPR. 9416–9425.Google Scholar

7. Yu Deng, Jiaolong Yang, Dong Chen, Fang Wen, and Xin Tong. 2020. Disentangled and Controllable Face Image Generation via 3D Imitative-Contrastive Learning. In CVPR. 5153–5162.Google Scholar

8. Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. 2016. Image Style Transfer Using Convolutional Neural Networks. In CVPR. 2414–2423.Google Scholar

9. Abel Gonzalez-Garcia, Joost van de Weijer, and Yoshua Bengio. 2018. Image-to-image translation for cross-domain disentanglement. In NeurIPS. 1294–1305.Google Scholar

10. Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial networks. arXiv preprint arXiv:1406.2661 (2014).Google Scholar

11. Shuyang Gu, Jianmin Bao, Hao Yang, Dong Chen, Fang Wen, and Lu Yuan. 2019. Mask-Guided Portrait Editing With Conditional GANs. In CVPR. 3436–3445.Google Scholar

12. Xiaoguang Han, Chang Gao, and Yizhou Yu. 2017. DeepSketch2Face: A Deep Learning Based Sketching System for 3D Face and Caricature Modeling. ACM Trans. Graph. 36, 4 (2017), 126:1–126:12.Google ScholarDigital Library

13. Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. GANSpace: Discovering Interpretable GAN Controls. In NeurIPS. 9841–9850.Google Scholar

14. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In NeurIPS. 6626–6637.Google Scholar

15. Shi-Min Hu, Dun Liang, Guo-Ye Yang, Guo-Wei Yang, and Wen-Yang Zhou. 2020. Jittor: a novel deep learning framework with meta-operators and unified graph execution. Science China Information Sciences 63, 222103 (2020), 1–21.Google Scholar

16. Rui Huang, Shu Zhang, Tianyu Li, and Ran He. 2017. Beyond Face Rotation: Global and Local Perception GAN for Photorealistic and Identity Preserving Frontal View Synthesis. In ICCV. 2458–2467.Google Scholar

17. Xun Huang and Serge Belongie. 2017. Arbitrary Style Transfer in Real-Time With Adaptive Instance Normalization. In ICCV. 1510–1519.Google Scholar

18. Xun Huang, Ming-Yu Liu, Serge Belongie, and Jan Kautz. 2018. Multimodal Unsuper-vised Image-to-image Translation. In ECCV. 179–196.Google Scholar

19. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-To-Image Translation With Conditional Adversarial Networks. In CVPR. 5967–5976.Google Scholar

20. Youngjoo Jo and Jongyoul Park. 2019. SC-FEGAN: Face Editing Generative Adversarial Network With User’s Sketch and Color. In ICCV. 1745–1753.Google Scholar

21. Justin Johnson, Alexandre Alahi, and Fei-Fei Li. 2016. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In ECCV. 694–711.Google Scholar

22. Tero Karras, Samuli Laine, and Timo Aila. 2019. A Style-Based Generator Architecture for Generative Adversarial Networks. In CVPR. 4401–4410.Google Scholar

23. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and Improving the Image Quality of StyleGAN. In CVPR. 8107–8116.Google Scholar

24. Sunnie S. Y. Kim, Nicholas Kolkin, Jason Salavon, and Gregory Shakhnarovich. 2020. Deformable Style Transfer. In ECCV. 246–261.Google Scholar

25. Diederik P. Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In ICIR.Google Scholar

26. Nicholas Kolkin, Jason Salavon, and Gregory Shakhnarovich. 2019. Style Transfer by Relaxed Optimal Transport and Self-Similarity. In CVPR. 10051–10060.Google Scholar

27. Cheng-Han Lee, Ziwei Liu, Lingyun Wu, and Ping Luo. 2020b. MaskGAN: Towards Diverse and Interactive Facial Image Manipulation. In CVPR. 5548–5557.Google Scholar

28. Hsin-Ying Lee, Hung-Yu Tseng, Qi Mao, Jia-Bin Huang, Yu-Ding Lu, Maneesh Singh, and Ming-Hsuan Yang. 2020c. DRIT++: Diverse image-to-image translation via disentangled representations. IJCV, 2402–2417.Google Scholar

29. Junsoo Lee, Eungyeup Kim, Yunsung Lee, Dongjun Kim, Jaehyuk Chang, and Jaegul Choo. 2020a. Reference-Based Sketch Image Colorization Using Augmented-Self Reference and Dense Semantic Correspondence. In CVPR. 5800–5809.Google Scholar

30. Chuan Li and Michael Wand. 2016. Combining Markov Random Fields and Convolutional Neural Networks for Image Synthesis. In CVPR. 2479–2486.Google Scholar

31. Mu Li, Wangmeng Zuo, and David Zhang. 2016. Convolutional Network for Attribute-driven and Identity-preserving Human Face Generation. arXiv preprint (2016).Google Scholar

32. Yuhang Li, Xuejin Chen, Feng Wu, and Zheng-Jun Zha. 2019. LinesToFacePhoto: Face Photo Generation From Lines With Conditional Self-Attention Generative Adversarial Networks. In ACM Multimedia. 2323–2331.Google Scholar

33. Yuhang Li, Xuejin Chen, Binxin Yang, Zihan Chen, Zhihua Cheng, and Zheng-Jun Zha. 2020. DeepFacePencil: Creating Face Images from Freehand Sketches. In ACM Multimedia. 991–999.Google Scholar

34. Yijun Li, Chen Fang, Jimei Yang, Zhaowen Wang, Xin Lu, and Ming-Hsuan Yang. 2017. Universal Style Transfer via Feature Transforms. In NeurIPS. 386–396.Google Scholar

35. Jing Liao, Yuan Yao, Lu Yuan, Gang Hua, and Sing Bing Kang. 2017. Visual Attribute Transfer through Deep Image Analogy. ACM Trans. Graph. 36, 4 (2017), 120:1–120:15.Google ScholarDigital Library

36. Alexander H. Liu, Yen-Cheng Liu, Yu-Ying Yeh, and Yu-Chiang Frank Wang. 2018. A Unified Feature Disentangler for Multi-Domain Image Translation and Manipulation. In NeurIPS. 2595–2604.Google Scholar

37. Bingchen Liu, Yizhe Zhu, Kunpeng Song, and Ahmed Elgammal. 2020. Self-Supervised Sketch-to-Image Synthesis. arXiv preprint (2020). arXiv:2012.09290Google Scholar

38. Ming-Yu Liu, Xun Huang, Arun Mallya, Tero Karras, Timo Aila, Jaakko Lehtinen, and Jan Kautz. 2019. Few-Shot Unsupervised Image-to-Image Translation. In ICCV. 10550–10559.Google Scholar

39. Roey Mechrez, Itamar Talmi, and Lihi Zelnik-Manor. 2018. The Contextual Loss for Image Transformation with Non-Aligned Data. In ECCV. 800–815.Google Scholar

40. Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. arXiv preprint arXiv:1411.1784 (2014).Google Scholar

41. Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yong-Liang Yang. 2019. HoloGAN: Unsupervised Learning of 3D Representations From Natural Images. In ICCV. 7587–7596.Google Scholar

42. Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu. 2019. Semantic Image Synthesis With Spatially-Adaptive Normalization. In CVPR. 2337–2346.Google Scholar

43. Taesung Park, Jun-Yan Zhu, Oliver Wang, Jingwan Lu, Eli Shechtman, Alexei A. Efros, and Richard Zhang. 2020. Swapping Autoencoder for Deep Image Manipulation. In NeurIPS. 7198–7211.Google Scholar

44. Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In NeurIPS. 8024–8035.Google Scholar

45. Tiziano Portenier, Qiyang Hu, Attila Szabo, Siavash Arjomand Bigdeli, Paolo Favaro, and Matthias Zwicker. 2018. Faceshop: Deep sketch-based face image editing. ACM Trans. Graph. 37, 4 (2018), 99:1–99:13.Google ScholarDigital Library

46. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2020. Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation. arXiv preprint (2020). arXiv:2008.00951Google Scholar

47. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2017. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention. 234–241.Google Scholar

48. Patsorn Sangkloy, Jingwan Lu, Chen Fang, Fisher Yu, and James Hays. 2017. Scribbler: Controlling Deep Image Synthesis With Sketch and Color. In CVPR. 6836–6845.Google Scholar

49. Katja Schwarz, Yiyi Liao, Michael Niemeyer, and Andreas Geiger. 2020. GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis. In NeurIPS. 20154–20166.Google Scholar

50. Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the Latent Space of GANs for Semantic Face Editing. In CVPR. 9240–9249.Google Scholar

51. Zhentao Tan, Menglei Chai, Dongdong Chen, Jing Liao, Qi Chu, Lu Yuan, Sergey Tulyakov, and Nenghai Yu. 2020. MichiGAN: Multi-Input-Conditioned Hair Image Generation for Portrait Editing. ACM Trans. Graph. 39, 4 (2020), 95:1–95:13.Google ScholarDigital Library

52. Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Perez, Michael Zollhofer, and Christian Theobalt. 2020. StyleRig: Rigging StyleGAN for 3D Control Over Portrait Images. In CVPR. 6141–6150.Google Scholar

53. J. Wang, J. Zhang, Z. Lu, and S. Shan. 2019. DFT-Net: Disentanglement of Face Deformation and Texture Synthesis for Expression Editing. In International Conference on Image Processing (ICIP). 3881–3885.Google Scholar

54. Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. 2018. High-Resolution Image Synthesis and Semantic Manipulation With Conditional GANs. In CVPR. 8798–8807.Google Scholar

55. Saining Xie and Zhuowen Tu. 2015. Holistically-Nested Edge Detection. In ICCV. 1395–1403.Google Scholar

56. Shuai Yang, Zhangyang Wang, Jiaying Liu, and Zongming Guo. 2020. Deep Plastic Surgery: Robust and Controllable Image Editing with Human-Drawn Sketches. In ECCV. 601–617.Google Scholar

57. Jaejun Yoo, Youngjung Uh, Sanghyuk Chun, Byeongkyu Kang, and Jung-Woo Ha. 2019. Photorealistic Style Transfer via Wavelet Transforms. In ICCV. 9035–9044.Google Scholar

58. Jiahui Yu, Zhe Lin, Jimei Yang, Xiaohui Shen, Xin Lu, and Thomas S. Huang. 2019b. Free-Form Image Inpainting With Gated Convolution. In ICCV. 4470–4479.Google Scholar

59. Xiaoming Yu, Yuanqi Chen, Shan Liu, Thomas Li, and Ge Li. 2019a. Multi-mapping Image-to-Image Translation via Learning Disentanglement. In NeurIPS. 2990–2999.Google Scholar

60. Jianfu Zhang, Yuanyuan Huang, Yaoyi Li, Weijie Zhao, and Liqing Zhang. 2019. MultiAttribute Transfer via Disentangled Representation. In AAAI. 9195–9202.Google Scholar

61. Pan Zhang, Bo Zhang, Dong Chen, Lu Yuan, and Fang Wen. 2020. Cross-domain Correspondence Learning for Exemplar-based Image Translation. In CVPR. 5142–5152.Google Scholar

62. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In CVPR. 586–595.Google Scholar

63. Jun-Yan Zhu, Richard Zhang, Deepak Pathak, Trevor Darrell, Alexei A. Efros, Oliver Wang, and Eli Shechtman. 2017b. Toward Multimodal Image-to-Image Translation. In NeurIPS. 465–476.Google Scholar

64. Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. 2017a. Unpaired Image-To-Image Translation Using Cycle-Consistent Adversarial Networks. In ICCV. 2242–2251.Google Scholar

65. Peihao Zhu, Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. SEAN: Image Synthesis With Semantic Region-Adaptive Normalization. In CVPR. 5103–5112.Google Scholar