“Control strategies for physically simulated characters performing two-player competitive sports” by Won, Gopinath and Hodgins

Conference:

Type(s):

Title:

- Control strategies for physically simulated characters performing two-player competitive sports

Presenter(s)/Author(s):

Abstract:

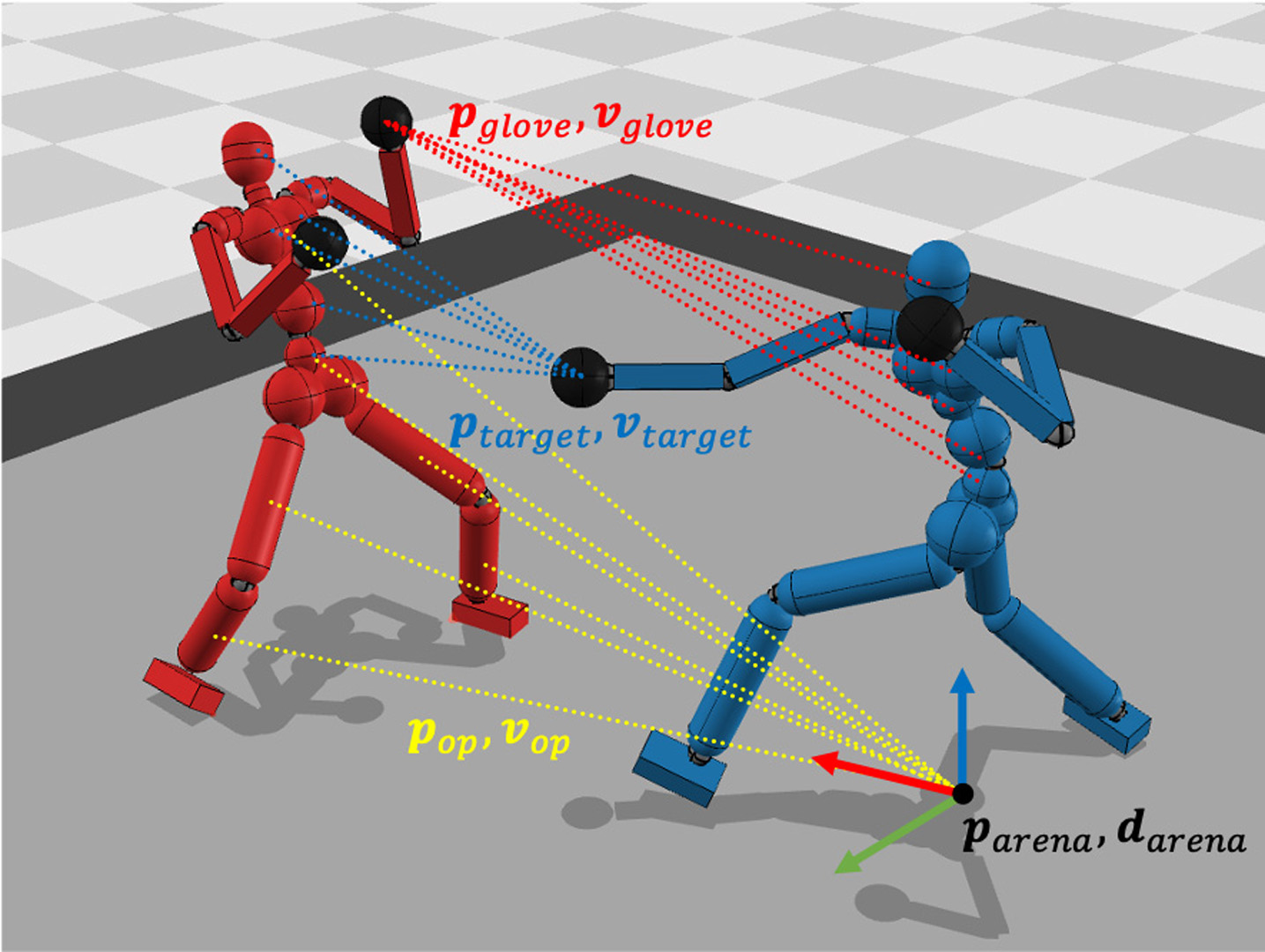

In two-player competitive sports, such as boxing and fencing, athletes often demonstrate efficient and tactical movements during a competition. In this paper, we develop a learning framework that generates control policies for physically simulated athletes who have many degrees of freedom. Our framework uses a two-step approach, learning basic skills and learning bout-level strategies, with deep reinforcement learning, which is inspired by the way that people learn competitive sports. We develop a policy model based on an encoder-decoder structure that incorporates an autoregressive latent variable and a mixture-of-experts decoder. To show the effectiveness of our framework, we implemented two competitive sports, boxing and fencing, and demonstrate control policies learned by our framework that can generate both tactical and natural-looking behaviors. We also evaluate the control policies with comparisons to other learning configurations and with ablation studies.
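The policy structure named in the abstract (an encoder, an autoregressive latent variable, and a mixture-of-experts decoder) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the authors' implementation: the class name `SketchPolicy`, the layer sizes, the random untrained weights, the smoothing form `z_t = alpha * z_{t-1} + (1 - alpha) * z_hat_t`, and the choice to blend expert outputs with softmax gate weights are all hypothetical.

```python
import math
import random


def linear(weights, bias, x):
    """Apply a dense layer: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(rng, n_out, n_in):
    """Random weights stand in for trained parameters (hypothetical)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


class SketchPolicy:
    """Encoder -> autoregressive latent -> mixture-of-experts decoder (illustrative only)."""

    def __init__(self, obs_dim, latent_dim, action_dim, num_experts, alpha=0.9, seed=0):
        rng = random.Random(seed)
        self.alpha = alpha                      # assumed smoothing factor in [0, 1)
        self.z = [0.0] * latent_dim             # latent state carried across time steps
        self.encoder = random_layer(rng, latent_dim, obs_dim)
        self.gate = random_layer(rng, num_experts, latent_dim)
        self.experts = [random_layer(rng, action_dim, latent_dim) for _ in range(num_experts)]

    def step(self, obs):
        # Encoder proposes a new latent from the current observation.
        z_hat = [math.tanh(v) for v in linear(*self.encoder, obs)]
        # Autoregressive update: blend the proposal with the previous latent.
        self.z = [self.alpha * zp + (1.0 - self.alpha) * zh for zp, zh in zip(self.z, z_hat)]
        # Gating network assigns one weight per expert; weights sum to 1.
        gate_weights = softmax(linear(*self.gate, self.z))
        # Each expert maps the latent to an action; outputs are blended by the gate.
        expert_actions = [linear(w, b, self.z) for w, b in self.experts]
        action_dim = len(expert_actions[0])
        action = [sum(g * a[i] for g, a in zip(gate_weights, expert_actions))
                  for i in range(action_dim)]
        return action, gate_weights


policy = SketchPolicy(obs_dim=4, latent_dim=3, action_dim=2, num_experts=5)
action, gates = policy.step([0.1, -0.2, 0.3, 0.4])
```

In this sketch a larger `alpha` makes the latent change more slowly between steps, which is one plausible reason to make the latent autoregressive; the abstract itself does not state the update rule or its motivation.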

References:

1. Yu Bai and Chi Jin. 2020. Provable Self-Play Algorithms for Competitive Reinforcement Learning. In Proceedings of the 37th International Conference on Machine Learning, Vol. 119. PMLR, 551–560. http://proceedings.mlr.press/v119/bai20a.html

2. Bowen Baker, Ingmar Kanitscheider, Todor M. Markov, Yi Wu, Glenn Powell, Bob McGrew, and Igor Mordatch. 2019. Emergent Tool Use From Multi-Agent Autocurricula. CoRR (2019). arXiv:1909.07528

3. Trapit Bansal, Jakub Pachocki, Szymon Sidor, Ilya Sutskever, and Igor Mordatch. 2018. Emergent Complexity via Multi-Agent Competition. arXiv:1710.03748

4. Kevin Bergamin, Simon Clavet, Daniel Holden, and James Richard Forbes. 2019. DReCon: Data-driven Responsive Control of Physics-based Characters. ACM Trans. Graph. 38, 6, Article 206 (2019).

5. Glen Berseth, Cheng Xie, Paul Cernek, and Michiel van de Panne. 2018. Progressive Reinforcement Learning with Distillation for Multi-Skilled Motion Control. CoRR abs/1802.04765 (2018).

6. L. Busoniu, R. Babuska, and B. De Schutter. 2008. A Comprehensive Survey of Multiagent Reinforcement Learning. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews) 38, 2 (2008), 156–172.

7. Nuttapong Chentanez, Matthias Müller, Miles Macklin, Viktor Makoviychuk, and Stefan Jeschke. 2018. Physics-based motion capture imitation with deep reinforcement learning. In Motion, Interaction and Games, MIG 2018. ACM, 1:1–1:10.

8. Caroline Claus and Craig Boutilier. 1998. The Dynamics of Reinforcement Learning in Cooperative Multiagent Systems. In Proceedings of the Fifteenth National/Tenth Conference on Artificial Intelligence/Innovative Applications of Artificial Intelligence (AAAI ’98/IAAI ’98). 746–752.

9. Alexander Clegg, Wenhao Yu, Jie Tan, C. Karen Liu, and Greg Turk. 2018. Learning to Dress: Synthesizing Human Dressing Motion via Deep Reinforcement Learning. ACM Trans. Graph. 37, 6, Article 179 (2018).

10. CMU. 2002. CMU Graphics Lab Motion Capture Database. http://mocap.cs.cmu.edu/.

11. Brandon Haworth, Glen Berseth, Seonghyeon Moon, Petros Faloutsos, and Mubbasir Kapadia. 2020. Deep Integration of Physical Humanoid Control and Crowd Navigation. In Motion, Interaction and Games (MIG ’20). Article 15.

12. Joseph Henry, Hubert P. H. Shum, and Taku Komura. 2014. Interactive Formation Control in Complex Environments. IEEE Transactions on Visualization and Computer Graphics 20, 2 (2014), 211–222.

13. Edmond S. L. Ho, Taku Komura, and Chiew-Lan Tai. 2010. Spatial Relationship Preserving Character Motion Adaptation. ACM Trans. Graph. 29, 4, Article 33 (2010).

14. Daniel Holden, Taku Komura, and Jun Saito. 2017. Phase-functioned Neural Networks for Character Control. ACM Trans. Graph. 36, 4, Article 42 (2017).

15. Junling Hu and Michael P. Wellman. 1998. Multiagent Reinforcement Learning: Theoretical Framework and an Algorithm. In Proceedings of the Fifteenth International Conference on Machine Learning (ICML ’98). 242–250.

16. K. Hyun, M. Kim, Y. Hwang, and J. Lee. 2013. Tiling Motion Patches. IEEE Transactions on Visualization and Computer Graphics 19, 11 (2013), 1923–1934.

17. Jongmin Kim, Yeongho Seol, Taesoo Kwon, and Jehee Lee. 2014. Interactive Manipulation of Large-Scale Crowd Animation. ACM Trans. Graph. 33, 4, Article 83 (2014).

18. Manmyung Kim, Kyunglyul Hyun, Jongmin Kim, and Jehee Lee. 2009. Synchronized Multi-Character Motion Editing. ACM Trans. Graph. 28, 3, Article 79 (2009).

19. Naveen Kodali, Jacob D. Abernethy, James Hays, and Zsolt Kira. 2017. How to Train Your DRAGAN. CoRR abs/1705.07215 (2017).

20. T. Kwon, Y. Cho, S. I. Park, and S. Y. Shin. 2008. Two-Character Motion Analysis and Synthesis. IEEE Transactions on Visualization and Computer Graphics 14, 3 (2008), 707–720.

21. Taesoo Kwon, Kang Hoon Lee, Jehee Lee, and Shigeo Takahashi. 2008. Group Motion Editing. ACM Trans. Graph. 27, 3 (2008), 1–8.

22. Jehee Lee and Kang Hoon Lee. 2004. Precomputing Avatar Behavior from Human Motion Data. In Proceedings of the 2004 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’04). 79–87.

23. Kang Hoon Lee, Myung Geol Choi, and Jehee Lee. 2006. Motion Patches: Building Blocks for Virtual Environments Annotated with Motion Data. ACM Trans. Graph. 25, 3 (2006), 898–906.

24. Seunghwan Lee, Moonseok Park, Kyoungmin Lee, and Jehee Lee. 2019. Scalable Muscle-actuated Human Simulation and Control. ACM Trans. Graph. 38, 4, Article 73 (2019).

25. Eric Liang, Richard Liaw, Philipp Moritz, Robert Nishihara, Roy Fox, Ken Goldberg, Joseph E. Gonzalez, Michael I. Jordan, and Ion Stoica. 2018. RLlib: Abstractions for Distributed Reinforcement Learning. arXiv:1712.09381

26. Michael L. Littman. 1994. Markov Games as a Framework for Multi-Agent Reinforcement Learning. In Proceedings of the Eleventh International Conference on Machine Learning (ICML ’94). 157–163.

27. C. Karen Liu, Aaron Hertzmann, and Zoran Popović. 2006. Composition of Complex Optimal Multi-Character Motions. In Proceedings of the 2006 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’06). 215–222.

28. Libin Liu and Jessica Hodgins. 2017. Learning to Schedule Control Fragments for Physics-Based Characters Using Deep Q-Learning. ACM Trans. Graph. 36, 3, Article 42a (2017).

29. Libin Liu and Jessica Hodgins. 2018. Learning Basketball Dribbling Skills Using Trajectory Optimization and Deep Reinforcement Learning. ACM Trans. Graph. 37, 4, Article 142 (2018).

30. Ryan Lowe, Yi Wu, Aviv Tamar, Jean Harb, Pieter Abbeel, and Igor Mordatch. 2017. Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS ’17). 6382–6393.

31. Josh Merel, Yuval Tassa, Dhruva TB, Sriram Srinivasan, Jay Lemmon, Ziyu Wang, Greg Wayne, and Nicolas Heess. 2017. Learning human behaviors from motion capture by adversarial imitation. CoRR abs/1707.02201 (2017).

32. T. T. Nguyen, N. D. Nguyen, and S. Nahavandi. 2020. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Transactions on Cybernetics 50, 9 (2020), 3826–3839.

33. Afshin OroojlooyJadid and Davood Hajinezhad. 2020. A Review of Cooperative Multi-Agent Deep Reinforcement Learning. arXiv:1908.03963

34. Soohwan Park, Hoseok Ryu, Seyoung Lee, Sunmin Lee, and Jehee Lee. 2019. Learning Predict-and-simulate Policies from Unorganized Human Motion Data. ACM Trans. Graph. 38, 6, Article 205 (2019).

35. Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32. 8024–8035.

36. Xue Bin Peng, Pieter Abbeel, Sergey Levine, and Michiel van de Panne. 2018. DeepMimic: Example-guided Deep Reinforcement Learning of Physics-based Character Skills. ACM Trans. Graph. 37, 4, Article 143 (2018).

37. Xue Bin Peng, Glen Berseth, Kangkang Yin, and Michiel van de Panne. 2017. DeepLoco: Dynamic Locomotion Skills Using Hierarchical Deep Reinforcement Learning. ACM Trans. Graph. 36, 4, Article 41 (2017).

38. Yevgeny Seldin and Aleksandrs Slivkins. 2014. One Practical Algorithm for Both Stochastic and Adversarial Bandits. In Proceedings of the 31st International Conference on Machine Learning (Proceedings of Machine Learning Research, Vol. 32). 1287–1295. http://proceedings.mlr.press/v32/seldinb14.html

39. Hubert P. H. Shum, Taku Komura, Masashi Shiraishi, and Shuntaro Yamazaki. 2008b. Interaction Patches for Multi-Character Animation. ACM Trans. Graph. 27, 5 (2008).

40. Hubert P. H. Shum, Taku Komura, and Shuntaro Yamazaki. 2007. Simulating Competitive Interactions Using Singly Captured Motions. In Proceedings of the 2007 ACM Symposium on Virtual Reality Software and Technology (VRST ’07). 65–72.

41. Hubert P. H. Shum, Taku Komura, and Shuntaro Yamazaki. 2008a. Simulating Interactions of Avatars in High Dimensional State Space. In Proceedings of the 2008 Symposium on Interactive 3D Graphics and Games (I3D ’08). 131–138.

42. H. P. H. Shum, T. Komura, and S. Yamazaki. 2012. Simulating Multiple Character Interactions with Collaborative and Adversarial Goals. IEEE Transactions on Visualization and Computer Graphics 18, 5 (2012), 741–752.

43. Sebastian Starke, He Zhang, Taku Komura, and Jun Saito. 2019. Neural state machine for character-scene interactions. ACM Trans. Graph. 38, 6 (2019), 209:1–209:14.

44. Jie Tan, C. Karen Liu, and Greg Turk. 2011. Stable Proportional-Derivative Controllers. IEEE Computer Graphics and Applications 31, 4 (2011), 34–44.

45. Kevin Wampler, Erik Andersen, Evan Herbst, Yongjoon Lee, and Zoran Popović. 2010. Character Animation in Two-Player Adversarial Games. ACM Trans. Graph. 29, 3, Article 26 (2010).

46. Ziyu Wang, Josh Merel, Scott Reed, Greg Wayne, Nando de Freitas, and Nicolas Heess. 2017. Robust Imitation of Diverse Behaviors. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS ’17). http://dl.acm.org/citation.cfm?id=3295222.3295284

47. Erik Wijmans, Abhishek Kadian, Ari Morcos, Stefan Lee, Irfan Essa, Devi Parikh, Manolis Savva, and Dhruv Batra. 2020. DD-PPO: Learning Near-Perfect PointGoal Navigators from 2.5 Billion Frames. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020.

48. Jungdam Won, Deepak Gopinath, and Jessica Hodgins. 2020. A Scalable Approach to Control Diverse Behaviors for Physically Simulated Characters. ACM Trans. Graph. 39, 4, Article 33 (2020).

49. Jungdam Won and Jehee Lee. 2019. Learning Body Shape Variation in Physics-based Characters. ACM Trans. Graph. 38, 6, Article 207 (2019).

50. Jungdam Won, Kyungho Lee, Carol O’Sullivan, Jessica K. Hodgins, and Jehee Lee. 2014. Generating and Ranking Diverse Multi-Character Interactions. ACM Trans. Graph. 33, 6, Article 219 (2014).

51. Zhaoming Xie, Hung Yu Ling, Nam Hee Kim, and Michiel van de Panne. 2020. ALL-STEPS: Curriculum-driven Learning of Stepping Stone Skills. In Proc. ACM SIGGRAPH / Eurographics Symposium on Computer Animation.

52. Barbara Yersin, Jonathan Maïm, Julien Pettré, and Daniel Thalmann. 2009. Crowd Patches: Populating Large-Scale Virtual Environments for Real-Time Applications. In Proceedings of the 2009 Symposium on Interactive 3D Graphics and Games (I3D ’09). 207–214.

53. Wenhao Yu, Greg Turk, and C. Karen Liu. 2018. Learning Symmetric and Low-energy Locomotion. ACM Trans. Graph. 37, 4, Article 144 (2018).

54. Victor Brian Zordan and Jessica K. Hodgins. 2002. Motion Capture-Driven Simulations That Hit and React. In Proceedings of the 2002 ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA ’02). 89–96.

55. Victor Brian Zordan, Anna Majkowska, Bill Chiu, and Matthew Fast. 2005. Dynamic Response for Motion Capture Animation. ACM Trans. Graph. 24, 3 (2005), 697–701.