“Audio Matters Too! Enhancing Markerless Motion Capture With Audio Signals for String Performance Capture”

Conference:

Type(s):

Presenter(s)/Author(s):

Abstract:

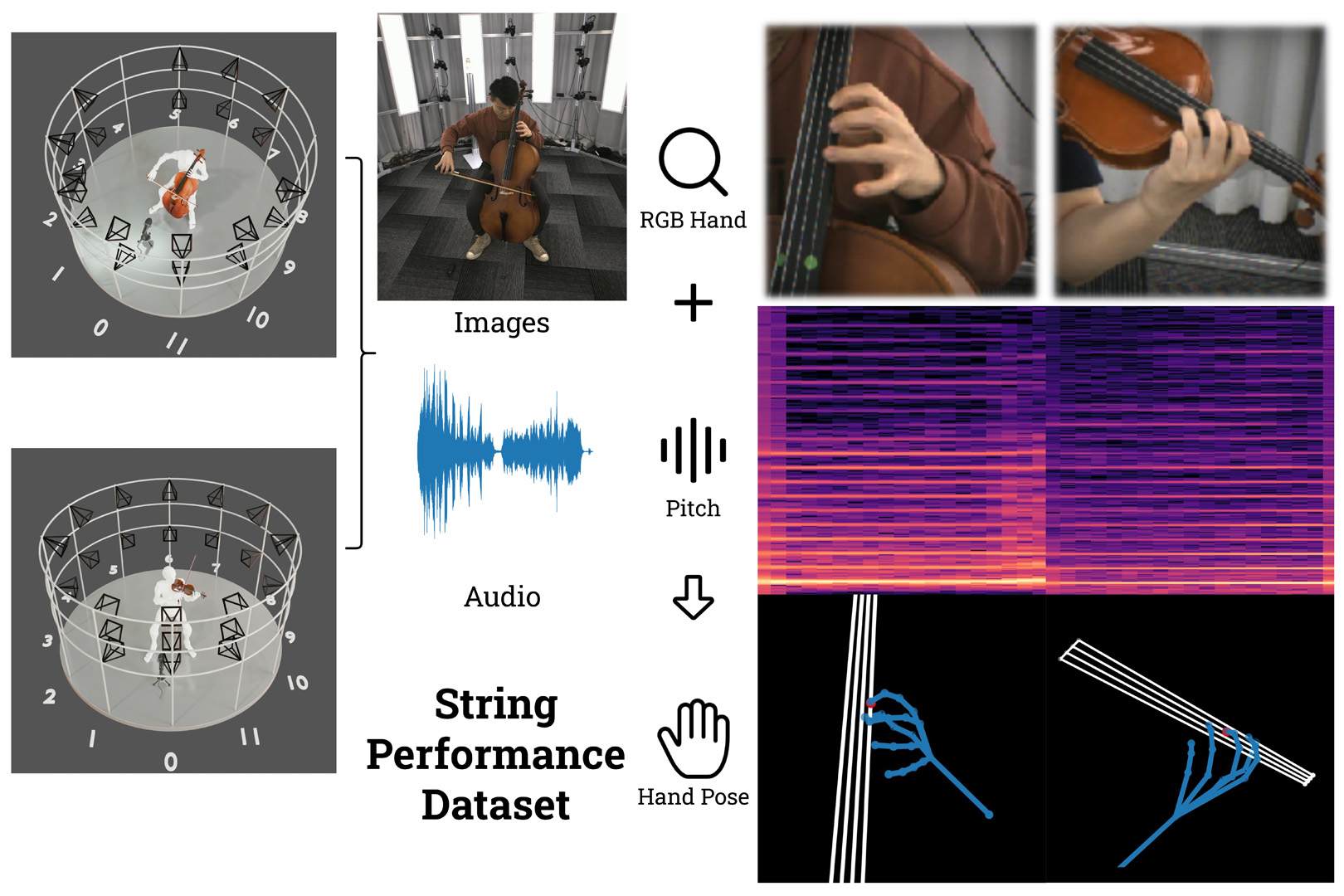

Motion capture of musical instrument performance is challenging even with markers. Our method extracts playing cues inherent in the audio and uses them to enhance markerless video motion capture, recovering subtle finger-string contacts and intricate playing movements. We further contribute the first large-scale String Performance Dataset (SPD) with high-quality motion and contact annotations.
