“Adaptive O-CNN: a patch-based deep representation of 3D shapes”

Title:

- Adaptive O-CNN: a patch-based deep representation of 3D shapes

Session/Category Title:

- Learning Geometry

Abstract:

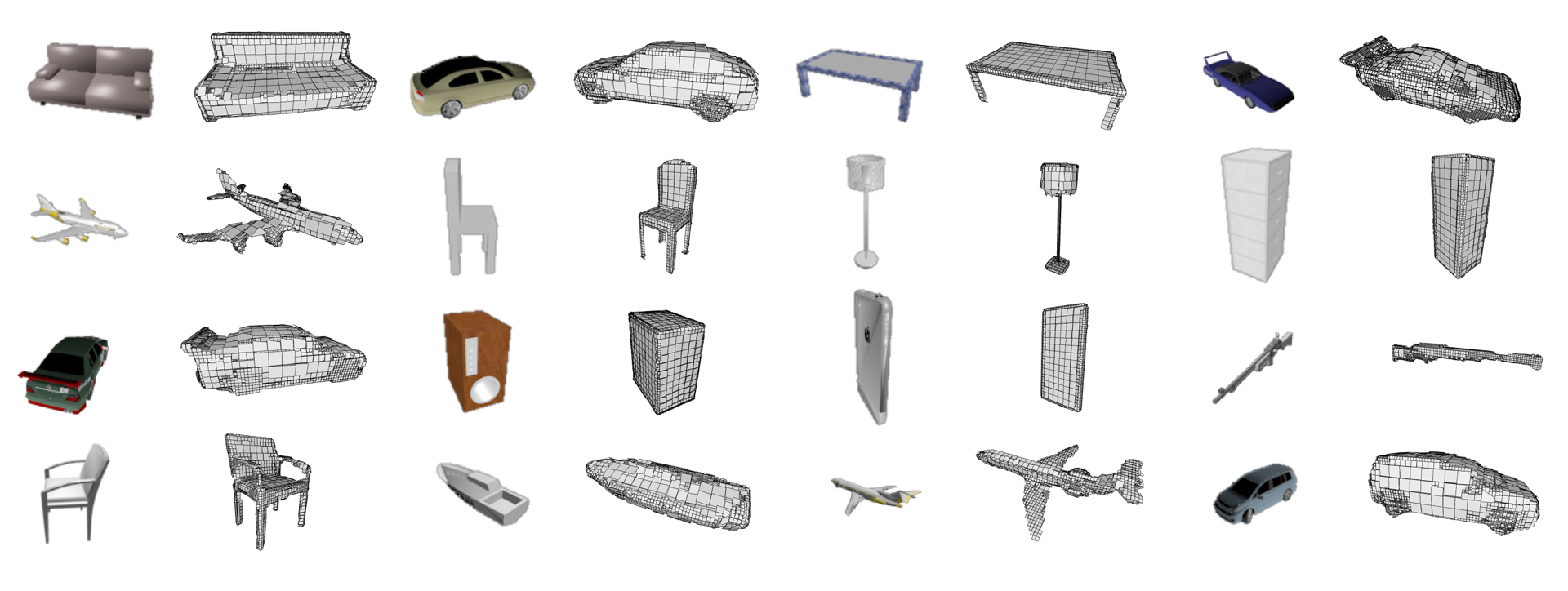

We present an Adaptive Octree-based Convolutional Neural Network (Adaptive O-CNN) for efficient 3D shape encoding and decoding. Unlike volumetric or octree-based CNN methods, which represent a 3D shape with voxels at a single resolution, our method represents a 3D shape adaptively with octants at different levels and models the shape within each octant with a planar patch. Based on this adaptive patch-based representation, we propose an Adaptive O-CNN encoder and decoder for encoding and decoding 3D shapes. The Adaptive O-CNN encoder takes the planar patch normal and displacement as input and performs 3D convolutions only at the octants at each level, while the Adaptive O-CNN decoder infers the occupancy and subdivision status of the octants at each level and estimates the best plane normal and displacement for each leaf octant. As a general framework for 3D shape analysis and generation, Adaptive O-CNN not only reduces memory and computational cost but also offers better shape generation capability than existing 3D-CNN approaches. We validate Adaptive O-CNN in terms of efficiency and effectiveness on several shape analysis and generation tasks, including shape classification, 3D autoencoding, shape prediction from a single image, and shape completion for noisy and incomplete point clouds.
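The abstract describes each octant's surface as a planar patch given by a normal and a displacement, with octants subdivided only where a single patch is not enough. A minimal sketch of such an adaptive patch octree follows. It assumes a standard least-squares (PCA) plane fit per octant and a hypothetical stopping rule: an octant becomes a leaf once the maximum point-to-plane distance is at most `tol`, or once `max_depth` is reached. The function names, the tolerance, and the world-space offset `d` in n·x + d = 0 are illustrative assumptions; the abstract does not specify the authors' exact fitting or subdivision criterion.

```python
import math


def _matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def _transpose(x):
    return [list(row) for row in zip(*x)]


def symmetric_eigen3(a, sweeps=50):
    """Cyclic Jacobi eigen-decomposition of a symmetric 3x3 matrix.
    Returns (eigenvalues, V); column k of V is the k-th eigenvector."""
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(3) for j in range(3) if i != j) < 1e-20:
            break  # off-diagonal mass is negligible: converged
        for p in range(2):
            for q in range(p + 1, 3):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle that zeroes a[p][q] (Numerical Recipes convention).
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                rot = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
                rot[p][p] = rot[q][q] = c
                rot[p][q], rot[q][p] = s, -s
                a = _matmul(_transpose(rot), _matmul(a, rot))  # A <- R^T A R
                v = _matmul(v, rot)  # accumulate eigenvectors
    return [a[i][i] for i in range(3)], v


def fit_plane(points):
    """Least-squares plane n.x + d = 0 through the centroid (PCA fit, an assumption)."""
    m = len(points)
    c = [sum(p[i] for p in points) / m for i in range(3)]
    cov = [[sum((p[i] - c[i]) * (p[j] - c[j]) for p in points) for j in range(3)] for i in range(3)]
    evals, vecs = symmetric_eigen3(cov)
    k = min(range(3), key=lambda i: evals[i])  # direction of least variance = normal
    n = [vecs[r][k] for r in range(3)]
    norm = math.sqrt(sum(x * x for x in n))
    n = [x / norm for x in n]
    d = -sum(n[i] * c[i] for i in range(3))
    return n, d


def build_adaptive_octree(points, center, half, depth, max_depth, tol, leaves):
    """Hypothetical builder: subdivide an octant until one planar patch fits its points."""
    if not points:
        return  # empty octant: no patch is stored
    n, d = fit_plane(points)
    err = max(abs(sum(n[i] * p[i] for i in range(3)) + d) for p in points)
    if err <= tol or depth == max_depth:
        leaves.append((depth, tuple(center), half, n, d))  # leaf patch: normal + offset
        return
    children = [[] for _ in range(8)]
    for p in points:
        # Bit 0/1/2 selects the upper half along x/y/z, so each point lands in one child.
        k = (p[0] >= center[0]) | ((p[1] >= center[1]) << 1) | ((p[2] >= center[2]) << 2)
        children[k].append(p)
    h = half / 2.0
    for k, pts in enumerate(children):
        child_center = [center[i] + (h if (k >> i) & 1 else -h) for i in range(3)]
        build_adaptive_octree(pts, child_center, h, depth + 1, max_depth, tol, leaves)


if __name__ == "__main__":
    # A flat sheet at z = 0.3 inside the cube [-1, 1]^3 is captured by a single root patch.
    flat = [(x / 4, y / 4, 0.3) for x in range(-3, 4) for y in range(-3, 4)]
    leaves = []
    build_adaptive_octree(flat, (0.0, 0.0, 0.0), 1.0, 0, 4, 0.01, leaves)
    print(len(leaves), leaves[0][0])  # prints "1 0"

    # A curved bowl needs finer octants where one plane no longer fits.
    bowl = [(x / 10, y / 10, 0.5 * ((x / 10) ** 2 + (y / 10) ** 2))
            for x in range(-9, 10) for y in range(-9, 10)]
    leaves = []
    build_adaptive_octree(bowl, (0.0, 0.0, 0.0), 1.0, 0, 4, 0.01, leaves)
    print(len(leaves), sorted({leaf[0] for leaf in leaves}))
```

The flat sheet stays a single coarse leaf while the bowl is split into deeper octants, which illustrates why an adaptive patch representation can use fewer octants than a uniform-resolution grid on mostly flat shapes.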
