“A reduced-precision network for image reconstruction” by Thomas, Vaidyanathan, Liktor and Forbes

Conference:

Type(s):

Title:

- A reduced-precision network for image reconstruction

Session/Category Title:

- Learning New Viewpoints

Presenter(s)/Author(s):

Abstract:

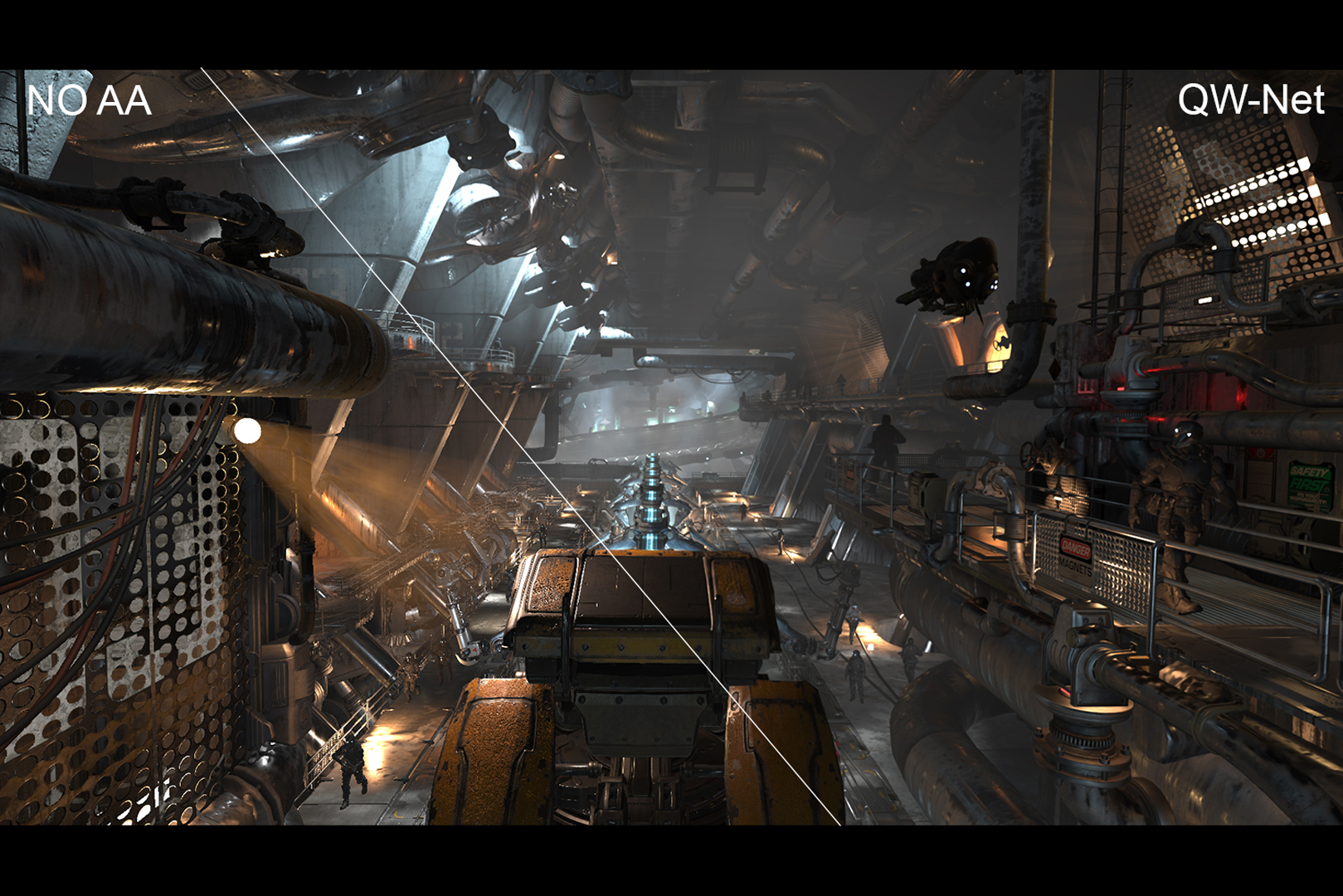

Neural networks are often quantized to use reduced-precision arithmetic, as it greatly improves their storage and computational costs. This approach is commonly used in image classification and natural language processing applications. However, using a quantized network for the reconstruction of HDR images can lead to a significant loss in image quality. In this paper, we introduce QW-Net, a neural network for image reconstruction, in which close to 95% of the computations can be implemented with 4-bit integers. This is achieved using a combination of two U-shaped networks that are specialized for different tasks, a feature extraction network based on the U-Net architecture, coupled to a filtering network that reconstructs the output image. The feature extraction network has more computational complexity but is more resilient to quantization errors. The filtering network, on the other hand, has significantly fewer computations but requires higher precision. Our network recurrently warps and accumulates previous frames using motion vectors, producing temporally stable results with significantly better quality than TAA, a widely used technique in current games.

References:

1. Kurt Akeley. 1993. RealityEngine Graphics. In Proc. ACM SIGGRAPH. 109–116.Google Scholar

2. Steve Bako, Thijs Vogels, Brian Mcwilliams, Mark Meyer, Jan Novák, Alex Harvill, Pradeep Sen, Tony Derose, and Fabrice Rousselle. 2017. Kernel-Predicting Convolutional Networks for Denoising Monte Carlo Renderings. ACM Transactions on Graphics 36, 4, Article 97 (July 2017), 14 pages.Google ScholarDigital Library

3. Peter G. J. Barten. 2003. Formula for the contrast sensitivity of the human eye. In Image Quality and System Performance, Yoichi Miyake and D. Rene Rasmussen (Eds.), Vol. 5294. International Society for Optics and Photonics, SPIE, 231–238.Google Scholar

4. Yoshua Bengio. 2013. Estimating or Propagating Gradients Through Stochastic Neurons. arXiv preprint arXiv:1305.2982 (2013).Google Scholar

5. H. C. Burger, C. J. Schuler, and S. Harmeling. 2012. Image denoising: Can plain neural networks compete with BM3D?. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2392–2399.Google Scholar

6. Jose Caballero, Christian Ledig, Andrew Aitken, Alejandro Acosta, Johannes Totz, Zehan Wang, and Wenzhe Shi. 2017. Real-Time Video Super-Resolution with SpatioTemporal Networks and Motion Compensation. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 4778–4787.Google Scholar

7. Chakravarty R. Alla Chaitanya, Anton S. Kaplanyan, Christoph Schied, Marco Salvi, Aaron Lefohn, Derek Nowrouzezahrai, and Timo Aila. 2017. Interactive Reconstruction of Monte Carlo Image Sequences Using a Recurrent Denoising Autoencoder. ACM Transactions on Graphics 36, 4, Article 98 (July 2017), 12 pages.Google Scholar

8. Jungwook Choi, Zhuo Wang, Swagath Venkataramani, Pierce I-Jen Chuang, Vijayalakshmi Srinivasan, and Kailash Gopalakrishnan. 2018. PACT: Parameterized Clipping Activation for Quantized Neural Networks. arXiv preprint arXiv:1805.06085 (2018).Google Scholar

9. Ö. Çiçek, A. Abdulkadir, S.S. Lienkamp, T. Brox, and O. Ronneberger. 2016. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), Vol. 9901. Springer, 424–432.Google Scholar

10. Djork-Arné Clevert, Thomas Unterthiner, and Sepp Hochreiter. 2015. Fast and accurate deep network learning by exponential linear units (ELUs). arXiv preprint arXiv:1511.07289 (2015).Google Scholar

11. Robert L. Cook, Thomas Porter, and Loren Carpenter. 1984. Distributed Ray Tracing. In Computer Graphics (Proc. ACM SIGGRAPH), Vol. 18. 137–145.Google ScholarDigital Library

12. Matthieu Courbariaux, Yoshua Bengio, and Jean-Pierre David. 2015. BinaryConnect: Training deep neural networks with binary weights during propagations. arXiv preprint arXiv:1511.00363 (2015).Google Scholar

13. Matthieu Courbariaux, Itay Hubara, Daniel Soudry, Ran El-Yaniv, and Yoshua Bengio. 2016. Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or -1. arXiv preprint arXiv:1602.02830 (2016).Google Scholar

14. Steven K Esser, Jeffrey L McKinstry, Deepika Bablani, Rathinakumar Appuswamy, and Dharmendra S Modha. 2019. Learned step size quantization. arXiv preprint arXiv:1902.08153 (2019).Google Scholar

15. Epic Games. 2019. Unreal Engine 4.24 on GitHub. https://github.com/EpicGames/UnrealEngine/tree/4.24Google Scholar

16. Michaël Gharbi, Tzu-Mao Li, Miika Aittala, Jaakko Lehtinen, and Frédo Durand. 2019. Sample-Based Monte Carlo Denoising Using a Kernel-Splatting Network. ACM Transactions on Graphics 38, 4, Article 125 (July 2019), 12 pages.Google ScholarDigital Library

17. J. Hasselgren, J. Munkberg, M. Salvi, A. Patney, and A. Lefohn. 2020. Neural Temporal Adaptive Sampling and Denoising. Computer Graphics Forum 39, 2 (2020), 147–155.Google ScholarCross Ref

18. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2015. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. Proc. IEEE International Conference on Computer Vision (ICCV) (Dec 2015), 1026–1034.Google ScholarDigital Library

19. Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep Residual Learning for Image Recognition. Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (Jun 2016), 770–778.Google ScholarCross Ref

20. Sergey Ioffe and Christian Szegedy. 2015. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167 (2015).Google ScholarDigital Library

21. Benoit Jacob, Skirmantas Kligys, Bo Chen, Menglong Zhu, Matthew Tang, Andrew Howard, Hartwig Adam, and Dmitry Kalenichenko. 2018. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2704–2713.Google ScholarCross Ref

22. Sambhav R Jain, Albert Gural, Michael Wu, and Chris H Dick. 2019. Trained quantization thresholds for accurate and efficient fixed-point inference of deep neural networks. arXiv preprint arXiv:1903.08066 (2019).Google Scholar

23. Jorge Jimenez, Jose I. Echevarria, Tiago Sousa, and Diego Gutierrez. 2012. SMAA: Enhanced Morphological Antialiasing. Computer Graphics Forum 31, 2 (2012), 355–364.Google ScholarDigital Library

24. Florian Kainz, Rod Bogart, and Drew Hess. 2004. The OpenEXR image file format. In GPU Gems, Randima Fernando (Ed.). Addison-Wesley Reading, Chapter 26.Google Scholar

25. Nima Khademi Kalantari, Steve Bako, and Pradeep Sen. 2015. A Machine Learning Approach for Filtering Monte Carlo Noise. ACM Transactions on Graphics, 34, 4, Article 122 (2015), 12 pages.Google ScholarDigital Library

26. A. S. Kaplanyan, S. Hill, A. Patney, and A. Lefohn. 2016. Filtering Distributions of Normals for Shading Antialiasing. In Proc. High-Performance Graphics (HPG). 151–162.Google Scholar

27. Anton S. Kaplanyan, Anton Sochenov, Thomas Leimkühler, Mikhail Okunev, Todd Goodall, and Gizem Rufo. 2019. DeepFovea: Neural Reconstruction for Foveated Rendering and Video Compression Using Learned Statistics of Natural Videos. ACM Transactions on Graphics 38, 6, Article 212 (Nov. 2019), 13 pages.Google ScholarDigital Library

28. Brian Karis. 2014. High Quality Temporal Supersampling. SIGGRAPH 2014 Advances in Real-Time Rendering in Games course. http://advances.realtimerendering.com/s2014/Google Scholar

29. Raghuraman Krishnamoorthi. 2018. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv preprint arXiv:1806.08342 (2018).Google Scholar

30. Alex Krizhevsky. 2010. Convolutional deep belief networks on CIFAR-10. https://www.cs.toronto.edu/~kriz/conv-cifar10-aug2010.pdfGoogle Scholar

31. Darryl D. Lin, Sachin S. Talathi, and V. Sreekanth Annapureddy. 2016. Fixed Point Quantization of Deep Convolutional Networks. In Proc. International Conference on Machine Learning (ICML). 2849–2858.Google Scholar

32. Edward Liu. 2020. DLSS 2.0 – Image Reconstruction for Real-time Rendering with Deep Learning. In GPU Technology Conference (GTC). https://developer.nvidia.com/gtc/2020/video/s22698-vidGoogle Scholar

33. Liyuan Liu, Haoming Jiang, Pengcheng He, Weizhu Chen, Xiaodong Liu, Jianfeng Gao, and Jiawei Han. 2019. On the Variance of the Adaptive Learning Rate and Beyond. arXiv preprint arXiv:1908.03265 (2019).Google Scholar

34. Andrew L. Maas, Awni Y. Hannun, and Andrew Y. Ng. 2013. Rectifier nonlinearities improve neural network acoustic models. In Proc. ICML Workshop on Deep Learning for Audio, Speech and Language Processing.Google Scholar

35. Szymon Migacz. 2017. 8-bit Inference with TensorRT. In GPU Technology Conference (GTC). https://on-demand.gputechconf.com/gtc/2017/presentation/s7310-8-bit-inference-with-tensorrt.pdfGoogle Scholar

36. Ben Mildenhall, Jonathan T Barron, Jiawen Chen, Dillon Sharlet, Ren Ng, and Robert Carroll. 2018. Burst Denoising with Kernel Prediction Networks. In Proc. IEEE Computer Vision and Pattern Recognition (CVPR). 2502–2510.Google ScholarCross Ref

37. Don P. Mitchell and Arun N. Netravali. 1988. Reconstruction Filters in Computer Graphics. SIGGRAPH Computer Graphics 22, 4 (1988), 221–228.Google ScholarDigital Library

38. Prateeth Nayak, Degan Zhang, and Sek Chai. 2019. Bit Efficient Quantization for Deep Neural Networks. arXiv preprint arXiv:1910.04877 (2019).Google Scholar

39. Diego Nehab, Pedro V. Sander, Jason Lawrence, Natalya Tatarchuk, and John R. Isidoro. 2007. Accelerating Real-time Shading with Reverse Reprojection Caching. In Graphics Hardware. 25–35.Google Scholar

40. NVIDIA. 2018. NVIDIA Turing GPU Architecture Whitepaper. https://www.nvidia.com/content/dam/en-zz/Solutions/design-visualization/technologies/turing-architecture/NVIDIA-Turing-Architecture-Whitepaper.pdfGoogle Scholar

41. Anjul Patney and Aaron Lefohn. 2018. Detecting Aliasing Artifacts in Image Sequences Using Deep Neural Networks. In Proc. High-Performance Graphics (HPG). Article 4, 4 pages.Google ScholarDigital Library

42. John G. Proakis and Dimitris K Manolakis. 2006. Digital Signal Processing (4th Edition). Prentice Hall.Google Scholar

43. Mohammad Rastegari, Vicente Ordonez, Joseph Redmon, and Ali Farhadi. 2016. XNOR-NET: ImageNet classification using binary convolutional neural networks. In Proc. European Conference on Computer Vision (ECCV). 525–542.Google ScholarCross Ref

44. Alexander Reshetov. 2009. Morphological Antialiasing. In Proc. High-Performance Graphics (HPG). 109–116.Google Scholar

45. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv preprint arXiv 1505.04597 (2015).Google Scholar

46. Mehdi SM Sajjadi, Raviteja Vemulapalli, and Matthew Brown. 2018. Frame-recurrent video super-resolution. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 6626–6634.Google ScholarCross Ref

47. Marco Salvi. 2016. An excursion in temporal supersampling. In Game Developer’s Conference (GDC). https://developer.download.nvidia.com/gameworks/events/GDC2016/msalvi_temporal_supersampling.pdfGoogle Scholar

48. Marco Salvi. 2017. Deep Learning: The Future of Real-Time Rendering?. In ACM SIGGRAPH Courses: Open Problems in Real-Time Rendering. https://openproblems.realtimerendering.com/s2017/index.htmlGoogle Scholar

49. SMPTE. 2014. ST 2084:2014 – SMPTE Standard – High Dynamic Range Electro-Optical Transfer Function of Mastering Reference Displays. ST 2084:2014 (2014), 1–14.Google Scholar

50. Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. 2015. Going deeper with convolutions. In Proc. IEEE Computer Vision and Pattern Recognition (CVPR). 1–9.Google ScholarCross Ref

51. Thijs Vogels, Fabrice Rousselle, Brian Mcwilliams, Gerhard Röthlin, Alex Harvill, David Adler, Mark Meyer, and Jan Novák. 2018. Denoising with Kernel Prediction and Asymmetric Loss Functions. ACM Transactions on Graphics 37, 4, Article 124 (July 2018), 15 pages.Google ScholarDigital Library

52. Less Wright. 2019. New Deep Learning Optimizer, Ranger: Synergistic combination of RAdam + LookAhead for the best of both. https://medium.com/@lessw/new-deep-learning-optimizer-ranger-synergistic-combination-of-radam-lookahead-for-the-best-of-2dc83f79a48dGoogle Scholar

53. Lei Xiao, Salah Nouri, Matt Chapman, Alexander Fix, Douglas Lanman, and Anton Kaplanyan. 2020. Neural supersampling for real-time rendering. ACM Transactions on Graphics 39, 4, Article 142 (2020), 12 pages.Google ScholarDigital Library

54. Lei Yang, Shiqiu Liu, and Marco Salvi. 2020. A Survey of Temporal Antialiasing Techniques. Computer Graphics Forum 39 (2020), 607–621.Google ScholarCross Ref

55. Lei Yang, Diego Nehab, Pedro V. Sander, Pitchaya Sitthi-amorn, Jason Lawrence, and Hugues Hoppe. 2009. Amortized Supersampling. ACM Transactions on Graphics 28, 5, Article 135 (Dec. 2009), 12 pages.Google ScholarDigital Library

56. Dongqing Zhang, Jiaolong Yang, Dongqiangzi Ye, and Gang Hua. 2018. LQ-Nets: Learned Quantization for Highly Accurate and Compact Deep Neural Networks. In Proc. European Conference on Computer Vision (ECCV). 365–382.Google ScholarCross Ref

57. Michael R. Zhang, James Lucas, Geoffrey Hinton, and Jimmy Ba. 2019. Lookahead Optimizer: k steps forward, 1 step back. arXiv preprint arXiv:1907.08610 (2019).Google Scholar

58. Aojun Zhou, Anbang Yao, Yiwen Guo, Lin Xu, and Yurong Chen. 2017. Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights. In International Conference on Learning Representations (ICLR).Google Scholar

59. Shuchang Zhou, Zekun Ni, Xinyu Zhou, He Wen, Yuxin Wu, and Yuheng Zou. 2016. DoReFa-Net: Training Low Bitwidth Convolutional Neural Networks with Low Bitwidth Gradients. arXiv preprint arXiv:1606.06160 (2016).Google Scholar

60. Chenzhuo Zhu, Song Han, Huizi Mao, and William J. Dally. 2016. Trained Ternary Quantization. arXiv preprint arXiv:1612.01064 (2016).Google Scholar