“A novel framework for inverse procedural texture modeling” by Hu, Dorsey and Rushmeier

Session/Category Title:

- Synthesis in the Arvo

Abstract:

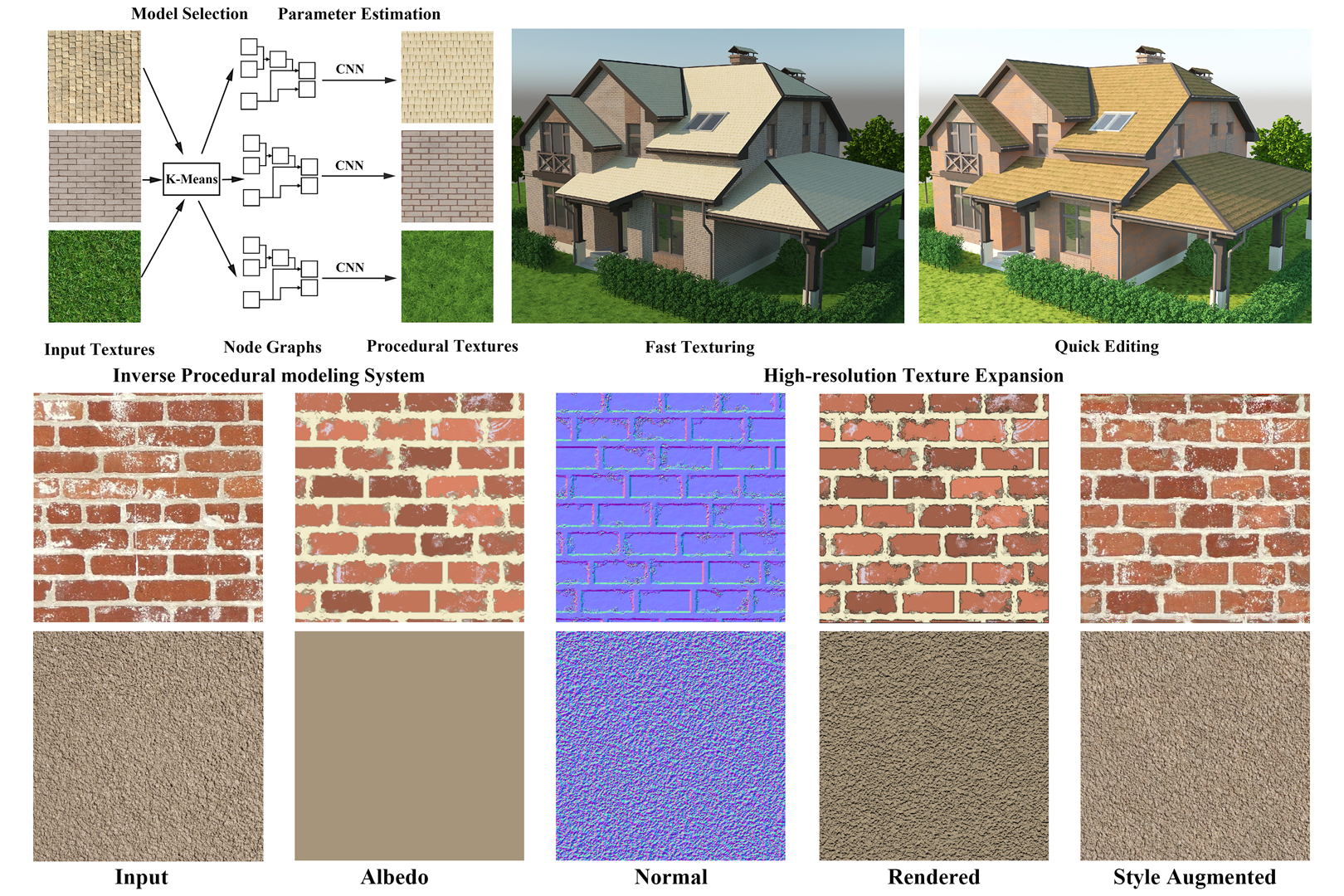

Procedural textures are powerful tools that have been used in graphics for decades. In contrast to exemplar-based texture synthesis techniques, procedural textures provide user control and fast texture generation with low storage cost and unlimited texture resolution. However, creating procedural models for complex textures requires a time-consuming process of selecting a combination of procedures and parameters. We present an example-based framework to automatically select procedural models and estimate their parameters. In our framework, we consider textures categorized by commonly used high-level classes. For each high-level class, we build a data-driven inverse modeling system based on an extensive collection of real-world textures and procedural texture models in the form of node graphs. We use unsupervised learning on the collected real-world images in a texture class to learn sub-classes. We then classify the output of each of the collected procedural models into these sub-classes. For each of the collected models, we train a convolutional neural network (CNN) to learn the parameters that produce a specific output texture. To use our framework, a user provides an exemplar texture image within a high-level class. The system first classifies the texture into a sub-class and selects the procedural models that produce output in that sub-class. The pre-trained CNNs of the selected models are then used to estimate the parameters for the texture example. With the predicted parameters, the system can generate appropriate procedural textures for the user. The user can easily edit the textures by adjusting the node graph parameters. In a final optional step, style transfer augmentation can be applied to the fitted procedural textures to recover details lost in the procedural modeling process.
We demonstrate our framework for four high-level classes and show that our inverse modeling system can produce high-quality procedural textures for both structural and non-structural textures.
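
The inference flow described in the abstract (classify the exemplar into a sub-class, select the procedural models whose output falls in that sub-class, then estimate parameters per model) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `ProceduralModel`, `nearest_sub_class`, and `fit_exemplar` are hypothetical, a nearest-centroid rule stands in for the learned sub-class classifier, and a plain callable stands in for each model's pre-trained CNN.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Set, Tuple

Feature = Sequence[float]  # assumed: a feature vector extracted from the exemplar image


@dataclass
class ProceduralModel:
    """Hypothetical record for one collected node-graph model."""
    name: str
    sub_classes: Set[str]  # sub-classes that this model's outputs were classified into
    estimate_params: Callable[[Feature], Dict[str, float]]  # stand-in for the pre-trained CNN


def nearest_sub_class(feature: Feature, centroids: Dict[str, Feature]) -> str:
    """Assign the exemplar to the sub-class with the closest centroid (an assumed rule)."""
    return min(centroids, key=lambda label: math.dist(feature, centroids[label]))


def fit_exemplar(
    feature: Feature,
    centroids: Dict[str, Feature],
    models: List[ProceduralModel],
) -> Tuple[str, Dict[str, Dict[str, float]]]:
    """Classify the exemplar, select matching models, and estimate parameters for each."""
    sub_class = nearest_sub_class(feature, centroids)
    selected = [m for m in models if sub_class in m.sub_classes]
    return sub_class, {m.name: m.estimate_params(feature) for m in selected}


if __name__ == "__main__":
    # Toy data: two sub-classes and two models with constant "predictions".
    centroids = {"cracked": [0.0, 0.0], "smooth": [1.0, 1.0]}
    models = [
        ProceduralModel("graph_a", {"cracked"}, lambda f: {"scale": 2.0}),
        ProceduralModel("graph_b", {"smooth"}, lambda f: {"scale": 5.0}),
    ]
    print(fit_exemplar([0.9, 0.8], centroids, models))
```

The predicted parameter dictionaries would then be fed back into the corresponding node graphs to generate the textures, which the user can refine by editing those same parameters. The optional style transfer step is not covered by this sketch.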
