“Tangible UI by Object and Material Classification with Radar” by Yeo, Ens and Quigley

Conference:

Experience Type(s):

Title:

- Tangible UI by Object and Material Classification with Radar

Organizer(s)/Presenter(s):

Description:

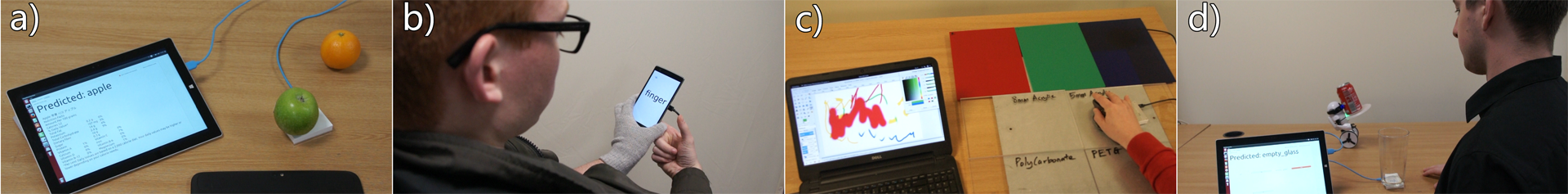

Radar signals penetrate proximate objects, where their energy is scattered, absorbed and reflected; indeed, ground-penetrating and aerial radar systems are well established. We describe a highly accurate system that combines a monostatic radar (Google Soli) with supervised machine learning to support UIs based on object and material classification. Building on RadarCat techniques, we explore the development of tangible user interfaces that require neither modification of the objects nor complex infrastructure. This affords new forms of interaction with digital devices and proximate objects.
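To make the idea of a classification-driven tangible UI concrete, the following is a minimal sketch only, not the system described above. It assumes each radar reading has already been reduced to a small numeric feature vector, replaces real Soli data with synthetic vectors, and uses a simple nearest-centroid classifier as a stand-in for whichever supervised learner the authors use. The names `PROTOTYPES`, `ACTIONS`, `synthetic_reading` and `on_object_placed`, as well as the materials and UI actions, are hypothetical.

```python
import math
import random


def train_centroids(samples):
    """Supervised training step: average the feature vectors of each label.

    samples is a list of (feature_vector, label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean) to features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# ASSUMPTION: synthetic stand-ins for per-material radar signatures.
# Real readings would come from the radar sensor, not from this table.
PROTOTYPES = {
    "glass": [0.9, 0.2, 0.1, 0.4],
    "wood": [0.3, 0.7, 0.5, 0.1],
    "steel": [0.1, 0.1, 0.9, 0.8],
}


def synthetic_reading(rng, label, noise=0.05):
    """Simulate one noisy reading for a material (hypothetical helper)."""
    return [value + rng.gauss(0.0, noise) for value in PROTOTYPES[label]]


# Build a labelled training set and fit the classifier.
rng = random.Random(0)
training = [
    (synthetic_reading(rng, label), label)
    for label in PROTOTYPES
    for _ in range(20)
]
centroids = train_centroids(training)

# ASSUMPTION: an illustrative mapping from recognised material to a UI action.
ACTIONS = {
    "glass": "show drink menu",
    "wood": "open notes",
    "steel": "start timer",
}


def on_object_placed(features):
    """Classify a reading and look up the UI action bound to that material."""
    label = classify(centroids, features)
    return label, ACTIONS[label]


if __name__ == "__main__":
    print(on_object_placed(synthetic_reading(rng, "wood")))
```

The point of the sketch is the division of labour: a training step learns one signature per object or material from labelled readings, and at run time each new reading is classified and mapped to an interface action, so the object itself needs no tags or modification.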

References:

[1] Jaime Lien, Nicholas Gillian, M. Emre Karagozler, Patrick Amihood, Carsten Schwesig, Erik Olson, Hakim Raja, and Ivan Poupyrev. 2016. Soli: Ubiquitous Gesture Sensing with Millimeter Wave Radar. ACM Trans. Graph. 35, 4, Article 142 (July 2016), 19 pages.

[2] Hui-Shyong Yeo, Gergely Flamich, Patrick Schrempf, David Harris-Birtill, and Aaron Quigley. 2016. RadarCat: Radar Categorization for Input & Interaction. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST ’16). ACM, New York, NY, USA, 833–841.

[3] Hui-Shyong Yeo, Juyoung Lee, Andrea Bianchi, David Harris-Birtill, and Aaron Quigley. 2017. SpeCam: Sensing Surface Color and Material with the Front-facing Camera of a Mobile Device. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’17). ACM, New York, NY, USA, Article 25, 9 pages.