“Metappearance: Meta-Learning for Visual Appearance Reproduction” by Fischer and Ritschel

Session/Category Title: Appearance Modeling and Capture

Abstract:

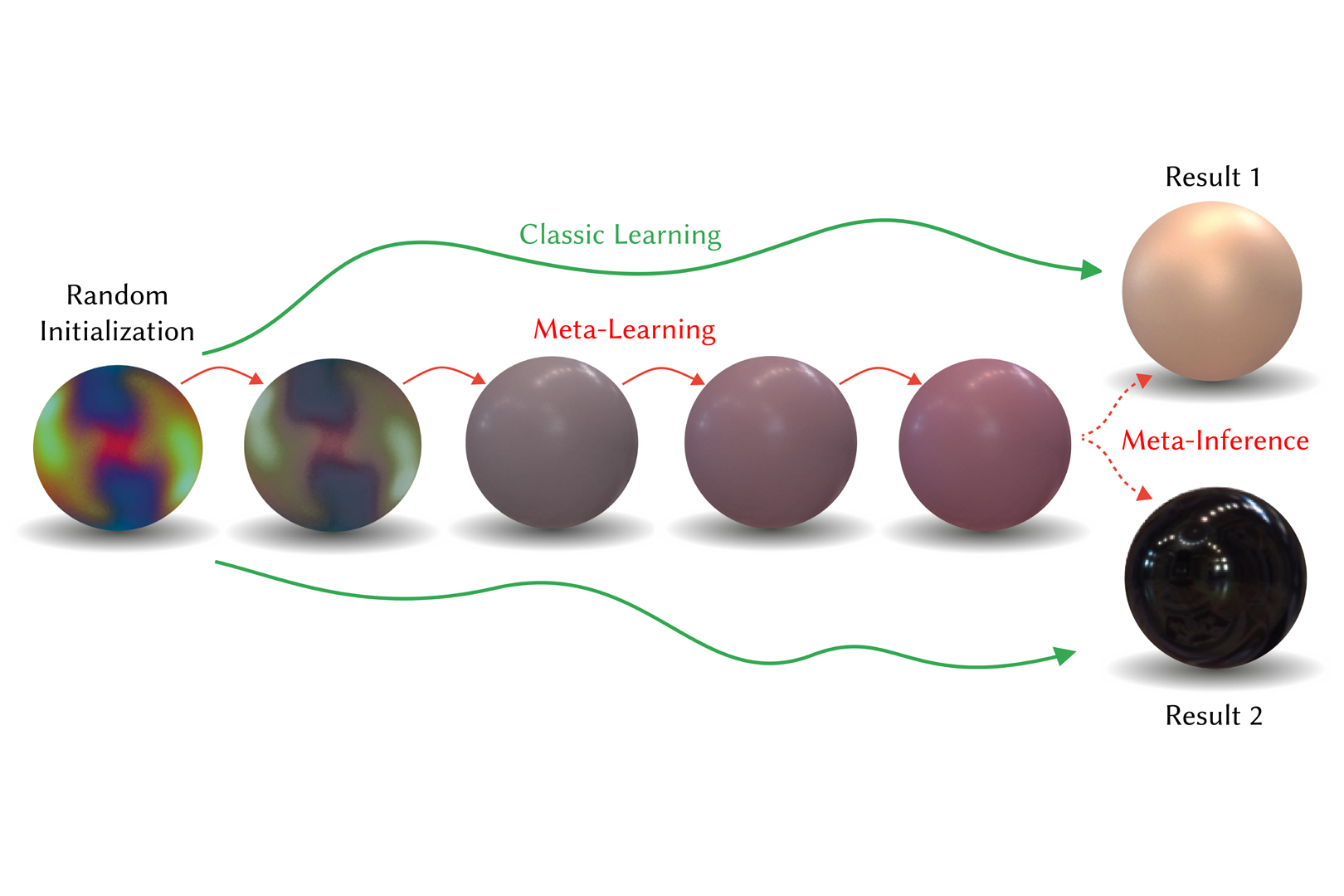

There currently exist two main approaches to reproducing visual appearance using Machine Learning (ML). The first is training models that generalize over different instances of a problem, e.g., different images of a dataset. As one-shot approaches, these offer fast inference but often fall short in quality. The second approach does not train models that generalize across tasks, but rather over-fits a single instance of a problem, e.g., a flash image of a material. These methods offer high quality but take a long time to train. We propose combining both techniques end-to-end using meta-learning: we over-fit onto a single problem instance in an inner loop, while also learning how to do so efficiently in an outer loop across many exemplars. To this end, we derive the formalism required to apply meta-learning to a wide range of visual appearance reproduction problems: textures, Bidirectional Reflectance Distribution Functions (BRDFs), spatially-varying BRDFs (svBRDFs), illumination, and the entire light transport of a scene. We analyze the effects of meta-learning parameters on several aspects of visual appearance within our framework and provide specific guidance for different tasks. Metappearance achieves visual quality similar to that of over-fit approaches in only a fraction of their runtime, while keeping the adaptivity of general models.
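The inner/outer loop idea described in the abstract can be illustrated with a minimal sketch. It follows generic MAML (Finn et al., 2017) on a hypothetical toy problem in which each "problem instance" is a noisy line y = a·x + b rather than a texture or BRDF. The task distribution, step sizes, and all function names (`sample_task`, `inner_loop`, `meta_train`, `post_adaptation_loss`) are illustrative assumptions, not the authors' implementation. Because the toy loss is quadratic, the inner loop is linear in the initialization, so the exact MAML meta-gradient has a closed form and plain NumPy suffices.

```python
import numpy as np


def sample_task(rng, n=20):
    """Hypothetical toy task: noisy samples of a line y = a*x + b.
    Stands in for one problem instance (e.g. one texture or BRDF)."""
    a = rng.normal(3.0, 0.5)
    b = rng.normal(-2.0, 0.5)
    x = rng.uniform(-1.0, 1.0, size=2 * n)
    X = np.stack([x, np.ones_like(x)], axis=1)  # features [x, 1]
    y = a * x + b + rng.normal(0.0, 0.05, size=2 * n)
    # Split into a support set (used by the inner loop) and a query set
    # (used to score the adapted parameters in the outer loop).
    return (X[:n], y[:n]), (X[n:], y[n:])


def loss_and_grad(theta, X, y):
    """Mean squared error (halved) and its gradient w.r.t. theta."""
    r = X @ theta - y
    return 0.5 * np.mean(r ** 2), X.T @ r / len(y)


def inner_loop(theta, X, y, alpha, steps):
    """Over-fit one instance with a few plain gradient-descent steps."""
    for _ in range(steps):
        _, g = loss_and_grad(theta, X, y)
        theta = theta - alpha * g
    return theta


def meta_train(rng, alpha=0.3, steps=3, beta=0.1, iters=1000, batch=8):
    """Outer loop: learn an initialization theta0 that adapts well in `steps`."""
    theta0 = np.zeros(2)
    for _ in range(iters):
        meta_grad = np.zeros(2)
        for _ in range(batch):
            (Xs, ys), (Xq, yq) = sample_task(rng)
            theta_k = inner_loop(theta0, Xs, ys, alpha, steps)
            _, gq = loss_and_grad(theta_k, Xq, yq)
            # For this quadratic loss each inner step is affine in theta, so
            # the exact Jacobian d theta_k / d theta0 is (I - alpha*H)^steps,
            # with H the (constant) Hessian of the support loss.
            H = Xs.T @ Xs / len(ys)
            J = np.linalg.matrix_power(np.eye(2) - alpha * H, steps)
            meta_grad += J.T @ gq  # chain rule through the inner loop
        theta0 = theta0 - beta * meta_grad / batch
    return theta0


def post_adaptation_loss(theta0, rng, alpha=0.3, steps=3, tasks=200):
    """Average query loss on unseen tasks after adapting from theta0."""
    total = 0.0
    for _ in range(tasks):
        (Xs, ys), (Xq, yq) = sample_task(rng)
        theta_k = inner_loop(theta0, Xs, ys, alpha, steps)
        total += loss_and_grad(theta_k, Xq, yq)[0]
    return total / tasks


theta_meta = meta_train(np.random.default_rng(0))
# Evaluate both initializations on the same unseen tasks (same seed).
meta_loss = post_adaptation_loss(theta_meta, np.random.default_rng(1))
zero_loss = post_adaptation_loss(np.zeros(2), np.random.default_rng(1))
print("meta-learned init:", theta_meta)
print("loss after 3 steps, meta init:", meta_loss, "zero init:", zero_loss)
```

In this toy setting, `theta_meta` should end up near the mean task parameters (3, -2), and three inner steps starting from it fit unseen tasks far better than three steps from a zero initialization. This mirrors, in miniature, the idea that a learned initialization lets a short over-fitting run reach good quality. Real appearance models would replace the closed-form Jacobian with automatic differentiation through the inner loop.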
