“EyeHacker: Gaze-Based Automatic Reality Manipulation” by Ito, Wakisaka, Izumihara, Yamaguchi, Hiyama, et al. …

Title:

- EyeHacker: Gaze-Based Automatic Reality Manipulation

Program Title:

- New Technologies Research & Education

Description:

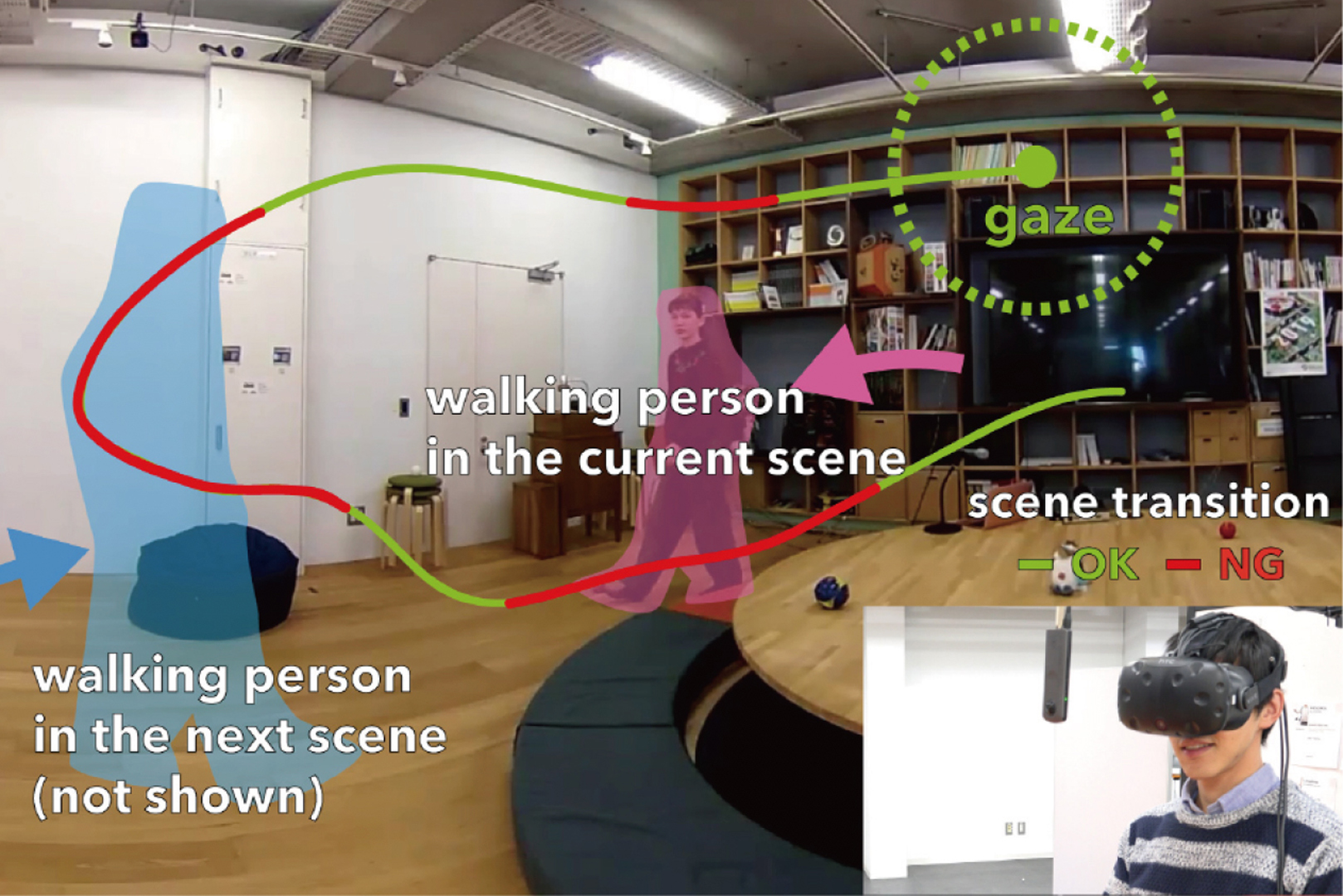

EyeHacker is a VR system that spatiotemporally mixes the live scene with recorded/edited scenes based on measurements of the user's gaze (the locus of attention). The system can manipulate visual reality without users noticing (i.e., eye hacking) and can trigger various kinds of reality confusion.

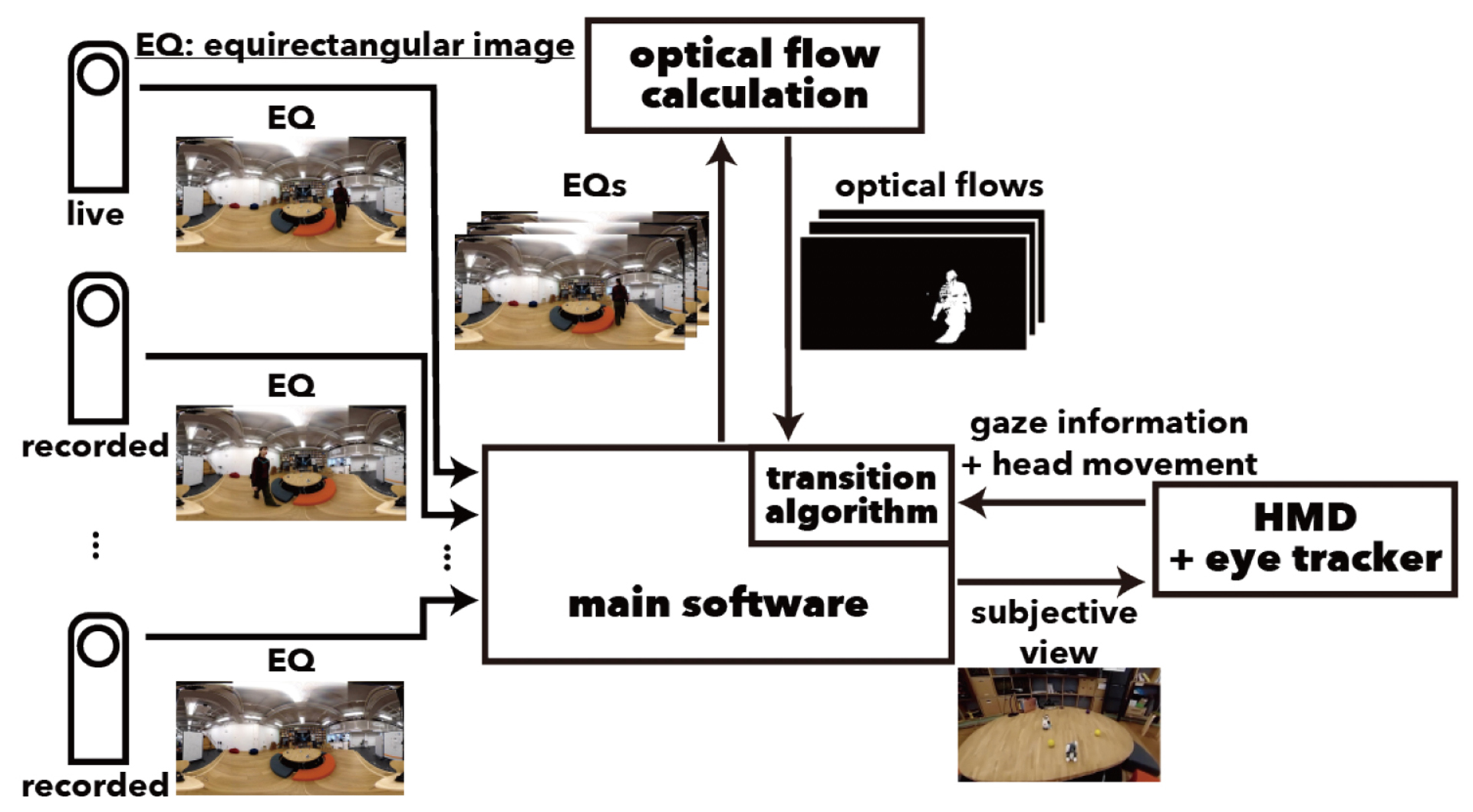

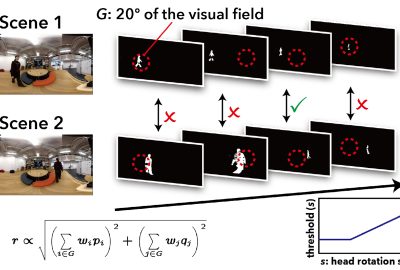

In this study, we introduce EyeHacker, an immersive virtual reality (VR) system that spatiotemporally mixes live and recorded/edited scenes based on measurements of the users' gaze. The system updates a transition risk in real time using the users' gaze information (i.e., the locus of attention) and the optical flow of the scenes. A scene transition is allowed only when the risk is below a threshold, and that threshold is modulated by the users' head movement: the faster the head moves, the higher the threshold. Using this algorithm together with an experience scenario prepared in advance, the system can manipulate visual reality without users noticing (i.e., eye hacking). Consider, for example, a situation in which the objects around the users continually disappear and reappear. Users often have a strange feeling that something is wrong, and sometimes they even work out what happened, but only afterward; they cannot visually perceive the changes as they occur. Furthermore, with other variants of the risk algorithm, the system can implement a variety of experience scenarios that induce reality confusion.
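The gating rule described above (risk from gaze and optical flow, compared against a threshold that rises with head speed) can be sketched roughly as follows. The description does not give the actual risk formula or threshold function, so everything here is an assumption for illustration: the Gaussian gaze-proximity weight, the linear scaling by optical-flow magnitude, the linear head-speed threshold, and all names and constants (`FrameInput`, `transition_risk`, `risk_threshold`, `may_transition`, `gaze_sigma`, `flow_gain`, `base`, `gain`) are hypothetical and are not EyeHacker's implementation.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class FrameInput:
    """Per-frame measurements. Hypothetical structure, not taken from EyeHacker."""
    gaze_xy: Tuple[float, float]    # gaze point in normalized screen coords [0, 1]
    region_xy: Tuple[float, float]  # centre of the region to be swapped, same coords
    flow_magnitude: float           # mean optical-flow magnitude in that region (px/frame)
    head_speed_deg_s: float         # head angular speed in degrees per second


def transition_risk(f: FrameInput, gaze_sigma: float = 0.1, flow_gain: float = 0.5) -> float:
    """Assumed risk model: attention falls off as a Gaussian of the gaze-to-region
    distance, and motion in the region (optical flow) scales the risk up."""
    dx = f.gaze_xy[0] - f.region_xy[0]
    dy = f.gaze_xy[1] - f.region_xy[1]
    attention = math.exp(-(dx * dx + dy * dy) / (2.0 * gaze_sigma ** 2))
    return attention * (1.0 + flow_gain * f.flow_magnitude)


def risk_threshold(head_speed_deg_s: float, base: float = 0.05, gain: float = 0.002) -> float:
    """Assumed threshold: grows linearly with head speed, so faster head
    movement permits riskier transitions. Negative speeds are clamped to 0."""
    return base + gain * max(0.0, head_speed_deg_s)


def may_transition(f: FrameInput) -> bool:
    """Allow a live/recorded scene switch only when risk is below the threshold."""
    return transition_risk(f) < risk_threshold(f.head_speed_deg_s)


# Same gaze and region; only head speed differs.
still = FrameInput((0.5, 0.5), (0.7, 0.5), flow_magnitude=0.0, head_speed_deg_s=0.0)
turning = FrameInput((0.5, 0.5), (0.7, 0.5), flow_magnitude=0.0, head_speed_deg_s=60.0)
print(may_transition(still))    # False: risk ~0.135 exceeds threshold 0.05
print(may_transition(turning))  # True: threshold rises to 0.17
```

In a running system this check would be evaluated every frame, with the flow magnitude supplied by some dense optical-flow estimator; the example only illustrates how a head-speed-dependent threshold can open a transition window that stays closed while the head is still.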

Additional Images:

- 2019 ETech Ito: EyeHacker: Gaze-Based Automatic Reality Manipulation