“MOCHA: Real-Time Motion Characterization via Context Matching” by Jang, Ye, Won and Lee

Title:

- MOCHA: Real-Time Motion Characterization via Context Matching

Session/Category Title: Robots & Characters

Abstract:

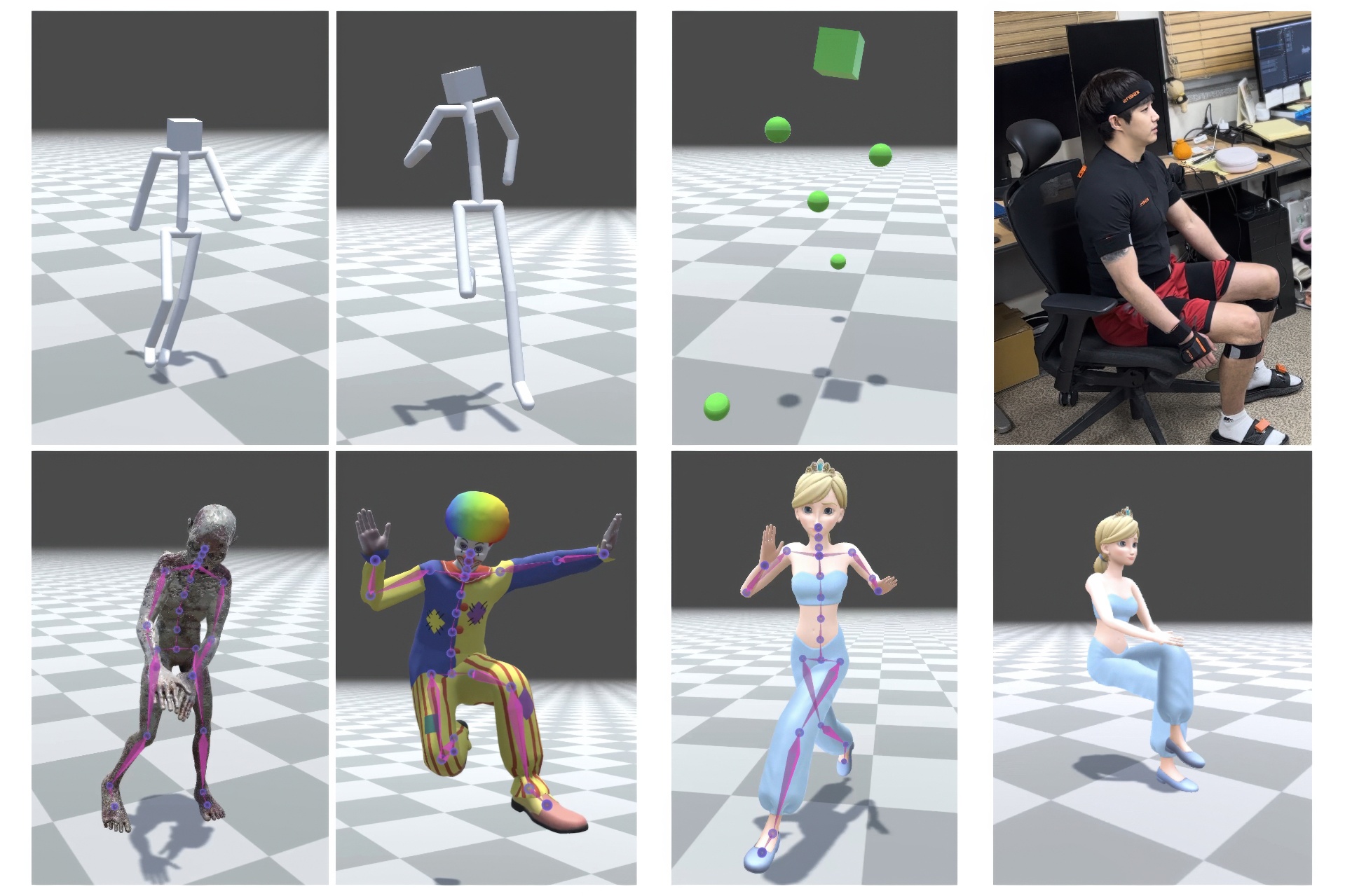

Transforming neutral, characterless input motions to embody the distinct style of a notable character in real time is highly compelling for character animation. This paper introduces MOCHA, a novel online motion characterization framework that transfers both motion styles and body proportions from a target character to an input source motion. MOCHA begins by encoding the input motion into a motion feature that structures the body part topology and captures motion dependencies for effective characterization. Central to our framework is the Neural Context Matcher, which generates a motion feature for the target character with the most similar context to the input motion feature. The conditioned autoregressive model of the Neural Context Matcher produces temporally coherent character features at each time frame. To generate the final characterized pose, our Characterizer network incorporates the characteristic aspects of the target motion feature into the input motion feature while preserving its context. This is achieved through a transformer model that combines adaptive instance normalization with context-mapping-based cross-attention, effectively injecting the character feature into the source feature. We validate the performance of our framework through comparisons with prior work and an ablation study. Our framework readily accommodates various applications, including characterization with only sparse input and real-time characterization. Additionally, we contribute a high-quality motion dataset comprising six different characters performing a range of motions, which can serve as a valuable resource for future research.
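The abstract names adaptive instance normalization (AdaIN) as one ingredient of the Characterizer. As a rough illustration of that operation only, the sketch below re-scales each channel of a source feature so that its mean and standard deviation match those of a target feature. It is not the authors' implementation: the function names `channel_stats` and `adain`, the list-of-channels layout, and the toy numbers are all assumptions made for this example, and the transformer and cross-attention parts of the framework are not shown.

```python
import math


def channel_stats(values, eps=1e-5):
    """Return the mean and standard deviation of one feature channel.

    eps is added to the variance for numerical stability.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var + eps)


def adain(source, target, eps=1e-5):
    """Adaptive instance normalization on per-channel statistics.

    source, target: lists of channels, each channel a list of floats
    (hypothetical layout: one feature dimension sampled over time).
    The two inputs may have different numbers of frames.
    """
    if len(source) != len(target):
        raise ValueError("source and target need the same number of channels")
    out = []
    for src_ch, tgt_ch in zip(source, target):
        src_mean, src_std = channel_stats(src_ch, eps)
        tgt_mean, tgt_std = channel_stats(tgt_ch, eps)
        # Normalize the source channel, then apply the target statistics.
        out.append([tgt_std * (v - src_mean) / src_std + tgt_mean for v in src_ch])
    return out


# Toy features (made-up values): 2 channels, 4 source frames, 3 target frames.
source_feat = [[0.0, 1.0, 2.0, 3.0], [5.0, 5.5, 6.0, 6.5]]
target_feat = [[10.0, 14.0, 18.0], [-1.0, 0.0, 1.0]]
stylized = adain(source_feat, target_feat)
```

In this sketch the temporal ordering of the source values is kept while their per-channel statistics move to those of the target, which mirrors the abstract's idea of injecting character features while preserving the context of the input.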
